Cannot save a TensorFlow model when it contains a BatchNormalization layer

Original title: Can not save Tensorflow model when it contains batchnormalization layer

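I am trying to save a custom TensorFlow model after training it for 1 epoch. When the model contains a BatchNormalization layer, it cannot be saved. I can see that "fused_batch_norm" cannot be serialized. How can I use a different BatchNormalization layer that can be serialized, and save the model in ".h5" and ".pb" format? I am using TensorFlow 2.8 with tensorflow-metal on macOS.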

    import tensorflow as tf

    def conv_batchnorm_relu(x, filters, kernel_size, strides=1):
        # Conv2D -> BatchNormalization -> ReLU block (Keras functional API)
        x = tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides, padding='same')(x)
        x = tf.keras.layers.BatchNormalization()(x)
        x = tf.keras.layers.ReLU()(x)
        return x
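A minimal sketch of using this block in a complete functional model and writing it out in both formats the question asks about, assuming a standard TF 2.x `tf.keras` install (not checked against tensorflow-metal). The input shape, the pooling/Dense head and the temporary paths (`h5_path`, `pb_dir`) are illustrative assumptions. `model.save()` with an `.h5` path writes a single HDF5 file (requires h5py), and `tf.saved_model.save()` writes a SavedModel directory containing `saved_model.pb`.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def conv_batchnorm_relu(x, filters, kernel_size, strides=1):
    # Same Conv2D -> BatchNormalization -> ReLU block as in the question
    x = tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size,
                               strides=strides, padding="same")(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    return x


# Illustrative functional model: input shape and classifier head are assumptions
inputs = tf.keras.Input(shape=(32, 32, 3))
x = conv_batchnorm_relu(inputs, filters=32, kernel_size=3)
x = tf.keras.layers.GlobalAveragePooling2D()(x)
outputs = tf.keras.layers.Dense(10)(x)
model = tf.keras.Model(inputs, outputs)

out_dir = tempfile.mkdtemp()
h5_path = os.path.join(out_dir, "model.h5")
pb_dir = os.path.join(out_dir, "saved_model")

model.save(h5_path)                 # single HDF5 file (needs h5py)
tf.saved_model.save(model, pb_dir)  # SavedModel directory with saved_model.pb

# Reload the HDF5 copy and check that it reproduces the original predictions
sample = np.random.rand(4, 32, 32, 3).astype("float32")
reloaded = tf.keras.models.load_model(h5_path)
same = np.allclose(model.predict(sample, verbose=0),
                   reloaded.predict(sample, verbose=0), atol=1e-5)
```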

    TypeError: Layer tf.compat.v1.nn.fused_batch_norm was passed non-JSON-serializable arguments. Arguments had types: {'scale': , 'offset': <class 'tensorflow.python.ops.resource_variable_ops.ResourceVariable'>, 'mean': <class 'tensorflow.python.ops.resource_variable_ops.ResourceVariable'>, 'variance': <class 'tensorflow.python.ops.resource_variable_ops.ResourceVariable'>, 'epsilon': , 'is_training': , 'data_format': }. They cannot be serialized out when saving the model.
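The message comes from Keras serializing the model's config, which saving does as one of its steps, and the offending entry is named after the raw op `tf.compat.v1.nn.fused_batch_norm` rather than a `BatchNormalization` layer. A hedged sketch of a pre-flight check: call `model.to_json()` before training, which should raise a TypeError like the one above if any recorded layer call has non-JSON-serializable arguments, and list the layer class names it records. The helper `layer_class_names` is hypothetical, and the tiny model is only an example.

```python
import json

import tensorflow as tf


def layer_class_names(model):
    """Hypothetical helper: serialize the model config (as saving does).

    model.to_json() is expected to raise a TypeError if a layer call was
    recorded with arguments that cannot be written out as JSON.
    """
    config = json.loads(model.to_json())
    return [layer["class_name"] for layer in config["config"]["layers"]]


# Example model built from ordinary Keras layers
inputs = tf.keras.Input(shape=(32, 32, 3))
x = tf.keras.layers.Conv2D(16, 3, padding="same")(inputs)
x = tf.keras.layers.BatchNormalization()(x)
x = tf.keras.layers.ReLU()(x)
model = tf.keras.Model(inputs, x)

names = layer_class_names(model)
print(names)
```

A proper `tf.keras.layers.BatchNormalization` layer should appear under its own class name in this list.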

Answers


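Jirayu Kaewprateep commented:

You can apply the matrix properties, or you can build the whole model inside a helper function, as in the sample below, where conv_batchnorm_relu() returns a complete Sequential model.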

Sample:

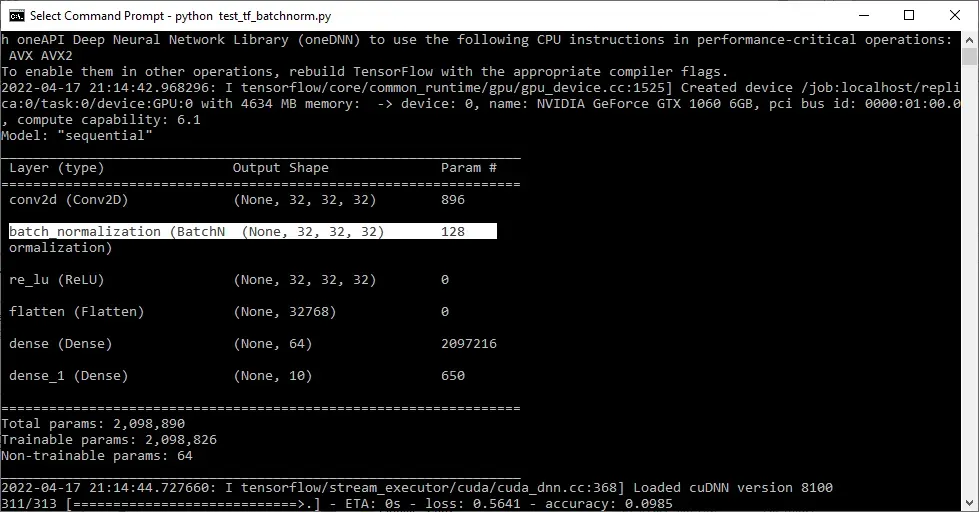

    import os
    from os.path import exists

    import h5py
    import tensorflow as tf

    # ---- Variables ----
    filters = 32
    kernel_size = (3, 3)
    strides = 1

    script_name = os.path.basename(__file__).split('.')[0]
    database_buffer = "F:\\models\\buffer\\" + script_name + "\\TF_DataSets_01.h5"
    database_buffer_dir = os.path.dirname(database_buffer)
    checkpoint_path = "F:\\models\\checkpoint\\" + script_name + "\\TF_DataSets_01.h5"
    checkpoint_dir = os.path.dirname(checkpoint_path)
    loggings = "F:\\models\\checkpoint\\" + script_name + "\\loggings.log"

    if not exists(checkpoint_dir):
        os.makedirs(checkpoint_dir)  # also creates missing parent directories
        print("Create directory: " + checkpoint_dir)

    if not exists(database_buffer_dir):
        os.makedirs(database_buffer_dir)
        print("Create directory: " + database_buffer_dir)

    # ---- Functions ----
    def conv_batchnorm_relu(filters, kernel_size, strides=1):
        # Conv2D -> BatchNormalization -> ReLU, followed by a small dense head
        model = tf.keras.models.Sequential([
            tf.keras.layers.InputLayer(input_shape=(32, 32, 3)),
            tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides, padding='same'),
            tf.keras.layers.BatchNormalization(),
            tf.keras.layers.ReLU(),
        ])
        model.add(tf.keras.layers.Flatten())
        model.add(tf.keras.layers.Dense(64))
        model.add(tf.keras.layers.Dense(10))
        model.summary()
        return model

    # ---- DataSet ----
    (train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.cifar10.load_data()

    # Create hdf5 file
    hdf5_file = h5py.File(database_buffer, mode='w')
    # Train images
    hdf5_file['x_train'] = train_images
    hdf5_file['y_train'] = train_labels
    # Test images
    hdf5_file['x_test'] = test_images
    hdf5_file['y_test'] = test_labels
    hdf5_file.close()

    # Read a subset of the dataset back from the hdf5 file
    hdf5_file = h5py.File(database_buffer, mode='r')
    x_train = hdf5_file['x_train'][0:10000]
    x_test = hdf5_file['x_test'][0:100]
    y_train = hdf5_file['y_train'][0:10000]
    y_test = hdf5_file['y_test'][0:100]

    # ---- Optimizer ----
    optimizer = tf.keras.optimizers.Nadam(learning_rate=0.0001, beta_1=0.9, beta_2=0.999, epsilon=1e-07, name='Nadam')

    # ---- Loss Fn ----
    lossfn = tf.keras.losses.MeanSquaredLogarithmicError(reduction=tf.keras.losses.Reduction.AUTO, name='mean_squared_logarithmic_error')

    # ---- Model Summary ----
    model = conv_batchnorm_relu(filters, kernel_size, strides=1)
    model.compile(optimizer=optimizer, loss=lossfn, metrics=['accuracy'])

    # ---- FileWriter: resume from saved weights if present ----
    if exists(checkpoint_path):
        model.load_weights(checkpoint_path)
        print("model load: " + checkpoint_path)
        input("Press Any Key!")

    # ---- Training ----
    history = model.fit(x_train, y_train, epochs=1, validation_data=(x_train, y_train))
    model.save_weights(checkpoint_path)  # saves weights only, not the architecture
    input('...')
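The sample above stores only weights (`save_weights`/`load_weights`), so restoring requires rebuilding the identical architecture first. A reduced, self-contained sketch of that round trip, assuming random data, a smaller layer stack (the `build_model` helper is hypothetical) and a sparse cross-entropy loss in place of the sample's CIFAR-10 pipeline and MSLE loss. The `.weights.h5` suffix is chosen because it selects HDF5 in TF 2.8 and is also the suffix newer Keras versions require for weight files.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_model():
    # Hypothetical, reduced version of the sample's conv_batchnorm_relu()
    return tf.keras.Sequential([
        tf.keras.Input(shape=(32, 32, 3)),
        tf.keras.layers.Conv2D(8, (3, 3), padding="same"),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.ReLU(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(10),
    ])


x = np.random.rand(16, 32, 32, 3).astype("float32")
y = np.random.randint(0, 10, size=(16, 1))
ckpt_path = os.path.join(tempfile.mkdtemp(), "ckpt.weights.h5")

model = build_model()
model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True))
model.fit(x, y, epochs=1, verbose=0)  # also updates the BatchNorm moving statistics
model.save_weights(ckpt_path)         # weights only: the architecture is not stored

restored = build_model()              # rebuild the identical architecture first
restored.load_weights(ckpt_path)
same = np.allclose(model.predict(x, verbose=0),
                   restored.predict(x, verbose=0), atol=1e-5)
```

The BatchNormalization moving mean and variance are stored as (non-trainable) weights, so the restored model reproduces the inference-mode outputs.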


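Sifat Amin commented:

From the template you gave, I understand that you cannot add custom layers to the model this way, through helper functions. Typically, you inherit from keras.Model when you need the model methods: Model.fit, Model.evaluate and Model.save.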

Here is some example code for better understanding:

    import tensorflow as tf

    class ExampleBlock(tf.keras.Model):
        def __init__(self, kernel_size, filters1, filters2):
            super(ExampleBlock, self).__init__(name='')
            self.conv2a = tf.keras.layers.Conv2D(filters1, (1, 1))
            self.bn2a = tf.keras.layers.BatchNormalization()
            self.conv2b = tf.keras.layers.Conv2D(filters2, kernel_size, padding='same')
            self.bn2b = tf.keras.layers.BatchNormalization()
            # You can add as many layers as you like

        def call(self, input_tensor, training=False):
            x = self.conv2a(input_tensor)
            x = self.bn2a(x, training=training)
            x = tf.nn.relu(x)
            x = self.conv2b(x)
            x = self.bn2b(x, training=training)
            return tf.nn.relu(x)

After defining the model, you can create a checkpoint after every epoch by defining a callback:

    import keras

    model_save_path = "/content/drive/MyDrive/Project/"  # path for saving checkpoints
    checkpoint = keras.callbacks.ModelCheckpoint(model_save_path + 'checkpoint_{epoch:02d}', save_freq='epoch')

You can then pass this checkpoint to fit() through the callbacks argument:

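    # aug, trainX, trainY, validX, validY, BS, EPOCHS and classWeight are defined elsewhere (not shown in the answer)
    H = model.fit(
        aug.flow(trainX, trainY, batch_size=BS),
        validation_data=(validX, validY),
        steps_per_epoch=len(trainX) // BS,
        epochs=EPOCHS,
        class_weight=classWeight,
        verbose=1,
        callbacks=[checkpoint])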
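A self-contained sketch of the same per-epoch checkpointing applied to a subclassed block like the one above, on synthetic data. The random inputs and targets, the MSE loss, the layer sizes and the temporary directory are assumptions standing in for the answer's `aug.flow(...)` pipeline. It uses `save_weights_only=True` with a `.weights.h5` filename, which TF 2.8 writes as HDF5 and newer Keras versions also accept; full-model HDF5 saving is not supported for subclassed models in TF 2.x `tf.keras`.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class ExampleBlock(tf.keras.Model):
    # Same structure as the answer's block, with filters1/filters2 used consistently
    def __init__(self, kernel_size, filters1, filters2):
        super().__init__(name="example_block")
        self.conv2a = tf.keras.layers.Conv2D(filters1, (1, 1))
        self.bn2a = tf.keras.layers.BatchNormalization()
        self.conv2b = tf.keras.layers.Conv2D(filters2, kernel_size, padding="same")
        self.bn2b = tf.keras.layers.BatchNormalization()

    def call(self, input_tensor, training=False):
        x = tf.nn.relu(self.bn2a(self.conv2a(input_tensor), training=training))
        return tf.nn.relu(self.bn2b(self.conv2b(x), training=training))


model = ExampleBlock(kernel_size=3, filters1=8, filters2=16)
model.compile(optimizer="adam", loss="mse")

ckpt_dir = tempfile.mkdtemp()
checkpoint = tf.keras.callbacks.ModelCheckpoint(
    os.path.join(ckpt_dir, "checkpoint_{epoch:02d}.weights.h5"),
    save_weights_only=True,  # one HDF5 weights file per epoch
    save_freq="epoch",
)

# Synthetic stand-ins for the answer's training data (hypothetical shapes)
x = np.random.rand(16, 32, 32, 3).astype("float32")
y = np.random.rand(16, 32, 32, 16).astype("float32")
model.fit(x, y, batch_size=8, epochs=2, verbose=0, callbacks=[checkpoint])

saved = sorted(os.listdir(ckpt_dir))
print(saved)
```

The `{epoch:02d}` placeholder is filled with the 1-based epoch number, so each epoch gets its own file.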