阅读本文需要的背景知识点:集成学习、拉格朗日乘子法、一点点编程知识

一、简介

前面一节我们学习了随机森林算法(Random Forest Algorithm),讲到了其中一种集成学习的方法——Bagging 算法,这一节我们来学习另一种集成学习的方法——提升算法1(Boosting Algorithm),同时介绍其中比较常见的算法——自适应增强算法2(Adaptive Boosting Algorithm / AdaBoost Algorithm)

二、模型介绍

Boosting 算法

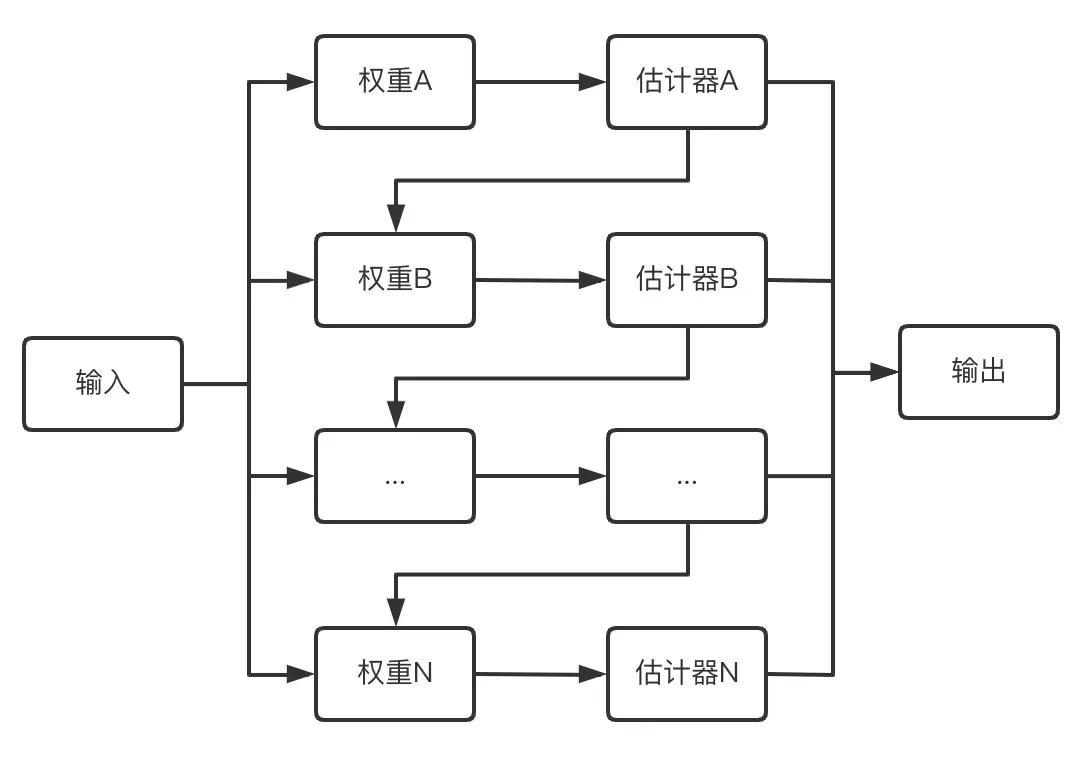

Boosting 算法也是一种集成学习,与 Bagging 算法不同的是,每次训练更关注训练出的估计器中分类错误或者回归误差大的样本,即每次训练都是根据上次训练的结果调整不同的样本权重,直到最后的输出小于预设的阈值。

图 2-1 展示了提示算法的具体流程,其与 Bagging 算法的区别在于:其一,Bagging 算法的每个估计器相对独立且权重都相同,而 Boosting 算法的每个估计器都依赖于上一个估计器同时权重也不同。其二,一般情况下 Bagging 算法可以减小方差、而 Boosting 算法则是减小偏差。

Boosting 算法中比较有代表性的算法就是自适应增强算法(Adaptive Boosting Algorithm / AdaBoost Algorithm)

AdaBoost 算法

AdaBoost 算法是由 Yoav Freund 和 Robert E。Schapire 在 1995 年提出的,同时还提出了 AdaBoost.M1、AdaBoost.M2 算法用于多分类问题,AdaBoost.R 算法用于回归问题。后面陆续又有人提出了上述算法的变体 AdaBoost-SAMME、AdaBoost-SAMME.R、AdaBoost.R2 算法。

AdaBoost 算法的基本步骤与 Boosting 算法一样,是 Boosting 算法的具体实现,其定义了每次循环如何更新样本权重以及最后如何将每个估计器结合起来。

由于笔者能力所限,本文只会介绍基础的 AdaBoost 算法和现在 scikit-learn 中所实现的 AdaBoost-SAMME、AdaBoost-SAMME.R、AdaBoost.R2算法,其他的算法暂无法一一介绍,感兴趣的读者可以参考文末对应算法的论文原文。

3.算法步骤

下面给出各个算法的执行步骤,然后对这些算法步骤中公式的来源进行一一说明。

二级

假设训练集 T = { X_i, y_i },i = 1,…,N,y_i 可取-1,+1,h(x) 为估计器,估计器的数量为 K。

AdaBoost 算法步骤如下:

初始化样本权重向量 ω_1

遍历估计器的数量 K 次:

在样本权重 ω_k 下训练估计器 h(x)

计算第k次的误差率 e_k

如果误差率 e_k 大于 0.5

中断循环

计算第k次的估计器权重 α_k

计算第 k+1 次的权重向量 ω_{k+1}

结束循环

最后的结合策略,采用加权后的结果取 sign 函数,得到最终的强估计器:

多类

假设训练集 T = { X_i, y_i },i = 1,…,N,y 的取值有 M 种可能,h(x) 为估计器,估计器的数量为 K。

AdaBoost-SUMME 算法步骤如下:

初始化样本权重向量 ω_1

遍历估计器的数量 K 次:

在样本权重 ω_k 下训练估计器 h(x)

计算第k次的误差率 e_k

计算第 k 次的估计器权重 α_k

计算第 k+1 次的权重向量 ω_{k+1}

对权重向量 ω_{k+1} 进行归一化

结束循环

在最终的组合策略中,对正确分类的结果进行加权,取最大值的分类得到最终的强估计量:

AdaBoost-SUMME.R 算法步骤如下:

初始化样本权重向量 ω_1

遍历估计器的数量 K 次:

在样本权重 ω_k 下计算加权类概率估计向量 P_k

计算第 k+1 次的权重向量 ω_{k+1}

对权重向量 ω_{k+1} 进行归一化

结束循环

最终的组合策略使用概率估计计算结果中值最大的分类,得到最终的强估计量:

返回

假设训练集 T = { X_i, y_i },i = 1,…,N,h(x) 为估计器,估计器的数量为 K

AdaBoost.R2 算法步骤如下:

初始化样本权重向量 ω_1

遍历估计器的数量 K 次:

在样本权重 ω_k 下训练估计器 h(x)

计算最大误差 E_k

计算第 k 次的误差率 e_k

如果误差率 e_k 大于 0.5

中断循环

计算第 k 次的估计器权重 α_k

计算第 k+1 次的权重向量 ω_{k+1}

对权重向量 ω_{k+1} 进行归一化

结束循环

最终的组合策略使用与估计量权重的中位数对应的估计量的结果来获得最终的强估计量:

4. 原理证明

AdaBoost 算法推导

同算法步骤中的前提条件一样,假设训练集 T = { X_i, y_i },i = 1,…,N,y_i 可取-1,+1,h(x) 为估计器,估计器的数量为 K。

AdaBoost 算法的一种解释是加法模型,通过多个估计器 h(x) 加权以后得到最后的强估计器 H(x),如下所示:

(1)第 k-1 轮的强估计器表达式

(2)第 k 轮的强估计器表达式

(3)第 k 轮的强估计器可以由第 k-1 轮的强估计器和第 k 轮的加权估计器来表示

接下来我们来定义最后强估计器的代价函数,AdaBoost 算法选用的是指数函数,相比于0/1 函数具有更好的数学性质。

(1)指数代价函数

(2)带入式 4-1中的(3)式

(3)我们的目标就是找到最优的估计器权重 α 和估计器 h(x)

(4)定义一个新的变量 ω,包含前一轮的强估计器等与 α 、h(x)无关的值

(5)替换 ω

我们先来看下估计器 h(x),在每次训练估计器后,估计器已经确定下来了,所以我们现在只需要关心每个估计器的权重 α 即可。

(1)找到最优的估计器权重 α 使得代价函数的取值最小

(2)代价函数 Cost(α)

(3)由于标签值可取正负 1,根据预测值与标签值是否相同拆为两项

(4)增加第二、三两项,不影响最后的结果

(5)将(4)式中前两项和后两项分别合并得到

(1)对代价函数求导数并令其为零

(2)定义错误率 e_k 的表达式

(3)将错误率 e_k 带入(2)式

(4)两边同时乘以 exp(α)

(5)移项后整理得

(6)求得最后的估计器权重 α 的表达式

(1)错误率 e_k 的定义

(2)定义 ω_k

(3)得到错误率 e_k 的表达式

接下来是ω的更新方法:

(1) ω_{k+1} 的定义

(2)带入式 4-1中的(3)式

(3)替换为 ω_k

(1)式 4-6中的(3)

(2)两边同时除以归一化参数

(3)分子按照式 4-5中(2)式的定义替换,分母用式 4-7中(1)式替换

(4)分母再按照式 4-5中(2)式的定义替换

(5)由于 ω 的和为一个常数 C

(6)分子分母的常数 C 可以消除,得到 ω 的更新方表达式

综合式 4-1~式 4-7 可以得到 AdaBoost 算法的表达式:

由于文章太长,CSDN无法发布,所以将文章拆成上下两篇发布

本文首发于——AI导图,欢迎关注

文章出处登录后可见!