GMA的架构

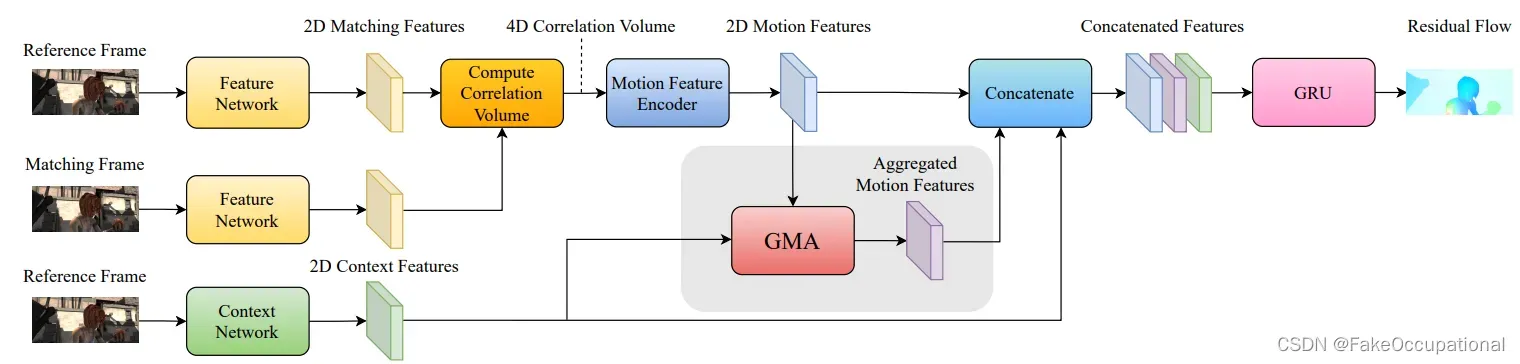

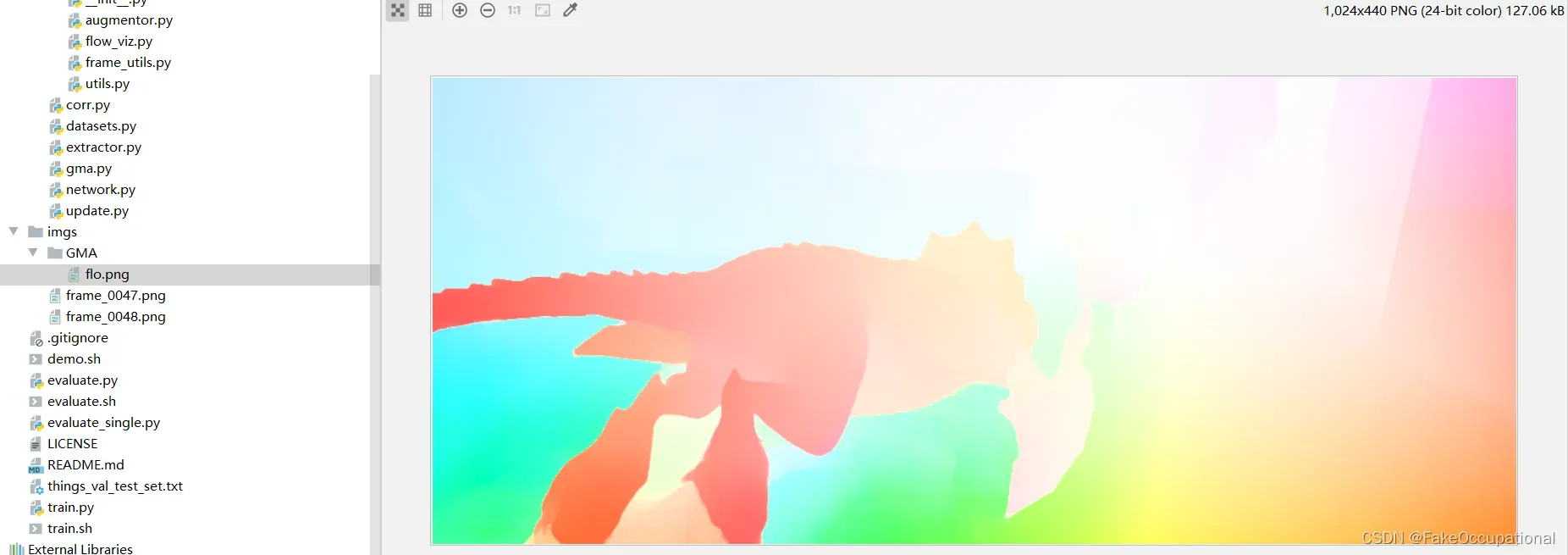

文中的的全局运动聚合(GMA)模块包含在阴影框中,这是RAFT的一个独立附加模块,具有较低的计算开销,可显著提高性能。它将视觉上下文特征和运动特征作为输入,并输出聚合的运动特征,这些特征在整个图像中共享信息。然后将这些聚合的全局运动特征与局部运动特征和视觉上下文特征连接起来,由GRU解码为剩余流。这使网络能够根据特定像素位置的需要,灵活地选择或组合局部和全局运动特征。例如,局部图像证据较差的位置(例如由遮挡引起)可能会优先考虑全局运动特征。GMA的详细示意图如下图所示:

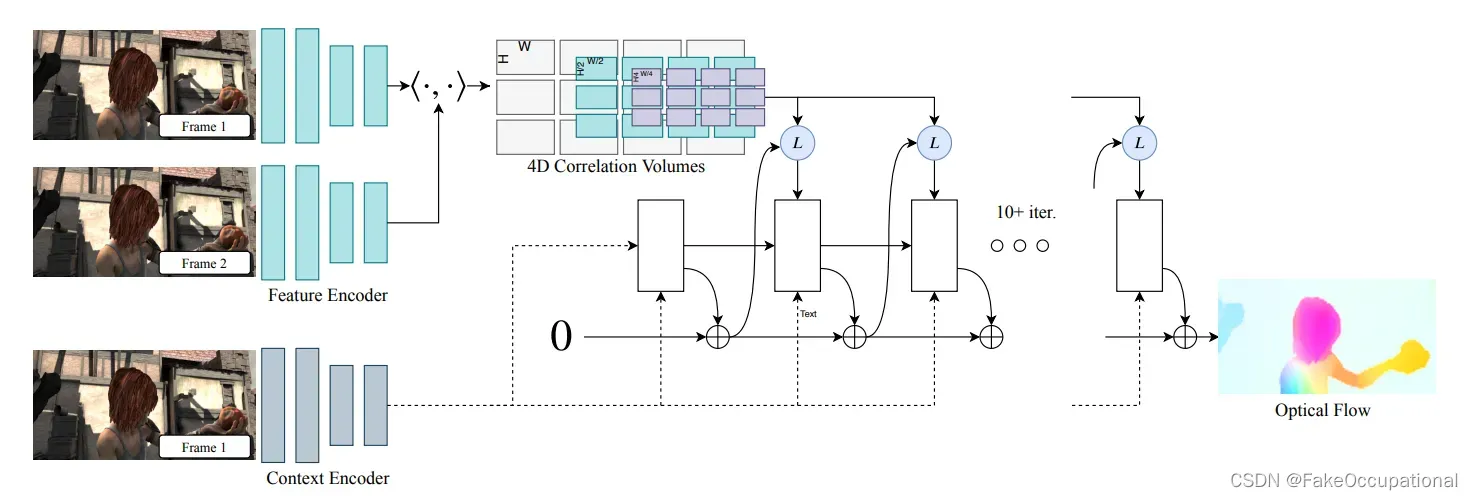

RAFT

GMA的论文观点

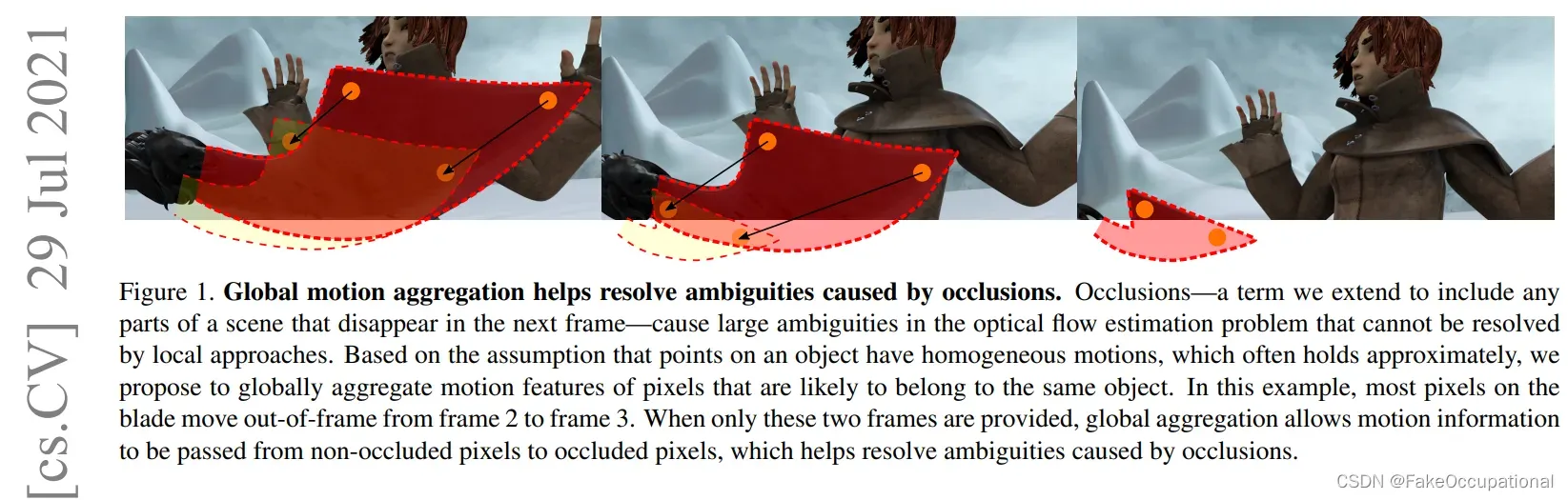

Geoffrey Hinton在1976年的第一篇论文中写道,“必须通过找到最佳的全球解释来解决局部歧义”[13]。这种想法在现代深度学习时代仍然适用。为了解决遮挡造成的歧义,我们的核心思想是允许网络在更高的层次上推理,也就是说,在隐式推理了哪些像素在外观特征空间中是相似的之后,全局聚合相似像素的运动特征。我们假设,通过在参考框架中寻找具有相似外观的点,网络将能够找到具有相似运动的点。这是因为观察到单个物体上的点的运动通常是均匀的。例如,向右奔跑的人的运动向量向右偏移,即使我们看不到该人的大部分由于遮挡而在匹配帧中结束的位置,也会保持这种偏移。我们可以使用这种统计偏差将运动信息从高(隐式)置信度的非遮挡像素传播到低置信度的遮挡像素。这里,置信度可以解释为是否存在明显的匹配,即在正确的位移处存在高相关值。

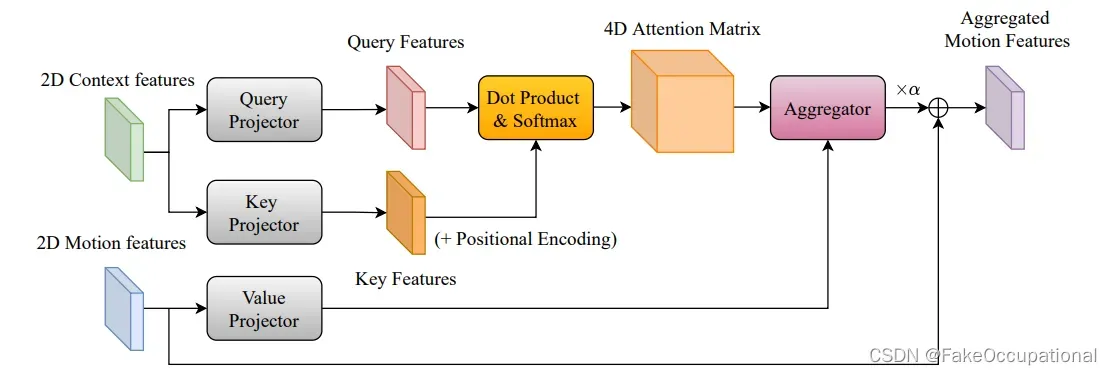

有了这些想法,我们从transformers网络中获得了灵感,transformers网络以其建模长期依赖性的能力而闻名。与transformers中的自我注意机制不同,在transformers中,查询、键和值来自相同的特征向量,我们使用了一种广义的注意变体。我们的查询和关键特征是上下文特征图的投影,用于建模第1帧中的外观自相似性。值特征是运动特征的投影,运动特征本身就是4D相关体积的编码。根据查询和关键特征计算出的注意矩阵用于聚合作为运动隐藏表示的值特征。我们将其命名为全局运动聚合(GMA)模块。聚合后的运动特征与局部运动特征以及背景特征连接起来,由GRU解码。GMA的详细示意图如图4所示。

代码和链接

可以直接运行demo的命令进行官方提供的demo的测试,经过读取数据,调整大小,计算光流:

python evaluate_single.py --model checkpoints/gma-sintel.pth --path imgs

def load_image(imfile): # 'imgs\\frame_0047.png'

img = np.array(Image.open(imfile)).astype(np.uint8) # (436, 1024, 3)

img = torch.from_numpy(img).permute(2, 0, 1).float() # torch.Size([3, 436, 1024])

return img[None].to(DEVICE)# torch.Size([1, 3, 436, 1024])

padder = InputPadder(image1.shape)

image1, image2 = padder.pad(image1, image2)

flow_low, flow_up = model(image1, image2, iters=12, test_mode=True)

# torch.Size([1, 2, 55, 128]) torch.Size([1, 2, 440, 1024])

The overall code framework is adapted from RAFT. We thank the authors for the contribution. We also thank Phil Wang for open-sourcing transformer implementations.

整个代码框架改编自RAFT。我们感谢作者的贡献。我们还感谢Phil Wang开放源代码转换器的实现。

网络结构:

RAFTGMA(

(fnet): BasicEncoder(

(norm1): InstanceNorm2d(64, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))

(relu1): ReLU(inplace=True)

(layer1): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(64, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(64, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

(1): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(64, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(64, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

)

(layer2): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 96, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(conv2): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm3): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(downsample): Sequential(

(0): Conv2d(64, 96, kernel_size=(1, 1), stride=(2, 2))

(1): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

)

(1): ResidualBlock(

(conv1): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(96, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

)

(layer3): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(96, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm3): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(downsample): Sequential(

(0): Conv2d(96, 128, kernel_size=(1, 1), stride=(2, 2))

(1): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

)

(1): ResidualBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

(norm2): InstanceNorm2d(128, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)

)

)

(conv2): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1))

)

(cnet): BasicEncoder(

(norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))

(relu1): ReLU(inplace=True)

(layer1): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): ResidualBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer2): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(64, 96, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(conv2): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm3): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(64, 96, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResidualBlock(

(conv1): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer3): Sequential(

(0): ResidualBlock(

(conv1): Conv2d(96, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(96, 128, kernel_size=(1, 1), stride=(2, 2))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): ResidualBlock(

(conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

(norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(conv2): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1))

)

(update_block): GMAUpdateBlock(

(encoder): BasicMotionEncoder(

(convc1): Conv2d(324, 256, kernel_size=(1, 1), stride=(1, 1))

(convc2): Conv2d(256, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(convf1): Conv2d(2, 128, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(convf2): Conv2d(128, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv): Conv2d(256, 126, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(gru): SepConvGRU(

(convz1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convr1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convq1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convz2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

(convr2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

(convq2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

)

(flow_head): FlowHead(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(256, 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

)

(mask): Sequential(

(0): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(256, 576, kernel_size=(1, 1), stride=(1, 1))

)

(aggregator): Aggregate(

(to_v): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

(att): Attention(

(to_qk): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(pos_emb): RelPosEmb(

(rel_height): Embedding(319, 128)

(rel_width): Embedding(319, 128)

)

)

)

GMAUpdateBlock(

(encoder): BasicMotionEncoder(

(convc1): Conv2d(324, 256, kernel_size=(1, 1), stride=(1, 1))

(convc2): Conv2d(256, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(convf1): Conv2d(2, 128, kernel_size=(7, 7), stride=(1, 1), padding=(3, 3))

(convf2): Conv2d(128, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv): Conv2d(256, 126, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(gru): SepConvGRU(

(convz1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convr1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convq1): Conv2d(512, 128, kernel_size=(1, 5), stride=(1, 1), padding=(0, 2))

(convz2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

(convr2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

(convq2): Conv2d(512, 128, kernel_size=(5, 1), stride=(1, 1), padding=(2, 0))

)

(flow_head): FlowHead(

(conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(conv2): Conv2d(256, 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu): ReLU(inplace=True)

)

(mask): Sequential(

(0): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(256, 576, kernel_size=(1, 1), stride=(1, 1))

)

(aggregator): Aggregate(

(to_v): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

[RAFT论文](https://arxiv.org/abs/2003.12039)

[GMA论文](https://arxiv.org/pdf/2104.02409.pdf)

[官方代码实现](https://github.com/zacjiang/GMA)

文章出处登录后可见!