Overview

Title: Multi-instance learning based on representative instance and feature mapping[0]

@article{WANG2016790,
title = {Multi-instance learning based on representative instance and feature mapping},
journal = {Neurocomputing},
author = {Xingqi Wang and Dan Wei and Hui Cheng and Jinglong Fang},
volume = {216},
pages = {790-796},
year = {2016}
}

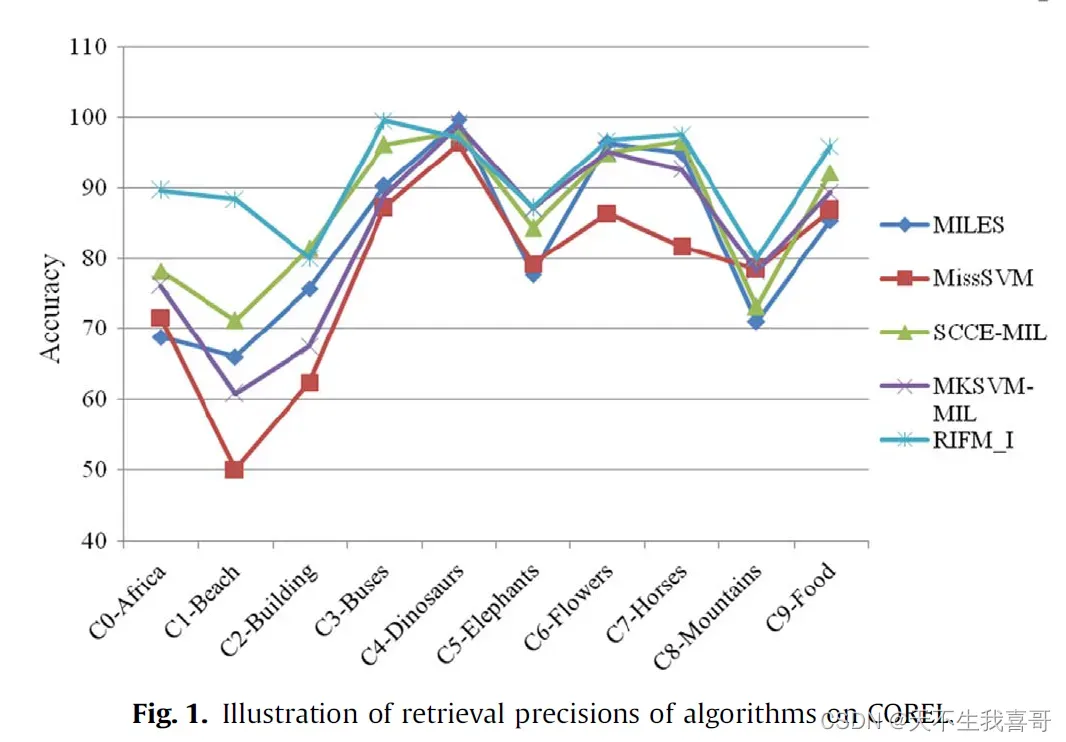

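Abstract: In this paper, we propose two multi-instance learning (MIL) algorithms based on representative instances and feature mapping: Representative Instance and Feature Mapping for Instances (RIFM-I) and Representative Instance and Feature Mapping for Bags (RIFM-B). Both algorithms first select representative positive and negative instances from the positive and negative bags, respectively, and then map the selected instances and bags into a feature space, where the MIL problem is transformed into a conventional single-instance learning problem. Finally, Support Vector Data Description (SVDD) is introduced to solve the transformed problem. Experiments on the MUSK datasets show that RIFM-I outperforms RIFM-B and provides the highest classification accuracy compared with the best results obtained by all other methods, while RIFM-B achieves competitive average accuracy. In addition, RIFM-I is applied to the COREL image repository for content-based image retrieval. The experimental results show that RIFM-I outperforms other image retrieval methods such as MILES and MissSVM and can clearly distinguish the two easily confused categories Beach and Mountains. Results on ten datasets commonly used for MIL also show that RIFM-I achieves better results in most cases.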

Introduction

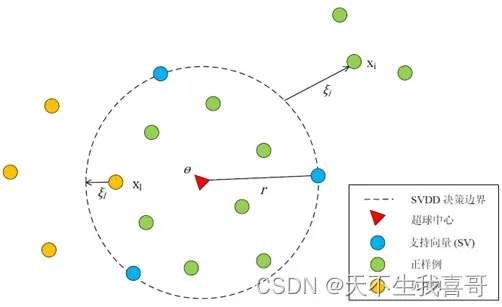

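We propose two multi-instance learning methods based on representative instances and feature mapping. One is an instance-level algorithm, called RIFM-I; the other is a bag-level algorithm, called RIFM-B. Both methods select representative positive and negative instances from the positive and negative bags, respectively, and then map the representative instances into a feature space. The multi-instance learning problem is thereby transformed, in the feature space, into a conventional single-instance machine learning problem, which is then solved with SVDD.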

RIFM-I and RIFM-B

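Basic definitions

Training bag set: $\mathcal{B} = \{B_1^+, \dots, B_{N^+}^+, B_1^-, \dots, B_{N^-}^-\}$, where $B_i^+$ denotes a positive bag and $B_j^-$ denotes a negative bag.

Instance set: $\mathcal{X} = \{x_{ij}\}$, where $x_{ij}$ is the $j$-th instance of bag $B_i$.

The multi-instance learning problem is to find a decision function, denoted $f$, that classifies instances correctly. In multi-instance learning, the label $l(B_i)$ of a bag $B_i$ is related to the labels $l(x_{ij})$ of its instances as follows:

$$l(B_i) = \max_{j} \, l(x_{ij}), \qquad l(\cdot) \in \{+1, -1\}$$

That is, a bag is positive if at least one of its instances is positive, and negative only if all of its instances are negative. The multi-instance learning problem is therefore to find a decision function $f$ that correctly classifies the instances $x_{ij}$.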
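The relationship between bag labels and instance labels can be sketched in a few lines, assuming the standard MIL assumption (a bag is positive if and only if at least one of its instances is positive). The function name `bag_label` and the +1/-1 label encoding are illustrative choices, not taken from the paper.

```python
from typing import Sequence


def bag_label(instance_labels: Sequence[int]) -> int:
    """Return +1 if any instance label is +1, otherwise -1.

    Assumes the standard MIL assumption and a +1/-1 label encoding.
    """
    if not instance_labels:
        raise ValueError("a bag must contain at least one instance")
    return 1 if any(label == 1 for label in instance_labels) else -1
```

In practice the instance labels inside positive bags are unknown, which is exactly why the decision function has to be learned from bag-level labels.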

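The method proposed in the paper, Representative Instance and Feature Mapping (RIFM), looks for a decision function in the form of a hypersphere that satisfies the following conditions:

1. The radius is as small as possible;

2. The positive instances in positive bags are enclosed by the hypersphere, while all negative instances in negative bags and the negative instances in positive bags are excluded.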
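A hypersphere used as a decision function can be sketched as below. This is a minimal illustration that assumes instances have already been mapped into the feature space and that the center and radius are given; the name `hypersphere_decision` and the choice to count boundary points as positive are assumptions.

```python
import math
from typing import Sequence


def hypersphere_decision(x: Sequence[float],
                         center: Sequence[float],
                         radius: float) -> int:
    """Return +1 if x lies inside or on the hypersphere, otherwise -1."""
    return 1 if math.dist(x, center) <= radius else -1
```

A smaller radius makes the positive region tighter, which is why the first condition asks for the radius to be as small as possible while still enclosing the positive instances.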

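Optimization objective

Optimization objective of RIFM-I:

Here the hypersphere is described by its center and radius, slack variables capture the tolerated error, and a feature mapping function carries instances into the feature space. The formulation distinguishes two cases. This is not a convex quadratic programming problem, so it is hard to solve directly. It can, however, be solved iteratively by solving a sequence of convex quadratic programming problems.

The center and radius, which are the parameters of the decision function, can be obtained through repeated training.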
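For orientation, the classical SVDD formulation with negative examples (Tax and Duin) is sketched below. It is included only to show the general shape of a hypersphere objective. The symbols ($a$ for the center, $R$ for the radius, $\xi$ for the slack variables, $\phi$ for the feature mapping, $C_1$ and $C_2$ for the trade-off constants, $\mathcal{P}$ and $\mathcal{N}$ for the index sets of target and outlier samples) are assumptions, and the RIFM objectives are not claimed to match it term for term.

```latex
\min_{R,\,a,\,\xi}\quad R^2 + C_1 \sum_{i \in \mathcal{P}} \xi_i + C_2 \sum_{j \in \mathcal{N}} \xi_j
\qquad \text{s.t.} \qquad
\begin{aligned}
\|\phi(x_i) - a\|^2 &\le R^2 + \xi_i, & i &\in \mathcal{P},\\
\|\phi(x_j) - a\|^2 &\ge R^2 - \xi_j, & j &\in \mathcal{N},\\
\xi_i,\ \xi_j &\ge 0 .
\end{aligned}
```

Target samples are pulled inside the sphere and outlier samples are pushed outside, with the slack variables absorbing violations.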

RIFM-B

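Similarly, the center and radius, which are the parameters of the decision function, can also be obtained through repeated training.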

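Representative instance selection

A quick aside: the optimization objectives above are simply the optimization objectives of a hypersphere.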

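Positive representative instance selection

Definition 1: If $t$ is a positive instance, the probability that an instance $x$ is a positive instance is written $\Pr(l(x) = +1 \mid l(t) = +1)$, where $l(x)$ and $l(t)$ are the labels of instances $x$ and $t$, respectively. Its definition involves a parameter $\sigma_t$ that is much greater than 0.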
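As an illustration, one form consistent with Definition 1 is a Gaussian-shaped function of the distance between $x$ and $t$. This form is an assumption borrowed from MILD-style disambiguation methods and is not confirmed by this post; the symbol $\sigma_t$ for the parameter is likewise assumed.

```latex
\Pr\bigl(l(x) = +1 \mid l(t) = +1\bigr) = \exp\!\left(-\frac{\|x - t\|^2}{\sigma_t^2}\right)
```

Under this assumed form the probability equals 1 at $x = t$ and decays as $x$ moves away from $t$.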

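Definition 2: If $t$ is a positive instance, the probability that a bag $B_i$ is a positive bag is written $\Pr(l(B_i) = +1 \mid l(t) = +1)$ and is defined through $d(t, B_i)$, the distance between the instance and the bag, which equals the distance between $t$ and the nearest instance in the bag:

$$d(t, B_i) = \min_{x_{ij} \in B_i} \|x_{ij} - t\|$$

It can be shown that if $t$ is a positive instance, there is a threshold $\theta_t$ such that the following decision function labels a bag in accordance with Bayes decision theory:

$$h_t^{\theta_t}(B_i) = \begin{cases} +1, & d(t, B_i) \le \theta_t \\ -1, & \text{otherwise} \end{cases} \tag{7}$$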
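The instance-to-bag distance and the threshold rule can be sketched as follows. This is a minimal illustration assuming Euclidean distance and a non-strict comparison against the threshold; the names `instance_bag_distance`, `label_bag`, and `theta` are hypothetical.

```python
import math
from typing import Sequence

Point = Sequence[float]


def instance_bag_distance(t: Point, bag: Sequence[Point]) -> float:
    """Distance from instance t to a bag: distance to the bag's nearest instance."""
    return min(math.dist(t, x) for x in bag)


def label_bag(t: Point, bag: Sequence[Point], theta: float) -> int:
    """Label a bag +1 if it lies within distance theta of t, otherwise -1."""
    return 1 if instance_bag_distance(t, bag) <= theta else -1
```

For example, `label_bag([0.0, 0.0], [[3.0, 4.0], [1.0, 0.0]], 1.5)` labels the bag positive because its nearest instance is at distance 1 from the candidate instance.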

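In summary, if $t$ is a positive instance, there must be a decision function of the form (7) that labels the bags; that is, the distance from a positive bag to $t$ should be smaller than the distance from a negative bag to $t$. If $t$ is not a positive instance, we can still define a decision function according to (7), but that decision function cannot label the bags accurately enough, and this is precisely the basis for predicting positive instances. In the discussion below it is therefore not necessary to require that $t$ be a positive instance; it may be either positive or negative.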

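Definition 3: When the decision function in (7), denoted $h_t^{\theta_t}$ with threshold $\theta_t$, is used to label the bags, its accuracy is defined as:

$$P_t(\theta_t) = \frac{1}{N} \sum_{i=1}^{N} \frac{1 + h_t^{\theta_t}(B_i)\, l(B_i)}{2}$$

where $l(B_i)$ is the label of bag $B_i$ and $N$ is the number of training bags.

To find the best parameter, this accuracy is maximized:

$$\theta_t^* = \arg\max_{\theta_t} P_t(\theta_t)$$

The best labeling accuracy is then

$$P^*(t) = P_t(\theta_t^*) \tag{10}$$

In essence, $P^*(t)$ reflects the ability of instance $t$ to label the bags. The larger the value of $P^*(t)$, the more likely $t$ is a positive instance.

It is not hard to show that, for $t$, the best accuracy $P^*(t)$ is attained by a threshold in the set $\{d(t, B_i^+)\}$, where $d(t, B_i^+)$ is the distance from $t$ to the positive bag $B_i^+$. Only the distances from $t$ to each positive bag need to be tried as thresholds, so the computational cost is modest.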
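The threshold search can be sketched as below: for a candidate instance, only its distances to the positive bags are tried as thresholds, and the one giving the highest bag-labeling accuracy is kept. This is a sketch under assumptions (Euclidean distance, +1/-1 bag labels, non-strict threshold comparison); `best_accuracy` and its parameters are hypothetical names.

```python
import math
from typing import Sequence, Tuple

Point = Sequence[float]
Bag = Sequence[Point]


def bag_distance(t: Point, bag: Bag) -> float:
    """Distance from t to the nearest instance in the bag."""
    return min(math.dist(t, x) for x in bag)


def best_accuracy(t: Point, bags: Sequence[Bag],
                  labels: Sequence[int]) -> Tuple[float, float]:
    """Return (best threshold, best accuracy) for candidate instance t."""
    dists = [bag_distance(t, bag) for bag in bags]
    # Candidate thresholds: distances from t to the positive bags only.
    candidates = [d for d, y in zip(dists, labels) if y == 1]
    if not candidates:
        raise ValueError("at least one positive bag is required")
    best_theta, best_acc = candidates[0], -1.0
    for theta in candidates:
        # Count bags whose thresholded label matches the true bag label.
        correct = sum(1 for d, y in zip(dists, labels)
                      if (1 if d <= theta else -1) == y)
        acc = correct / len(bags)
        if acc > best_acc:
            best_theta, best_acc = theta, acc
    return best_theta, best_acc
```

An instance that sits close to every positive bag and far from every negative bag reaches an accuracy of 1.0, while an instance taken from a negative region scores lower; that gap is what the selection step relies on.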

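Furthermore, the following procedure can be used to predict the positive instances in positive bags. For each instance in each positive bag, the best accuracy is computed with (10). The instance with the largest value in each positive bag is taken as the predicted positive instance of that bag.

In fact, it is not necessary to select exactly one representative instance from each positive bag. We use another strategy instead: for the instances in positive bags, the values computed by (10) are sorted in descending order, and the first $m$ instances form the positive instance set.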

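Negative representative instance selection

Similarly, we can select a set of representative negative instances from the negative bags, and we are not limited to a single representative negative instance. The best accuracy of each instance in a negative bag can be computed with (10). These values are then sorted in ascending order, and the first $n$ instances form the negative instance set.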
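Both selection steps amount to ranking instances by their best accuracy and keeping the head of the list: descending order for positive representatives, ascending order for negative ones. The sketch below assumes the scores have already been computed; `select_representatives` and its parameters are hypothetical names.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]


def select_representatives(scored: Sequence[Tuple[Point, float]],
                           k: int,
                           positive: bool) -> List[Point]:
    """Keep the k instances with the highest scores (positive=True)
    or the lowest scores (positive=False)."""
    ranked = sorted(scored, key=lambda pair: pair[1], reverse=positive)
    return [instance for instance, _ in ranked[:k]]
```

Called with `positive=True` and `k = m` on the instances of positive bags, this yields a positive instance set; called with `positive=False` and `k = n` on the instances of negative bags, it yields a negative instance set.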

Feature mapping

Instance-level feature mapping

The RIFM-I training set

Bag-level feature mapping:

The RIFM-B training set
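To make the idea of a bag-level feature mapping concrete, here is a minimal sketch in the style of MILES-like embeddings: each bag becomes a fixed-length vector with one similarity value per representative instance. This specific mapping (Gaussian similarity to the nearest instance in the bag) is an assumption for illustration and is not claimed to be the mapping used by RIFM-B; `bag_embedding` and `sigma` are hypothetical names.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def bag_embedding(bag: Sequence[Point],
                  representatives: Sequence[Point],
                  sigma: float = 1.0) -> List[float]:
    """Map a bag to one feature per representative instance.

    Each feature is exp(-d^2 / sigma^2), where d is the distance from the
    representative instance to its nearest instance in the bag (assumed form).
    """
    features = []
    for r in representatives:
        d = min(math.dist(r, x) for x in bag)
        features.append(math.exp(-(d * d) / (sigma * sigma)))
    return features
```

After such a mapping every bag is an ordinary fixed-length vector, so a single-instance learner such as SVDD can be trained on the mapped data.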

Experimental results
