MobileNet v3

ONNX export reference: "torchvision onnx 模型导出" (torchvision ONNX model export), 星魂非梦's blog on CSDN

1. Model description

MobileNet v3 comes from the paper "Searching for MobileNetV3".

| Model structure | Top-1 error (%) | Top-5 error (%) |
|---|---|---|
| MobileNet V3 Large | 25.96 | 8.66 |
| MobileNet V3 Small | 32.33 | 12.6 |

| Model structure | Model size |
|---|---|
| MobileNet V3 Large ONNX | 22.1 MB |
| MobileNet V3 Small ONNX | 10.3 MB |
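As a rough sanity check on these file sizes, an ONNX file that stores float32 weights needs about 4 bytes per parameter. The sketch below assumes the parameter counts that torchvision publishes for the two models (5,483,032 and 2,542,856; these figures are not from this post) and ignores graph metadata and non-parameter tensors, so it should be read as a lower-bound estimate only.

```python
# Rough lower bound on ONNX file size: parameter count x 4 bytes (float32).
# The parameter counts are assumed from torchvision's published model
# metadata; they are not measured in this post.
BYTES_PER_FLOAT32 = 4

PARAM_COUNTS = {
    "MobileNet V3 Large": 5_483_032,
    "MobileNet V3 Small": 2_542_856,
}


def estimated_size_mb(num_params: int) -> float:
    """Return the float32 weight size in MB (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_FLOAT32 / 1e6


for name, count in PARAM_COUNTS.items():
    print(f"{name}: ~{estimated_size_mb(count):.1f} MB of weights")
```

The estimates come out a little below the measured 22.1 MB and 10.3 MB, which is plausible once the graph structure and other stored tensors are included.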

2. ONNX export

Reference: "轻量化模型之mobilenet v2" (lightweight models: MobileNet v2), 星魂非梦's blog on CSDN

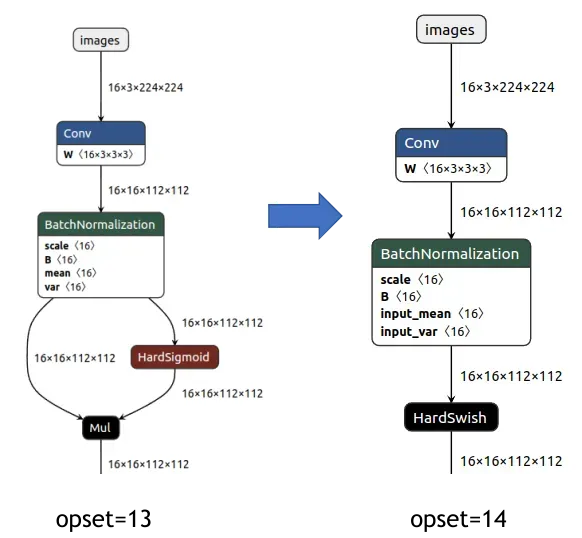

ONNX supports HardSwish as of opset 14, so the opset is set to 14 and the export call needs a small change:

    export_onnx(model, im, file, 14, train=True, dynamic=False, simplify=True)  # opset 14
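For readers who do not have the export_onnx helper from the referenced post, the same opset choice can be sketched directly with torch.onnx.export. This is a minimal sketch and not the author's helper: the function name export_mobilenet_v3_small, the output file name, the 224×224 input size, and exporting in eval mode are all assumptions made for illustration. torch and torchvision are imported inside the function, so defining it does not require them.

```python
def export_mobilenet_v3_small(path: str = "mobilenet_v3_small.onnx",
                              opset: int = 14) -> str:
    """Export torchvision's MobileNet V3 Small to ONNX (hypothetical helper)."""
    # Imported here so that merely defining the helper does not need PyTorch.
    import torch
    import torchvision

    # Randomly initialised weights; load a checkpoint here if one is needed.
    model = torchvision.models.mobilenet_v3_small()
    model.eval()

    # Dummy input: batch of 1, 3 channels, 224x224 (assumed input size).
    dummy = torch.randn(1, 3, 224, 224)

    torch.onnx.export(
        model,
        dummy,
        path,
        opset_version=opset,  # 14 is the first opset with a HardSwish operator
        input_names=["input"],
        output_names=["output"],
    )
    return path
```

Calling export_mobilenet_v3_small() writes the file and returns its path; passing a lower opset is the quickest way to see how the exporter handles the missing HardSwish operator.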

MobileNet v3 uses three activation functions: ReLU, HardSwish, and HardSigmoid (the last one is used in the SE module).

Hardsigmoid — PyTorch 1.11.0 documentation

Hardswish — PyTorch 1.11.0 documentation
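The two "hard" activations are piecewise-linear approximations, defined in the PyTorch documentation as Hardsigmoid(x) = ReLU6(x + 3) / 6 and Hardswish(x) = x · ReLU6(x + 3) / 6. A minimal pure-Python sketch on scalars follows; the function names are illustrative and are not PyTorch APIs.

```python
def relu6(x: float) -> float:
    """Clamp x to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_sigmoid(x: float) -> float:
    """Piecewise-linear sigmoid: 0 for x <= -3, 1 for x >= 3, x/6 + 0.5 between."""
    return relu6(x + 3.0) / 6.0


def hard_swish(x: float) -> float:
    """x scaled by hard_sigmoid(x): 0 for x <= -3, x for x >= 3."""
    return x * hard_sigmoid(x)


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  hard_sigmoid={hard_sigmoid(x):.4f}  hard_swish={hard_swish(x):+.4f}")
```

Both functions need only an add, a clamp, and a multiply, which is why they are cheaper than sigmoid and swish on mobile hardware.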

torchvision provides two MobileNet v3 models; we start with MobileNet V3 Small.

3. MobileNet V3 Small

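In the figure above, the dashed parts mark modules that are optional; see the table on the right of the figure. The 3×3 and 5×5 entries in the table are the kernel sizes of the depthwise CBA (Conv + BN + Activation) layer in the bneck block.

In the paper:

Supplement:

Python's partial function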
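A small standalone sketch of the pre-binding behaviour of functools.partial. The make_se function and its string arguments are hypothetical stand-ins chosen for illustration; they are not torchvision code.

```python
from functools import partial


def make_se(channels: int, activation: str = "ReLU",
            scale_activation: str = "Sigmoid") -> str:
    """Hypothetical stand-in for a layer constructor; returns a description."""
    return f"SE(channels={channels}, act={activation}, scale={scale_activation})"


# Pre-bind one keyword argument; the result is a new callable that still
# accepts the remaining arguments.
se_layer = partial(make_se, scale_activation="Hardsigmoid")

print(se_layer(16))                     # SE(channels=16, act=ReLU, scale=Hardsigmoid)
print(se_layer(16, activation="GELU"))  # bound keywords do not block other arguments
```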

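functools.partial(func[, *args][, **kwargs]) takes a function as its first argument; the remaining arguments are bound to that function in advance. torchvision uses it to set the default for the SE module:

    se_layer: Callable[..., nn.Module] = partial(SElayer, scale_activation=nn.Hardsigmoid)

The SElayer code is as follows (SElayer is the name under which torchvision's mobilenetv3.py imports SqueezeExcitation):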

    from typing import Callable

    import torch
    from torch import Tensor


    class SqueezeExcitation(torch.nn.Module):
        def __init__(
            self,
            input_channels: int,
            squeeze_channels: int,
            activation: Callable[..., torch.nn.Module] = torch.nn.ReLU,
            scale_activation: Callable[..., torch.nn.Module] = torch.nn.Sigmoid,
        ) -> None:
            super().__init__()
            # Squeeze: global average pooling down to 1x1 per channel.
            self.avgpool = torch.nn.AdaptiveAvgPool2d(1)
            # Excitation: two 1x1 convolutions acting as fully connected layers.
            self.fc1 = torch.nn.Conv2d(input_channels, squeeze_channels, 1)
            self.fc2 = torch.nn.Conv2d(squeeze_channels, input_channels, 1)
            self.activation = activation()
            self.scale_activation = scale_activation()

        def _scale(self, input: Tensor) -> Tensor:
            scale = self.avgpool(input)
            scale = self.fc1(scale)
            scale = self.activation(scale)
            scale = self.fc2(scale)
            return self.scale_activation(scale)

        def forward(self, input: Tensor) -> Tensor:
            # Reweight each input channel by its learned scale.
            scale = self._scale(input)
            return scale * input

The code for the bneck block in the figure above is as follows:

    # Excerpt from torchvision's mobilenetv3.py. InvertedResidualConfig,
    # ConvNormActivation, SElayer and _make_divisible are defined or imported
    # in that file and are not repeated here.
    class InvertedResidual(nn.Module):
        # Implemented as described at section 5 of MobileNetV3 paper
        def __init__(self, cnf: InvertedResidualConfig, norm_layer: Callable[..., nn.Module],
                     se_layer: Callable[..., nn.Module] = partial(SElayer, scale_activation=nn.Hardsigmoid)):
            super().__init__()
            if not (1 <= cnf.stride <= 2):
                raise ValueError('illegal stride value')

            # The skip connection is only used when the shape is unchanged.
            self.use_res_connect = cnf.stride == 1 and cnf.input_channels == cnf.out_channels

            layers: List[nn.Module] = []
            activation_layer = nn.Hardswish if cnf.use_hs else nn.ReLU

            # expand
            if cnf.expanded_channels != cnf.input_channels:
                layers.append(ConvNormActivation(cnf.input_channels, cnf.expanded_channels, kernel_size=1,
                                                 norm_layer=norm_layer, activation_layer=activation_layer))

            # depthwise
            stride = 1 if cnf.dilation > 1 else cnf.stride
            layers.append(ConvNormActivation(cnf.expanded_channels, cnf.expanded_channels, kernel_size=cnf.kernel,
                                             stride=stride, dilation=cnf.dilation, groups=cnf.expanded_channels,
                                             norm_layer=norm_layer, activation_layer=activation_layer))
            # optional squeeze-and-excitation (the dashed part of the figure)
            if cnf.use_se:
                squeeze_channels = _make_divisible(cnf.expanded_channels // 4, 8)
                layers.append(se_layer(cnf.expanded_channels, squeeze_channels))

            # project (no activation after the 1x1 projection)
            layers.append(ConvNormActivation(cnf.expanded_channels, cnf.out_channels, kernel_size=1,
                                             norm_layer=norm_layer, activation_layer=None))

            self.block = nn.Sequential(*layers)
            self.out_channels = cnf.out_channels
            self._is_cn = cnf.stride > 1

        def forward(self, input: Tensor) -> Tensor:
            result = self.block(input)
            if self.use_res_connect:
                result += input
            return result

4. MobileNet V3 Large

5. Core module

InvertedResidual:

The figure above shows one representative block; the dashed parts may be absent. See the figures above for details.
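The squeeze width in the InvertedResidual code is computed as _make_divisible(cnf.expanded_channels // 4, 8), but the helper itself is not shown in this post. The sketch below follows the rounding rule commonly used for this helper in torchvision; treat the exact rule as an assumption and check it against the installed version. It rounds to the nearest multiple of the divisor, never goes below a minimum value, and steps up one multiple if rounding would lose more than 10%. The name make_divisible (without the leading underscore) is used here to mark it as a standalone copy.

```python
from typing import Optional


def make_divisible(v: float, divisor: int, min_value: Optional[int] = None) -> int:
    """Round v to the nearest multiple of divisor, never going below min_value
    and never dropping more than 10% under v."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # If rounding down removed more than 10%, step up to the next multiple.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


# Squeeze channels for a few expanded channel counts, computed the same way
# as _make_divisible(cnf.expanded_channels // 4, 8) in the block code.
for expanded in (16, 72, 96, 240, 576):
    print(expanded, "->", make_divisible(expanded // 4, 8))
```

Keeping channel counts at multiples of 8 is a common choice because it tends to map well onto vectorised hardware kernels.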

In addition, here is the link to the ONNX files I exported myself:
