Loading the data

```matlab
% Load Data
data = load('ex1data2.txt');
X = data(:, 1:2);
y = data(:, 3);
m = length(y);

% Print out some data points
% First 10 examples from the dataset
fprintf(' x = [%.0f %.0f], y = %.0f \n', [X(1:10,:) y(1:10,:)]');
```

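This part is Andrew Ng's starter code. `data = load('ex1data2.txt');` first reads the data file. `X = data(:, 1:2);` and `y = data(:, 3);` then take the first and second columns as the features `X` and the third column as the target values `y`. Finally, `m = length(y);` gives the number of training examples.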
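Since `ex1data2.txt` isn't reproduced here, the sketch below uses a small hypothetical matrix with the same three-column layout (size, bedrooms, price, as implied by the prediction step later on) to show what the column slicing produces. The numbers are made up.

```matlab
% Hypothetical stand-in for ex1data2.txt (made-up values):
% columns are [size in sq-ft, number of bedrooms, price]
data = [1000 2 200000;
        1500 3 280000;
        2000 3 350000;
        2500 4 420000];
X = data(:, 1:2);   % first two columns: features
y = data(:, 3);     % third column: target (price)
m = length(y);      % number of training examples (rows of the column vector y)
fprintf('m = %d, size(X) = [%d %d]\n', m, size(X, 1), size(X, 2));
```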

Feature normalization

```matlab
function [X_norm, mu, sigma] = featureNormalize(X)
%FEATURENORMALIZE Normalizes the features in X
% FEATURENORMALIZE(X) returns a normalized version of X where
% the mean value of each feature is 0 and the standard deviation
% is 1. This is often a good preprocessing step to do when
% working with learning algorithms.

% You need to set these values correctly
X_norm = X;
mu = zeros(1, size(X, 2));
sigma = zeros(1, size(X, 2));

% ====================== YOUR CODE HERE ======================
% Instructions: First, for each feature dimension, compute the mean
% of the feature and subtract it from the dataset,
% storing the mean value in mu. Next, compute the
% standard deviation of each feature and divide
% each feature by its standard deviation, storing
% the standard deviation in sigma.
%
% Note that X is a matrix where each column is a
% feature and each row is an example. You need
% to perform the normalization separately for
% each feature.
%
% Hint: You might find the 'mean' and 'std' functions useful.
%
mu = mean(X);                % 1 x n row vector of column means
sigma = std(X);              % 1 x n row vector of column standard deviations
X_norm = (X - mu) ./ sigma;  % mu and sigma are applied to every row (implicit expansion)
% ============================================================

end
```

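The function above performs feature normalization. `mean()` and `std()` compute the mean `mu` and the standard deviation `sigma` of each column of the matrix, and `X_norm=(X-mu)./sigma;` then rescales every feature to zero mean and unit standard deviation, so that all features take values in roughly the same range. Subtracting the 1×n row vector `mu` from the m×n matrix `X` relies on implicit expansion (broadcasting), which Octave and MATLAB R2016b or later support; on older MATLAB versions, `bsxfun(@minus, X, mu)` and `bsxfun(@rdivide, ..., sigma)` do the same job.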
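A quick way to sanity-check the normalization is to apply the same three lines to a small hypothetical matrix and confirm that every column of the result has mean 0 and standard deviation 1, up to floating-point rounding:

```matlab
% Small hypothetical feature matrix: rows are examples, columns are features
X = [1000 2;
     1500 3;
     2000 3;
     2500 4];
mu = mean(X);                % column means
sigma = std(X);              % column standard deviations (std normalizes by N-1)
X_norm = (X - mu) ./ sigma;  % implicit expansion across the rows
disp(mean(X_norm));          % should be (numerically) [0 0]
disp(std(X_norm));           % should be (numerically) [1 1]
```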

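Note that the `mu` and `sigma` computed here must be kept as well. The linear regression model is fitted on normalized data, so any input we later want to predict on must be normalized in exactly the same way, using these same `mu` and `sigma`.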

Calling the normalization function:

```matlab
% Scale features and set them to zero mean
[X, mu, sigma] = featureNormalize(X);
```

Adding the $x_0$ term

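As is well known, the hypothesis in linear regression is

$$h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \cdots + \theta_n x_n$$

To make this equation more uniform, we add one extra component $x_0 = 1$, which gives

$$h_\theta(x) = \theta_0 x_0 + \theta_1 x_1 + \cdots + \theta_n x_n = \theta^T x, \qquad x = \begin{bmatrix} x_0 \\ x_1 \\ \vdots \\ x_n \end{bmatrix}, \quad \theta = \begin{bmatrix} \theta_0 \\ \theta_1 \\ \vdots \\ \theta_n \end{bmatrix}$$

For the linear regression problem as a whole, with $m$ training examples, let

$$X = \begin{bmatrix} (x^{(1)})^T \\ (x^{(2)})^T \\ \vdots \\ (x^{(m)})^T \end{bmatrix} = \begin{bmatrix} 1 & x_1^{(1)} & \cdots & x_n^{(1)} \\ 1 & x_1^{(2)} & \cdots & x_n^{(2)} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_1^{(m)} & \cdots & x_n^{(m)} \end{bmatrix}$$

Then the predictions for all examples are given at once by

$$\begin{bmatrix} h_\theta(x^{(1)}) \\ \vdots \\ h_\theta(x^{(m)}) \end{bmatrix} = X\theta$$

which makes the computation convenient. So we prepend a column of ones to the feature matrix `X`: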

```matlab
% Add intercept term to X
X = [ones(m, 1) X];
```
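The small hypothetical example below checks this numerically: after the column of ones is prepended, the single matrix product `X1 * theta` gives the same predictions as writing out $\theta_0 + \theta_1 x_1 + \theta_2 x_2$ for each example. `X1` is a name introduced only for this sketch, and since MATLAB indexes from 1, `theta(1)` plays the role of $\theta_0$.

```matlab
% Hypothetical normalized features (m = 3 examples, n = 2 features)
X = [ 0.5 -1.0;
     -0.5  0.0;
      0.0  1.0];
m = size(X, 1);
theta = [10; 2; -3];          % hypothetical parameters [theta_0; theta_1; theta_2]
X1 = [ones(m, 1), X];         % prepend the x_0 = 1 column
h_vec = X1 * theta;           % all predictions at once: X*theta
% Written out term by term: theta_0 + theta_1*x_1 + theta_2*x_2
h_loop = theta(1) + theta(2) * X(:, 1) + theta(3) * X(:, 2);
disp([h_vec h_loop]);
```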

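Gradient descent

The gradient descent update rule is

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \qquad (j = 0, 1, \ldots, n \text{, all updated simultaneously})$$

where

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

Taking the partial derivative gives

$$\frac{\partial}{\partial \theta_j} J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

The summation can be written in matrix form: stacking these partial derivatives for $j = 0, \ldots, n$ into a vector gives

$$\nabla_\theta J(\theta) = \frac{1}{m} X^T (X\theta - y)$$

So the update of the parameter vector $\theta$ is

$$\theta := \theta - \frac{\alpha}{m} X^T (X\theta - y)$$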
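To see that the matrix form really is the same sum, the hypothetical snippet below computes the gradient both ways, as $\frac{1}{m}X^T(X\theta - y)$ and with explicit loops over $j$ and $i$, on made-up data whose first column is already the $x_0 = 1$ column:

```matlab
% Hypothetical data: m = 4 examples, intercept column plus 2 features
X = [1  0.5 -1.0;
     1 -0.5  0.0;
     1  0.0  1.0;
     1  1.0  0.5];
y = [3; 1; 2; 4];
theta = [0.5; -1; 2];   % arbitrary parameter vector
m = length(y);

% Vectorized gradient: (1/m) * X' * (X*theta - y)
grad_vec = (1 / m) * X' * (X * theta - y);

% Loop version of the same partial derivatives, one theta_j at a time
grad_loop = zeros(size(theta));
for j = 1:numel(theta)
  for i = 1:m
    h_i = X(i, :) * theta;    % h_theta(x^(i))
    grad_loop(j) = grad_loop(j) + (h_i - y(i)) * X(i, j);
  end
  grad_loop(j) = grad_loop(j) / m;
end

disp([grad_vec grad_loop]);
```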

Thanks to MATLAB's powerful vectorized operations, this is easy to implement:

```matlab
function [theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters)
%GRADIENTDESCENTMULTI Performs gradient descent to learn theta
% theta = GRADIENTDESCENTMULTI(x, y, theta, alpha, num_iters) updates theta by
% taking num_iters gradient steps with learning rate alpha

% Initialize some useful values
m = length(y); % number of training examples
J_history = zeros(num_iters, 1);

for iter = 1:num_iters

    % ====================== YOUR CODE HERE ======================
    % Instructions: Perform a single gradient step on the parameter vector
    % theta.
    %
    % Hint: While debugging, it can be useful to print out the values
    % of the cost function (computeCostMulti) and gradient here.
    %
    theta=theta-(alpha/m).*X'*(X*theta-y);
    % ============================================================

    % Save the cost J in every iteration
    J_history(iter) = computeCostMulti(X, y, theta);

end

end
```

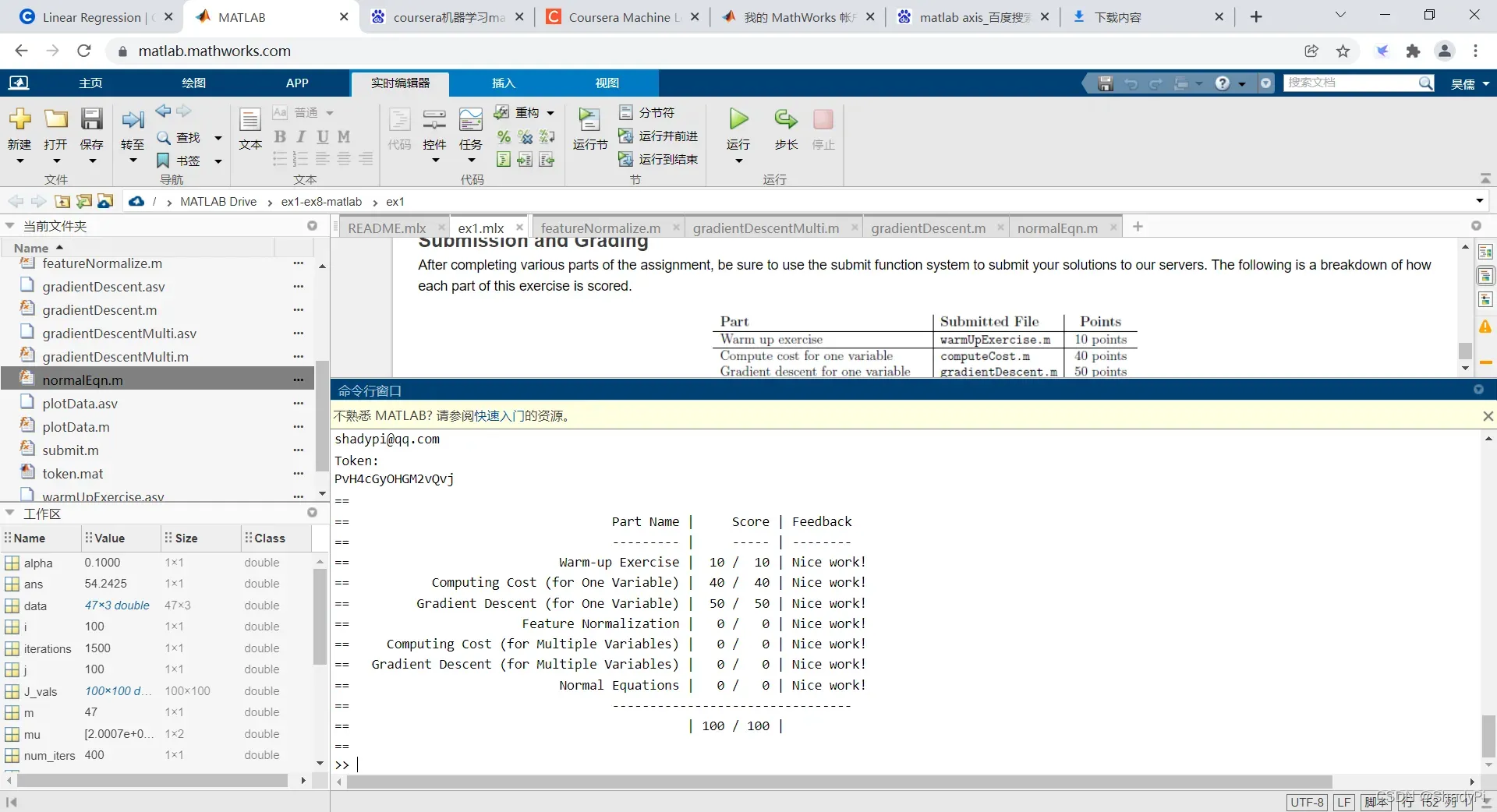
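`gradientDescentMulti` calls `computeCostMulti`, which isn't shown in this post. Assuming it implements the $J(\theta)$ defined above, a vectorized version fits on one line, because $\sum_i (h_\theta(x^{(i)}) - y^{(i)})^2 = (X\theta - y)^T (X\theta - y)$. The sketch below writes it as an anonymous function `costMulti` (a hypothetical name, used so the snippet runs as a single script) and checks it on two easy cases:

```matlab
% Sketch of J(theta) = 1/(2m) * sum((X*theta - y).^2) as an anonymous function.
% costMulti is a hypothetical stand-in for the course's computeCostMulti.m.
costMulti = @(X, y, theta) ((X * theta - y)' * (X * theta - y)) / (2 * length(y));

X = [1 0; 1 1; 1 2];   % hypothetical data: intercept column + one feature
y = [1; 2; 3];
J_zero  = costMulti(X, y, [0; 0]);   % residuals are -1, -2, -3, so J = (1 + 4 + 9) / 6
J_exact = costMulti(X, y, [1; 1]);   % y = 1 + x fits exactly, so J = 0
fprintf('J([0;0]) = %.4f, J([1;1]) = %.4f\n', J_zero, J_exact);
```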

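With this function in place, running gradient descent only requires choosing a learning rate and a number of iterations and calling it: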

```matlab
% Run gradient descent
% Choose some alpha value
alpha = 0.1;
num_iters = 400;

% Init Theta and Run Gradient Descent
theta = zeros(3, 1);
[theta, ~] = gradientDescentMulti(X, y, theta, alpha, num_iters);

% Display gradient descent's result
fprintf('Theta computed from gradient descent:\n%f\n%f\n%f',theta(1),theta(2),theta(3))
```
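Without `ex1data2.txt`, one way to watch the whole pipeline work is to run it on hypothetical noise-free data. The sketch below inlines the same update and cost as `gradientDescentMulti` and `computeCostMulti` (so it runs as one script), uses the same `alpha = 0.1` and 400 iterations, and checks that the recorded cost never increases, which is exactly what `J_history` lets you monitor. The generating formula `price = 50 + 0.1*size + 20*bedrooms` is made up.

```matlab
% Hypothetical noise-free data: price = 50 + 0.1*size + 20*bedrooms
raw = [1000 2; 1500 3; 2000 3; 2500 4; 3000 5];
y = 50 + 0.1 * raw(:, 1) + 20 * raw(:, 2);
m = length(y);

mu = mean(raw);
sigma = std(raw);
X_norm = (raw - mu) ./ sigma;   % normalize the features
X = [ones(m, 1), X_norm];       % then add the intercept column

alpha = 0.1;
num_iters = 400;
theta = zeros(3, 1);
J_history = zeros(num_iters, 1);
for iter = 1:num_iters
  theta = theta - (alpha / m) * X' * (X * theta - y);   % same update as gradientDescentMulti
  r = X * theta - y;
  J_history(iter) = (r' * r) / (2 * m);                 % cost after this step
end
fprintf('J after first step: %f, after %d steps: %f\n', J_history(1), num_iters, J_history(end));
```

If the cost ever goes up between iterations, the usual suspect is a learning rate that is too large for the (normalized) data.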

Prediction

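To predict the price of a house with features 1650 (size in square feet) and 3 (number of bedrooms), normalize these values with the stored `mu` and `sigma` and plug them into the hypothesis. The intercept parameter `theta(1,1)` multiplies $x_0 = 1$, so it is not normalized: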

```matlab
% Estimate the price of a 1650 sq-ft, 3 br house
% ====================== YOUR CODE HERE ======================
price = theta(1,1)+theta(2,1)*(1650-mu(1))/sigma(1)+theta(3,1)*(3-mu(2))/sigma(2); % Enter your price formula here
% ============================================================

fprintf('Predicted price of a 1650 sq-ft, 3 br house (using gradient descent):\n $%f', price);
```
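The same prediction can also be written in vectorized form, which scales to any number of features: normalize the raw feature row with the training `mu` and `sigma`, prepend the 1 for $x_0$, and multiply by `theta`. The values of `mu`, `sigma` and `theta` below are hypothetical placeholders, not the results of the run above:

```matlab
% Hypothetical values standing in for the mu, sigma and theta produced earlier
mu = [2000 3];
sigma = [600 0.8];
theta = [340000; 110000; -6000];

x_new = [1650 3];                  % raw features: size (sq-ft), bedrooms
x_norm = (x_new - mu) ./ sigma;    % normalize with the *training* mu and sigma
price_vec = [1 x_norm] * theta;    % prepend x_0 = 1, then compute theta' * x

% Same result as the element-by-element formula used above
price_scalar = theta(1) + theta(2) * (1650 - mu(1)) / sigma(1) + theta(3) * (3 - mu(2)) / sigma(2);
fprintf('%f %f\n', price_vec, price_scalar);
```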

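Copyright notice: This is an original article by the blogger ShadyPi, and the copyright belongs to the original author. In case of infringement, please contact us for removal.

Original article: https://blog.csdn.net/ShadyPi/article/details/122589273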