Deep Learning Paper: A ConvNet for the 2020s and Its PyTorch Implementation

A ConvNet for the 2020s

PDF: https://arxiv.org/pdf/2201.03545.pdf

PyTorch code: https://github.com/shanglianlm0525/CvPytorch

PyTorch code: https://github.com/shanglianlm0525/PyTorch-Networks

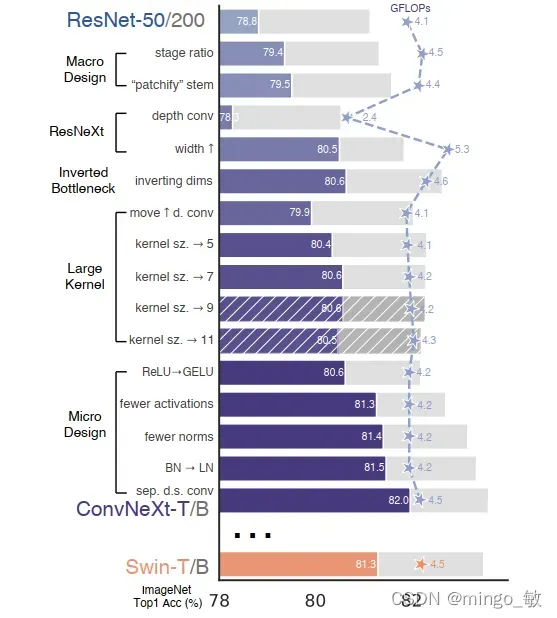

A hands-on, step-by-step walkthrough of modernizing a model: taking ResNet-50 from 76.1% to 82.0% ImageNet top-1 accuracy.

Modernizing a ConvNet: a Roadmap

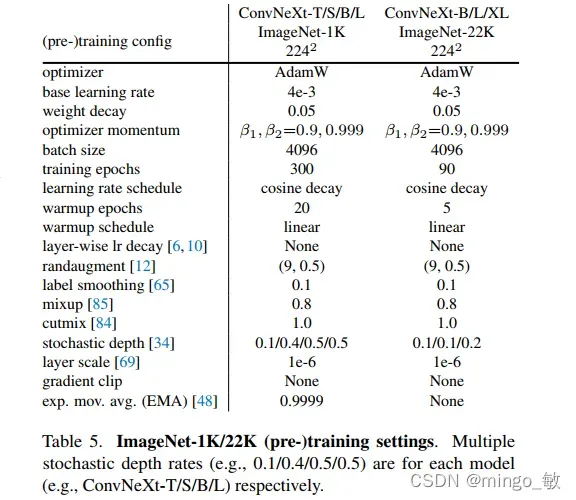

1 Training Techniques (76.1 → 78.8)

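Use a modern training recipe: longer training (300 epochs instead of 90), the AdamW optimizer, data augmentation (Mixup, CutMix, RandAugment, Random Erasing), regularization (Stochastic Depth, Label Smoothing), and updated hyperparameter settings.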
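As a rough illustration of a few of these ingredients, the sketch below combines AdamW, label smoothing and a minimal Mixup in one training step. The hyperparameter values (lr=4e-3, weight_decay=0.05, label_smoothing=0.1, alpha=0.8), the helper name `mixup` and the tiny linear stand-in model are assumptions for illustration, not the paper's training code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def mixup(x, y, num_classes, alpha=0.8):
    """Hypothetical minimal Mixup: blend each sample with a shuffled partner.

    Returns mixed inputs and soft (probability) targets.
    """
    lam = torch.distributions.Beta(alpha, alpha).sample().item()
    perm = torch.randperm(x.size(0))
    x_mixed = lam * x + (1.0 - lam) * x[perm]
    y_onehot = F.one_hot(y, num_classes).float()
    y_mixed = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mixed, y_mixed


torch.manual_seed(0)
num_classes = 10
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, num_classes))  # stand-in model

# AdamW (decoupled weight decay); the values here are illustrative assumptions
optimizer = torch.optim.AdamW(model.parameters(), lr=4e-3, weight_decay=0.05)
# Label smoothing is built into CrossEntropyLoss (PyTorch >= 1.10)
criterion = nn.CrossEntropyLoss(label_smoothing=0.1)

images = torch.randn(8, 3, 32, 32)
labels = torch.randint(0, num_classes, (8,))

x_mixed, y_soft = mixup(images, labels, num_classes)
loss = criterion(model(x_mixed), y_soft)  # probability targets are accepted since PyTorch 1.10
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(f"loss = {loss.item():.4f}")
```

In a real pipeline, libraries usually apply Mixup and CutMix per batch inside the data loader; the point here is only that the mixed labels become soft targets.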

2 Macro Design (78.8 → 79.5)

Macro-level architecture changes.

2-1 Changing the stage compute ratio (78.8 → 79.4)

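Change the number of blocks per stage (layer0 to layer3) from ResNet-50's (3, 4, 6, 3) to (3, 3, 9, 3), i.e. the 1:1:3:1 ratio of Swin-T (which itself uses (2, 2, 6, 2)). Larger Swin models use a 1:1:9:1 ratio, which can likewise be adopted for larger models.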
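Why is a block-count ratio called a "compute ratio"? Because each stage halves the resolution while doubling the channels, every block costs roughly the same, so the share of blocks is roughly the share of compute. The back-of-the-envelope sketch below checks this for ConvNeXt-T widths at a 224×224 input. It assumes the final ConvNeXt block (7×7 depthwise conv plus a 4× inverted bottleneck) rather than the ResNet bottleneck of this intermediate step, ignores the stem, downsampling layers, norms and biases, and `block_macs` is a hypothetical helper.

```python
def block_macs(h, w, c, k=7, expansion=4):
    """Approximate multiply-accumulates of one block (depthwise + two pointwise layers)."""
    dw = h * w * c * k * k                # depthwise conv: one k x k filter per channel
    pw = 2 * h * w * c * (expansion * c)  # pointwise layers: C -> 4C and 4C -> C
    return dw + pw


dims = [96, 192, 384, 768]     # ConvNeXt-T stage widths
resolutions = [56, 28, 14, 7]  # feature map sizes for a 224x224 input
depths = [3, 3, 9, 3]          # the 1:1:3:1 stage ratio

per_block = [block_macs(r, r, c) for r, c in zip(resolutions, dims)]
per_stage = [d * m for d, m in zip(depths, per_block)]
total = sum(per_stage)
for i, (m, s) in enumerate(zip(per_block, per_stage)):
    print(f"stage {i}: {m / 1e6:.1f}M MACs per block, {s / total:.1%} of block compute")
```

Since the per-block cost is nearly constant, the third stage with 9 of 18 blocks accounts for about half of the block compute.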

2-2 Using a patchify stem (79.4 → 79.5)

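Since ViT, images are first split into patches so that they can be turned into tokens. In a conventional ResNet, by contrast, the stem is a stride-2 7×7 convolution followed by a stride-2 max-pooling layer.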

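Following Swin-T, ConvNeXt replaces this stem with a 4×4 convolution with stride 4, so the sliding windows no longer overlap and each output position processes exactly one patch.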

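```python
import torch.nn as nn

in_chans, dims = 3, [96, 192, 384, 768]  # example values

# Standard ResNet stem: 7x7/2 conv + BN + ReLU + 3x3/2 max pooling (4x downsampling in total)
stem = nn.Sequential(
    nn.Conv2d(in_chans, dims[0], kernel_size=7, stride=2, padding=3, bias=False),
    nn.BatchNorm2d(dims[0]),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
)

# ConvNeXt: non-overlapping 4x4/4 "patchify" conv + LayerNorm
# (LayerNorm is the channels_first-capable class defined in the full code below)
stem = nn.Sequential(
    nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),
    LayerNorm(dims[0], eps=1e-6, data_format="channels_first"),
)
```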
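To see that both stems downsample by the same factor while only the patchify stem is non-overlapping, the sketch below runs a 224×224 input through each (norm layers omitted, channel counts 64 and 96 chosen for illustration) and checks that one output position of the 4×4/4 conv depends only on its own 4×4 patch.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(1, 3, 224, 224)

# ResNet-style stem: overlapping 7x7/2 conv followed by 3x3/2 max pooling
resnet_stem = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
)
# Patchify stem: each output position sees exactly one non-overlapping 4x4 patch
patchify_stem = nn.Conv2d(3, 96, kernel_size=4, stride=4)

y_resnet = resnet_stem(x)
y_patch = patchify_stem(x)
print(y_resnet.shape, y_patch.shape)  # both are 56x56 spatially

# Output position (row 1, col 2) is computed from input rows 4:8 and cols 8:12 only
manual = (patchify_stem.weight * x[0, :, 4:8, 8:12]).sum(dim=(1, 2, 3)) + patchify_stem.bias
print(torch.allclose(y_patch[0, :, 1, 2], manual, atol=1e-5))
```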

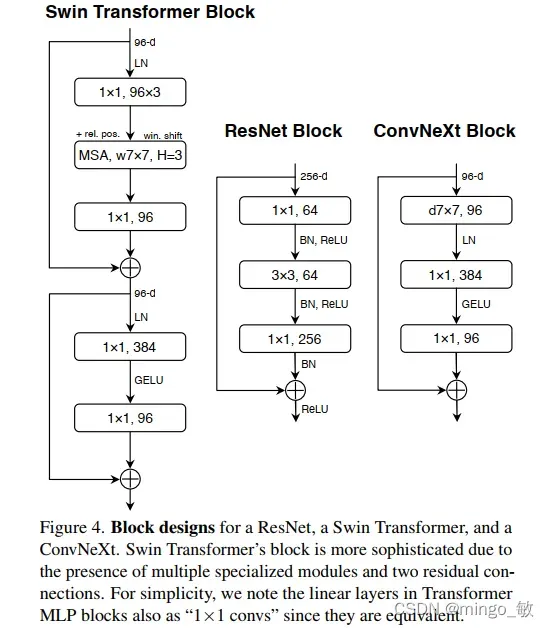

3 ResNeXt-ify (79.5 → 80.5)

ConvNeXt borrows ResNeXt's recipe for a better FLOPs/accuracy trade-off: "use more groups, expand width".

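The 3×3 convolution in the bottleneck is replaced with a depthwise convolution (a grouped convolution whose number of groups equals the number of channels), and the network width is then increased from 64 to 96 channels, matching Swin-T.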
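The savings that pay for the wider network come from the depthwise conv mixing information only spatially, within each channel. This sketch compares a dense 3×3 conv with its depthwise counterpart at 96 channels (bias disabled so the counts are exactly weights); `num_params` is a small hypothetical helper.

```python
import torch
import torch.nn as nn

c = 96
dense = nn.Conv2d(c, c, kernel_size=3, padding=1, bias=False)                # standard 3x3 conv
depthwise = nn.Conv2d(c, c, kernel_size=3, padding=1, groups=c, bias=False)  # one 3x3 filter per channel


def num_params(m):
    return sum(p.numel() for p in m.parameters())


print(num_params(dense), num_params(depthwise))  # 96*96*9 vs 96*9

x = torch.randn(1, c, 56, 56)
print(dense(x).shape == depthwise(x).shape)  # same output shape, far fewer weights
```

Channel mixing is then left to the 1×1 convolutions around it.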

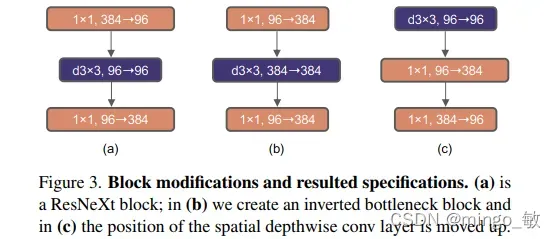

4 Inverted Bottleneck (80.5 → 80.6)

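The bottleneck in a traditional ResNet uses a wide-narrow-wide layout to reduce computation. MobileNetV2 instead introduced the inverted bottleneck, with a narrow-wide-narrow layout, which is argued to avoid the information loss caused by compressing dimensions when features are transformed between feature spaces of different dimensionality. The MLP in a Transformer block uses a similar structure: its hidden fully connected layer is 4 times as wide as its input and output.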
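A minimal sketch of such a narrow-wide-narrow block at this stage of the roadmap, with the depthwise conv still in the middle. The class name `InvertedBottleneck` and the BatchNorm/ReLU placement are assumptions for illustration; the residual connection sits on the narrow ends, so the input and output shapes match.

```python
import torch
import torch.nn as nn


class InvertedBottleneck(nn.Module):
    """Hypothetical sketch: 1x1 expand (C -> 4C), 3x3 depthwise on 4C, 1x1 project (4C -> C)."""

    def __init__(self, dim, expansion=4):
        super().__init__()
        hidden = expansion * dim
        self.block = nn.Sequential(
            nn.Conv2d(dim, hidden, kernel_size=1, bias=False),  # expand C -> 4C
            nn.BatchNorm2d(hidden),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, hidden, kernel_size=3, padding=1, groups=hidden, bias=False),  # depthwise on 4C
            nn.BatchNorm2d(hidden),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, dim, kernel_size=1, bias=False),  # project 4C -> C
            nn.BatchNorm2d(dim),
        )
        self.act = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.act(x + self.block(x))  # residual connection on the narrow ends


x = torch.randn(2, 96, 56, 56)
block = InvertedBottleneck(96)
print(block(x).shape)
```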

5 Large Kernel Sizes (80.6 → 80.6)

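Swin-T computes self-attention within local 7×7 windows, so ConvNeXt also introduces 7×7 convolution kernels; this step is mainly meant to align the two models for comparison.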

5-1 Moving up depthwise conv layer (80.6 → 79.9)

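Because the inverted bottleneck widens the middle layer, enlarging the depthwise conv in place would make it operate on the 4× wider hidden features and increase its parameters and computation. The depthwise conv is therefore moved to the beginning of the inverted bottleneck, mirroring the Transformer block, where the MSA module comes before the MLP. This reduces computation but causes a slight drop in accuracy.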
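The saving is easy to quantify: a depthwise conv costs H·W·channels·k² multiply-accumulates, so running it on C channels instead of the 4C hidden channels is exactly 4× cheaper. The arithmetic sketch below assumes a 56×56 feature map and C = 96; `dwconv_macs` is a hypothetical helper.

```python
def dwconv_macs(h, w, channels, k):
    """MACs of a k x k depthwise conv: one k*k filter per channel at every position."""
    return h * w * channels * k * k


h = w = 56  # first-stage resolution for a 224x224 input (assumed)
c = 96      # block input/output width; the hidden width is 4 * c

middle = dwconv_macs(h, w, 4 * c, k=7)  # dw conv inside the expanded (4C) hidden layer
top = dwconv_macs(h, w, c, k=7)         # dw conv moved up, before the 1x1 expansion
print(f"middle: {middle / 1e6:.1f}M MACs, top: {top / 1e6:.1f}M MACs, ratio {middle / top:.0f}x")
```

The parameter count of the depthwise layer (channels·k²) shrinks by the same factor of 4.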

5-2 Increasing the kernel size (79.9 → 80.6)

Increasing the kernel size from 3×3 to 7×7 steadily improves accuracy, and experiments indicate that the benefit saturates at 7×7.
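For stride-1 convolutions with an odd kernel size k, `padding = k // 2` keeps the spatial size unchanged, which is why the block in the full code below uses `kernel_size=7, padding=3`. This sketch sweeps the kernel sizes from 3 to 11 on an assumed 96-channel, 56×56 input and prints the depthwise parameter count (weights plus bias), which grows with k² but stays small.

```python
import torch
import torch.nn as nn

c = 96
x = torch.randn(1, c, 56, 56)
for k in (3, 5, 7, 9, 11):
    # padding = k // 2 preserves height and width for odd k at stride 1
    dw = nn.Conv2d(c, c, kernel_size=k, padding=k // 2, groups=c)
    n_params = sum(p.numel() for p in dw.parameters())
    print(f"k={k}: output {tuple(dw(x).shape)}, params {n_params}")
```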

6 Micro Design (80.6 → 82.0)

Further micro-level architecture changes.

6-1 Replacing ReLU with GELU (80.6 → 80.6)

GELU is widely used in NLP models such as BERT and GPT-2 and can be viewed as a smooth version of ReLU.

Replacing ReLU with GELU is mainly done to align with Transformers; it brings no accuracy gain.
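GELU is defined as GELU(x) = x·Φ(x), where Φ is the standard normal CDF. The sketch below computes it from that definition with `torch.erf`, compares it with `F.gelu` (whose default `approximate='none'` uses the same exact form), and shows that it tracks ReLU for large |x| while staying smooth and slightly negative around zero.

```python
import math

import torch
import torch.nn.functional as F

x = torch.linspace(-4, 4, steps=9)

# Exact GELU: x * Phi(x), with Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
gelu_manual = x * 0.5 * (1.0 + torch.erf(x / math.sqrt(2.0)))
gelu_builtin = F.gelu(x)
relu = F.relu(x)

print(torch.allclose(gelu_manual, gelu_builtin, atol=1e-6))
print(gelu_builtin)
print(relu)
```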

6-2 Fewer activation functions (80.6 → 81.3)

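A Transformer block uses only one activation function (inside its MLP), so ConvNeXt follows suit: keeping a single activation between the two 1×1 convolutions of the block and removing all others improves accuracy by 0.7 points. This suggests that applying nonlinear projections too frequently may actually hinder the flow of information through the network's features.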

6-3 Fewer normalization layers (81.3 → 81.4)

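Transformer blocks also contain few normalization layers, so ConvNeXt keeps only a single BN layer before the first 1×1 convolution; between the two 1×1 convolutions there is no normalization, only the nonlinearity. Experiments also show that adding an extra BN layer at the start of the block does not improve accuracy.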
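Putting steps 6-1 to 6-3 together, the block at this point of the roadmap can be sketched as: 7×7 depthwise conv, one BN, 1×1 conv (C → 4C), one GELU, 1×1 conv (4C → C), plus the residual. The class name `SimplifiedBlock` is hypothetical, and BN is still used here because the switch to LN only happens in the next step; the sketch counts the norm and activation layers to confirm a single one of each.

```python
import torch
import torch.nn as nn


class SimplifiedBlock(nn.Module):
    """Hypothetical sketch of the block after steps 6-1 to 6-3."""

    def __init__(self, dim):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)
        self.norm = nn.BatchNorm2d(dim)  # the single norm layer, before the first 1x1 conv
        self.pwconv1 = nn.Conv2d(dim, 4 * dim, kernel_size=1)
        self.act = nn.GELU()             # the single activation, between the two 1x1 convs
        self.pwconv2 = nn.Conv2d(4 * dim, dim, kernel_size=1)

    def forward(self, x):
        y = self.pwconv2(self.act(self.pwconv1(self.norm(self.dwconv(x)))))
        return x + y  # no activation after the residual addition


block = SimplifiedBlock(96)
x = torch.randn(2, 96, 14, 14)
print(block(x).shape)
n_act = sum(isinstance(m, (nn.GELU, nn.ReLU)) for m in block.modules())
n_norm = sum(isinstance(m, nn.BatchNorm2d) for m in block.modules())
print(n_act, n_norm)
```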

6-4 Replacing BN with LN (81.4 → 81.5)

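Transformers use LN, and prior work has found that BN has intricacies that can hurt model performance, so all BN layers are replaced with LN. Directly swapping BN for LN in the original ResNet gives suboptimal results, but with all the modifications above, ConvNeXt trains without difficulty using LN and even gains slightly.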
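Unlike BN, which normalizes each channel over the batch and spatial dimensions, LN here normalizes each spatial position over the channel dimension, independently of batch size. For (N, C, H, W) tensors this can be computed directly along dim 1, as the custom `LayerNorm` class in the full code does for `channels_first`. The sketch below re-implements that computation as a standalone function (a hypothetical name) and checks it against `F.layer_norm` applied in channels_last layout.

```python
import torch
import torch.nn.functional as F


def layer_norm_channels_first(x, weight, bias, eps=1e-6):
    """Normalize each spatial position over the channel dim of an (N, C, H, W) tensor."""
    u = x.mean(1, keepdim=True)
    s = (x - u).pow(2).mean(1, keepdim=True)  # biased variance, as F.layer_norm uses
    x = (x - u) / torch.sqrt(s + eps)
    return weight[:, None, None] * x + bias[:, None, None]


torch.manual_seed(0)
x = torch.randn(2, 96, 7, 7)
weight, bias = torch.randn(96), torch.randn(96)

out_first = layer_norm_channels_first(x, weight, bias)
# Reference: permute to channels_last, normalize over the last dim, permute back
out_last = F.layer_norm(x.permute(0, 2, 3, 1), (96,), weight, bias, 1e-6).permute(0, 3, 1, 2)
print(torch.allclose(out_first, out_last, atol=1e-5))
```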

6-5 Separate downsampling layers (81.5 → 82.0)

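In a standard ResNet, downsampling happens inside the first residual block of each stage, via a stride-2 3×3 convolution on the main path and a stride-2 1×1 convolution on the shortcut. Swin-T instead uses separate downsampling layers between stages, so ConvNeXt mimics this with a standalone stride-2 2×2 convolution. On its own this makes training unstable, so LN layers are added wherever the spatial resolution changes: after the stem, before each downsampling layer, and after the final global average pooling.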

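```python
# Excerpt from ConvNeXt.__init__ (see the full code below)
self.downsample_layers = nn.ModuleList()
# The stem also counts as a downsampling layer and is stored in downsample_layers;
# at inference time the layers are accessed by index
stem = nn.Sequential(
    nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),
    LayerNorm(dims[0], eps=1e-6, data_format="channels_first")
)
self.downsample_layers.append(stem)
for i in range(3):
    downsample_layer = nn.Sequential(
        LayerNorm(dims[i], eps=1e-6, data_format="channels_first"),
        nn.Conv2d(dims[i], dims[i+1], kernel_size=2, stride=2),
    )
    self.downsample_layers.append(downsample_layer)
# Because the network is arranged as downsample-stage-downsample-stage-...,
# the LN at the end of the stem and the LN at the start of the next
# downsampling layer are never adjacent (a stage of blocks sits between them)
```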
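To see how these layers shape the feature pyramid, the sketch below traces a 224×224 input through the stem and the three 2×2/2 downsampling layers, skipping the stages (their blocks keep the shape unchanged). `LayerNorm2d` is a hypothetical stand-in for the channels_first `LayerNorm` in the full code, implemented by permuting and calling `F.layer_norm`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LayerNorm2d(nn.LayerNorm):
    """Hypothetical helper: LayerNorm over the channel dim of (N, C, H, W) inputs."""

    def forward(self, x):
        x = x.permute(0, 2, 3, 1)
        x = F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)
        return x.permute(0, 3, 1, 2)


dims = [96, 192, 384, 768]
downsample_layers = nn.ModuleList([
    nn.Sequential(nn.Conv2d(3, dims[0], kernel_size=4, stride=4), LayerNorm2d(dims[0], eps=1e-6))
])
for i in range(3):
    downsample_layers.append(nn.Sequential(
        LayerNorm2d(dims[i], eps=1e-6),  # LN before each 2x2/2 conv stabilizes training
        nn.Conv2d(dims[i], dims[i + 1], kernel_size=2, stride=2),
    ))

x = torch.randn(1, 3, 224, 224)
shapes = []
for layer in downsample_layers:  # stages omitted: they do not change the shape
    x = layer(x)
    shapes.append(tuple(x.shape))
print(shapes)
```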

PyTorch code:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def drop_path(x, drop_prob: float = 0., training: bool = False, scale_by_keep: bool = True):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = x.new_empty(shape).bernoulli_(keep_prob)
    if keep_prob > 0.0 and scale_by_keep:
        random_tensor.div_(keep_prob)
    return x * random_tensor


class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """

    def __init__(self, drop_prob=0., scale_by_keep=True):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob
        self.scale_by_keep = scale_by_keep

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training, self.scale_by_keep)


class Block(nn.Module):
    r""" ConvNeXt Block. There are two equivalent implementations:
    (1) DwConv -> LayerNorm (channels_first) -> 1x1 Conv -> GELU -> 1x1 Conv; all in (N, C, H, W)
    (2) DwConv -> Permute to (N, H, W, C); LayerNorm (channels_last) -> Linear -> GELU -> Linear; Permute back
    We use (2) as we find it slightly faster in PyTorch

    Args:
        dim (int): Number of input channels.
        drop_path (float): Stochastic depth rate. Default: 0.0
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
    """

    def __init__(self, dim, drop_path=0., layer_scale_init_value=1e-6):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)  # depthwise conv
        self.norm = LayerNorm(dim, eps=1e-6)
        self.pwconv1 = nn.Linear(dim, 4 * dim)  # pointwise/1x1 convs, implemented with linear layers
        self.act = nn.GELU()
        self.pwconv2 = nn.Linear(4 * dim, dim)
        self.gamma = nn.Parameter(layer_scale_init_value * torch.ones((dim)),
                                  requires_grad=True) if layer_scale_init_value > 0 else None
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

    def forward(self, x):
        input = x
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)  # (N, C, H, W) -> (N, H, W, C)
        x = self.norm(x)
        x = self.pwconv1(x)
        x = self.act(x)
        x = self.pwconv2(x)
        if self.gamma is not None:
            x = self.gamma * x
        x = x.permute(0, 3, 1, 2)  # (N, H, W, C) -> (N, C, H, W)
        x = input + self.drop_path(x)
        return x


class LayerNorm(nn.Module):
    r""" LayerNorm that supports two data formats: channels_last (default) or channels_first.
    The ordering of the dimensions in the inputs. channels_last corresponds to inputs with
    shape (batch_size, height, width, channels) while channels_first corresponds to inputs
    with shape (batch_size, channels, height, width).
    """

    def __init__(self, normalized_shape, eps=1e-6, data_format="channels_last"):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(normalized_shape))
        self.bias = nn.Parameter(torch.zeros(normalized_shape))
        self.eps = eps
        self.data_format = data_format
        if self.data_format not in ["channels_last", "channels_first"]:
            raise NotImplementedError
        self.normalized_shape = (normalized_shape,)

    def forward(self, x):
        if self.data_format == "channels_last":
            return F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)
        elif self.data_format == "channels_first":
            u = x.mean(1, keepdim=True)
            s = (x - u).pow(2).mean(1, keepdim=True)
            x = (x - u) / torch.sqrt(s + self.eps)
            x = self.weight[:, None, None] * x + self.bias[:, None, None]
            return x


class ConvNeXt(nn.Module):
    r""" ConvNeXt
        A PyTorch impl of : `A ConvNet for the 2020s` -
          https://arxiv.org/pdf/2201.03545.pdf

    Args:
        in_chans (int): Number of input image channels. Default: 3
        num_classes (int): Number of classes for classification head. Default: 1000
        depths (tuple(int)): Number of blocks at each stage. Default: [3, 3, 9, 3]
        dims (list(int)): Feature dimension at each stage. Default: [96, 192, 384, 768]
        drop_path_rate (float): Stochastic depth rate. Default: 0.
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
        head_init_scale (float): Init scaling value for classifier weights and biases. Default: 1.
    """

    def __init__(self, in_chans=3, num_classes=1000,
                 depths=[3, 3, 9, 3], dims=[96, 192, 384, 768], drop_path_rate=0.,
                 layer_scale_init_value=1e-6, head_init_scale=1.,
                 ):
        super().__init__()

        self.downsample_layers = nn.ModuleList()  # stem and 3 intermediate downsampling conv layers
        stem = nn.Sequential(
            nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),
            LayerNorm(dims[0], eps=1e-6, data_format="channels_first")
        )
        self.downsample_layers.append(stem)
        for i in range(3):
            downsample_layer = nn.Sequential(
                LayerNorm(dims[i], eps=1e-6, data_format="channels_first"),
                nn.Conv2d(dims[i], dims[i + 1], kernel_size=2, stride=2),
            )
            self.downsample_layers.append(downsample_layer)

        self.stages = nn.ModuleList()  # 4 feature resolution stages, each consisting of multiple residual blocks
        dp_rates = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]
        cur = 0
        for i in range(4):
            stage = nn.Sequential(
                *[Block(dim=dims[i], drop_path=dp_rates[cur + j],
                        layer_scale_init_value=layer_scale_init_value) for j in range(depths[i])]
            )
            self.stages.append(stage)
            cur += depths[i]

        self.norm = nn.LayerNorm(dims[-1], eps=1e-6)  # final norm layer
        self.head = nn.Linear(dims[-1], num_classes)

        self.apply(self._init_weights)
        self.head.weight.data.mul_(head_init_scale)
        self.head.bias.data.mul_(head_init_scale)

    def _init_weights(self, m):
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.trunc_normal_(m.weight, std=.02)
            nn.init.constant_(m.bias, 0)

    def forward_features(self, x):
        for i in range(4):
            x = self.downsample_layers[i](x)
            x = self.stages[i](x)
        return self.norm(x.mean([-2, -1]))  # global average pooling, (N, C, H, W) -> (N, C)

    def forward(self, x):
        x = self.forward_features(x)
        x = self.head(x)
        return x
```

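Copyright notice: this is an original article by the blogger mingo_敏, and copyright belongs to the original author. In case of infringement, please contact us for removal.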

Original article: https://blog.csdn.net/shanglianlm/article/details/122559793