前言

这个是继续上一个猜想进行的编码研究,有没有类似的论文这个我不太清楚,在知网我是找到了一篇有使用GAN+KMeans的图片分类算法的。不过这个还是有不少差别的。

原理

所有我这样设计。

我同样保留一开始的欧氏距离Kmeans,再学习的过程当中去训练我的GAN网络。当发现我们的距离无法工作的时候,我们使用GAN。换一句话说,俺们这个其实是个复合算法。

那么问题来了,为什么用神经网络?其实分类问题,你发现其实它背后是有个”隐藏“逻辑的。我们一开始使用的是距离公式,也就是说我们期望找到一种距离的关系,然后去分类。但是有些点,可能受到距离和一种非距离的约束,也就是说和距离相关但是又有点其他特殊的东西约束,那么使用距离公式可能无法感知到,也就无法分类。但是使用神经网络,它最擅长的就是这个特殊的,隐含的,非线性的关系。所以,我们就可以进行一定的分类。

在举一个例子。老师教学生,这个学生可能天赋异禀,虽然老师教的是按照规定的规则的,但是学生学的时候可能出来老师教的规则,还很有可能有自己的思考,然后去”创新“。所以这也是我为什么认为可以这样干的原因。

只不过性能开销是可能有的,而且适合关系复杂的分类,因为越复杂,它可能捕捉到的东西就越多,而且复杂,数据量大可以学得更久一点儿。

编码

这里我做了简单的优化。

从上到下

import numpy as np

import torch

import random

import matplotlib.pyplot as plt

class ENV(object):

K = 4

Classfiy = ['red', 'blue', 'green', 'black']

NUMBERS = 100

DIM = 2

MinRange = 1

MaxRange = 10

def __init__(self):

self.DataSet = torch.tensor([random.sample(range(self.MinRange, self.MaxRange), 2) for _ in range(self.NUMBERS)],dtype=torch.float)

self.Clusters = np.random.choice(np.arange(len(self.DataSet)),self.K,replace=False)

self.Clusters = self.DataSet[self.Clusters]

self.plt = plt

self.plt.ion()

def Show(self,ClassPoints:dict,Clusters:np.array)->None:

# The show must be the numpy data running

for index in range(len(Clusters)):

# Draw center point

self.plt.scatter(Clusters[index][0], Clusters[index][1], marker='o', color=self.Classfiy[index], s=100)

# Draws the points of the category

Points = ClassPoints.get(self.Classfiy[index])

Points = np.array(Points)

sca = self.plt.plot(Points[:, 0], Points[:, 1], '--', color=self.Classfiy[index])

self.plt.show()

import torch

from torch import nn

import numpy as np

import torch.nn.functional as F

from KMeansNN.GANKMeans.ENV import ENV

from KMeansNN.GANKMeans.Professor import Professor

"""

Let's default BatchSize = 1

Now, what happens here is that we have a very bad classification network at the beginning,

so we can't just go there at first

We use neural networks, but we use experts first, and when the experts can't tell,

or we can start, we use GAN

In other words, GAN is a set of auxiliary systems

"""

DIM = 2

HIDDEN = 32

K = 4

EPOCH = 100

FRESHTIME = 10

ROUNDNUMBER = 50

FRESH = 1 / FRESHTIME

class Generate(nn.Module):

def __init__(self):

super(Generate,self).__init__()

self.fc1 = nn.Linear(DIM, HIDDEN)

self.fc1.weight.data.normal_(0, 0.1) # initialization

self.fc2 = nn.Linear(HIDDEN,int(HIDDEN/2))

self.fc2.weight.data.normal_(0, 0.1) # initialization

self.out = nn.Linear(int(HIDDEN/2),K)

self.out.weight.data.normal_(0, 0.1) # initialization

def forward(self,point):

out = self.fc1(point)

out = F.relu(out)

out = self.fc2(out)

out = F.relu(out)

classfiy = self.out(out)

return classfiy

class Discriminator(nn.Module):

def __init__(self):

super(Discriminator,self).__init__()

self.fc1 = nn.Linear(1,HIDDEN*2)

self.out = nn.Linear(HIDDEN*2,1)

def forward(self,classfiy):

score = self.fc1(classfiy)

score = F.relu(score)

score = self.out(score)

score = torch.sigmoid(score)

return score

class GANKMeans(object):

def __init__(self):

self.Env = ENV()

self.Professor = Professor()

self.D = Discriminator()

self.G = Generate()

self.LR_G = 0.0001

self.LR_D = 0.0001

#See if it doesn't work well with SGD

self.opt_D = torch.optim.Adam(self.D.parameters(), lr=self.LR_D)

self.opt_G = torch.optim.Adam(self.G.parameters(), lr=self.LR_G)

def Learn(self,pred_G,point:torch.tensor,Clusters:torch.tensor):

#EPOCH * NUMBERS

#Tips:there epoch is actually epoch because of we will stop running if have arrived

#changed limited

#Remember that you have a tensor input here

pred_P = self.Professor.CalculateDist(point.numpy(),Clusters.numpy())

pred_P = torch.tensor(pred_P,dtype=torch.float)

pred_P = pred_P.reshape((1,1))

pred_G = pred_G.float()

pred_G = pred_G.reshape((1,1))

if(pred_P==-1):

#is indicates that experts will not, so self-study is the only way out

# so we will classfiy by G

pred_P = pred_G.detach()

prob_G = self.D(pred_G)

G_loss = torch.mean(torch.log(1. - prob_G))

self.opt_G.zero_grad()

G_loss.backward()

self.opt_G.step()

prob_0 = self.D(pred_P)

prob_1 = self.D(pred_G.detach())

D_loss = - torch.mean(torch.log(prob_0) + torch.log(1. - prob_1))

self.opt_D.zero_grad()

D_loss.backward(retain_graph=True)

self.opt_D.step()

return pred_P

def Divide(self,DataSet:torch.tensor,Clusters:torch.tensor):

#The divided Clusters are fed back to the current center and

# return the change from the previous comparison

ClassPoints = {}

for key in self.Env.Classfiy:

ClassPoints[key]=[]

for point in DataSet:

pred_G = self.G(point)

pred_G = torch.argmax(pred_G)

pred_P = self.Learn(pred_G,point,Clusters)

ClassPoints[self.Env.Classfiy[int(pred_P.item())]].append(point.numpy())

# Learning update network

#Computing the new ClusterCenter

newClusters = []

for key in ClassPoints.keys():

temp = np.array(ClassPoints.get(key))

newcluster = np.average(temp, axis=0).tolist()

newClusters.append(newcluster)

newClusters = torch.tensor(newClusters)

Changed = Clusters-newClusters

return ClassPoints,newClusters,Changed

def KMeans(self):

"""

we will stop run if epoch have arrived EPOCH limit or

we have got stable Clusters. we will plot Clusters points

after divided in every epoch

:return:

"""

dataSet,Clusters = self.Env.DataSet,self.Env.Clusters

ClassPoints,newClusters,Changed = self.Divide(dataSet,Clusters)

self.Env.Show(ClassPoints,Clusters)

Roundnumber = 0

for epoch in range(EPOCH):

Clusters = newClusters

ClassPoints,newClusters,Changed = self.Divide(dataSet,Clusters)

self.Env.Show(ClassPoints,Clusters)

self.Env.plt.pause(FRESH)

self.Env.plt.cla()

if(Changed.all()==0.):

Roundnumber+=1

if(Roundnumber>=ROUNDNUMBER):

break

self.Env.plt.ioff()

self.Env.Show(ClassPoints,Clusters)

if __name__ == '__main__':

ganKmeans = GANKMeans()

ganKmeans.KMeans()

"""

Experts do one simple thing: provide data,RunTimeDataProcess

"""

import math

import numpy as np

class Professor(object):

def CalculateDist(self,point:np.array,Clusters:np.array):

x,y = point

dists = []

for Cluster in Clusters:

x1,y1 = Cluster

dist = math.sqrt(math.pow((x-x1),2)+math.pow((y-y1),2))

dists.append(dist)

if(dists.count(min(dists))>1):

return -1

else:

return dists.index(min(dists))

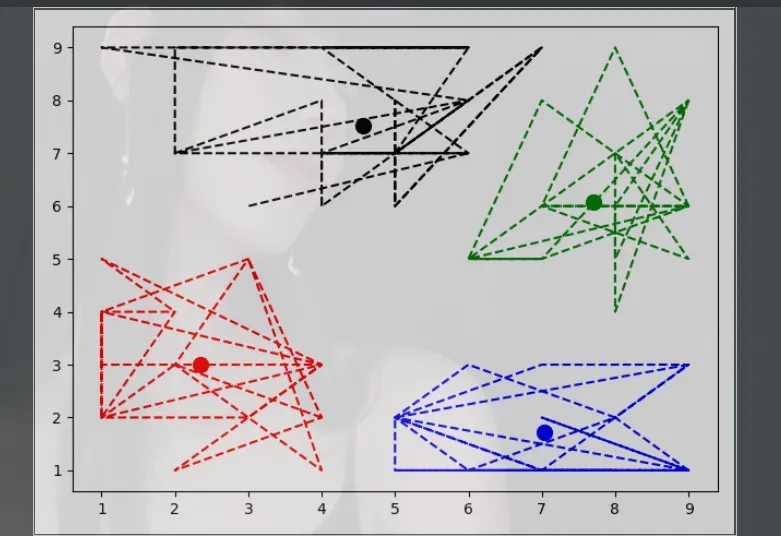

效果

这个肯定是不用说的。

不过其实后面我还没有测试,例如,当训练到足够的轮数的时候,我是否可以拜托老师,独立分类?等等,这些都要去实验才知道,后面如果有时间我可以看看,把这玩意改一下用在图片分类上面怎么样?

文章出处登录后可见!

已经登录?立即刷新