I. 前言

系列文章:

- 深入理解PyTorch中LSTM的输入和输出(从input输入到Linear输出)

- PyTorch搭建LSTM实现时间序列预测(负荷预测)

- PyTorch搭建LSTM实现多变量时间序列预测(负荷预测)

- PyTorch搭建双向LSTM实现时间序列预测(负荷预测)

- PyTorch搭建LSTM实现多变量多步长时间序列预测(一):直接多输出

- PyTorch搭建LSTM实现多变量多步长时间序列预测(二):单步滚动预测

- PyTorch搭建LSTM实现多变量多步长时间序列预测(三):多模型单步预测

- PyTorch搭建LSTM实现多变量多步长时间序列预测(四):多模型滚动预测

- PyTorch中实现LSTM多步长时间序列预测的几种方法总结(负荷预测)

II. 多模型单步预测

所谓多模型单步预测:比如前10个预测后3个,那么我们可以训练三个模型分别根据[1…10]预测[11]、[12]以及[13]。也就是说如果需要进行n步预测,那么我们一共需要训练n个LSTM模型,缺点很突出。

III. 代码实现

3.1 数据处理

我们根据前24个时刻的负荷以及该时刻的环境变量来预测接下来12个时刻的负荷(步长pred_step_size可调)。

数据处理代码:

# multiple model single step.

def nn_seq_mmss(B, pred_step_size):

data = load_data()

# 划分为训练集和测试

train = data[:int(len(data) * 0.7)]

test = data[int(len(data) * 0.7):len(data)]

def process(dataset, batch_size):

load = dataset[dataset.columns[1]]

load = load.tolist()

m, n = np.max(load), np.min(load)

load = (load - n) / (m - n)

dataset = dataset.values.tolist()

# 需要针对每一个步骤返回多个seq

seqs = [[] for i in range(pred_step_size)]

for i in range(0, len(dataset) - 24 - pred_step_size, pred_step_size):

train_seq = []

for j in range(i, i + 24):

x = [load[j]]

for c in range(2, 8):

x.append(dataset[j][c])

train_seq.append(x)

for j, ind in zip(range(i + 24, i + 24 + pred_step_size), range(pred_step_size)):

# 形成多个label和seq

train_label = [load[j]]

seq = torch.FloatTensor(train_seq)

train_label = torch.FloatTensor(train_label).view(-1)

seqs[ind].append((seq, train_label))

# 形成多个Dte

res = []

for seq in seqs:

seq = MyDataset(seq)

seq = DataLoader(dataset=seq, batch_size=batch_size, shuffle=False, num_workers=0, drop_last=True)

res.append(seq)

return res, [m, n]

Dtrs, lis1 = process(train, B)

Dtes, lis2 = process(test, B)

return Dtrs, Dtes, lis1, lis2

简单来说,如果需要利用前24个值预测接下来12个值,那么我们需要生成12个数据集。

3.2 模型搭建

模型和之前的文章一致:

class LSTM(nn.Module):

def __init__(self, input_size, hidden_size, num_layers, output_size, batch_size):

super().__init__()

self.input_size = input_size

self.hidden_size = hidden_size

self.num_layers = num_layers

self.output_size = output_size

self.num_directions = 1

self.batch_size = batch_size

self.lstm = nn.LSTM(self.input_size, self.hidden_size, self.num_layers, batch_first=True)

self.linear = nn.Linear(self.hidden_size, self.output_size)

def forward(self, input_seq):

batch_size, seq_len = input_seq[0], input_seq[1]

h_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size).to(device)

c_0 = torch.randn(self.num_directions * self.num_layers, self.batch_size, self.hidden_size).to(device)

# output(batch_size, seq_len, num_directions * hidden_size)

output, _ = self.lstm(input_seq, (h_0, c_0))

pred = self.linear(output)

pred = pred[:, -1, :]

return pred

3.3 模型训练/测试

与前文不同的是,这种方法需要训练多个模型:

Dtrs, Dtes, lis1, lis2 = load_data(args, flag, batch_size=args.batch_size)

for Dtr, path in zip(Dtrs, LSTM_PATHS):

train(args, Dtr, path)

模型测试:

def m_test(args, Dtes, lis, PATHS):

# Dtr, Dte, lis1, lis2 = load_data(args, flag, args.batch_size)

pred = []

y = []

print('loading model...')

input_size, hidden_size, num_layers = args.input_size, args.hidden_size, args.num_layers

output_size = args.output_size

# model = LSTM(input_size, hidden_size, num_layers, output_size, batch_size=args.batch_size).to(device)

Dtes = [[x for x in iter(Dte)] for Dte in Dtes]

models = []

for path in PATHS:

if args.bidirectional:

model = BiLSTM(input_size, hidden_size, num_layers, output_size, batch_size=args.batch_size).to(device)

else:

model = LSTM(input_size, hidden_size, num_layers, output_size, batch_size=args.batch_size).to(device)

model.load_state_dict(torch.load(path)['model'])

model.eval()

models.append(model)

print('predicting...')

for i in range(len(Dtes[0])):

for j in range(len(Dtes)):

model = models[j]

seq, label = Dtes[j][i][0], Dtes[j][i][1]

label = list(chain.from_iterable(label.data.tolist()))

y.extend(label)

seq = seq.to(device)

with torch.no_grad():

y_pred = model(seq)

y_pred = list(chain.from_iterable(y_pred.data.tolist()))

pred.extend(y_pred)

y, pred = np.array(y), np.array(pred)

m, n = lis[0], lis[1]

y = (m - n) * y + n

pred = (m - n) * pred + n

print('mape:', get_mape(y, pred))

# plot

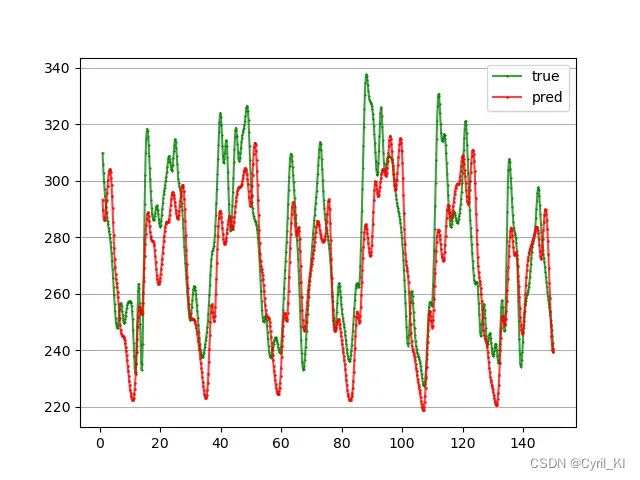

x = [i for i in range(1, 151)]

x_smooth = np.linspace(np.min(x), np.max(x), 900)

y_smooth = make_interp_spline(x, y[150:300])(x_smooth)

plt.plot(x_smooth, y_smooth, c='green', marker='*', ms=1, alpha=0.75, label='true')

y_smooth = make_interp_spline(x, pred[150:300])(x_smooth)

plt.plot(x_smooth, y_smooth, c='red', marker='o', ms=1, alpha=0.75, label='pred')

plt.grid(axis='y')

plt.legend()

plt.show()

多步预测的每一步,都需要用不同的模型来进行预测。值得注意的是,在正式预测时,数据的batch_size需要设置为1。

3.4 实验结果

前24个预测未来12个,每个模型训练50轮,MAPE为9.94%,还需要进一步完善。

IV. 源码及数据

源码及数据我放在了GitHub上,下载时请随手给个follow和star,感谢!

LSTM-MultiStep-Forecasting

文章出处登录后可见!

已经登录?立即刷新