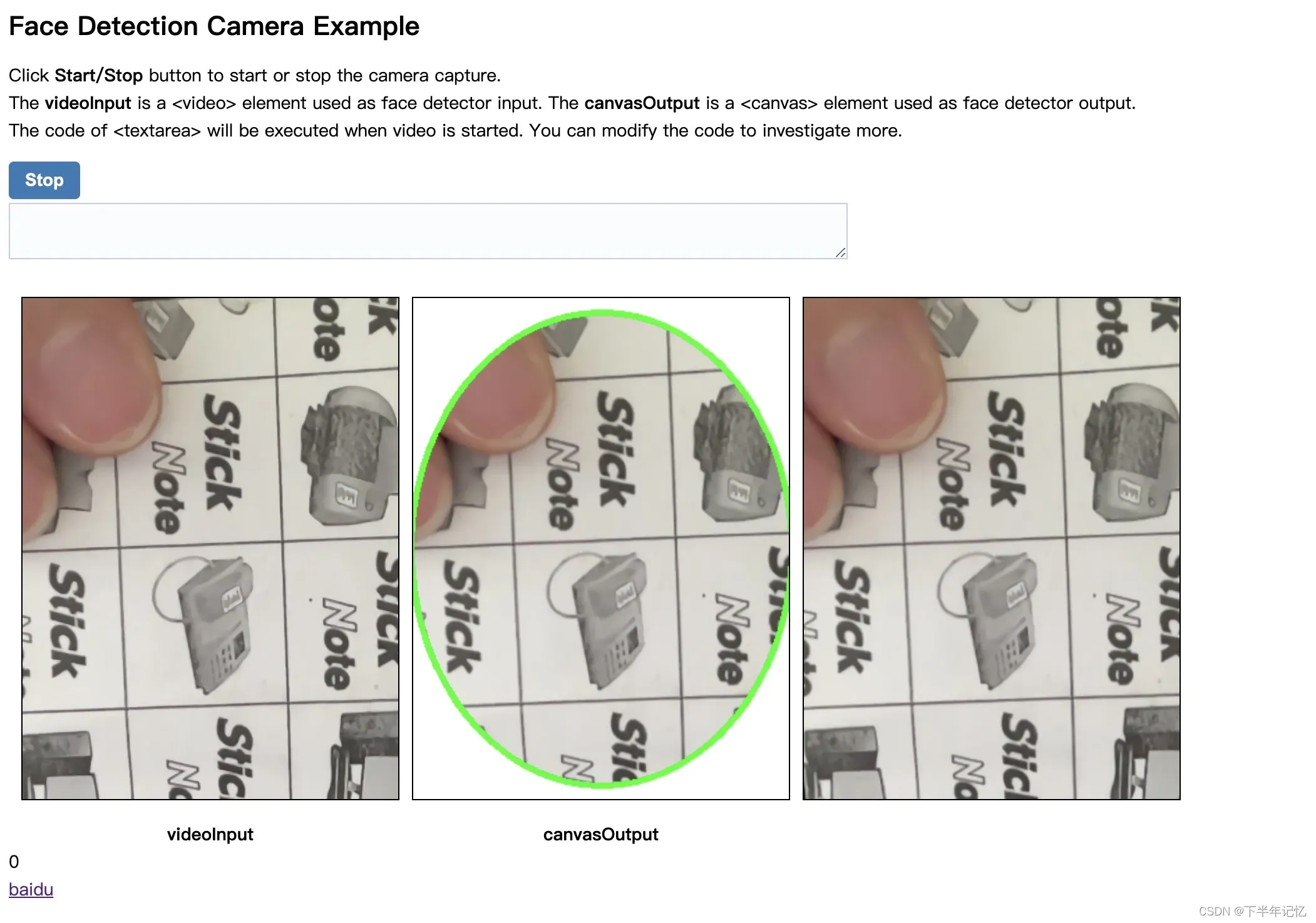

Result screenshot

JS part

function Utils(errorOutputId) { // eslint-disable-line no-unused-vars

let self = this;

this.errorOutput = document.getElementById(errorOutputId);

console.log("===init utils====");

const OPENCV_URL = 'opencv.js';

this.loadOpenCv = function(onloadCallback) {

let script = document.createElement('script');

script.setAttribute('async', '');

script.setAttribute('type', 'text/javascript');

script.addEventListener('load', async () => {

if (cv.getBuildInformation)

{

console.log(cv.getBuildInformation());

onloadCallback();

}

else

{

// WASM

if (cv instanceof Promise) {

cv = await cv;

console.log(cv.getBuildInformation());

onloadCallback();

} else {

cv['onRuntimeInitialized']=()=>{

console.log(cv.getBuildInformation());

onloadCallback();

}

}

}

});

script.addEventListener('error', () => {

self.printError('Failed to load ' + OPENCV_URL);

});

script.src = OPENCV_URL;

let node = document.getElementsByTagName('script')[0];

node.parentNode.insertBefore(script, node);

};

this.createFileFromUrl = function(path, url, callback) {

let request = new XMLHttpRequest();

request.open('GET', url, true);

request.responseType = 'arraybuffer';

request.onload = function(ev) {

if (request.readyState === 4) {

if (request.status === 200) {

let data = new Uint8Array(request.response);

cv.FS_createDataFile('/', path, data, true, false, false);

callback();

} else {

self.printError('Failed to load ' + url + ' status: ' + request.status);

}

}

};

request.send();

};

this.loadImageToCanvas = function(url, canvasId) {

let canvas = document.getElementById(canvasId);

let ctx = canvas.getContext('2d');

let img = new Image();

img.crossOrigin = 'anonymous';

img.onload = function() {

canvas.width = img.width;

canvas.height = img.height;

ctx.fillStyle = 'red';

ctx.drawImage(img, 0, 0, img.width, img.height);

};

img.src = url;

};

this.executeCode = function(textAreaId) {

try {

this.clearError();

let code = document.getElementById(textAreaId).value;

eval(code);

} catch (err) {

this.printError(err);

}

};

this.clearError = function() {

this.errorOutput.innerHTML = '';

};

this.printError = function(err) {

if (typeof err === 'undefined') {

err = '';

} else if (typeof err === 'number') {

if (!isNaN(err)) {

if (typeof cv !== 'undefined') {

err = 'Exception: ' + cv.exceptionFromPtr(err).msg;

}

}

} else if (typeof err === 'string') {

let ptr = Number(err.split(' ')[0]);

if (!isNaN(ptr)) {

if (typeof cv !== 'undefined') {

err = 'Exception: ' + cv.exceptionFromPtr(ptr).msg;

}

}

} else if (err instanceof Error) {

err = err.stack.replace(/\n/g, '<br>');

}

this.errorOutput.innerHTML = err;

};

this.loadCode = function(scriptId, textAreaId) {

let scriptNode = document.getElementById(scriptId);

let textArea = document.getElementById(textAreaId);

if (scriptNode.type !== 'text/code-snippet') {

throw Error('Unknown code snippet type');

}

textArea.value = scriptNode.text.replace(/^\n/, '');

};

this.loadOpenCVprocess = function() {

// Main OpenCV processing logic

let video = document.getElementById('videoInput');

let canvasOutput2 = document.getElementById('canvasOutput2');

let src = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let dst = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let maskBg = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let addedPic = cv.Mat.zeros(video.height, video.width, cv.CV_8UC4); // zero-filled, so cv.add leaves the pixels inside the mask unchanged

let resPic = new cv.Mat(video.height, video.width, cv.CV_8UC4);

let mask = cv.Mat.zeros(video.height, video.width, cv.CV_8UC1);

let gray = new cv.Mat();

let cap = new cv.VideoCapture(video);

let faces = new cv.RectVector();

let classifier = new cv.CascadeClassifier();

console.log("===============")

console.log(video.width, video.height)

console.log("===============")

// Maximum number of snapshots to take

let picture_count = 3;

let count_sec = 3; // interval between snapshots

// load pre-trained classifiers

classifier.load('haarcascade_frontalface_default.xml');

const FPS = 30;

function processVideo() {

try {

if (!streaming) {

// clean and stop.

src.delete();

dst.delete();

mask.delete();

maskBg.delete();

resPic.delete();

addedPic.delete();

gray.delete();

faces.delete();

classifier.delete();

return;

}

let begin = Date.now();

// start processing.

cap.read(src);

// Convert the captured frame (not the previous output) to grayscale for the detector.

cv.cvtColor(src, gray, cv.COLOR_RGBA2GRAY, 0);

// detect faces.

classifier.detectMultiScale(gray, faces, 1.3, 3, 0);

src.copyTo(maskBg);

let center = new cv.Point(video.width * (1/2), video.height * (1/2));

// cv.ellipse(dst, center, new cv.Size(video.height * (4/16), video.height * (5/16)), 0,0,360,[0, 255, 0, 255], 6);

cv.ellipse(mask, center, new cv.Size(video.height * (12/32), video.height * (15/32)),0,0,360, [255, 255, 255, 255], -1);

cv.add(maskBg, addedPic, dst, mask, cv.CV_8UC4); // apply the elliptical mask to the frame

// let rect = new cv.Rect((video.width - video.height)/2,0,video.height,video.height);

// dst.roi(rect);

// // Reference frame border

// let regularPoint1 = new cv.Point(video.width * (1/8), video.height * (1/8));

// let regularPoint2 = new cv.Point(video.width * (7/8), video.height * (7/8));

// cv.rectangle(dst, regularPoint1, regularPoint2, [0, 255, 0, 255]);

// // Caption text: not recommended, it renders poorly

// let font = cv.FONT_HERSHEY_SIMPLEX

// let textPosition = new cv.Point(video.width * (1/8), video.height * (1/8));

// cv.putText(dst,'this is title!', textPosition, font, 1,[0, 255, 0, 255],2,cv.LINE_4)

// draw faces.

for (let i = 0; i < faces.size(); ++i) {

let face = faces.get(i);

// // For debugging

// let point1 = new cv.Point(face.x, face.y);

// let point2 = new cv.Point(face.x + face.width, face.y + face.height);

// cv.rectangle(dst, point1, point2, [255, 0, 0, 255]);

// Check that the face size and position meet the requirements

if(face.width <= video.width * (3/4) && face.width >= video.width * (1/4) && face.height <= video.height * (3/4) && face.height >= video.height * (1/4)) {

if(face.x <= video.width * (3/8) && face.x >= video.width * (1/8) && face.y <= video.height * (3/8) && face.y >= video.height * (1/8)) {

if(picture_count > 0) {

picture_count--;

setTimeout(function(){

let fullQuality = canvasOutput2.toDataURL('image/png', 1)

console.log(fullQuality);

}, 100);

console.log('==========Detection succeeded, snapshots left: ' + picture_count + '=============');

}

}

}

}

if(picture_count == 0) {

cv.ellipse(dst, center, new cv.Size(video.height * (12/32)+2, video.height * (15/32)+2),0,0,360, [0, 255, 0, 255], 4);

}else{

cv.ellipse(dst, center, new cv.Size(video.height * (12/32)+2, video.height * (15/32)+2),0,0,360, [255, 0, 0, 255], 4);

}

cv.imshow('canvasOutput', dst);

cv.imshow('canvasOutput2', src);

// schedule the next one.

let delay = 1000/FPS - (Date.now() - begin);

setTimeout(processVideo, delay);

} catch (err) {

self.printError(err);

}

};

// schedule the first one.

setTimeout(processVideo, 0);

};

this.addFileInputHandler = function(fileInputId, canvasId) {

let inputElement = document.getElementById(fileInputId);

inputElement.addEventListener('change', (e) => {

let files = e.target.files;

if (files.length > 0) {

let imgUrl = URL.createObjectURL(files[0]);

self.loadImageToCanvas(imgUrl, canvasId);

}

}, false);

};

function onVideoCanPlay() {

if (self.onCameraStartedCallback) {

self.onCameraStartedCallback(self.stream, self.video);

}

};

this.startCamera = function(resolution, callback, videoId) {

const constraints = {

// 'qvga': {width: {exact: 320}, height: {exact: 240}},

'qvga': {width: {exact: 480}, height: {exact: 640}},

'vga': {width: {exact: 480}, height: {exact: 480}}};

let video = document.getElementById(videoId);

if (!video) {

video = document.createElement('video');

}

let videoConstraint = constraints[resolution];

if (!videoConstraint) {

videoConstraint = true;

}

navigator.mediaDevices.getUserMedia({video: videoConstraint, audio: false})

.then(function(stream) {

video.srcObject = stream;

video.play();

self.video = video;

self.stream = stream;

self.onCameraStartedCallback = callback;

video.addEventListener('canplay', onVideoCanPlay, false);

})

.catch(function(err) {

self.printError('Camera Error: ' + err.name + ' ' + err.message);

});

};

this.stopCamera = function() {

if (this.video) {

this.video.pause();

this.video.srcObject = null;

this.video.removeEventListener('canplay', onVideoCanPlay);

}

if (this.stream) {

this.stream.getVideoTracks()[0].stop();

}

};

};
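The size and position check inside `processVideo` can be pulled out into a pure function, which makes the thresholds easier to read and to test without a camera. The following is only a minimal sketch: `isFaceInFrame` is a hypothetical helper name that does not appear in the code above, and the thresholds are copied from the nested `if` conditions (width and height between 1/4 and 3/4 of the frame, top-left corner between 1/8 and 3/8).

```javascript
// Hypothetical helper (not part of the original utils.js).
// Returns true when a detected face rectangle is large enough
// and its top-left corner lies in the expected region of the frame.
function isFaceInFrame(face, frameWidth, frameHeight) {
  const sizeOk =
    face.width >= frameWidth / 4 && face.width <= frameWidth * 3 / 4 &&
    face.height >= frameHeight / 4 && face.height <= frameHeight * 3 / 4;
  const positionOk =
    face.x >= frameWidth / 8 && face.x <= frameWidth * 3 / 8 &&
    face.y >= frameHeight / 8 && face.y <= frameHeight * 3 / 8;
  return sizeOk && positionOk;
}

// Example with the 300x400 video element used on the page.
console.log(isFaceInFrame({x: 75, y: 100, width: 150, height: 200}, 300, 400)); // true
console.log(isFaceInFrame({x: 0, y: 0, width: 150, height: 200}, 300, 400)); // false
```

Inside the loop over `faces`, the two nested conditions could then be replaced by a single call such as `isFaceInFrame(face, video.width, video.height)`.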

HTML part

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Face Detection Camera Example</title>

<link href="js_example_style.css" rel="stylesheet" type="text/css" />

</head>

<body>

<h2>Face Detection Camera Example</h2>

<p>

Click <b>Start/Stop</b> button to start or stop the camera capture.<br>

The <b>videoInput</b> is a &lt;video&gt; element used as face detector input.

The <b>canvasOutput</b> is a &lt;canvas&gt; element used as face detector output.<br>

The code of &lt;textarea&gt; will be executed when video is started.

You can modify the code to investigate more.

</p>

<div>

<div class="control"><button id="startAndStop" disabled>Start</button></div>

<textarea class="code" rows="3" cols="80" id="codeEditor" spellcheck="false"></textarea>

</div>

<p class="err" id="errorMessage"></p>

<div>

<table cellpadding="0" cellspacing="0" width="0" border="0">

<tr>

<td>

<video id="videoInput" width=300 height="400"></video>

</td>

<td>

<canvas id="canvasOutput" width=300 height=400></canvas>

</td>

<td>

<canvas id="canvasOutput2" width=300 height=400></canvas>

</td>

<td></td>

<td></td>

</tr>

<tr>

<td>

<div class="caption">videoInput</div>

</td>

<td>

<div class="caption">canvasOutput</div>

</td>

<td></td>

<td></td>

</tr>

</table>

</div>

<div id="timerBox">5</div>

<a href="https://www.baidu.com">baidu</a>

<!--<script type="text/javascript" src="opencv.js"></script>-->

<script src="adapter-5.0.4.js" type="text/javascript"></script>

<script src="utils.js" type="text/javascript"></script>

<script type="text/javascript">

let processIndex = 3

let sec = 5

let timerBox = document.getElementById('timerBox')

let timer = setInterval(()=>{

if(sec > 0) {

sec -= 1

timerBox.innerText = sec

} else {

clearInterval(timer)

}

},1000)

let utils = new Utils('errorMessage');

// utils.loadCode('codeSnippet', 'codeEditor');

let streaming = false;

let videoInput = document.getElementById('videoInput');

let startAndStop = document.getElementById('startAndStop');

let canvasOutput = document.getElementById('canvasOutput');

let canvasContext = canvasOutput.getContext('2d');

startAndStop.addEventListener('click', () => {

if (!streaming) {

utils.clearError();

utils.startCamera('qvga', onVideoStarted, 'videoInput');

} else {

utils.stopCamera();

onVideoStopped();

}

});

function onVideoStarted() {

streaming = true;

startAndStop.innerText = 'Stop';

// videoInput.width = 300;

// videoInput.width = videoInput.videoWidth;

// videoInput.height = videoInput.videoHeight;

// utils.executeCode('codeEditor');

utils.loadOpenCVprocess();

}

function onVideoStopped() {

streaming = false;

canvasContext.clearRect(0, 0, canvasOutput.width, canvasOutput.height);

startAndStop.innerText = 'Start';

}

utils.loadOpenCv(() => {

let faceCascadeFile = 'haarcascade_frontalface_default.xml';

utils.createFileFromUrl(faceCascadeFile, faceCascadeFile, () => {

startAndStop.removeAttribute('disabled');

});

});

</script>

</body>

</html>
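The page script above chains `utils.loadOpenCv` and `utils.createFileFromUrl` through nested callbacks before enabling the Start button. One possible way to flatten that chain is to wrap each callback-style method in a Promise. This is a sketch under assumptions: `loadOpenCvAsync`, `createFileFromUrlAsync` and `initFaceDetection` are hypothetical names introduced here, and because the original methods only invoke their callback on success, these Promises never reject (failures are still reported through `printError`).

```javascript
// Hypothetical wrappers around the callback-style methods of Utils.
function loadOpenCvAsync(utils) {
  return new Promise((resolve) => utils.loadOpenCv(resolve));
}

function createFileFromUrlAsync(utils, path, url) {
  return new Promise((resolve) => utils.createFileFromUrl(path, url, resolve));
}

// Loads OpenCV.js, then the cascade file, then enables the button.
async function initFaceDetection(utils, button, cascadeFile) {
  await loadOpenCvAsync(utils);
  await createFileFromUrlAsync(utils, cascadeFile, cascadeFile);
  button.removeAttribute('disabled');
}
```

On the page this could be called as `initFaceDetection(utils, startAndStop, 'haarcascade_frontalface_default.xml')` in place of the nested `utils.loadOpenCv(...)` call.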
