Federated Graph Neural Networks: Overview, Techniques and Challenges

Paper information

Chinese title: 联邦图神经网络:概述、技术和挑战

Abstract

With its powerful capability to deal with graph data widely found in practical applications, graph neural networks (GNNs) have received significant research attention. However, as societies become increasingly concerned with data privacy, GNNs face the need to adapt to this new normal. This has led to the rapid development of federated graph neural networks (FedGNNs) research in recent years. Although promising, this interdisciplinary field is highly challenging for interested researchers to enter into. The lack of an insightful survey on this topic only exacerbates this problem. In this paper, we bridge this gap by offering a comprehensive survey of this emerging field. We propose a unique 3-tiered taxonomy of the FedGNNs literature to provide a clear view into how GNNs work in the context of Federated Learning (FL). It puts existing works into perspective by analyzing how graph data manifest themselves in FL settings, how GNN training is performed under different FL system architectures and degrees of graph data overlap across data silos, and how GNN aggregation is performed under various FL settings. Through discussions of the advantages and limitations of existing works, we envision future research directions that can help build more robust, dynamic, efficient, and interpretable FedGNNs.

主要内容

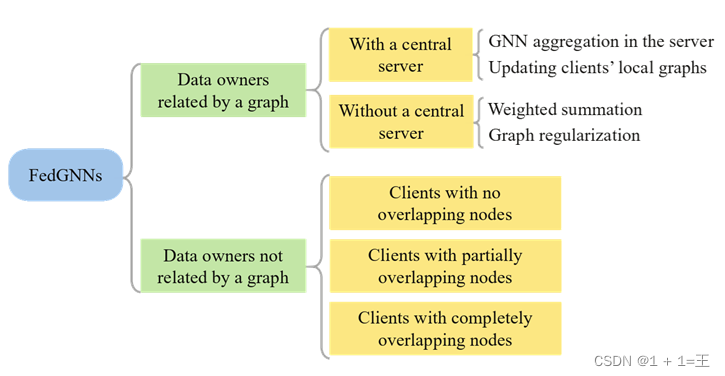

- 该文提出了一种多层分类法。

该分类法首先根据图数据与联邦学习数据拥有者之间的关系划分;然后基于不同联邦学习架构下如何执行图神经网络训练、图数据重叠程度划分,最后基于不同联邦学习设置下如何执行GNN聚合。 - 并分析了现有FedGNN方法的优势和局限性,并讨论了未来的研究方向。
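To make the third tier (GNN aggregation) concrete, here is a minimal sketch of one common aggregation rule, FedAvg, applied to GNN parameters. It is only an illustration: the survey covers many aggregation strategies, and the function name `fedavg`, the parameter keys `gcn.w` and `gcn.b`, and the flat-list weight format are assumptions made for this example.

```python
from typing import Dict, List

# Hypothetical weight format: parameter name -> flat list of floats.
Weights = Dict[str, List[float]]


def fedavg(client_weights: List[Weights], num_samples: List[int]) -> Weights:
    """Average client parameters, weighting each client by its sample count."""
    total = sum(num_samples)
    first = client_weights[0]
    return {
        name: [
            sum(w[name][i] * n for w, n in zip(client_weights, num_samples)) / total
            for i in range(len(first[name]))
        ]
        for name in first
    }


# Two clients holding locally trained GNN parameters (toy values).
clients = [
    {"gcn.w": [1.0, 2.0], "gcn.b": [0.0]},
    {"gcn.w": [3.0, 4.0], "gcn.b": [1.0]},
]
global_weights = fedavg(clients, num_samples=[1, 3])
print(global_weights)
```

The second client holds three times as much data, so the averaged parameters sit closer to its values.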

A federated graph neural network framework for privacy-preserving personalization

Paper information

Chinese title: 面向隐私保护个性化的联合图神经网络框架

Abstract

Graph neural network (GNN) is effective in modeling high-order interactions and has been widely used in various personalized applications such as recommendation. However, mainstream personalization methods rely on centralized GNN learning on global graphs, which have considerable privacy risks due to the privacy-sensitive nature of user data. Here, we present a federated GNN framework named FedPerGNN for both effective and privacy-preserving personalization. Through a privacy-preserving model update method, we can collaboratively train GNN models based on decentralized graphs inferred from local data. To further exploit graph information beyond local interactions, we introduce a privacy-preserving graph expansion protocol to incorporate high-order information under privacy protection. Experimental results on six datasets for personalization in different scenarios show that FedPerGNN achieves 4.0% ~ 9.6% lower errors than the state-of-the-art federated personalization methods under good privacy protection. FedPerGNN provides a promising direction to mining decentralized graph data in a privacy-preserving manner for responsible and intelligent personalization.

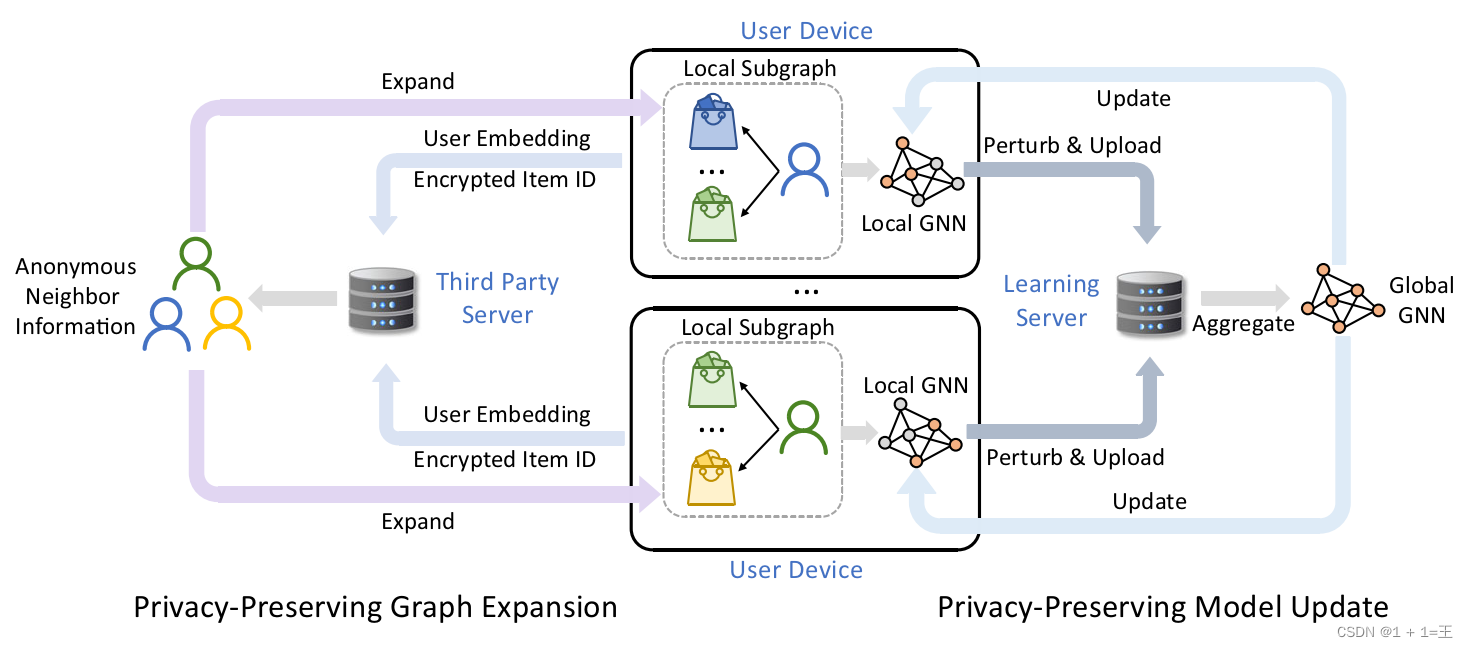

图神经网络( Graph Neural Network,GNN )在建模高阶交互方面十分有效,已被广泛应用于推荐等各种个性化应用中。然而,主流的个性化方法依赖于全局图上的集中式GNN学习,由于用户数据的隐私敏感特性,这些方法存在相当大的隐私风险。在这里,我们提出了一个名为FedPerGNN的联邦GNN框架,用于有效和隐私保护的个性化。通过一种保护隐私的模型更新方法,我们可以基于从本地数据推断的分散图协同训练GNN模型。为了进一步利用局部交互之外的图信息,引入了一个隐私保护的图扩展协议,以在隐私保护下纳入高阶信息。在6个不同场景下的个性化数据集上的实验结果表明,在良好的隐私保护下,FedPerGNN比现有的联合个性化方法降低了4.0 % ~ 9.6 %的误差。FedPerGNN以保护隐私的方式挖掘分散的图数据,为智能个性化提供了一个很有前途的方向。

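Key points

- Proposes FedPerGNN, a federated GNN framework that supports privacy-preserving personalization by mining high-order user-item interaction information in a privacy-preserving way.

- Develops a privacy-preserving model update method that protects user-item interaction information through local differential privacy (LDP) and pseudo interacted item sampling.

- Designs a privacy-preserving graph expansion protocol that exploits high-order graph information without leaking user privacy.

- Runs personalization experiments in different scenarios on six widely used datasets.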
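The model update step in the second point can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation. It assumes the usual LDP recipe of clipping each gradient coordinate to a threshold and adding zero-mean Laplace noise, and it assumes pseudo items are drawn uniformly from the items the user has not interacted with. The names `clip`, `ldp_perturb` and `sample_pseudo_items`, and the parameters `delta`, `scale` and `m`, are hypothetical.

```python
import random
from typing import List, Set


def clip(grad: List[float], delta: float) -> List[float]:
    """Clip every coordinate of the gradient to the range [-delta, delta]."""
    return [max(-delta, min(delta, g)) for g in grad]


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def ldp_perturb(grad: List[float], delta: float, scale: float,
                rng: random.Random) -> List[float]:
    """Clip the local gradient, then add Laplace noise before uploading it."""
    return [g + laplace_noise(scale, rng) for g in clip(grad, delta)]


def sample_pseudo_items(interacted: Set[int], num_items: int, m: int,
                        rng: random.Random) -> List[int]:
    """Pick m items the user has NOT interacted with (assumed uniform sampling).

    Uploading updates for these items alongside the real ones hides which
    items the user actually interacted with.
    """
    candidates = [i for i in range(num_items) if i not in interacted]
    return rng.sample(candidates, m)


rng = random.Random(0)  # fixed seed only to make the sketch reproducible
local_grad = [5.0, -5.0, 0.1]
noisy_grad = ldp_perturb(local_grad, delta=1.0, scale=0.01, rng=rng)
pseudo_items = sample_pseudo_items({1, 2}, num_items=10, m=3, rng=rng)
print(noisy_grad, pseudo_items)
```

A smaller `delta` or a larger `scale` gives stronger privacy at the cost of noisier updates, so both would need tuning against model accuracy.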

Decentralized Federated Graph Neural Networks

Paper information

Chinese title: 去中心化的联邦图神经网络

Original paper: https://federated-learning.org/fl-ijcai-2021/FTL-IJCAI21_paper_20.pdf

Abstract

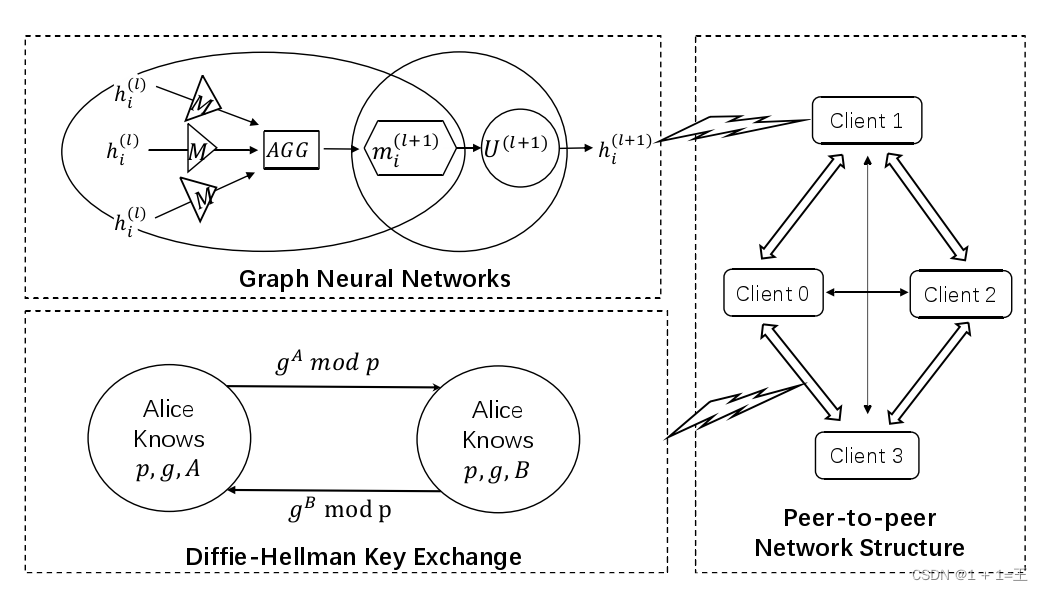

Due to the increasing importance of user-side privacy, federated graph neural networks are proposed recently as a reliable solution for privacy-preserving data analysis by enabling the joint training of a graph neural network from graph-structured data located at different clients. However, existing federated graph neural networks are based on a centralized server to orchestrate the training process, which is unacceptable in many real-world applications such as building financial risk control models across competitive banks. In this paper, we propose a new Decentralized Federated Graph Neural Network (D-FedGNN for short) which allows multiple participants to train a graph neural network model without a centralized server. Specifically, D-FedGNN uses a decentralized parallel stochastic gradient descent algorithm DP-SGD to train the graph neural network model in a peer-to-peer network structure. To protect privacy during model aggregation, D-FedGNN introduces the Diffie-Hellman key exchange method to achieve secure model aggregation between clients. Both theoretical and empirical studies show that the proposed D-FedGNN model is capable of achieving competitive results compared with traditional centralized federated graph neural networks in the tasks of classification and regression, as well as significantly reducing time cost of the communication of model parameters.
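The decentralized training idea in the abstract can be illustrated with a toy version of decentralized parallel SGD. This is a sketch under stated assumptions, not the paper's code: each peer holds a single scalar parameter, the peers form a ring, the mixing weights are uniform over a peer and its two neighbours, and exact gradients of a toy quadratic loss stand in for stochastic GNN gradients. The names `ring_mixing_matrix` and `dpsgd_step` are hypothetical.

```python
from typing import List


def ring_mixing_matrix(n: int) -> List[List[float]]:
    """Doubly stochastic weights: each peer averages itself and its two ring neighbours."""
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in (i - 1, i, i + 1):
            w[i][j % n] += 1.0 / 3.0
    return w


def dpsgd_step(params: List[float], grads: List[float],
               w: List[List[float]], lr: float) -> List[float]:
    """One round: average the neighbours' parameters, then apply the local gradient."""
    n = len(params)
    return [
        sum(w[i][j] * params[j] for j in range(n)) - lr * grads[i]
        for i in range(n)
    ]


# Toy local objectives f_i(x) = 0.5 * (x - c_i)^2, so grad_i(x) = x - c_i.
targets = [1.0, 2.0, 3.0, 4.0]
params = [0.0] * len(targets)
mixing = ring_mixing_matrix(len(targets))

for _ in range(200):
    grads = [x - c for x, c in zip(params, targets)]
    params = dpsgd_step(params, grads, mixing, lr=0.1)

average = sum(params) / len(params)
print(params, average)
```

No central server appears anywhere in the loop: every peer only reads its ring neighbours' parameters, and the network-wide average still moves to the minimizer of the summed objective (2.5 here). With a constant step size the individual peers stay slightly apart from each other.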

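Key points

- Studies decentralized federated learning on graph data, so that multiple participants can collaboratively train a graph neural network model without relying on a central server;

- Proposes D-FedGNN, a new model based on the decentralized parallel stochastic gradient descent algorithm (DP-SGD) and the Diffie-Hellman key exchange method, to achieve decentralized, privacy-preserving learning of graph neural networks;

- Studies the performance of D-FedGNN both theoretically and empirically.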
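One well-known way to build secure aggregation on top of Diffie-Hellman key exchange is pairwise masking, sketched below. Whether D-FedGNN uses exactly this construction is an assumption. The group parameters `P` and `G`, the use of Python's non-cryptographic `random` module as a mask generator, and the integer (fixed-point) updates are toy choices for illustration only and are not secure.

```python
import random
from typing import List, Tuple

P = 2**127 - 1    # toy prime modulus (assumption; real systems use vetted groups)
G = 3             # toy base (assumption)
MODULUS = 2**32   # masks and updates live in the integers modulo 2^32


def keypair(rng: random.Random) -> Tuple[int, int]:
    """Return (private, public) where public = G^private mod P."""
    private = rng.randrange(2, P - 1)
    return private, pow(G, private, P)


def shared_secret(my_private: int, their_public: int) -> int:
    """Both peers of a pair derive the same value G^(a*b) mod P."""
    return pow(their_public, my_private, P)


def mask_update(i: int, update: List[int], my_private: int,
                publics: List[int]) -> List[int]:
    """Add a mask for every peer j > i and subtract one for every peer j < i."""
    masked = list(update)
    for j, their_public in enumerate(publics):
        if j == i:
            continue
        # Seed a generator with the pairwise secret so both peers get the same mask.
        prg = random.Random(shared_secret(my_private, their_public))
        sign = 1 if i < j else -1
        masked = [(m + sign * prg.randrange(MODULUS)) % MODULUS for m in masked]
    return masked


rng = random.Random(42)  # fixed seed only to make the sketch reproducible
keys = [keypair(rng) for _ in range(3)]
privates = [k[0] for k in keys]
publics = [k[1] for k in keys]

updates = [[10, 20], [1, 2], [100, 200]]  # quantized model updates (toy values)
masked = [mask_update(i, updates[i], privates[i], publics) for i in range(3)]
aggregate = [sum(column) % MODULUS for column in zip(*masked)]
print(masked, aggregate)
```

Each masked vector looks random on its own, but the pairwise masks cancel in the sum, so the peers learn only the aggregate `[111, 222]`.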
