This article uses Python to analyze a dataset and applies several machine-learning algorithms to classification prediction.

The full paper and the dataset are available among my published resources.

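First part: Python | A Study of Classification Prediction Based on LendingClub Data, Part01: Problem Restatement + Feature Selection + Algorithm Comparison

Python | A Study of Classification Prediction Based on LendingClub Data, Part02: Further Classification Study + Conclusions + Complete Code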

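- III. Further Analysis of Classification Prediction on the Lending Club Dataset
  - 3.1 Feature Selection and Preprocessing
  - 3.2 Introduction to the Algorithms
    - 3.2.1 Random Forest
    - 3.2.2 Extremely Randomized Trees
  - 3.3 Modeling and Comparison of Results
    - 3.3.1 Decision Tree
    - 3.3.2 Random Forest
    - 3.3.3 Extremely Randomized Trees
    - 3.3.4 Comparative Analysis
- IV. Conclusions
- V. Complete Code
  - 5.1 Exploratory Analysis of the Lending Club Data
  - 5.2 Binary Classification of Lending Club with Logistic Regression
  - 5.3 Classification of the Multi-Source Dataset
  - 5.4 Three-Class Classification of Lending Club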

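III. Further Analysis of Classification Prediction on the Lending Club Dataset

In the previous part, we studied the Lending Club data with a Logistic Regression model, using four feature sets of three features each for classification. This part continues with the Lending Club dataset. Because the earlier analysis showed that both Fully Paid and Current customers are very numerous, we now separate these two groups and turn the task into a three-class classification problem.

3.1 Feature Selection and Preprocessing

Since the task is now a three-class problem, we recode the label 'Fully Paid' as 2 and keep the other codes unchanged: Current stays 0, and all delinquent or defaulted statuses stay 1.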

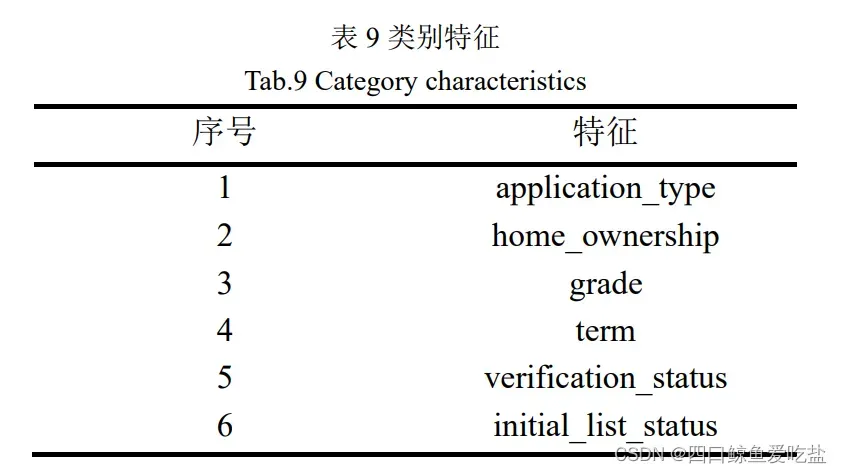

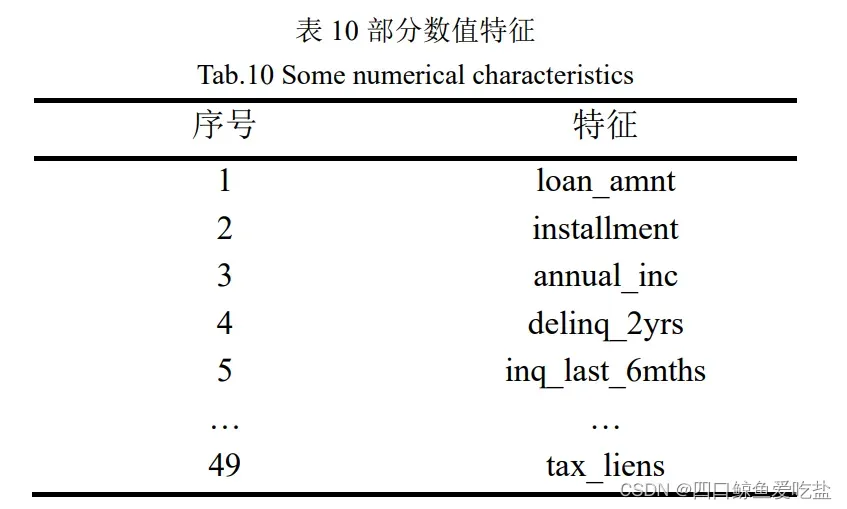

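To make the data easier for the models to handle, we drop every feature column that contains missing values and then screen the remaining indicators further. In the end 55 features are kept: 6 categorical and 49 numerical. Table 9 lists the categorical features and Table 10 lists some of the numerical features.

As before, the categorical features are integer-encoded so that the models can be built on them.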
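The preprocessing steps above can be sketched in pandas as follows. This is a minimal illustration on a hypothetical four-row frame whose column names are borrowed from the Lending Club data. It drops every column that has a missing value, which is equivalent to the `dropna(thresh=len(data), axis=1)` call in section 5.4, then applies the three-class label coding and integer-codes one categorical feature. Using `map` with a default of 1 for every status other than Current and Fully Paid is an assumption chosen to match the mapping listed in section 5.4.

```python
import pandas as pd

# Hypothetical toy frame; column names are borrowed from the Lending Club data
df = pd.DataFrame({
    'loan_status': ['Fully Paid', 'Current', 'Charged Off', 'Late (16-30 days)'],
    'term': ['36 months', '60 months', '36 months', '60 months'],
    'loan_amnt': [10000, 25000, 5000, 12000],
    'mths_since_last_delinq': [None, 12.0, None, 3.0],  # contains missing values
})

# 1) Drop every column that contains at least one missing value
df = df.dropna(axis=1, how='any')

# 2) Three-class label: Current -> 0, Fully Paid -> 2, every other status -> 1
status_map = {'Current': 0, 'Fully Paid': 2}
df['loan_status'] = df['loan_status'].map(lambda s: status_map.get(s, 1))

# 3) Integer-code a categorical feature
df['term'] = df['term'].map({'36 months': 0, '60 months': 1})
print(df)
```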

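3.2 Introduction to the Algorithms

The previous part introduced the decision tree, a basic tree-based algorithm. A single decision tree tends to fit poorly and to generalize narrowly, so we use it as the base learner of two tree ensembles. After splitting the data into a training set and a test set, we fit a random forest and an extremely randomized trees model on the training set, then compare their results with those of a single decision tree to select the best model.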

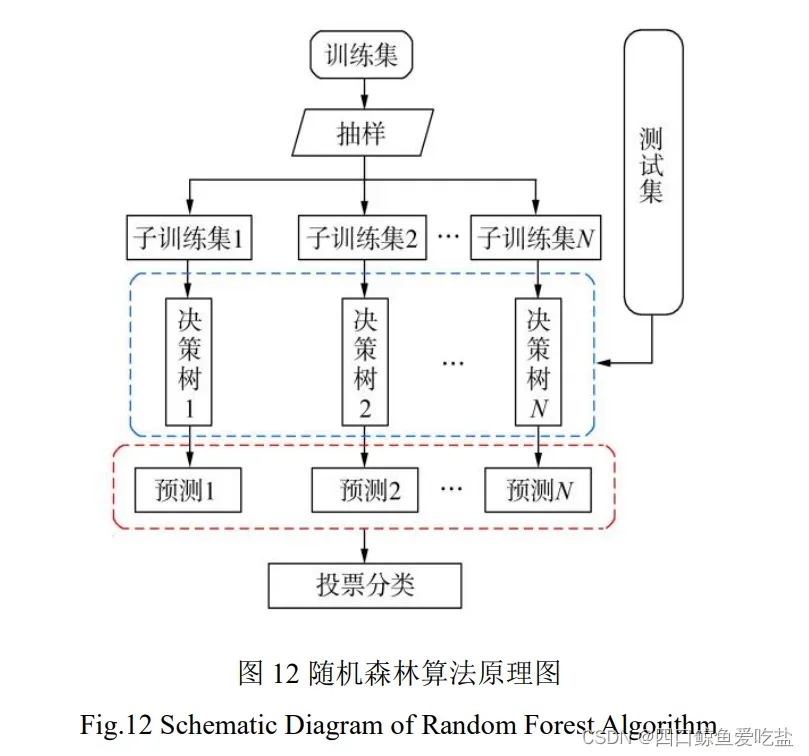

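3.2.1 Random Forest

Random Forest is an ensemble learning algorithm built on the Bagging framework. It first draws Bootstrap samples from the data, then builds a decision tree on each sample using a random subset of the features, which yields a collection of decision trees, and finally combines these trees with an aggregation strategy. For classification, the trees can be combined by majority voting or by a logistic regression over their outputs; for regression, the final prediction is the simple arithmetic mean. The workflow of the random forest is shown in the figure. Because random forest injects randomness into both the samples and the features, it is considerably more stable than a single tree and is effective against overfitting. Combining the base learners also reduces their variance, which greatly improves the model's generalization ability. Figure 12 illustrates how the random forest works.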
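To make the bagging-plus-random-features idea concrete, here is a minimal sketch assembled from scikit-learn's `DecisionTreeClassifier` on synthetic data from `make_classification`; it is an illustration, not how `RandomForestClassifier` is implemented internally. Each tree is fitted on a bootstrap sample, `max_features='sqrt'` restricts every split to a random subset of features, and the trees are combined by hard majority voting. The helper names `fit_bagged_trees` and `predict_majority` are hypothetical. Note that scikit-learn's own `RandomForestClassifier` averages the trees' predicted probabilities instead of counting hard votes.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_bagged_trees(X, y, n_trees=25, seed=0):
    """Bagging sketch: each tree sees a bootstrap sample and random feature subsets."""
    rng = np.random.default_rng(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)  # bootstrap: draw n rows with replacement
        # max_features='sqrt': each split only considers a random subset of features
        tree = DecisionTreeClassifier(max_features='sqrt',
                                      random_state=int(rng.integers(1_000_000)))
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def predict_majority(trees, X):
    """Combine the trees by hard majority voting over their predicted labels."""
    votes = np.stack([t.predict(X) for t in trees]).astype(int)  # (n_trees, n_samples)
    return np.array([np.bincount(col).argmax() for col in votes.T])


X, y = make_classification(n_samples=600, n_features=12, n_informative=6, random_state=0)
X_train, X_test, y_train, y_test = X[:450], X[450:], y[:450], y[450:]
trees = fit_bagged_trees(X_train, y_train)
y_pred = predict_majority(trees, X_test)
accuracy = (y_pred == y_test).mean()
print(f'test accuracy: {accuracy:.3f}')
```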

3.2.2 Extremely Randomized Trees

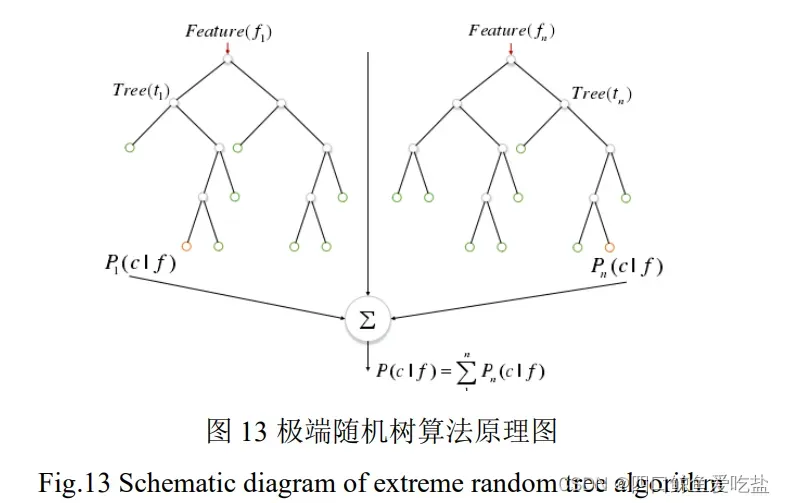

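Extremely randomized trees (Extra-Trees) is a classic ensemble learning algorithm that combines many decision tree models into a final result. For a new test sample, every decision tree produces its own output and these outputs are aggregated. In classification, each tree outputs a class and the ensemble returns the class with the most votes, just as the random forest does.

The two algorithms differ in two respects, however. First, each tree in extremely randomized trees is trained on the full original dataset, whereas the random forest trains each tree on a bootstrap sample. Second, when splitting a node, extremely randomized trees draw a random cut-point for each candidate feature and keep the best of these random splits, rather than searching for the optimal split threshold. Figure 13 illustrates how extremely randomized trees work.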
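The split rule that distinguishes extremely randomized trees can be sketched as below. This is a simplified single-node illustration on synthetic data, assuming Gini impurity as the split criterion; `extra_trees_split` and `gini` are hypothetical helper names. For each randomly chosen candidate feature it draws one cut-point uniformly between that feature's minimum and maximum, then keeps the candidate with the lowest weighted impurity. The last line checks the scikit-learn defaults behind the sampling difference: `ExtraTreesClassifier` trains on the full training set (`bootstrap=False`), while `RandomForestClassifier` bootstraps (`bootstrap=True`).

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier


def gini(labels):
    """Gini impurity of an integer label array."""
    if len(labels) == 0:
        return 0.0
    p = np.bincount(labels) / len(labels)
    return 1.0 - np.sum(p ** 2)


def extra_trees_split(X, y, n_candidate_features, rng):
    """Extra-Trees style split: one random cut-point per candidate feature,
    then keep the candidate with the lowest weighted Gini impurity."""
    n_samples, n_features = X.shape
    candidates = rng.choice(n_features, size=n_candidate_features, replace=False)
    best = None
    for f in candidates:
        lo, hi = X[:, f].min(), X[:, f].max()
        if lo == hi:
            continue  # constant feature: no valid cut-point
        threshold = rng.uniform(lo, hi)  # random threshold, no search over cut-points
        left = X[:, f] <= threshold
        score = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / n_samples
        if best is None or score < best[2]:
            best = (int(f), float(threshold), float(score))
    return best


rng = np.random.default_rng(42)
X = rng.normal(size=(200, 5))
y = (X[:, 0] > 0).astype(int)
feature, threshold, impurity = extra_trees_split(X, y, n_candidate_features=3, rng=rng)
print(feature, threshold, impurity)
print(ExtraTreesClassifier().bootstrap, RandomForestClassifier().bootstrap)  # False True
```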

3.3 Modeling and Comparison of Results

3.3.1 Decision Tree

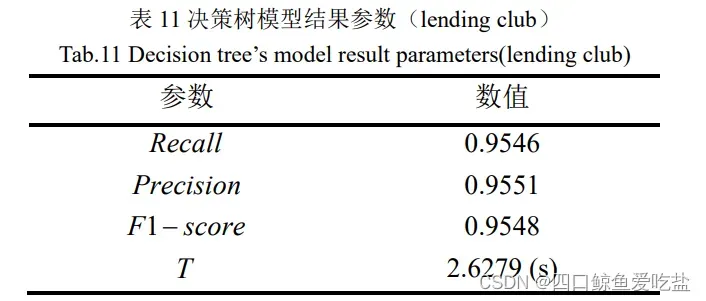

Table 11 lists the evaluation results of the decision tree model.

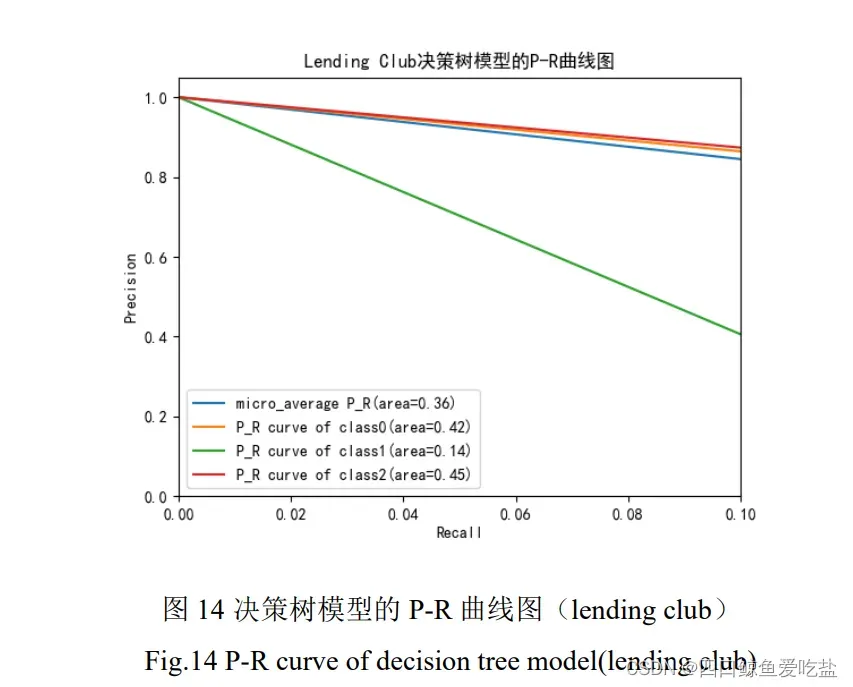

Figure 14 shows the P-R curves of the decision tree model; on every curve, precision falls as recall rises.
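With three classes, each per-class P-R curve comes from treating that class as a one-vs-rest binary problem, which is what the code in section 5.4 does with `label_binarize`. The sketch below reproduces this on synthetic three-class data, an assumption standing in for the Lending Club labels 0/1/2. It fits the tree on integer labels so that `predict_proba` returns a single `(n_samples, 3)` matrix. The setting `max_depth=5` is an illustrative choice: a fully grown tree tends to output probabilities of only 0 or 1, which leaves its P-R curve with very few points.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.preprocessing import label_binarize
from sklearn.metrics import precision_recall_curve, average_precision_score

# Synthetic three-class data standing in for the Lending Club labels
X, y = make_classification(n_samples=900, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=520)

# Fit on integer labels so predict_proba returns one (n_samples, 3) matrix
model = DecisionTreeClassifier(max_depth=5, random_state=0).fit(x_train, y_train)
y_score = model.predict_proba(x_test)
y_test_bin = label_binarize(y_test, classes=[0, 1, 2])  # one column per class

curves, ap = {}, {}
for i in range(3):
    # class i versus the rest: one (recall, precision) point per score threshold
    precision, recall, _ = precision_recall_curve(y_test_bin[:, i], y_score[:, i])
    curves[i] = (recall, precision)
    ap[i] = average_precision_score(y_test_bin[:, i], y_score[:, i])

# Micro average: pool every (sample, class) decision into one binary problem
ap['micro'] = average_precision_score(y_test_bin, y_score, average='micro')
print(ap)
```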

3.3.2 Random Forest

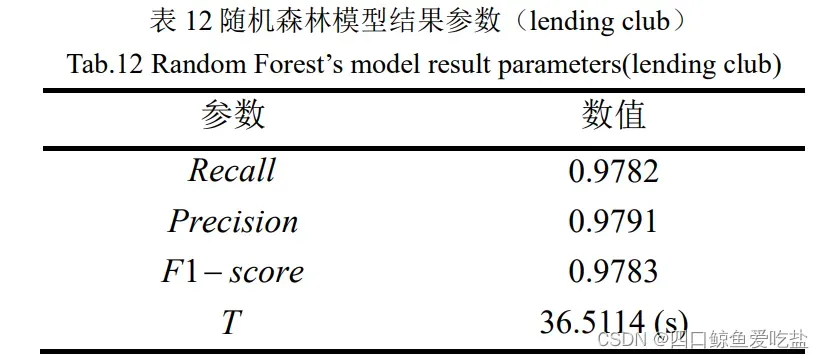

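Table 12 lists the evaluation results of the random forest model. Compared with the decision tree, it takes much longer to run, but its accuracy and related metrics improve markedly.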

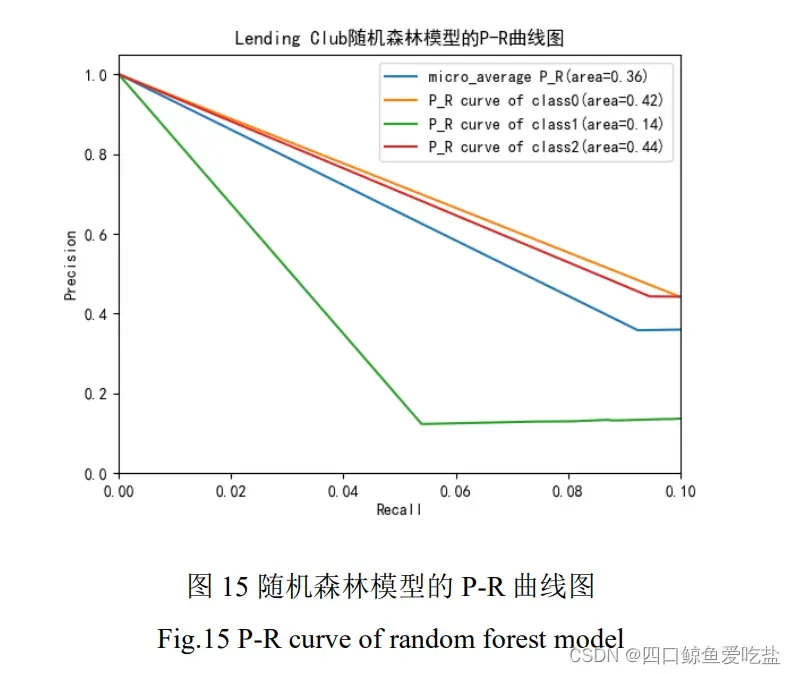

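Figure 15 shows the P-R curves of the random forest model; for each class and for the overall average, precision decreases steadily as recall increases.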

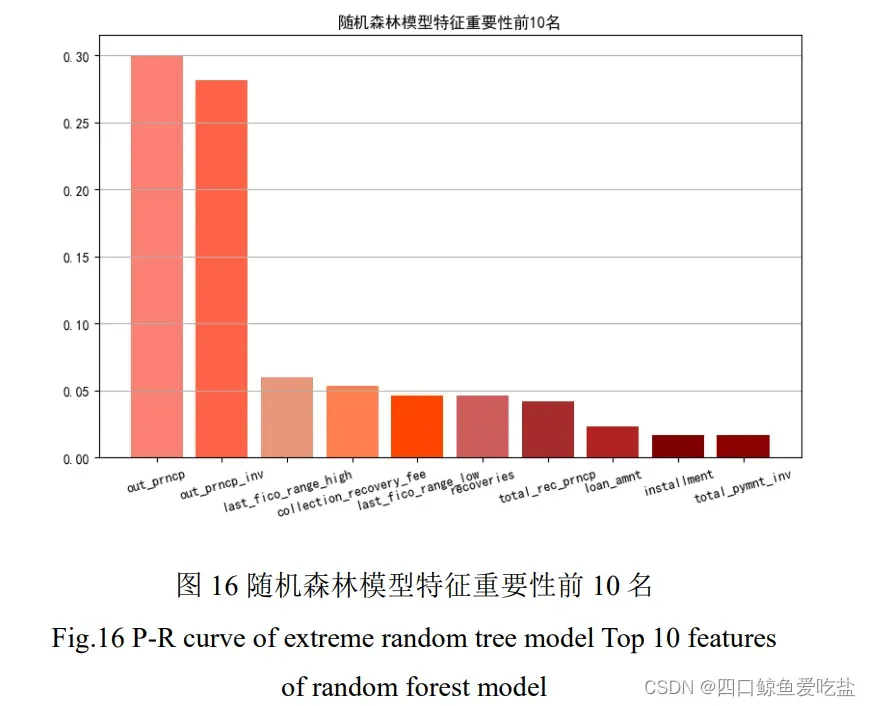

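Beyond predictive performance, we also examine which features the random forest relies on. Figure 16 shows the ten features with the highest importance in the random forest model.

3.3.3 Extremely Randomized Trees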

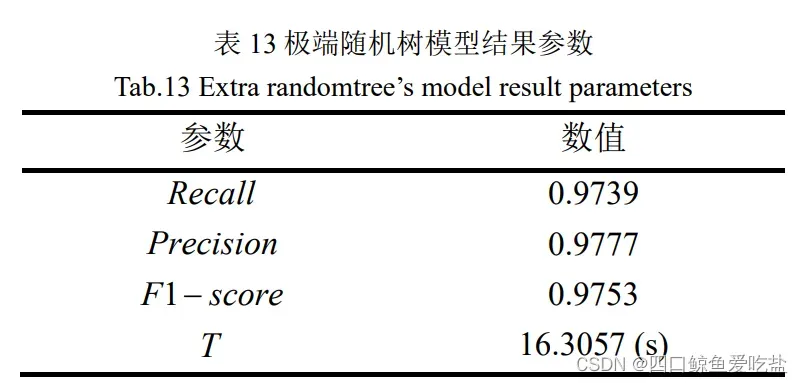

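Table 13 lists the evaluation results of the extremely randomized trees model. It runs longer than the decision tree but faster than the random forest. Its accuracy and related metrics improve markedly over the decision tree, though they remain below those of the random forest.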

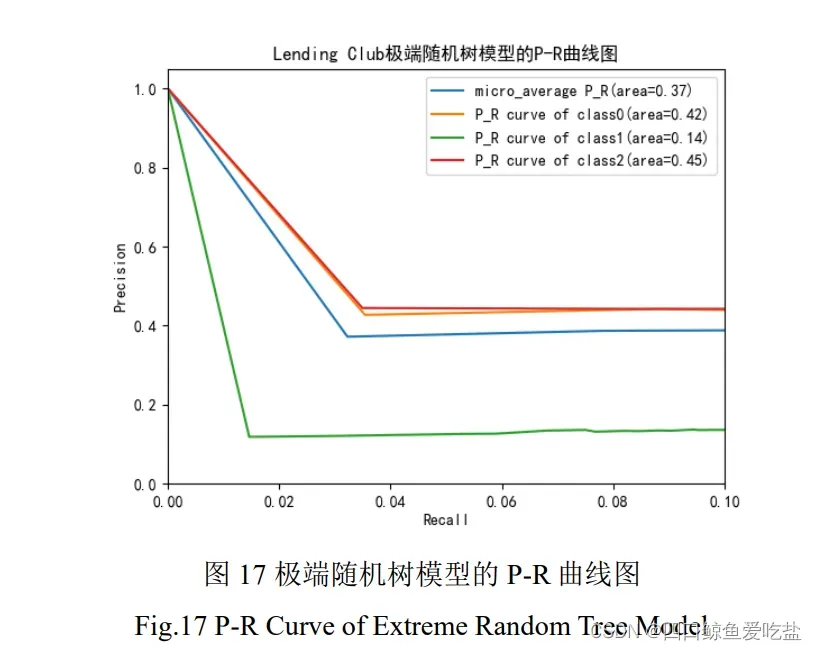

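Figure 17 shows the P-R curves of the extremely randomized trees model; for each class and for the overall average, precision decreases steadily as recall increases.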

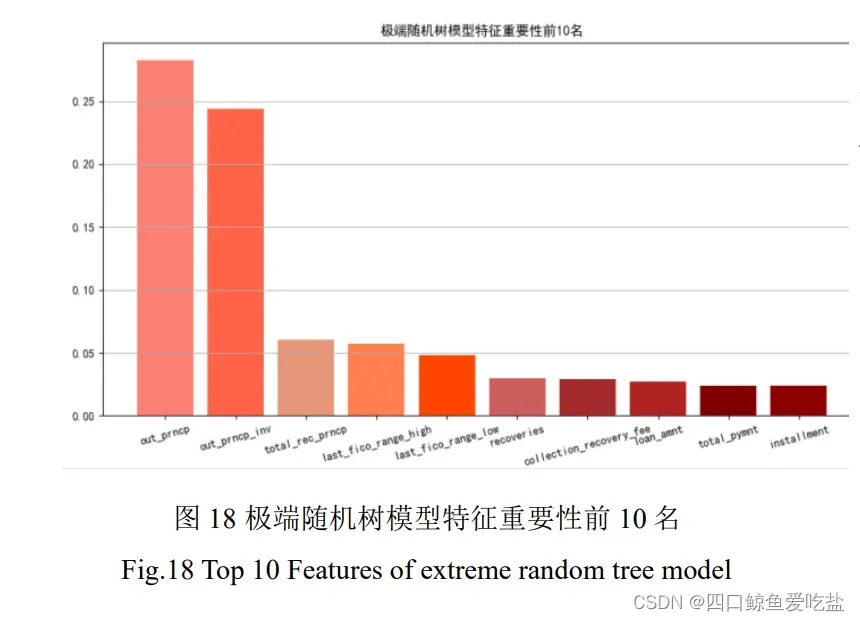

Figure 18 shows the ten features with the highest importance in the extremely randomized trees model.

3.3.4 Comparative Analysis

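The top-10 important features of the random forest and of the extremely randomized trees overlap, for example out_prncp and out_prncp_inv, but the two lists also differ.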
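Comparing the two importance rankings amounts to taking the ten largest entries of each model's `feature_importances_` array and intersecting the resulting name lists. The sketch below does this on synthetic data with hypothetical feature names `f0`, `f1`, and so on, and a hypothetical helper `top_k`; on the Lending Club data the same steps would operate on the `feature` list built in section 5.4.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=6, random_state=0)
feature = [f'f{i}' for i in range(X.shape[1])]  # hypothetical feature names


def top_k(importances, names, k=10):
    """Names of the k largest importances, most important first."""
    order = np.argsort(importances)[::-1][:k]
    return [names[i] for i in order]


rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
et = ExtraTreesClassifier(n_estimators=100, random_state=0).fit(X, y)
rf_top = top_k(rf.feature_importances_, feature)
et_top = top_k(et.feature_importances_, feature)

shared = [name for name in rf_top if name in et_top]  # kept in random-forest order
print('shared:', shared)
print('random forest only:', sorted(set(rf_top) - set(et_top)))
print('extra trees only:', sorted(set(et_top) - set(rf_top)))
```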

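Compared with the decision tree, the random forest shows clear gains in recall, precision and F1-score, but it also exposes the main drawback of ensemble models, a long running time: the random forest run took more than 36.5 seconds.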
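Because the comparison rests on recall, precision and F1-score, a small worked example helps keep precision apart from accuracy. With the toy binary labels below (class 1 is the class of interest), precision is TP/(TP+FP), recall is TP/(TP+FN), and F1 is their harmonic mean. The hand computation is printed next to scikit-learn's `precision_score`, `recall_score` and `f1_score`; `classification_report` prints these same quantities for every class.

```python
from sklearn.metrics import precision_score, recall_score, f1_score

# Toy labels: 1 = the class of interest
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))  # 3
fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))  # 2
fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))  # 1
tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))  # 4

precision = tp / (tp + fp)                           # 0.6
recall = tp / (tp + fn)                              # 0.75
f1 = 2 * precision * recall / (precision + recall)   # about 0.667
accuracy = (tp + tn) / len(y_true)                   # 0.7, not the same as precision

print(precision, precision_score(y_true, y_pred))
print(recall, recall_score(y_true, y_pred))
print(f1, f1_score(y_true, y_pred))
print(accuracy)
```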

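The extremely randomized trees model also improves recall, precision and F1-score substantially over the decision tree, and it ran in only 16 seconds, less than half the running time of the random forest.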

In summary, ensemble models can markedly improve accuracy and related metrics over a single model, at the cost of more computational resources.
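The running-time comparison can be reproduced with a small harness like the one below, using synthetic three-class data as a stand-in for the Lending Club features. One caveat from the code in section 5.4: its random forest and extremely randomized trees timers also cover the `cross_val_score` call, so the reported 36.5 s and 16 s include cross-validation. This sketch times only `fit`, and the absolute numbers will depend on hardware and data size. Extremely randomized trees are typically faster to train because they draw thresholds at random instead of searching for the best one.

```python
import time
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier
from sklearn.metrics import f1_score

# Synthetic three-class data standing in for the Lending Club features
X, y = make_classification(n_samples=2000, n_features=30, n_informative=10,
                           n_classes=3, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=520)

models = {
    'decision tree': DecisionTreeClassifier(random_state=0),
    'random forest': RandomForestClassifier(n_estimators=100, random_state=0),
    'extra trees': ExtraTreesClassifier(n_estimators=100, random_state=0),
}
results = {}
for name, model in models.items():
    start = time.perf_counter()
    model.fit(x_train, y_train)  # time only the training step
    elapsed = time.perf_counter() - start
    macro_f1 = f1_score(y_test, model.predict(x_test), average='macro')
    results[name] = (elapsed, macro_f1)
    print(f'{name:14s} fit time {elapsed:6.2f} s   macro-F1 {macro_f1:.4f}')
```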

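IV. Conclusions

- When LR (Logistic Regression) is used for binary classification of the Lending Club dataset, the relatively best feature selections are  and .

- For the multi-source dataset, we used three models (a neural network, a Bayes classifier and a decision tree) for classification. Among the three, the decision tree achieves the best accuracy and generalization ability, and its running time, although longer than that of the Bayes classifier, is still acceptable. We therefore consider the decision tree the best of the three.

- Ensemble models (random forest, extremely randomized trees) can markedly improve accuracy and related metrics over a single model (decision tree), at the cost of more computational resources.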

V. Complete Code

5.1 Exploratory Analysis of the Lending Club Data

```python
## Part 1 (1): data analysis
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import warnings

warnings.filterwarnings('ignore')
plt.rcParams["font.sans-serif"] = ["SimHei"]  # font with CJK glyphs
plt.rcParams["axes.unicode_minus"] = False    # draw minus signs correctly with that font

data = pd.read_csv('LendingClub.csv')

## Number of loans in each loan status
Count = data.loan_status.value_counts()
print(Count)
print(Count['Fully Paid'])
loan_type = ['Fully Paid', 'Current', 'Charged Off', 'Late (31-120 days)', 'In Grace Period',
             'Late (16-30 days)', 'Default']
loan_type_amount = [Count[t] for t in loan_type]
for i in range(len(loan_type)):
    plt.bar(loan_type[i], loan_type_amount[i])
plt.title('Number of loans by loan status')
plt.ylabel('Count')
plt.xticks(rotation=15)
plt.tick_params(labelsize=8)
plt.show()

print('Number of good customers:')
Good = Count['Fully Paid'] + Count['Current']
print(Good)
print('Number of defaulting customers:')
Bad = (Count['Charged Off'] + Count['Late (31-120 days)'] + Count['In Grace Period']
       + Count['Late (16-30 days)'] + Count['Default'])
print(Bad)
print('Share of good customers:')
print(Good / (Good + Bad))
print('Share of defaulting customers:')
print(Bad / (Good + Bad))

## Box plot of loan amount by loan status
SandA = data[['loan_status', 'loan_amnt']]
Box_data = [SandA[SandA['loan_status'] == t]['loan_amnt'] for t in loan_type]
bplot = plt.boxplot(Box_data, patch_artist=True, labels=loan_type, vert=True, showmeans=True,
                    showfliers=False, meanline=True)
colors = ['pink', 'lightblue', 'lightgreen', 'Cyan', 'DarkGreen', 'DarkKhaki', 'Chocolate']
for patch, color in zip(bplot['boxes'], colors):
    patch.set_facecolor(color)  # fill each box with a different color
plt.xlabel('Loan status')
plt.ylabel('Loan amount')
plt.title('Box plot of loan amount by loan status')
plt.xticks(rotation=15)
plt.tick_params(labelsize=8)
plt.show()

## Share of defaulting customers in each credit grade
SandG = data[['loan_status', 'grade']]
grade_count = data.grade.value_counts()
is_bad = (SandG['loan_status'] != 'Fully Paid') & (SandG['loan_status'] != 'Current')
print('Share of defaulting customers by credit grade:')
grade_type = ['A', 'B', 'C', 'D', 'E', 'F', 'G']
grade_data = []
for g in grade_type:
    # defaulting loans in grade g divided by all loans in grade g
    rate = SandG[is_bad & (SandG['grade'] == g)].shape[0] / grade_count[g]
    print(rate)
    grade_data.append(rate)
for i in range(len(grade_type)):
    plt.bar(grade_type[i], grade_data[i])
plt.title('Share of defaulting customers by credit grade')
plt.ylabel('Ratio')
plt.show()

data = pd.read_csv('loan_data.csv')

## Share of defaulting customers by loan term
Count_term = data.term.value_counts()
print(Count_term)
is_bad = (data['loan_status'] != 'Fully Paid') & (data['loan_status'] != 'Current')
Term36_Default = data[is_bad & (data['term'] == '36 months')].shape[0] / Count_term['36 months']
print(Term36_Default)
Term60_Default = data[is_bad & (data['term'] == '60 months')].shape[0] / Count_term['60 months']
print(Term60_Default)
Term_type = ['36 months', '60 months']
Term_Default = [Term36_Default, Term60_Default]
for i in range(len(Term_type)):
    plt.barh(Term_type[i], Term_Default[i])
plt.title('Share of defaulting customers by loan term')
plt.xlabel('Ratio')
plt.show()

## Box plot of annual income by loan status
SandAnn = data[['loan_status', 'annual_inc']]
Box_data = [SandAnn[SandAnn['loan_status'] == t]['annual_inc'] for t in loan_type]
bplot = plt.boxplot(Box_data, patch_artist=True, labels=loan_type, showmeans=True, showfliers=False,
                    vert=False, meanline=True)
for patch, color in zip(bplot['boxes'], colors):
    patch.set_facecolor(color)  # fill each box with a different color
plt.xlabel('Annual income')
plt.title('Box plot of borrower annual income by loan status')
plt.yticks(rotation=45)
plt.tick_params(labelsize=8)
plt.show()
```

5.2 Binary Classification of Lending Club with Logistic Regression

```python
## Part 1 (2): logistic regression
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import warnings
from sklearn.model_selection import train_test_split  # random train/test split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.metrics import roc_curve
from sklearn.metrics import roc_auc_score

warnings.filterwarnings('ignore')
plt.rcParams["font.sans-serif"] = ["SimHei"]
plt.rcParams["axes.unicode_minus"] = False

data = pd.read_csv('LendingClub.csv')

# Preprocessing: encode the categorical variables
# Binary label: 0 = good (Fully Paid, Current), 1 = delinquent or defaulted
data['loan_status'] = data.loan_status.replace({'Fully Paid': 0, 'Current': 0, 'Charged Off': 1,
                                                'Late (31-120 days)': 1, 'In Grace Period': 1,
                                                'Late (16-30 days)': 1, 'Default': 1})
data['grade'] = data.grade.replace({'A': 0, 'B': 1, 'C': 2, 'D': 3, 'E': 4, 'F': 5, 'G': 6})
data['term'] = data.term.replace({'36 months': 0, '60 months': 1})

# Statistics for mean normalization of the numeric variables; the scaling itself
# is left disabled (uncomment the two lines below to apply it)
max_1, min_1, mean_1 = data.loan_amnt.max(), data.loan_amnt.min(), np.mean(data.loan_amnt)
max_2, min_2, mean_2 = data.annual_inc.max(), data.annual_inc.min(), np.mean(data.annual_inc)
# data['loan_amnt'] = (data.loan_amnt - mean_1) / (max_1 - min_1)
# data['annual_inc'] = (data.annual_inc - mean_2) / (max_2 - min_2)

loan_df = data[['loan_status', 'loan_amnt', 'grade', 'term', 'annual_inc']]
# Four datasets of three features each (datasets 1 to 4)
feature_sets = [['loan_amnt', 'grade', 'term'],
                ['annual_inc', 'grade', 'term'],
                ['loan_amnt', 'annual_inc', 'term'],
                ['loan_amnt', 'grade', 'annual_inc']]
y = data['loan_status']
print(data[feature_sets[0]])
print(y)

for k, cols in enumerate(feature_sets, start=1):
    X = data[cols]
    x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=k)
    model = LogisticRegression()
    model.fit(x_train, y_train)                # fit on the training set
    y_pred = model.predict(x_test)             # predict on the test set
    print(f'Training results for dataset {k}:')
    print(classification_report(y_test, y_pred, digits=4))
    proba = model.predict_proba(x_test)[:, 1]  # predicted probability of class 1 (default)
    auc = roc_auc_score(y_test, proba)
    fpr, tpr, thresholds = roc_curve(y_test, proba)
    plt.subplot(2, 2, k)
    plt.plot(fpr, tpr, color='darkorange', lw=3, label='ROC curve(area=%0.2f)' % auc)
    plt.plot([0, 1], [0, 1], color='navy', lw=3, linestyle='--')
    plt.xlabel('FPR')
    plt.ylabel('TPR')
    plt.legend(loc='lower right')
    plt.title(f'Dataset {k}', loc='left')
plt.show()
```

5.3 Classification of the Multi-Source Dataset

```python
import time
import warnings
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split  # random train/test split
from sklearn.metrics import classification_report
from sklearn.metrics import precision_recall_curve, average_precision_score
from sklearn.neural_network import MLPClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier
from sklearn.preprocessing import label_binarize

warnings.filterwarnings('ignore')
plt.rcParams["font.sans-serif"] = ["SimHei"]
plt.rcParams["axes.unicode_minus"] = False


def plot_pr(y_true_bin, y_score, n_classes, title):
    """Plot one-vs-rest P-R curves for every class plus the micro average."""
    precision, recall, average_precision = dict(), dict(), dict()
    for i in range(n_classes):
        precision[i], recall[i], _ = precision_recall_curve(y_true_bin[:, i], y_score[:, i])
        average_precision[i] = average_precision_score(y_true_bin[:, i], y_score[:, i])
    precision["micro"], recall["micro"], _ = precision_recall_curve(y_true_bin.ravel(), y_score.ravel())
    average_precision["micro"] = average_precision_score(y_true_bin, y_score, average="micro")
    plt.clf()
    plt.plot(recall["micro"], precision["micro"],
             label="micro_average P_R(area={0:0.2f})".format(average_precision["micro"]))
    for i in range(n_classes):
        plt.plot(recall[i], precision[i],
                 label="P_R curve of class{0}(area={1:0.2f})".format(i, average_precision[i]))
    plt.xlim([0.0, 1.0])
    plt.ylim([0.0, 1.05])
    plt.xlabel('Recall')
    plt.ylabel('Precision')
    plt.title(title)
    plt.legend()
    plt.show()


## Dataset
data = pd.read_csv('CodedData.csv')
data.info()
check_null = data.isnull().sum().sort_values(ascending=False) / float(len(data))
print(check_null[check_null > 0])  # share of missing values per column
label = [0, 1, 2, 3, 4]
feature = data.columns.values.tolist()
feature.remove('Y')
print(feature)
X = data[feature]
y = data['Y']
print(y)
y = label_binarize(y, classes=label)  # one-hot labels, one column per class
print(y.shape)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=520)
n_classes = y.shape[1]

## Neural network
# 26 input nodes (one per feature) and one output node per class
Neu_start = time.time()
neu = MLPClassifier(hidden_layer_sizes=10)
neu.fit(x_train, y_train)
y_predict = neu.predict(x_test)
print('Neural network evaluation metrics:')
print(classification_report(y_test, y_predict, digits=4))
Neu_end = time.time()
print('Neural network running time:')
Neu_time = Neu_end - Neu_start
print(Neu_time)
y_score = neu.predict_proba(x_test)
print(y_score)
plot_pr(y_test, y_score, n_classes, 'P-R curves of the neural network model')

## Decision tree
Det_start = time.time()
det = DecisionTreeClassifier()
det = det.fit(x_train, y_train)
y_predict = det.predict(x_test)
print('Decision tree evaluation metrics:')
print(classification_report(y_test, y_predict, digits=4))
Det_end = time.time()
Det_time = Det_end - Det_start
print('Decision tree running time:')
print(Det_time)

# P-R curves
# A tree fitted on the one-hot y returns predict_proba as a list of per-class arrays,
# so refit on the integer labels to get one (n_samples, n_classes) score matrix.
# The same random_state keeps the test rows aligned with y_test.
y_det = data['Y']
x_train_det, x_test_det, y_train_det, y_test_det = train_test_split(X, y_det, test_size=0.2,
                                                                    random_state=520)
det_score = DecisionTreeClassifier()
det_score = det_score.fit(x_train_det, y_train_det)
y_score = det_score.predict_proba(x_test_det)
print(y_score.shape)
plot_pr(y_test, y_score, n_classes, 'P-R curves of the decision tree model')

## Gaussian naive Bayes classifier
Byt_start = time.time()
y_byt = data['Y']
x_train_byt, x_test_byt, y_train_byt, y_test_byt = train_test_split(X, y_byt, test_size=0.2,
                                                                    random_state=520)
byt_score = GaussianNB()
byt_score = byt_score.fit(x_train_byt, y_train_byt)
y_score = byt_score.predict_proba(x_test_byt)
print(y_score)
y_predict = byt_score.predict(x_test_byt)
print('Gaussian naive Bayes evaluation metrics:')
print(classification_report(y_test_byt, y_predict, digits=4))
Byt_end = time.time()
Byt_time = Byt_end - Byt_start
print('Gaussian naive Bayes running time:')
print(Byt_time)
plot_pr(y_test, y_score, n_classes, 'P-R curves of the Bayes model')
```

5.4 Three-Class Classification of Lending Club

```python
# Part 3
import time
import warnings
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split, cross_val_score
from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import classification_report, precision_recall_curve, average_precision_score
from sklearn.preprocessing import label_binarize

warnings.filterwarnings('ignore')
plt.rcParams["font.sans-serif"] = ["SimHei"]
plt.rcParams["axes.unicode_minus"] = False


def plot_pr(y_true_bin, y_score, n_classes, title):
    """Plot one-vs-rest P-R curves for every class plus the micro average."""
    precision, recall, average_precision = dict(), dict(), dict()
    for i in range(n_classes):
        precision[i], recall[i], _ = precision_recall_curve(y_true_bin[:, i], y_score[:, i])
        average_precision[i] = average_precision_score(y_true_bin[:, i], y_score[:, i])
    precision["micro"], recall["micro"], _ = precision_recall_curve(y_true_bin.ravel(), y_score.ravel())
    average_precision["micro"] = average_precision_score(y_true_bin, y_score, average="micro")
    plt.clf()
    plt.plot(recall["micro"], precision["micro"],
             label="micro_average P_R(area={0:0.2f})".format(average_precision["micro"]))
    for i in range(n_classes):
        plt.plot(recall[i], precision[i],
                 label="P_R curve of class{0}(area={1:0.2f})".format(i, average_precision[i]))
    plt.xlim([0.0, 1.0])
    plt.ylim([0.0, 1.05])
    plt.xlabel('Recall')
    plt.ylabel('Precision')
    plt.title(title)
    plt.legend()
    plt.show()


def plot_top10(importances, feature, title):
    """Bar chart of the ten largest feature importances."""
    indices = np.argsort(importances)[::-1][:10]
    names = [feature[i] for i in indices]
    sort_importances = [importances[i] for i in indices]
    print(names)
    print(sort_importances)
    colors = ['salmon', 'tomato', 'darksalmon', 'coral', 'orangered',
              'indianred', 'brown', 'firebrick', 'maroon', 'darkred']
    plt.bar(range(10), sort_importances, color=colors)
    plt.xticks(range(10), names, rotation=15)
    plt.title(title)
    plt.grid(axis='y')
    plt.show()


data = pd.read_csv('LendingClub.csv')
print('Original data:')
data.info()

## Preprocessing: drop every attribute (column) that has missing values
check_null = data.isnull().sum().sort_values(ascending=False) / float(len(data))
print(check_null[check_null > 0])  # share of missing values per column
thresh_count = len(data)
data = data.dropna(thresh=thresh_count, axis=1)  # keep only fully populated columns
print('Data after dropping columns with missing values:')
data.info()

# Drop some further attributes
data = data.drop(['id', 'int_rate', 'earliest_cr_line', 'issue_d', 'last_pymnt_d', 'pymnt_plan',
                  'purpose'], axis=1)
data.info()

# Encode the categorical variables
data['application_type'] = data.application_type.replace({'Individual': 0, 'Joint App': 1})
data['home_ownership'] = data.home_ownership.replace({'ANY': 0, 'MORTGAGE': 1, 'NONE': 2, 'OWN': 3,
                                                      'RENT': 4})
# Three-class label: 0 = Current, 1 = delinquent or defaulted, 2 = Fully Paid
data['loan_status'] = data.loan_status.replace({'Fully Paid': 2, 'Current': 0, 'Charged Off': 1,
                                                'Late (31-120 days)': 1, 'In Grace Period': 1,
                                                'Late (16-30 days)': 1, 'Default': 1})
data['grade'] = data.grade.replace({'A': 0, 'B': 1, 'C': 2, 'D': 3, 'E': 4, 'F': 5, 'G': 6})
data['term'] = data.term.replace({'36 months': 0, '60 months': 1})
data['verification_status'] = data.verification_status.replace({'Verified': 0, 'Not Verified': 1,
                                                                'Source Verified': 2})
data['initial_list_status'] = data.initial_list_status.replace({'f': 0, 'w': 1})

## Train/test split
feature = data.columns.values.tolist()
feature.remove('loan_status')
print(feature)
X = data[feature]
y_int = data['loan_status'].astype(int)  # integer labels 0/1/2
label = [0, 1, 2]
y = label_binarize(y_int, classes=label)  # one-hot labels, one column per class
print(y.shape)
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=520)
# Same split on the integer labels (same random_state, so the test rows line up with y_test).
# Models fitted on one-hot y return predict_proba as a list of per-class arrays, so the
# P-R curves use models fitted on these integer labels instead.
x_train_int, x_test_int, y_train_int, y_test_int = train_test_split(X, y_int, test_size=0.2,
                                                                    random_state=520)
n_classes = y.shape[1]
print(n_classes)

## Decision tree
Det_start = time.time()
det = DecisionTreeClassifier()
det = det.fit(x_train, y_train)
y_predict = det.predict(x_test)
print('Decision tree evaluation metrics:')
print(classification_report(y_test, y_predict, digits=4))
Det_end = time.time()
Det_time = Det_end - Det_start
print('Decision tree running time:')
print(Det_time)
# P-R curves
det_score_model = DecisionTreeClassifier().fit(x_train_int, y_train_int)
y_score = det_score_model.predict_proba(x_test_int)
plot_pr(y_test, y_score, n_classes, 'Lending Club: P-R curves of the decision tree model')

## Random forest
model01_start = time.time()
clf2 = RandomForestClassifier(n_estimators=10, max_depth=None, min_samples_split=2, random_state=0)
scores2 = cross_val_score(clf2, X, y)
print('Random forest cross-validation scores:')
print(scores2)
model01 = RandomForestClassifier()
model01.fit(x_train, y_train)
y_predict = model01.predict(x_test)
print('Random forest evaluation metrics:')
print(classification_report(y_test, y_predict, digits=4))
model01_end = time.time()
model01_time = model01_end - model01_start  # includes the cross-validation above
print('Random forest running time:')
print(model01_time)
# P-R curves
rf_score_model = RandomForestClassifier().fit(x_train_int, y_train_int)
y_score = rf_score_model.predict_proba(x_test_int)
plot_pr(y_test, y_score, n_classes, 'Lending Club: P-R curves of the random forest model')
# Feature importance
plot_top10(model01.feature_importances_, feature, 'Top 10 feature importances: random forest')

## Extremely randomized trees
model02_start = time.time()
clf3 = ExtraTreesClassifier(n_estimators=10, max_depth=None, min_samples_split=2, random_state=0)
scores3 = cross_val_score(clf3, X, y)
print('Extremely randomized trees cross-validation scores:')
print(scores3)
model02 = ExtraTreesClassifier()
model02.fit(x_train, y_train)
y_predict = model02.predict(x_test)
print('Extremely randomized trees evaluation metrics:')
print(classification_report(y_test, y_predict, digits=4))
model02_end = time.time()
model02_time = model02_end - model02_start  # includes the cross-validation above
print('Extremely randomized trees running time:')
print(model02_time)
# P-R curves
et_score_model = ExtraTreesClassifier().fit(x_train_int, y_train_int)
y_score = et_score_model.predict_proba(x_test_int)
plot_pr(y_test, y_score, n_classes, 'Lending Club: P-R curves of the extremely randomized trees model')
# Feature importance
plot_top10(model02.feature_importances_, feature,
           'Top 10 feature importances: extremely randomized trees')
```
