0. Introduction

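As deep learning practitioners, we constantly run into formats such as ONNX and TensorRT engines. When deploying an ONNX model to an NVIDIA GPU with TensorRT, however, the ONNX computation graph is often awkward to modify. In the past, a common workaround was to convert the ONNX model into ncnn's .param and .bin files and then edit the graph in the .param file. Both the article "TensorRT Getting Started (5): Browsing the Official TensorRT Documentation" (《TensorRT 入门(5) TensorRT官方文档浏览》) and the article "Getting Started with TensorRT" (《TensorRT 开始》) point out that ONNX GraphSurgeon can be used to modify an ONNX model so that it works with TensorRT. The official onnx-graphsurgeon documentation covers essentially everything you need.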

1. Official API and Implementation

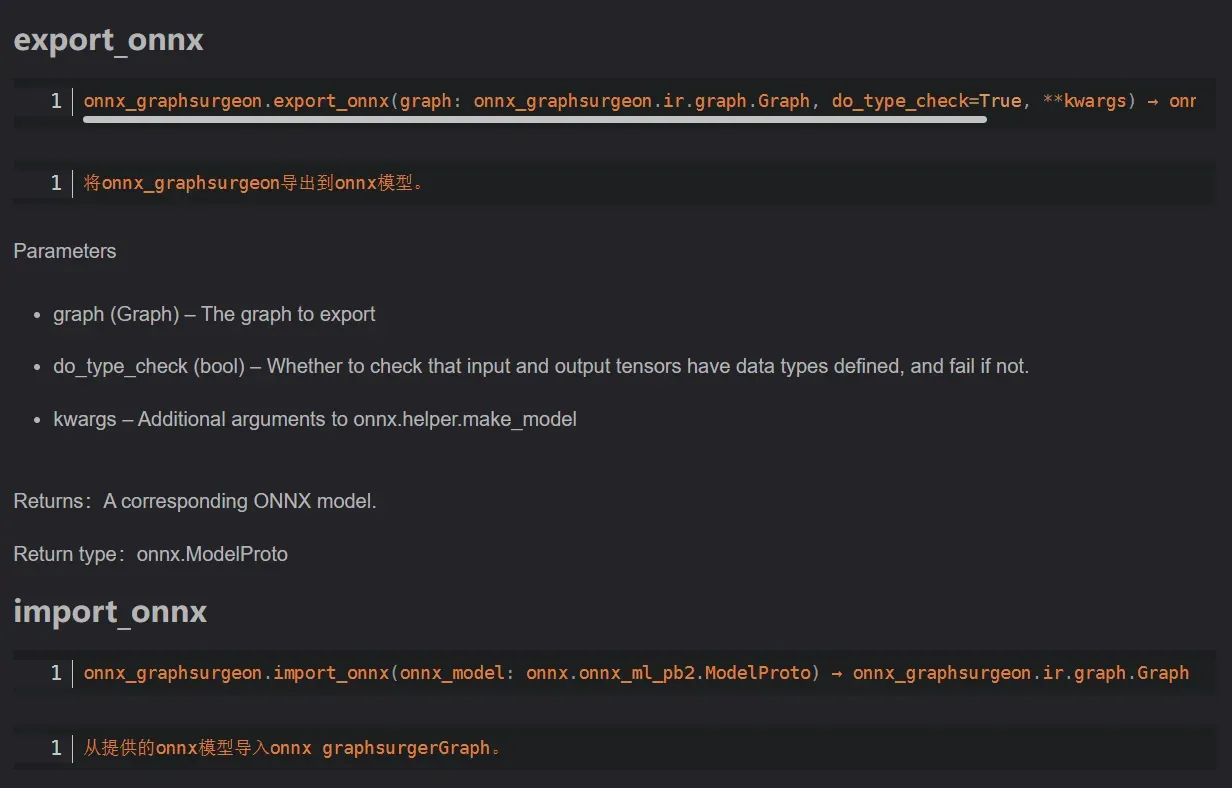

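There is relatively little material on onnx-graphsurgeon in either Chinese or English sources. Recently, however, the blogger 令狐少侠 translated the onnx-graphsurgeon API documentation, so I won't go through it in detail here. If you are interested, read that blog or the official documentation directly; the details are fairly simple, and so are the actual operations.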

1.1 The ONNX Model

```python
import onnx_graphsurgeon as gs
import numpy as np
import onnx


# Register functions to make graph generation easier
@gs.Graph.register()
def min(self, *args):
    return self.layer(op="Min", inputs=args, outputs=["min_out"])[0]


@gs.Graph.register()
def max(self, *args):
    return self.layer(op="Max", inputs=args, outputs=["max_out"])[0]


@gs.Graph.register()
def identity(self, inp):
    return self.layer(op="Identity", inputs=[inp], outputs=["identity_out"])[0]


# Generate the graph
graph = gs.Graph()

graph.inputs = [gs.Variable("input", shape=(4, 4), dtype=np.float32)]

# Clip values to [0, 6]
MIN_VAL = np.array(0, np.float32)
MAX_VAL = np.array(6, np.float32)

# Add identity nodes to make the graph structure a bit more interesting
inp = graph.identity(graph.inputs[0])
max_out = graph.max(graph.min(inp, MAX_VAL), MIN_VAL)
graph.outputs = [graph.identity(max_out), ]

# Graph outputs must include dtype information
graph.outputs[0].to_variable(dtype=np.float32, shape=(4, 4))

onnx.save(gs.export_onnx(graph), "model.onnx")
```

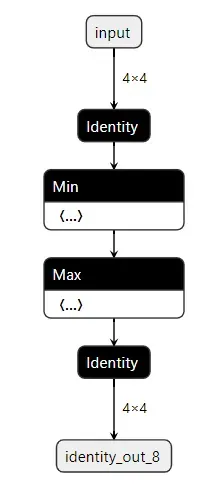

Opening the model in Netron shows the following graph:

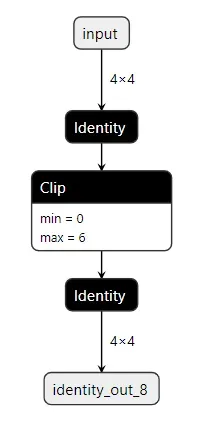

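Now we want to use onnx_graphsurgeon to fuse the Min and Max OPs into a single new OP called Clip. The subgraph computes Max(Min(x, 6), 0), which is exactly Clip(x, min=0, max=6), so at deployment time we would only need to write one Clip plugin. Of course, this is just a demonstration: TensorRT already supports the Clip OP natively.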
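A quick numerical sanity check of why this fusion is safe: as long as min ≤ max, applying Min against 6 and then Max against 0 gives exactly what a single Clip(x, 0, 6) produces. The sketch below uses only NumPy; the helper names min_max_subgraph and clip_node are hypothetical and simply mimic the two graph variants on a random 4x4 float32 input like the model's.

```python
import numpy as np


# Hypothetical helpers for illustration; they mimic the two graph variants with NumPy.
def min_max_subgraph(x, lo=0.0, hi=6.0):
    # Original graph: Min(x, hi) followed by Max(., lo); the Identity nodes change nothing
    return np.maximum(np.minimum(x, np.float32(hi)), np.float32(lo))


def clip_node(x, lo=0.0, hi=6.0):
    # Fused graph: a single Clip(x, min=lo, max=hi)
    return np.clip(x, np.float32(lo), np.float32(hi))


rng = np.random.default_rng(0)
x = rng.uniform(-10.0, 10.0, size=(4, 4)).astype(np.float32)  # same shape/dtype as the model input

# The two agree as long as lo <= hi, which holds for the constants 0 and 6 used here
print(np.array_equal(min_max_subgraph(x), clip_node(x)))  # True
```

Because both variants only select values (no arithmetic), the comparison can be exact rather than tolerance-based.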

1.2 The Modification Code

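The approach is very simple: cut every connection between the OPs you want to merge and the rest of the graph, then put a new ONNX OP in their place and save. Concretely, we disconnect Min from the Identity above it, Min from the constant c2, Max from the constant c5, and Max from the Identity below it, and then insert the new OP.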

```python
import onnx_graphsurgeon as gs
import numpy as np
import onnx

# Here we'll register a function to do all the subgraph-replacement heavy-lifting.
# NOTE: Since registered functions are entirely reusable, it may be a good idea to
# refactor them into a separate module so you can use them across all your models.
# (Writing it as a function means it can be reused if the same replacement is needed again.)
@gs.Graph.register()
def replace_with_clip(self, inputs, outputs):
    # Disconnect output nodes of all input tensors
    for inp in inputs:
        inp.outputs.clear()

    # Disconnect input nodes of all output tensors
    for out in outputs:
        out.inputs.clear()

    # Insert the new node.
    return self.layer(op="Clip", inputs=inputs, outputs=outputs)


# Now we'll do the actual replacement
# Load the ONNX model
graph = gs.import_onnx(onnx.load("model.onnx"))

tmap = graph.tensors()
# You can figure out the input and output tensors using Netron. In our case:
# Inputs: [inp, MIN_VAL, MAX_VAL]
# Outputs: [max_out]
# Names of the subgraph's boundary input and output tensors that must be disconnected
inputs = [tmap["identity_out_0"], tmap["onnx_graphsurgeon_constant_5"], tmap["onnx_graphsurgeon_constant_2"]]
outputs = [tmap["max_out_6"]]

# Disconnect the subgraph and replace it with a new OP named Clip
graph.replace_with_clip(inputs, outputs)

# Remove the now-dangling subgraph.
graph.cleanup().toposort()

# That's it!
onnx.save(gs.export_onnx(graph), "replaced.onnx")
```
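To make the cut-then-replace idea of this section concrete without depending on onnx-graphsurgeon, here is a toy, dependency-free model of it. The classes Tensor, Node and ToyGraph are hypothetical and only loosely mirror onnx-graphsurgeon's tensors and nodes. The boundary tensor names identity_out_0, onnx_graphsurgeon_constant_2, onnx_graphsurgeon_constant_5 and max_out_6 come from the code above; min_out and output are made-up names. The toy cleanup keeps only nodes that still feed a graph output, which is how onnx-graphsurgeon describes cleanup(), but it skips toposort().

```python
# Toy model of "cut the subgraph loose, insert a new node, then clean up".
# Hypothetical classes for illustration only; this is NOT the onnx-graphsurgeon API.
class Tensor:
    def __init__(self, name):
        self.name = name
        self.producers = []  # nodes that write this tensor
        self.consumers = []  # nodes that read this tensor


class Node:
    def __init__(self, op, inputs, outputs):
        self.op, self.inputs, self.outputs = op, inputs, outputs


class ToyGraph:
    def __init__(self):
        self.nodes, self.tensors, self.outputs = [], {}, []

    def tensor(self, name):
        # Create the tensor on first use, then reuse it
        return self.tensors.setdefault(name, Tensor(name))

    def layer(self, op, inputs, outputs):
        node = Node(op, [self.tensor(n) for n in inputs], [self.tensor(n) for n in outputs])
        for t in node.inputs:
            t.consumers.append(node)
        for t in node.outputs:
            t.producers.append(node)
        self.nodes.append(node)
        return node

    def replace_with(self, op, inputs, outputs):
        # Step 1: cut every edge from the boundary inputs into the old subgraph
        for t in inputs:
            for node in t.consumers:
                node.inputs = [i for i in node.inputs if i is not t]
            t.consumers.clear()
        # Step 2: cut every edge from the old subgraph into the boundary outputs
        for t in outputs:
            for node in t.producers:
                node.outputs = [o for o in node.outputs if o is not t]
            t.producers.clear()
        # Step 3: wire one new node between the same boundary tensors
        return self.layer(op, [t.name for t in inputs], [t.name for t in outputs])

    def cleanup(self):
        # Keep only nodes reachable by walking backwards from the graph outputs
        live, stack = set(), list(self.outputs)
        while stack:
            for node in stack.pop().producers:
                if id(node) not in live:
                    live.add(id(node))
                    stack.extend(node.inputs)
        self.nodes = [n for n in self.nodes if id(n) in live]


# Rebuild the Identity -> Min -> Max -> Identity chain from section 1.1
g = ToyGraph()
g.layer("Identity", ["input"], ["identity_out_0"])
g.layer("Min", ["identity_out_0", "onnx_graphsurgeon_constant_2"], ["min_out"])
g.layer("Max", ["min_out", "onnx_graphsurgeon_constant_5"], ["max_out_6"])
g.layer("Identity", ["max_out_6"], ["output"])
g.outputs = [g.tensors["output"]]

t = g.tensors
g.replace_with("Clip",
               [t["identity_out_0"], t["onnx_graphsurgeon_constant_5"], t["onnx_graphsurgeon_constant_2"]],
               [t["max_out_6"]])
g.cleanup()
print([n.op for n in g.nodes])  # ['Identity', 'Identity', 'Clip']
```

Min and Max disappear because, once their links to the boundary tensors are cut, nothing connects them to the graph output any more. Since the toy has no topological sort, the surviving nodes stay in insertion order; in the real code, toposort() is what puts Clip back between the two Identity nodes.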

2. A Detailed Example of Converting ONNX to a TRT Model

Use trtexec to convert the ONNX model into a TensorRT engine:

```shell
export PATH=/usr/local/TensorRT/bin:$PATH
export LD_LIBRARY_PATH=/usr/local/TensorRT/lib:$LD_LIBRARY_PATH

trtexec --onnx=rvm_mobilenetv3_fp32.onnx --workspace=64 --saveEngine=rvm_mobilenetv3_fp32.engine --verbose
```

This fails with the following error:

```text
[01/08/2022-20:20:36] [E] [TRT] ModelImporter.cpp:773: While parsing node number 3 [Resize -> "389"]:
[01/08/2022-20:20:36] [E] [TRT] ModelImporter.cpp:774: --- Begin node ---
[01/08/2022-20:20:36] [E] [TRT] ModelImporter.cpp:775: input: "src"
input: "386"
input: "388"
output: "389"
name: "Resize_3"
op_type: "Resize"
attribute {
  name: "coordinate_transformation_mode"
  s: "pytorch_half_pixel"
  type: STRING
}
attribute {
  name: "cubic_coeff_a"
  f: -0.75
  type: FLOAT
}
attribute {
  name: "mode"
  s: "linear"
  type: STRING
}
attribute {
  name: "nearest_mode"
  s: "floor"
  type: STRING
}
[01/08/2022-20:20:36] [E] [TRT] ModelImporter.cpp:776: --- End node ---
[01/08/2022-20:20:36] [E] [TRT] ModelImporter.cpp:779: ERROR: builtin_op_importers.cpp:3608 In function importResize:
[8] Assertion failed: scales.is_weights() && "Resize scales must be an initializer!"
```

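At this point, we have to modify the model by hand. The assertion says that TensorRT's ONNX parser requires the scales input of a Resize node to be an initializer (a constant weight stored in the model), and for Resize_3 it is not.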
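To pin down which tensor the assertion is complaining about, it helps to map the positional inputs of Resize_3 in the log to their roles. This sketch assumes the model uses ONNX opset 11 or later, where Resize takes its inputs in the order X, roi, scales, sizes; the helper label_resize_inputs is hypothetical. Under that assumption, tensor "388" is the scales input that TensorRT wants as an initializer.

```python
# Positional input order of the ONNX Resize operator.
# Assumption: opset >= 11 (opset 10's Resize only has X and scales).
RESIZE_INPUTS = ("X", "roi", "scales", "sizes")


def label_resize_inputs(input_names):
    """Hypothetical helper: pair each positional Resize input with its ONNX-spec role."""
    if len(input_names) > len(RESIZE_INPUTS):
        raise ValueError("Resize takes at most four inputs")
    return dict(zip(RESIZE_INPUTS, input_names))


# Inputs of node Resize_3, copied from the trtexec log
roles = label_resize_inputs(["src", "386", "388"])
print(roles)  # {'X': 'src', 'roi': '386', 'scales': '388'}
```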

First, install the necessary tools:

```shell
snap install netron
pip install onnx-simplifier
pip install onnx_graphsurgeon --index-url https://pypi.ngc.nvidia.com
```

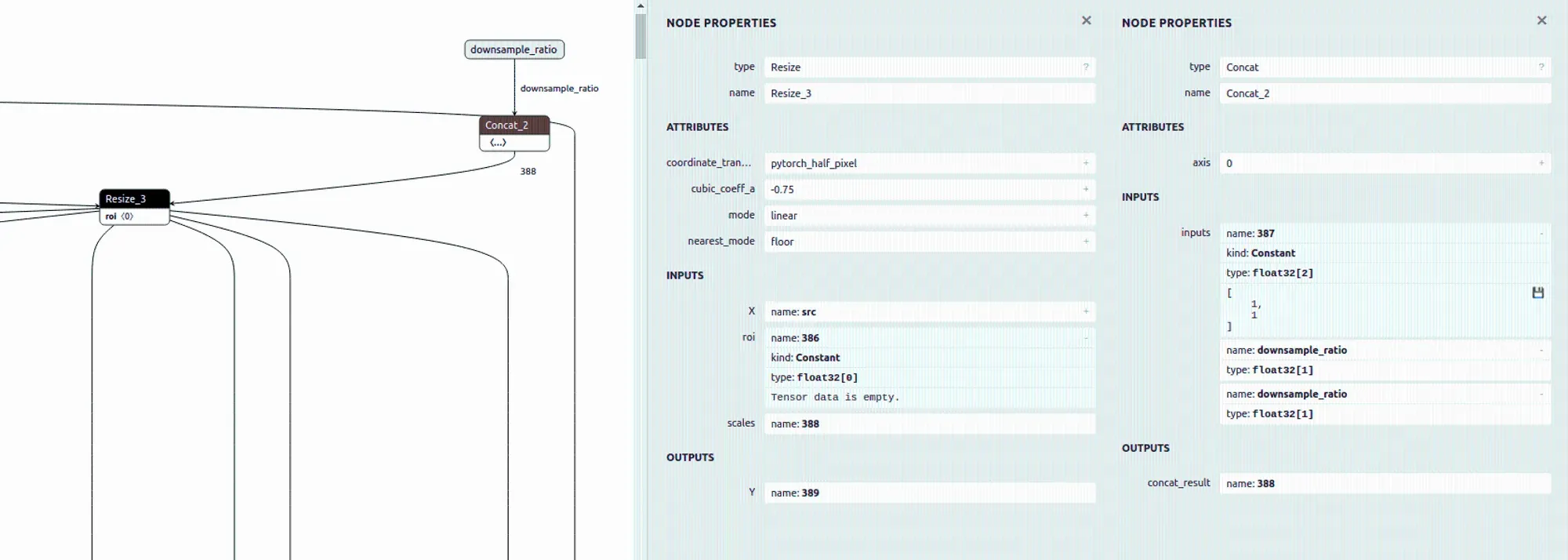

Next, inspect the model's Resize_3 node in Netron:

… For details, please refer to the original post on 古月居 (Guyueju).
