I. Fully Convolutional Network (FCN)

1. Introduction

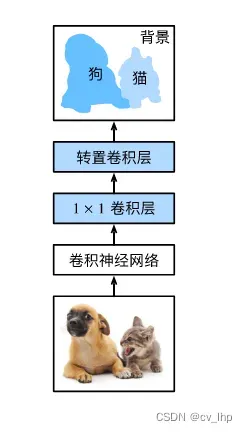

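Semantic segmentation classifies every pixel in an image. A fully convolutional network (FCN) uses a convolutional neural network to transform image pixels into pixel classes. Unlike the convolutional neural networks introduced earlier for image classification or object detection, an FCN uses a transposed convolutional layer to transform the height and width of an intermediate feature map back to the size of the input image. As a result, the class predictions correspond one-to-one with the input pixels: for any spatial position, the output along the channel dimension is the class prediction for the pixel at that position.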
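To make the per-pixel correspondence concrete, here is a minimal sketch that takes a tiny score array laid out as scores[class][row][col] and picks the highest-scoring class for every pixel. It uses plain Python lists instead of tensors, and the names argmax_over_channels and scores are illustrative assumptions, not part of the original code.

```python
def argmax_over_channels(scores):
    """Return an H x W class map from scores[class][row][col]."""
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    class_map = []
    for r in range(height):
        row = []
        for c in range(width):
            # Compare the scores of all classes at this one pixel position
            best = max(range(num_classes), key=lambda k: scores[k][r][c])
            row.append(best)
        class_map.append(row)
    return class_map


# Hypothetical scores for 3 classes on a 2 x 2 image
scores = [
    [[0.9, 0.1], [0.2, 0.3]],    # class 0
    [[0.05, 0.8], [0.1, 0.3]],   # class 1
    [[0.05, 0.1], [0.7, 0.4]],   # class 2
]
print(argmax_over_channels(scores))  # [[0, 1], [2, 2]]
```

With tensors, the same step is a single argmax over the channel dimension.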

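2. Model construction

The most basic design of an FCN is as follows. The network first uses a convolutional neural network to extract image features, then uses a 1×1 convolutional layer to transform the number of channels into the number of classes, and finally uses a transposed convolutional layer to transform the height and width of the feature map to the size of the input image. (A 1×1 convolutional layer mixes the channel information at each spatial position independently and changes the number of channels; when it reduces the channel count, it also reduces later computation.) The model output therefore has the same height and width as the input image, and its channel dimension holds the class prediction for the pixel at each spatial position.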
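The role of the 1×1 convolution can be sketched as a small linear map applied separately at every pixel. The example below is a plain-Python illustration under that reading; conv1x1 and its argument layout are hypothetical names chosen for this sketch.

```python
def conv1x1(feature_map, weight, bias):
    """Apply a 1x1 convolution.

    feature_map: [in_channels][H][W]
    weight:      [out_channels][in_channels]
    bias:        [out_channels]
    Returns      [out_channels][H][W].
    """
    in_channels = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    out = []
    for w_row, b in zip(weight, bias):
        # Each output value only sees the input channels at the same (r, c)
        channel = [[b + sum(w_row[i] * feature_map[i][r][c] for i in range(in_channels))
                    for c in range(width)]
                   for r in range(height)]
        out.append(channel)
    return out


# Hypothetical 2-channel 1 x 2 feature map mapped to 3 "class" channels
features = [[[1.0, 2.0]], [[3.0, 4.0]]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]
class_scores = conv1x1(features, weight, bias)
print(len(class_scores), len(class_scores[0]), len(class_scores[0][0]))  # 3 1 2
```

The height and width stay the same; only the channel count changes, here from 2 to 3.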

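Below, a ResNet-18 model pretrained on the ImageNet dataset extracts the image features. Note that the last layers of ResNet-18 are a global average pooling layer and a fully connected layer, which an FCN does not need.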

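    import torch
    import d2l.torch
    import torchvision
    from torch import nn
    from torch.nn import functional as F

    # Load ResNet-18 with ImageNet-pretrained weights.
    # Newer torchvision releases deprecate pretrained=True in favor of the weights= argument.
    pretrained_net = torchvision.models.resnet18(pretrained=True)
    # List the top-level layers; the last two are global average pooling and the fully connected layer
    list(pretrained_net.children())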

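Next, create a fully convolutional network net that copies most of the pretrained layers of ResNet-18, all except the final global average pooling layer and fully connected layer. Given an input of height 320 and width 480, the forward pass of net reduces the height and width to 1/32 of the original, namely 10 and 15.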

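    # Keep everything except the last two layers (global average pooling and fully connected)
    net = nn.Sequential(*list(pretrained_net.children())[:-2])
    num_classes = 21
    X = torch.rand(size=(1, 3, 320, 480))
    Y = net(X)
    Y.shape  # torch.Size([1, 512, 10, 15])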
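The factor of 32 can be checked by hand with the usual output-size formula for convolution and pooling layers. The sketch below assumes the standard ResNet-18 layout, in which five layers have stride 2 (conv1, the max-pooling layer, and the first convolution of layer2, layer3 and layer4) and all other layers preserve the spatial size. The helper names are illustrative.

```python
def conv_out_size(n, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the five stride-2 layers assumed above
STRIDE2_LAYERS = [
    (7, 2, 3),  # conv1
    (3, 2, 1),  # maxpool
    (3, 2, 1),  # first conv of layer2
    (3, 2, 1),  # first conv of layer3
    (3, 2, 1),  # first conv of layer4
]


def trunk_out_size(n):
    """Spatial size after passing a side of length n through all stride-2 layers."""
    for kernel, stride, padding in STRIDE2_LAYERS:
        n = conv_out_size(n, kernel, stride, padding)
    return n


print(trunk_out_size(320), trunk_out_size(480))  # 10 15
```

Five halvings give 2^5 = 32, which matches the 10 × 15 feature map.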

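Next, a 1×1 convolutional layer transforms the number of output channels into the number of classes in the Pascal VOC2012 dataset (21). Finally, the height and width of the feature map must be increased 32-fold to recover the height and width of the input image.

Recall how the output shape of a convolutional layer is computed: since $(320-64+16\times 2+32)/32=10$ and $(480-64+16\times 2+32)/32=15$, we construct a transposed convolutional layer with stride $32$, kernel height and width $64$, and padding $16$. The reasoning is that the feature map produced by the forward pass of net (h=10, w=15) must be restored to the input shape (h=320, w=480), so its height and width must each grow 32-fold. By the shape formula for transposed convolution (see the previous post, "Mu Li's Dive into Deep Learning V2: Transposed Convolution, with Code"), this requires K = 2P + S; with K = 64 and S = 32, P = 16. In general, if the stride is $s$, the padding is $s/2$ (assuming $s/2$ is an integer), and the kernel height and width are $2s$, the transposed convolution enlarges the input height and width by a factor of $s$.

The two layers are appended to net as follows (the same lines appear in the complete code in section 8):

    net.add_module('final_conv2d', nn.Conv2d(in_channels=512, out_channels=num_classes, kernel_size=1))
    net.add_module('transposed_conv2d',
                   nn.ConvTranspose2d(in_channels=num_classes, out_channels=num_classes,
                                      kernel_size=64, padding=16, stride=32))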
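The choice of kernel size, stride and padding can be verified with the output-size formula of a transposed convolution (no output padding, dilation 1). This is a small arithmetic sketch; conv_transpose_out_size is a hypothetical helper name.

```python
def conv_transpose_out_size(n, kernel, stride, padding):
    """Output length of a transposed convolution along one dimension
    (assuming no output padding and dilation 1)."""
    return (n - 1) * stride - 2 * padding + kernel


print(conv_transpose_out_size(10, kernel=64, stride=32, padding=16))  # 320
print(conv_transpose_out_size(15, kernel=64, stride=32, padding=16))  # 480

# General rule: kernel = 2 * s and padding = s // 2 enlarge the input s-fold
for s in (2, 4, 8, 32):
    for n in (1, 7, 10):
        assert conv_transpose_out_size(n, kernel=2 * s, stride=s, padding=s // 2) == n * s
```

Setting the output length equal to n·S in this formula gives exactly the condition K = 2P + S.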

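3. Initializing the transposed convolutional layer

In image processing, an image sometimes needs to be enlarged, that is, upsampled. Bilinear interpolation is one of the common upsampling methods, and it is also often used to initialize transposed convolutional layers.

To explain bilinear interpolation, suppose that, given an input image, we want to compute every pixel of the upsampled output image. First, map the output coordinates (x, y) to input coordinates (x′, y′), for example according to the ratio of the input size to the output size. Note that the mapped x′ and y′ are real numbers. Next, find the four pixels closest to (x′, y′) on the input image. Finally, compute the output pixel at (x, y) from these four input pixels and their relative distances to (x′, y′).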
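The three steps above can be sketched directly in plain Python. The coordinate mapping is an assumption of this sketch: it maps output corners exactly onto input corners, which is only one of several common conventions. The function name bilinear_upsample is illustrative.

```python
def bilinear_upsample(img, out_h, out_w):
    """Upsample a 2-D list img to out_h x out_w by bilinear interpolation.

    Assumption: output corners map onto input corners,
    i.e. x' = x * (in_w - 1) / (out_w - 1), and likewise for y.
    """
    in_h, in_w = len(img), len(img[0])
    scale_y = (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
    scale_x = (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
    out = []
    for y in range(out_h):
        src_y = y * scale_y                # step 1: real-valued input coordinate
        y0 = min(int(src_y), in_h - 1)     # step 2: neighboring rows
        y1 = min(y0 + 1, in_h - 1)
        dy = src_y - y0
        row = []
        for x in range(out_w):
            src_x = x * scale_x
            x0 = min(int(src_x), in_w - 1)
            x1 = min(x0 + 1, in_w - 1)
            dx = src_x - x0
            # step 3: weight the 4 neighbors by their distance to (src_x, src_y)
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


for row in bilinear_upsample([[0.0, 1.0], [2.0, 3.0]], 3, 3):
    print(row)
# [0.0, 0.5, 1.0]
# [1.0, 1.5, 2.0]
# [2.0, 2.5, 3.0]
```

The center value 1.5 is the average of all four input pixels, because the mapped coordinate (0.5, 0.5) is equally far from each of them.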

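Upsampling by bilinear interpolation can be implemented with a transposed convolutional layer whose kernel is constructed by the following bilinear_weight() function.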

    def bilinear_weight(in_channels, out_channels, kernel_size):
        """Build a transposed-convolution weight that performs bilinear upsampling."""
        factor = (kernel_size + 1) // 2
        if kernel_size % 2 == 1:
            center = factor - 1
        else:
            center = factor - 0.5
        # Row and column index grids, broadcast against each other below
        og = (torch.arange(kernel_size).reshape(-1, 1),
              torch.arange(kernel_size).reshape(1, -1))
        # 2-D bilinear filter: product of two 1-D triangular profiles
        filt = (1 - torch.abs(og[0] - center) / factor) * (1 - torch.abs(og[1] - center) / factor)
        # ConvTranspose2d weights have shape (in_channels, out_channels, height, width),
        # the reverse channel order of Conv2d's (out_channels, in_channels, height, width).
        weight = torch.zeros(size=(in_channels, out_channels, kernel_size, kernel_size))
        # Channel i maps only to channel i (this assumes in_channels == out_channels)
        weight[range(in_channels), range(out_channels), :, :] = filt
        return weight
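To see what values the filter actually contains, the following sketch recomputes the one-dimensional profile used inside bilinear_weight() with plain Python floats. The 2-D filter is the outer product of this profile with itself. bilinear_profile is a hypothetical helper written only for this illustration.

```python
def bilinear_profile(kernel_size):
    """1-D profile used by bilinear_weight(); the 2-D filter is its outer product."""
    factor = (kernel_size + 1) // 2
    center = factor - 1 if kernel_size % 2 == 1 else factor - 0.5
    return [1 - abs(i - center) / factor for i in range(kernel_size)]


profile = bilinear_profile(4)
print(profile)  # [0.25, 0.75, 0.75, 0.25]

kernel = [[a * b for b in profile] for a in profile]
for row in kernel:
    print(row)
```

For kernel size 4 and stride 2, the profile entries at even positions (0.25 + 0.75) and at odd positions (0.75 + 0.25) each sum to 1, so every interior output pixel is a weighted average of nearby input pixels.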

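Construct a transposed convolutional layer that doubles the height and width of its input, and initialize its kernel with the bilinear_weight() function.

Note that in PyTorch the weight of ConvTranspose2d() has shape (input_channels, output_channels, height, width), whereas the weight of Conv2d() has shape (output_channels, input_channels, height, width): the positions of input_channels and output_channels are swapped.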

    conv_trans = nn.ConvTranspose2d(in_channels=3, out_channels=3, kernel_size=4,
                                    stride=2, padding=1, bias=False)
    conv_trans.weight.data.copy_(bilinear_weight(3, 3, 4))
    conv_trans.weight.data

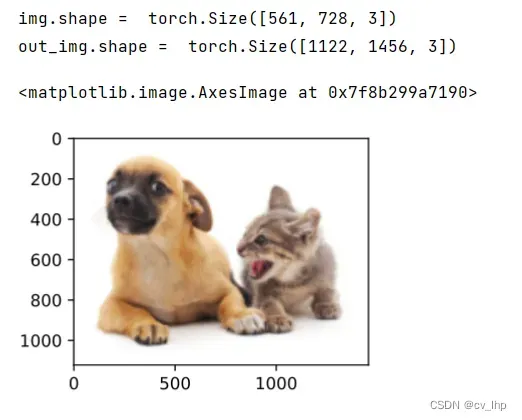

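Read the image X and denote the upsampled result by Y. As the figure shows, the transposed convolutional layer doubles both the height and the width of the image. Apart from the different scales on the axes, the image enlarged by bilinear interpolation looks the same as the original (note that the channel dimension must be moved to the last position before an image can be displayed).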

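    img = torchvision.transforms.ToTensor()(d2l.torch.Image.open('../images/catdog.jpg'))
    X = img.unsqueeze(0)  # add a batch dimension
    Y = conv_trans(X)
    out_img = Y[0].permute(1, 2, 0).detach()  # (C, H, W) -> (H, W, C) for display
    d2l.torch.set_figsize()
    print('img.shape = ', img.permute(1, 2, 0).shape)
    d2l.torch.plt.imshow(img.permute(1, 2, 0))
    print('out_img.shape = ', out_img.shape)
    d2l.torch.plt.imshow(out_img)

    # Initialize the FCN's transposed convolutional layer with bilinear upsampling weights
    conv_transpose_weight = bilinear_weight(num_classes, num_classes, 64)
    net.transposed_conv2d.weight.data.copy_(conv_transpose_weight)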

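4. Reading the dataset

Read the semantic segmentation dataset as described in the earlier post ("Mu Li's Dive into Deep Learning V2: Semantic Segmentation and Loading the Pascal VOC2012 Dataset, with Code"), specifying a random crop shape of 320×480: both the height and the width are divisible by 32.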

    batch_size, crop_size = 32, (320, 480)
    train_iter, val_iter = d2l.torch.load_data_voc(batch_size, crop_size)

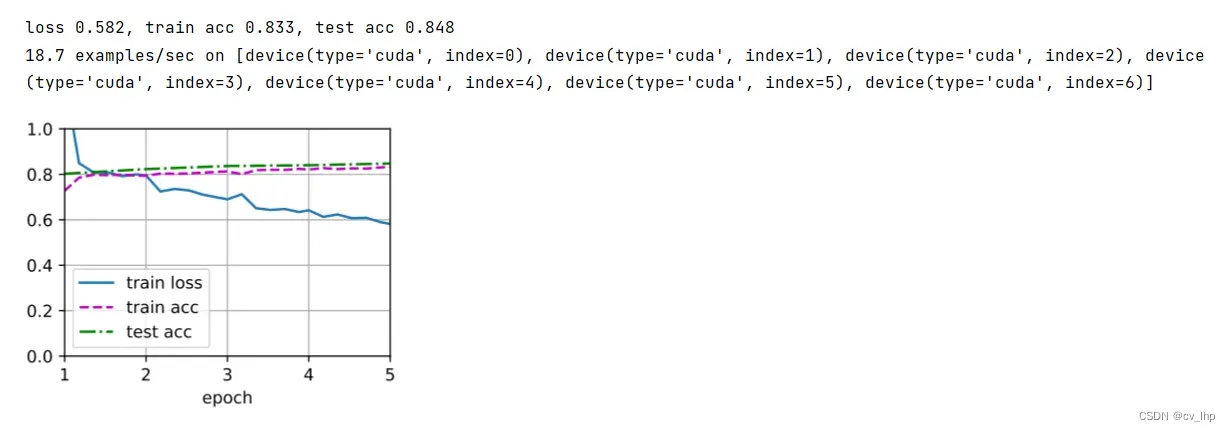

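5. Training the model

Now train the fully convolutional network. The loss function and the accuracy computation are not fundamentally different from those used in image classification. The only change is that accuracy is computed from whether the predicted class of each pixel is correct. The training results are shown in the figure.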

    def loss(inputs, targets):
        # Per-pixel cross-entropy, averaged over height and width: one loss value per image
        return F.cross_entropy(inputs, targets, reduction='none').mean(1).mean(1)

    num_epochs, lr, weight_decay, devices = 5, 1e-3, 1e-3, d2l.torch.try_all_gpus()
    optim = torch.optim.SGD(params=net.parameters(), lr=lr, weight_decay=weight_decay)
    d2l.torch.train_ch13(net, train_iter, val_iter, loss, optim, num_epochs, devices)
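As a rough illustration of what the loss and the accuracy measure for a single image, the sketch below computes the mean per-pixel cross-entropy and the fraction of correctly classified pixels with plain Python. The function names and the tiny input are assumptions made for this example; they do not replace the tensor code above.

```python
import math


def pixel_cross_entropy(scores, labels):
    """Mean per-pixel cross-entropy for one image.

    scores: [num_classes][H][W] raw class scores, labels: [H][W] class indices.
    """
    num_classes = len(scores)
    height, width = len(labels), len(labels[0])
    total = 0.0
    for r in range(height):
        for c in range(width):
            logits = [scores[k][r][c] for k in range(num_classes)]
            m = max(logits)  # subtract the maximum for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
            total += log_sum_exp - logits[labels[r][c]]  # -log softmax of the true class
    return total / (height * width)


def pixel_accuracy(scores, labels):
    """Fraction of pixels whose highest-scoring class equals the label."""
    num_classes = len(scores)
    height, width = len(labels), len(labels[0])
    correct = sum(
        max(range(num_classes), key=lambda k: scores[k][r][c]) == labels[r][c]
        for r in range(height) for c in range(width)
    )
    return correct / (height * width)


# Hypothetical 2-class scores on a 1 x 2 image; the second pixel is misclassified
scores = [[[2.0, 0.0]], [[0.0, 1.0]]]
labels = [[0, 0]]
print(pixel_cross_entropy(scores, labels), pixel_accuracy(scores, labels))
```

Averaging over height and width first gives one loss value per image, which is what the two .mean(1) calls in loss() do for a whole batch.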

    # For reference: the d2l helper functions called by the training code above
    def train_batch_ch13(net, X, y, loss, trainer, devices):
        """Train for a minibatch with multiple GPUs (defined in Chapter 13).

        Defined in :numref:`sec_image_augmentation`"""
        if isinstance(X, list):
            # Required for BERT fine-tuning (to be covered later)
            X = [x.to(devices[0]) for x in X]
        else:
            X = X.to(devices[0])
        y = y.to(devices[0])
        net.train()
        trainer.zero_grad()
        pred = net(X)
        l = loss(pred, y)
        l.sum().backward()
        trainer.step()
        train_loss_sum = l.sum()
        train_acc_sum = d2l.torch.accuracy(pred, y)
        return train_loss_sum, train_acc_sum

    def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
                   devices=d2l.torch.try_all_gpus()):
        """Train a model with multiple GPUs (defined in Chapter 13).

        Defined in :numref:`sec_image_augmentation`"""
        timer, num_batches = d2l.torch.Timer(), len(train_iter)
        animator = d2l.torch.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                                      legend=['train loss', 'train acc', 'test acc'])
        net = nn.DataParallel(net, device_ids=devices).to(devices[0])
        for epoch in range(num_epochs):
            # Sum of training loss, sum of training accuracy, no. of examples,
            # no. of predictions
            metric = d2l.torch.Accumulator(4)
            for i, (features, labels) in enumerate(train_iter):
                timer.start()
                l, acc = train_batch_ch13(
                    net, features, labels, loss, trainer, devices)
                metric.add(l, acc, labels.shape[0], labels.numel())
                timer.stop()
                if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:
                    animator.add(epoch + (i + 1) / num_batches,
                                 (metric[0] / metric[2], metric[1] / metric[3],
                                  None))
            test_acc = d2l.torch.evaluate_accuracy_gpu(net, test_iter)
            animator.add(epoch + 1, (None, None, test_acc))
        print(f'loss {metric[0] / metric[2]:.3f}, train acc '
              f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')
        print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '
              f'{str(devices)}')

    def evaluate_accuracy_gpu(net, data_iter, device=None):
        """Compute the accuracy for a model on a dataset using a GPU.

        Defined in :numref:`sec_lenet`"""
        if isinstance(net, nn.Module):
            net.eval()  # Set the model to evaluation mode
            if not device:
                device = next(iter(net.parameters())).device
        # No. of correct predictions, no. of predictions
        metric = d2l.torch.Accumulator(2)
        with torch.no_grad():
            for X, y in data_iter:
                if isinstance(X, list):
                    # Required for BERT fine-tuning (to be covered later)
                    X = [x.to(device) for x in X]
                else:
                    X = X.to(device)
                y = y.to(device)
                metric.add(d2l.torch.accuracy(net(X), y), d2l.torch.size(y))
        return metric[0] / metric[1]

    def accuracy(y_hat, y):
        """Compute the number of correct predictions.

        Defined in :numref:`sec_softmax_scratch`"""
        if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
            y_hat = d2l.torch.argmax(y_hat, axis=1)
        cmp = d2l.torch.astype(y_hat, y.dtype) == y
        return float(d2l.torch.reduce_sum(d2l.torch.astype(cmp, y.dtype)))

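6. Prediction

At prediction time, the input image must be standardized in each channel and converted into the four-dimensional input format that a convolutional neural network expects.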

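    def predict(img):
        # Standardize each channel, then add a batch dimension: (C, H, W) -> (1, C, H, W)
        X = val_iter.dataset.normalize_image(img).unsqueeze(0)
        # The predicted class of each pixel is the argmax over the channel dimension
        preds = net(X.to(devices[0])).argmax(dim=1)
        return preds.reshape(preds.shape[1], preds.shape[2])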
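The standardization step can be illustrated on a single pixel. The sketch below assumes that normalize_image scales 8-bit values to [0, 1] and then subtracts the ImageNet channel means and divides by the channel standard deviations that are commonly paired with torchvision's pretrained models. Treat the constants and the helper names as assumptions rather than as a description of the dataset class.

```python
# ImageNet channel statistics (assumed to match what normalize_image applies)
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Standardize one RGB pixel given as 8-bit values in [0, 255]."""
    return tuple((v / 255 - m) / s for v, m, s in zip(rgb, MEAN, STD))


def to_batch(image):
    """Wrap a single [C][H][W] image into a batch of size 1: [1][C][H][W]."""
    return [image]


print(normalize_pixel((255, 255, 255)))  # a white pixel after standardization
print(len(to_batch([[[0.0]]])))          # batch dimension of size 1
```

After this step each channel has roughly zero mean and unit variance over natural images, which is the input distribution the pretrained layers were trained on.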

To visualize a prediction, map the predicted class of each pixel back to the RGB color it is labeled with in the dataset.

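    def label2image(pred):
        # Keep the colormap on the same device as pred so that indexing also works on a GPU
        colormap = torch.tensor(d2l.torch.VOC_COLORMAP, device=pred.device)
        pred = pred.long()
        # Index the colormap with the class map: (H, W) -> (H, W, 3)
        return colormap[pred, :]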
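The lookup performed by label2image() is easier to see on a toy example. The sketch below uses only the first three entries of the Pascal VOC colormap (background, aeroplane, bicycle) and plain Python lists; label_to_rgb is an illustrative name.

```python
# First three entries of the Pascal VOC colormap: background, aeroplane, bicycle
COLORMAP = [(0, 0, 0), (128, 0, 0), (0, 128, 0)]


def label_to_rgb(class_map, colormap=COLORMAP):
    """Replace every class index in an H x W map by its RGB triple."""
    return [[colormap[k] for k in row] for row in class_map]


print(label_to_rgb([[0, 1], [2, 1]]))
# [[(0, 0, 0), (128, 0, 0)], [(0, 128, 0), (128, 0, 0)]]
```

Indexing a tensor colormap with a tensor of class indices does the same thing for all pixels at once.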

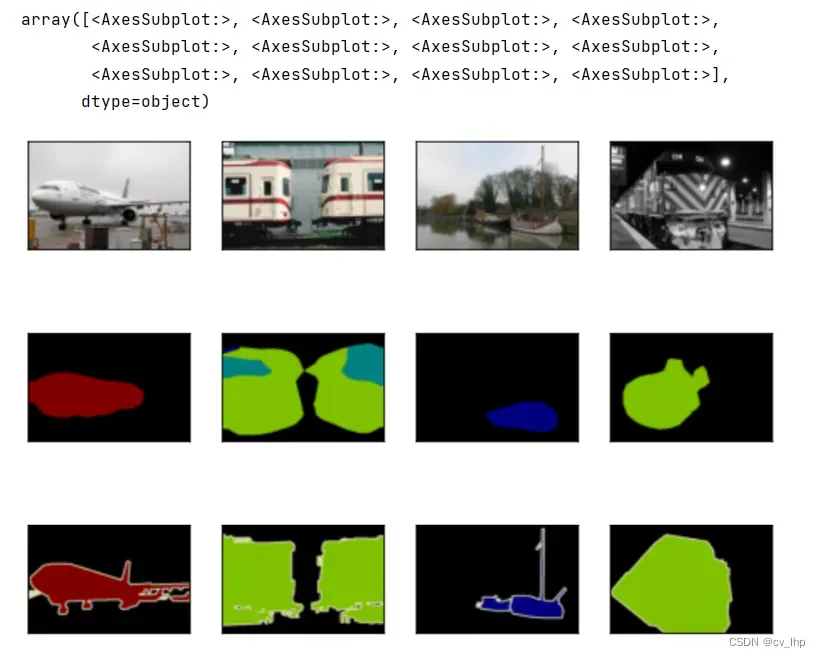

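Images in the test dataset vary in size and shape. Because the model uses a transposed convolutional layer with stride 32, when the height or width of an input image is not divisible by 32, the height or width of the transposed convolutional layer's output deviates from the input size. To solve this, we can crop multiple rectangular regions from the image whose heights and widths are integer multiples of 32 and run the forward pass on the pixels of each region separately. Note that the union of these regions must completely cover the input image. When a pixel is covered by several regions, the average of the transposed convolutional layer's outputs for that pixel across the different forward passes can serve as the input to the softmax operation that predicts its class.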
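One possible way to choose such regions along a single image side is sketched below: windows are placed side by side, and the last window is shifted back so that it ends at the image border. This is only one tiling strategy under the stated assumptions (a fixed window size that is a multiple of 32 and an image at least as large as the window), and the helper names are hypothetical.

```python
def tile_starts(length, window):
    """Start offsets of windows of size window that together cover [0, length).

    Windows are spaced window apart; the last one is shifted back so that it
    ends exactly at length, which makes it overlap its neighbor.
    """
    if window > length:
        raise ValueError("image side is smaller than the window; pad it first")
    starts = list(range(0, length - window + 1, window))
    if length > starts[-1] + window:
        starts.append(length - window)
    return starts


def coverage_counts(length, window):
    """How many windows cover each position (the divisor when averaging outputs)."""
    counts = [0] * length
    for start in tile_starts(length, window):
        for i in range(start, start + window):
            counts[i] += 1
    return counts


print(tile_starts(500, 320))  # [0, 180]
counts = coverage_counts(500, 320)
print(min(counts), max(counts))  # 1 2
```

Positions covered twice are the ones whose outputs would be averaged before the softmax.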

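For simplicity, we read only a few larger test images and crop a 320×480 region starting from the top-left corner of each image for prediction. For each test image we show the cropped region first, then the prediction, and finally the ground-truth labels. The results are shown in the figure.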

    voc_dir = d2l.torch.download_extract('voc2012', 'VOCdevkit/VOC2012')
    test_images, test_labels = d2l.torch.read_voc_images(voc_dir, is_train=False)
    n, images = 4, []
    for i in range(n):
        crop_rect = (0, 0, 320, 480)  # (top, left, height, width)
        crop_img = torchvision.transforms.functional.crop(test_images[i], *crop_rect)
        crop_label = torchvision.transforms.functional.crop(test_labels[i], *crop_rect)
        pred_image = label2image(predict(crop_img))
        images += [crop_img.permute(1, 2, 0), pred_image.cpu(), crop_label.permute(1, 2, 0)]
    # Rows: cropped inputs, predictions, ground-truth labels
    d2l.torch.show_images(images[::3] + images[1::3] + images[2::3], 3, n, scale=2)

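7. Summary

- A fully convolutional network first uses a convolutional neural network to extract image features, then uses a 1×1 convolutional layer to transform the number of channels into the number of classes, and finally uses a transposed convolutional layer to transform the height and width of the feature map to the size of the input image. The number of output channels of the transposed convolutional layer equals the number of classes, and the value in each channel at a given position is the prediction score for the corresponding class, so the output is a pixel-level class prediction, that is, a semantic segmentation of the input image.

- In a fully convolutional network, the weights of the transposed convolutional layer can be initialized to perform bilinear-interpolation upsampling.

- A 1×1 convolutional layer mixes the channel information at each spatial position and changes the number of channels; when it reduces the channel count, it also reduces later computation.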

8. Complete code

    import torch
    import d2l.torch
    import torchvision
    from torch import nn
    from torch.nn import functional as F

    # Newer torchvision releases deprecate pretrained=True in favor of the weights= argument.
    pretrained_net = torchvision.models.resnet18(pretrained=True)
    list(pretrained_net.children())
    # Drop the global average pooling and fully connected layers
    net = nn.Sequential(*list(pretrained_net.children())[:-2])
    num_classes = 21
    X = torch.rand(size=(1, 3, 320, 480))
    Y = net(X)
    Y.shape
    net.add_module('final_conv2d', nn.Conv2d(in_channels=512, out_channels=num_classes, kernel_size=1))
    net.add_module('transposed_conv2d',
                   nn.ConvTranspose2d(in_channels=num_classes, out_channels=num_classes,
                                      kernel_size=64, padding=16, stride=32))
    net

    def bilinear_weight(in_channels, out_channels, kernel_size):
        factor = (kernel_size + 1) // 2
        if kernel_size % 2 == 1:
            center = factor - 1
        else:
            center = factor - 0.5
        og = (torch.arange(kernel_size).reshape(-1, 1), torch.arange(kernel_size).reshape(1, -1))
        filt = (1 - torch.abs(og[0] - center) / factor) * (1 - torch.abs(og[1] - center) / factor)
        weight = torch.zeros(size=(in_channels, out_channels, kernel_size, kernel_size))
        weight[range(in_channels), range(out_channels), :, :] = filt
        return weight

    conv_trans = nn.ConvTranspose2d(in_channels=3, out_channels=3, kernel_size=4,
                                    stride=2, padding=1, bias=False)
    conv_trans.weight.data.copy_(bilinear_weight(3, 3, 4))
    conv_trans.weight.data
    img = torchvision.transforms.ToTensor()(d2l.torch.Image.open('../images/catdog.jpg'))
    X = img.unsqueeze(0)
    Y = conv_trans(X)
    out_img = Y[0].permute(1, 2, 0).detach()
    d2l.torch.set_figsize()
    print('img.shape = ', img.permute(1, 2, 0).shape)
    d2l.torch.plt.imshow(img.permute(1, 2, 0))
    print('out_img.shape = ', out_img.shape)
    d2l.torch.plt.imshow(out_img)

    conv_transpose_weight = bilinear_weight(num_classes, num_classes, 64)
    net.transposed_conv2d.weight.data.copy_(conv_transpose_weight)

    batch_size, crop_size = 32, (320, 480)
    train_iter, val_iter = d2l.torch.load_data_voc(batch_size, crop_size)

    def loss(inputs, targets):
        return F.cross_entropy(inputs, targets, reduction='none').mean(1).mean(1)

    num_epochs, lr, weight_decay, devices = 5, 1e-3, 1e-3, d2l.torch.try_all_gpus()
    optim = torch.optim.SGD(params=net.parameters(), lr=lr, weight_decay=weight_decay)
    d2l.torch.train_ch13(net, train_iter, val_iter, loss, optim, num_epochs, devices)

    # For reference: the d2l helper functions called by the training code above
    def train_batch_ch13(net, X, y, loss, trainer, devices):
        """Train for a minibatch with multiple GPUs (defined in Chapter 13).

        Defined in :numref:`sec_image_augmentation`"""
        if isinstance(X, list):
            # Required for BERT fine-tuning (to be covered later)
            X = [x.to(devices[0]) for x in X]
        else:
            X = X.to(devices[0])
        y = y.to(devices[0])
        net.train()
        trainer.zero_grad()
        pred = net(X)
        l = loss(pred, y)
        l.sum().backward()
        trainer.step()
        train_loss_sum = l.sum()
        train_acc_sum = d2l.torch.accuracy(pred, y)
        return train_loss_sum, train_acc_sum

    def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
                   devices=d2l.torch.try_all_gpus()):
        """Train a model with multiple GPUs (defined in Chapter 13).

        Defined in :numref:`sec_image_augmentation`"""
        timer, num_batches = d2l.torch.Timer(), len(train_iter)
        animator = d2l.torch.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                                      legend=['train loss', 'train acc', 'test acc'])
        net = nn.DataParallel(net, device_ids=devices).to(devices[0])
        for epoch in range(num_epochs):
            # Sum of training loss, sum of training accuracy, no. of examples,
            # no. of predictions
            metric = d2l.torch.Accumulator(4)
            for i, (features, labels) in enumerate(train_iter):
                timer.start()
                l, acc = train_batch_ch13(
                    net, features, labels, loss, trainer, devices)
                metric.add(l, acc, labels.shape[0], labels.numel())
                timer.stop()
                if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:
                    animator.add(epoch + (i + 1) / num_batches,
                                 (metric[0] / metric[2], metric[1] / metric[3],
                                  None))
            test_acc = d2l.torch.evaluate_accuracy_gpu(net, test_iter)
            animator.add(epoch + 1, (None, None, test_acc))
        print(f'loss {metric[0] / metric[2]:.3f}, train acc '
              f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')
        print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '
              f'{str(devices)}')

    def evaluate_accuracy_gpu(net, data_iter, device=None):
        """Compute the accuracy for a model on a dataset using a GPU.

        Defined in :numref:`sec_lenet`"""
        if isinstance(net, nn.Module):
            net.eval()  # Set the model to evaluation mode
            if not device:
                device = next(iter(net.parameters())).device
        # No. of correct predictions, no. of predictions
        metric = d2l.torch.Accumulator(2)
        with torch.no_grad():
            for X, y in data_iter:
                if isinstance(X, list):
                    # Required for BERT fine-tuning (to be covered later)
                    X = [x.to(device) for x in X]
                else:
                    X = X.to(device)
                y = y.to(device)
                metric.add(d2l.torch.accuracy(net(X), y), d2l.torch.size(y))
        return metric[0] / metric[1]

    def accuracy(y_hat, y):
        """Compute the number of correct predictions.

        Defined in :numref:`sec_softmax_scratch`"""
        if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
            y_hat = d2l.torch.argmax(y_hat, axis=1)
        cmp = d2l.torch.astype(y_hat, y.dtype) == y
        return float(d2l.torch.reduce_sum(d2l.torch.astype(cmp, y.dtype)))

    def predict(img):
        X = val_iter.dataset.normalize_image(img).unsqueeze(0)
        preds = net(X.to(devices[0])).argmax(dim=1)
        return preds.reshape(preds.shape[1], preds.shape[2])

    def label2image(pred):
        # Keep the colormap on the same device as pred so that indexing also works on a GPU
        colormap = torch.tensor(d2l.torch.VOC_COLORMAP, device=pred.device)
        pred = pred.long()
        return colormap[pred, :]

    voc_dir = d2l.torch.download_extract('voc2012', 'VOCdevkit/VOC2012')
    test_images, test_labels = d2l.torch.read_voc_images(voc_dir, is_train=False)
    n, images = 4, []
    for i in range(n):
        crop_rect = (0, 0, 320, 480)  # (top, left, height, width)
        crop_img = torchvision.transforms.functional.crop(test_images[i], *crop_rect)
        crop_label = torchvision.transforms.functional.crop(test_labels[i], *crop_rect)
        pred_image = label2image(predict(crop_img))
        images += [crop_img.permute(1, 2, 0), pred_image.cpu(), crop_label.permute(1, 2, 0)]
    d2l.torch.show_images(images[::3] + images[1::3] + images[2::3], 3, n, scale=2)

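9. Links

Semantic segmentation, part 1: Mu Li's Dive into Deep Learning V2: Semantic Segmentation and Loading the Pascal VOC2012 Dataset, with Code

Semantic segmentation, part 2: Mu Li's Dive into Deep Learning V2: Transposed Convolution, with Code

Semantic segmentation, part 3: Mu Li's Dive into Deep Learning V2: Fully Convolutional Networks (FCN) for Semantic Segmentation, with Code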
