This article focuses on the object-detection part of the code.

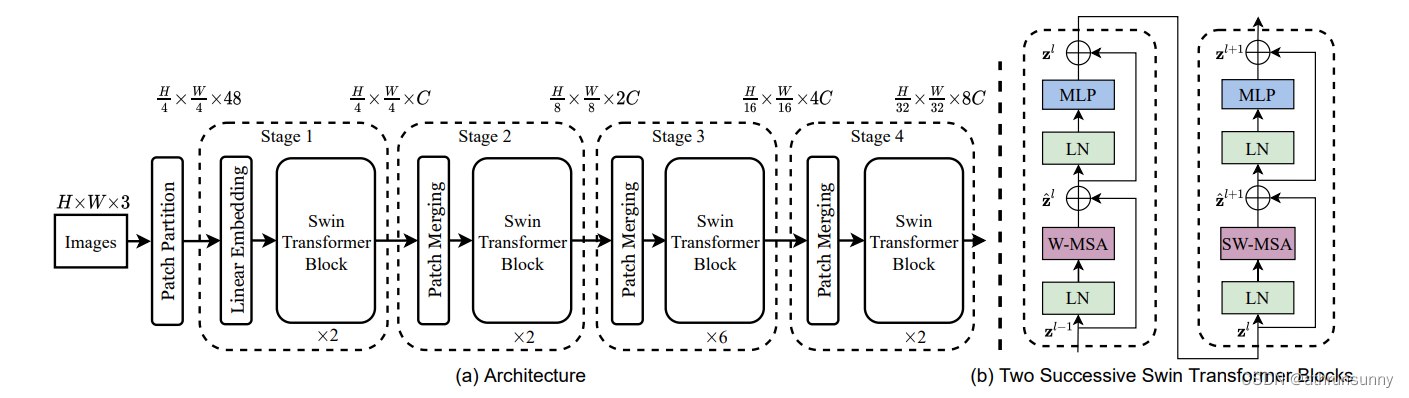

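Before we start, take a look at the Swin Transformer architecture diagram.

We begin with model training. The training script is tools/train.py under the project root. Its overall structure is simple, with just one main function. To make it easy to run, I set the config argument directly in the run configuration to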

\configs\swin\mask_rcnn_swin_tiny_patch4_window7_mstrain_480-800_adamw_1x_coco.py

In main, the part to focus on is the code after line 161. Here is the snippet:

model = build_detector(

cfg.model,

train_cfg=cfg.get('train_cfg'),

test_cfg=cfg.get('test_cfg'))

datasets = [build_dataset(cfg.data.train)]

if len(cfg.workflow) == 2:

val_dataset = copy.deepcopy(cfg.data.val)

val_dataset.pipeline = cfg.data.train.pipeline

datasets.append(build_dataset(val_dataset))

if cfg.checkpoint_config is not None:

# save mmdet version, config file content and class names in

# checkpoints as meta data

cfg.checkpoint_config.meta = dict(

mmdet_version=__version__ + get_git_hash()[:7],

CLASSES=datasets[0].CLASSES)

# add an attribute for visualization convenience

model.CLASSES = datasets[0].CLASSES

train_detector(

model,

datasets,

cfg,

distributed=distributed,

validate=(not args.no_validate),

timestamp=timestamp,

meta=meta)

This part mainly builds the model, builds the datasets, and calls the training function.

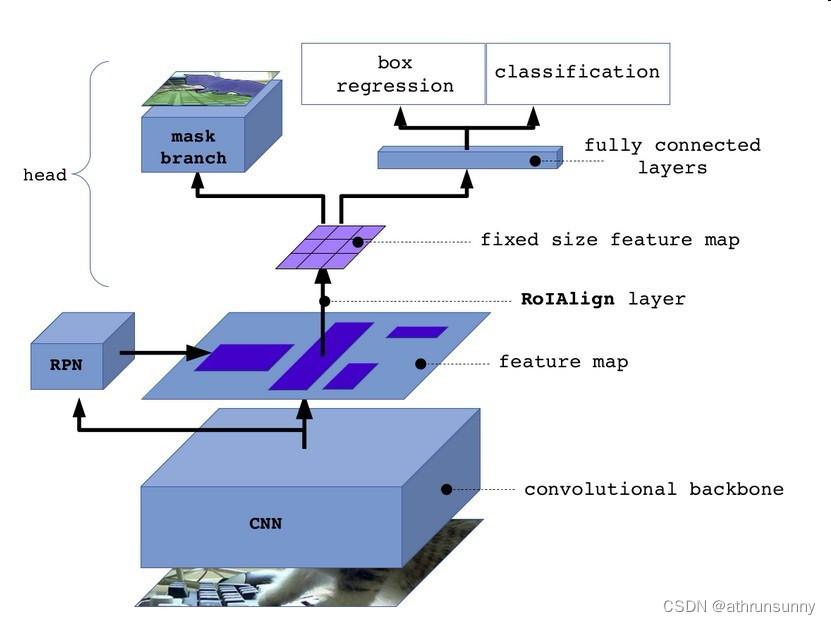

Mask R-CNN is used here. When the model is built, the corresponding components in the following files are constructed in turn:

mmdet/models/detectors/mask_rcnn.py: the MaskRCNN class

mmdet/models/detectors/two_stage.py: the TwoStageDetector class

mmdet/models/backbones/swin_transformer.py: the SwinTransformer class (the key algorithm)

mmdet/models/necks/fpn.py: the FPN class

mmdet/models/dense_heads/rpn_head.py: the RPNHead class, which also builds several loss functions and helper components

mmdet/models/roi_heads/base_roi_head.py: the BaseRoIHead class

mmdet/models/roi_heads/bbox_heads/convfc_bbox_head.py: the ConvFCBBoxHead class

mmdet/models/losses/cross_entropy_loss.py: the CrossEntropyLoss class

mmdet/models/losses/smooth_l1_loss.py: the L1Loss class

mmdet/core/bbox/assigners/max_iou_assigner.py: the MaxIoUAssigner class

mmdet/core/bbox/samplers/random_sampler.py: the RandomSampler class

The model after building:

MaskRCNN(

(backbone): SwinTransformer(

(patch_embed): PatchEmbed(

(proj): Conv2d(3, 96, kernel_size=(4, 4), stride=(4, 4))

(norm): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

)

(pos_drop): Dropout(p=0.0, inplace=False)

(layers): ModuleList(

(0): BasicLayer(

(blocks): ModuleList(

(0): SwinTransformerBlock(

(norm1): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=96, out_features=288, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=96, out_features=96, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): Identity()

(norm2): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=96, out_features=384, bias=True)

(act): GELU()

(fc2): Linear(in_features=384, out_features=96, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(1): SwinTransformerBlock(

(norm1): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=96, out_features=288, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=96, out_features=96, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=96, out_features=384, bias=True)

(act): GELU()

(fc2): Linear(in_features=384, out_features=96, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

)

(downsample): PatchMerging(

(reduction): Linear(in_features=384, out_features=192, bias=False)

(norm): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

)

)

(1): BasicLayer(

(blocks): ModuleList(

(0): SwinTransformerBlock(

(norm1): LayerNorm((192,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=192, out_features=576, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=192, out_features=192, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((192,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=192, out_features=768, bias=True)

(act): GELU()

(fc2): Linear(in_features=768, out_features=192, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(1): SwinTransformerBlock(

(norm1): LayerNorm((192,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=192, out_features=576, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=192, out_features=192, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((192,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=192, out_features=768, bias=True)

(act): GELU()

(fc2): Linear(in_features=768, out_features=192, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

)

(downsample): PatchMerging(

(reduction): Linear(in_features=768, out_features=384, bias=False)

(norm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

)

)

(2): BasicLayer(

(blocks): ModuleList(

(0): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(1): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(2): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(3): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(4): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(5): SwinTransformerBlock(

(norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=384, out_features=1152, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=384, out_features=384, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=384, out_features=1536, bias=True)

(act): GELU()

(fc2): Linear(in_features=1536, out_features=384, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

)

(downsample): PatchMerging(

(reduction): Linear(in_features=1536, out_features=768, bias=False)

(norm): LayerNorm((1536,), eps=1e-05, elementwise_affine=True)

)

)

(3): BasicLayer(

(blocks): ModuleList(

(0): SwinTransformerBlock(

(norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=768, out_features=2304, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(1): SwinTransformerBlock(

(norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(attn): WindowAttention(

(qkv): Linear(in_features=768, out_features=2304, bias=True)

(attn_drop): Dropout(p=0.0, inplace=False)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=False)

(softmax): Softmax(dim=-1)

)

(drop_path): DropPath()

(norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

)

)

)

(norm0): LayerNorm((96,), eps=1e-05, elementwise_affine=True)

(norm1): LayerNorm((192,), eps=1e-05, elementwise_affine=True)

(norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)

(norm3): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

)

(neck): FPN(

(lateral_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(96, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): ConvModule(

(conv): Conv2d(192, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): ConvModule(

(conv): Conv2d(384, 256, kernel_size=(1, 1), stride=(1, 1))

)

(3): ConvModule(

(conv): Conv2d(768, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(fpn_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(1): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(2): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(3): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

)

(rpn_head): RPNHead(

(loss_cls): CrossEntropyLoss()

(loss_bbox): L1Loss()

(rpn_conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(rpn_cls): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1))

(rpn_reg): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1))

)

(roi_head): StandardRoIHead(

(bbox_roi_extractor): SingleRoIExtractor(

(roi_layers): ModuleList(

(0): RoIAlign(output_size=(7, 7), spatial_scale=0.25, sampling_ratio=0, pool_mode=avg, aligned=True, use_torchvision=False)

(1): RoIAlign(output_size=(7, 7), spatial_scale=0.125, sampling_ratio=0, pool_mode=avg, aligned=True, use_torchvision=False)

(2): RoIAlign(output_size=(7, 7), spatial_scale=0.0625, sampling_ratio=0, pool_mode=avg, aligned=True, use_torchvision=False)

(3): RoIAlign(output_size=(7, 7), spatial_scale=0.03125, sampling_ratio=0, pool_mode=avg, aligned=True, use_torchvision=False)

)

)

(bbox_head): Shared2FCBBoxHead(

(loss_cls): CrossEntropyLoss()

(loss_bbox): L1Loss()

(fc_cls): Linear(in_features=1024, out_features=21, bias=True)

(fc_reg): Linear(in_features=1024, out_features=80, bias=True)

(shared_convs): ModuleList()

(shared_fcs): ModuleList(

(0): Linear(in_features=12544, out_features=1024, bias=True)

(1): Linear(in_features=1024, out_features=1024, bias=True)

)

(cls_convs): ModuleList()

(cls_fcs): ModuleList()

(reg_convs): ModuleList()

(reg_fcs): ModuleList()

(relu): ReLU(inplace=True)

)

)

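)

The backbone is the essence of Swin Transformer; compare it with the Mask R-CNN architecture diagram.

Swin Transformer simply replaces the CNN in that diagram with transformer blocks and serves as the feature extractor. Enough preamble, time to get to the point. The key algorithms all live in mmdet/models/backbones/swin_transformer.py, and the main body is the SwinTransformer class.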

class SwinTransformer(nn.Module):

""" Swin Transformer backbone.

A PyTorch impl of : `Swin Transformer: Hierarchical Vision Transformer using Shifted Windows` -

https://arxiv.org/pdf/2103.14030

Args:

pretrain_img_size (int): Input image size for training the pretrained model,

used in absolute position embedding. Default 224.

patch_size (int | tuple(int)): Patch size. Default: 4.

in_chans (int): Number of input image channels. Default: 3.

embed_dim (int): Number of linear projection output channels. Default: 96.

depths (tuple[int]): Depths of each Swin Transformer stage.

num_heads (tuple[int]): Number of attention head of each stage.

window_size (int): Window size. Default: 7.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. Default: 4.

qkv_bias (bool): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float): Override default qk scale of head_dim ** -0.5 if set.

drop_rate (float): Dropout rate.

attn_drop_rate (float): Attention dropout rate. Default: 0.

drop_path_rate (float): Stochastic depth rate. Default: 0.2.

norm_layer (nn.Module): Normalization layer. Default: nn.LayerNorm.

ape (bool): If True, add absolute position embedding to the patch embedding. Default: False.

patch_norm (bool): If True, add normalization after patch embedding. Default: True.

out_indices (Sequence[int]): Output from which stages.

frozen_stages (int): Stages to be frozen (stop grad and set eval mode).

-1 means not freezing any parameters.

use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False.

"""

def __init__(self,

pretrain_img_size=224,

patch_size=4,

in_chans=3,

embed_dim=96,

depths=[2, 2, 6, 2],

num_heads=[3, 6, 12, 24],

window_size=7,

mlp_ratio=4.,

qkv_bias=True,

qk_scale=None,

drop_rate=0.,

attn_drop_rate=0.,

drop_path_rate=0.2,

norm_layer=nn.LayerNorm,

ape=False,

patch_norm=True,

out_indices=(0, 1, 2, 3),

frozen_stages=-1,

use_checkpoint=False):

super().__init__()

self.pretrain_img_size = pretrain_img_size

self.num_layers = len(depths)

self.embed_dim = embed_dim

self.ape = ape

self.patch_norm = patch_norm

self.out_indices = out_indices

self.frozen_stages = frozen_stages

# split image into non-overlapping patches

self.patch_embed = PatchEmbed(

patch_size=patch_size, in_chans=in_chans, embed_dim=embed_dim,

norm_layer=norm_layer if self.patch_norm else None)

# absolute position embedding

if self.ape:

pretrain_img_size = to_2tuple(pretrain_img_size)

patch_size = to_2tuple(patch_size)

patches_resolution = [pretrain_img_size[0] // patch_size[0], pretrain_img_size[1] // patch_size[1]]

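# learnable absolute position embedding, added to the output of the linear embedding (only when ape=True)

self.absolute_pos_embed = nn.Parameter(torch.zeros(1, embed_dim, patches_resolution[0], patches_resolution[1]))

# initialize the absolute position embedding parameters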

trunc_normal_(self.absolute_pos_embed, std=.02)

self.pos_drop = nn.Dropout(p=drop_rate)

# stochastic depth

# give every block its own drop-path rate, increasing linearly from 0 to drop_path_rate

dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))] # stochastic depth decay rule

# build layers

self.layers = nn.ModuleList()

# build the four stages

# mlp_ratio: ratio of mlp hidden dim to embedding dim.

# downsample: the first three stages end with a downsampling step (PatchMerging); the fourth stage does not downsample

for i_layer in range(self.num_layers):

layer = BasicLayer(

dim=int(embed_dim * 2 ** i_layer),

depth=depths[i_layer],

num_heads=num_heads[i_layer],

window_size=window_size,

mlp_ratio=mlp_ratio,

qkv_bias=qkv_bias,

qk_scale=qk_scale,

drop=drop_rate,

attn_drop=attn_drop_rate,

drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])],

norm_layer=norm_layer,

downsample=PatchMerging if (i_layer < self.num_layers - 1) else None,

use_checkpoint=use_checkpoint)

self.layers.append(layer)

num_features = [int(embed_dim * 2 ** i) for i in range(self.num_layers)]

self.num_features = num_features

# add a norm layer for each output

for i_layer in out_indices:

layer = norm_layer(num_features[i_layer])

layer_name = f'norm{i_layer}'

self.add_module(layer_name, layer)

self._freeze_stages()

def _freeze_stages(self):

if self.frozen_stages >= 0:

self.patch_embed.eval()

for param in self.patch_embed.parameters():

param.requires_grad = False

if self.frozen_stages >= 1 and self.ape:

self.absolute_pos_embed.requires_grad = False

if self.frozen_stages >= 2:

self.pos_drop.eval()

for i in range(0, self.frozen_stages - 1):

m = self.layers[i]

m.eval()

for param in m.parameters():

param.requires_grad = False

def init_weights(self, pretrained=None):

"""Initialize the weights in backbone.

Args:

pretrained (str, optional): Path to pre-trained weights.

Defaults to None.

"""

def _init_weights(m):

if isinstance(m, nn.Linear):

trunc_normal_(m.weight, std=.02)

if isinstance(m, nn.Linear) and m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.LayerNorm):

nn.init.constant_(m.bias, 0)

nn.init.constant_(m.weight, 1.0)

if isinstance(pretrained, str):

self.apply(_init_weights)

logger = get_root_logger()

load_checkpoint(self, pretrained, strict=False, logger=logger)

elif pretrained is None:

self.apply(_init_weights)

else:

raise TypeError('pretrained must be a str or None')

def forward(self, x):

"""Forward function."""

x = self.patch_embed(x)

Wh, Ww = x.size(2), x.size(3)

if self.ape:

# interpolate the position embedding to the corresponding size

absolute_pos_embed = F.interpolate(self.absolute_pos_embed, size=(Wh, Ww), mode='bicubic')

x = (x + absolute_pos_embed).flatten(2).transpose(1, 2) # B Wh*Ww C

else:

x = x.flatten(2).transpose(1, 2)

x = self.pos_drop(x)

outs = []

for i in range(self.num_layers):

layer = self.layers[i]

x_out, H, W, x, Wh, Ww = layer(x, Wh, Ww)

if i in self.out_indices:

norm_layer = getattr(self, f'norm{i}')

x_out = norm_layer(x_out)

out = x_out.view(-1, H, W, self.num_features[i]).permute(0, 3, 1, 2).contiguous()

outs.append(out)

return tuple(outs)

def train(self, mode=True):

"""Convert the model into training mode while keep layers freezed."""

super(SwinTransformer, self).train(mode)

self._freeze_stages()
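To make the constructor easier to follow, here is a minimal sketch in plain Python (no PyTorch needed) of two things it computes: the channel width of each stage and the per-block drop-path rates. The helper `linspace` is a hypothetical stand-in for `torch.linspace`, and the values are the defaults from the signature above.

```python
def linspace(start, stop, num):
    """Evenly spaced values from start to stop inclusive (stand-in for torch.linspace)."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + step * i for i in range(num)]


embed_dim = 96
depths = [2, 2, 6, 2]
drop_path_rate = 0.2

# The channel width doubles at every stage.
num_features = [embed_dim * 2 ** i for i in range(len(depths))]

# One drop-path rate per block, growing linearly with the block index.
dpr = linspace(0.0, drop_path_rate, sum(depths))

# Slice the flat list into one list per stage, as the BasicLayer call does.
dpr_per_stage = [
    dpr[sum(depths[:i]):sum(depths[:i + 1])] for i in range(len(depths))
]

for i, (dim, rates) in enumerate(zip(num_features, dpr_per_stage)):
    print(i, dim, [round(r, 4) for r in rates])
```

The very first block gets a rate of exactly 0, which is why the printed model shows `(drop_path): Identity()` for block 0 of stage 0 and `DropPath()` everywhere else.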

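For convenience I set img_scale to [(224, 224)]. Images in the dataset are then resized with their aspect ratio kept and padded so that each side is a multiple of 32. The short side is scaled by the same ratio that maps the long side to 224, i.e. x = short side / (long side / 224), where x is the actual length after resizing. For example, for a 500*287 (w*h) input the resized short side is 287 * 224 / 500 ≈ 129. Because the input size has to be a multiple of 32, this is rounded up, so the padded short side is 160.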
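The arithmetic can be checked with a small sketch. It assumes a keep-ratio resize that rounds to the nearest pixel and a pad-to-multiple-of-32 step; the function names `rescale_keep_ratio` and `pad_to_multiple` are made up for this illustration and are not mmdet APIs.

```python
import math


def rescale_keep_ratio(w, h, scale):
    """Resize (w, h) to fit inside `scale` while keeping the aspect ratio."""
    max_long, max_short = max(scale), min(scale)
    factor = min(max_long / max(w, h), max_short / min(w, h))
    # Round to the nearest integer pixel (assumed rounding rule).
    return int(w * factor + 0.5), int(h * factor + 0.5)


def pad_to_multiple(size, divisor=32):
    """Round a side length up to the next multiple of `divisor`."""
    return math.ceil(size / divisor) * divisor


w, h = rescale_keep_ratio(500, 287, (224, 224))
padded_w, padded_h = pad_to_multiple(w), pad_to_multiple(h)
print((w, h), (padded_w, padded_h))  # (224, 129) (224, 160)
```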

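Being on a budget, I only have a single 3080 Ti. With batch size 2 and the unmodified img_scale, training on COCO often runs right at the memory limit, and I was constantly worried about running out of GPU memory. So for single-GPU training the batch size stays at 2. The images in each batch are padded once more to the size of the largest image in the batch. For example, if one image is (160, 224, 3) after the first padding and another is (192, 224, 3), the data finally fed to the network has shape [2, 3, 192, 224] (B, C, H, W).

The rest of this article uses the input [2, 3, 192, 224] as the running example and follows the order of the network structure.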
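As a quick check of the batch shape, the following sketch pads every image to the largest height and width in the batch. `batch_shape` is a hypothetical helper for illustration only; it models the shape bookkeeping, not the actual collate code.

```python
def batch_shape(image_shapes, channels=3):
    """Return (B, C, H, W) for a batch of per-image (H, W) sizes.

    Every image is assumed to be padded to the largest H and W in the batch.
    """
    max_h = max(h for h, _ in image_shapes)
    max_w = max(w for _, w in image_shapes)
    return (len(image_shapes), channels, max_h, max_w)


print(batch_shape([(160, 224), (192, 224)]))  # (2, 3, 192, 224)
```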

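PatchEmbed uses a 2D convolution with kernel size 4 and stride 4 to shrink the image by a factor of 4 and raise the channel dimension to embed_dim. PatchEmbed can be understood as covering both the patch partition and the linear embedding in the architecture diagram, so embed_dim (96) is the dimension fed into the transformer blocks, not the 48 (= 4 × 4 × 3) raw patch dimension mentioned in the paper.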

class PatchEmbed(nn.Module):

""" Image to Patch Embedding

Args:

patch_size (int): Patch token size. Default: 4.

in_chans (int): Number of input image channels. Default: 3.

embed_dim (int): Number of linear projection output channels. Default: 96.

norm_layer (nn.Module, optional): Normalization layer. Default: None

"""

def __init__(self, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None):

super().__init__()

patch_size = to_2tuple(patch_size)

self.patch_size = patch_size

self.in_chans = in_chans

self.embed_dim = embed_dim

# a 2D convolution downsamples the image by 4x: kernel_size = stride = patch_size = 4

self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size)

if norm_layer is not None:

self.norm = norm_layer(embed_dim)

else:

self.norm = None

def forward(self, x):

"""Forward function."""

# padding

_, _, H, W = x.size()

if W % self.patch_size[1] != 0:

x = F.pad(x, (0, self.patch_size[1] - W % self.patch_size[1]))

if H % self.patch_size[0] != 0:

x = F.pad(x, (0, 0, 0, self.patch_size[0] - H % self.patch_size[0]))

x = self.proj(x) # B C Wh Ww

if self.norm is not None:

# shape of x: B, C, H, W --> B, C, h, w --> B, C, h * w --> B, h * w, C

# x has shape [2, 96, 48, 56]

Wh, Ww = x.size(2), x.size(3)

# x has shape [2, 2688, 96]

x = x.flatten(2).transpose(1, 2)

x = self.norm(x)

# x has shape [2, 96, 48, 56]

x = x.transpose(1, 2).view(-1, self.embed_dim, Wh, Ww)

return x

After PatchEmbed the output has shape [2, 96, 48, 56]. It then goes through x.flatten(2).transpose(1, 2), which turns it into an input the transformer blocks can accept, namely [2, 2688, 96].

Next comes BasicLayer.
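The shape bookkeeping of PatchEmbed can be reproduced without running the network. This is a sketch under the assumptions stated in the text (patch size 4, embed_dim 96); `patch_embed_shape` is a hypothetical helper, not part of the repository.

```python
import math


def patch_embed_shape(batch, in_chans, height, width, patch_size=4, embed_dim=96):
    """Return (raw patch dim, feature-map shape, token-sequence shape)."""
    # H and W are first padded up to a multiple of patch_size.
    hp = math.ceil(height / patch_size) * patch_size
    wp = math.ceil(width / patch_size) * patch_size
    # A conv with kernel_size == stride == patch_size yields one output per patch.
    wh, ww = hp // patch_size, wp // patch_size
    # Each raw patch holds patch_size * patch_size * in_chans values (48 in the paper).
    raw_patch_dim = patch_size * patch_size * in_chans
    feature_map = (batch, embed_dim, wh, ww)
    tokens = (batch, wh * ww, embed_dim)
    return raw_patch_dim, feature_map, tokens


print(patch_embed_shape(2, 3, 192, 224))
```

For the running example this gives a raw patch dimension of 48, a feature map of (2, 96, 48, 56) and a token sequence of (2, 2688, 96).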

class BasicLayer(nn.Module):

""" A basic Swin Transformer layer for one stage.

Args:

dim (int): Number of feature channels

depth (int): Depths of this stage.

num_heads (int): Number of attention head.

window_size (int): Local window size. Default: 7.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim. Default: 4.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float | tuple[float], optional): Stochastic depth rate. Default: 0.0

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

downsample (nn.Module | None, optional): Downsample layer at the end of the layer. Default: None

use_checkpoint (bool): Whether to use checkpointing to save memory. Default: False.

"""

def __init__(self,

dim,

depth,

num_heads,

window_size=7,

mlp_ratio=4.,

qkv_bias=True,

qk_scale=None,

drop=0.,

attn_drop=0.,

drop_path=0.,

norm_layer=nn.LayerNorm,

downsample=None,

use_checkpoint=False):

super().__init__()

self.window_size = window_size

self.shift_size = window_size // 2

self.depth = depth

self.use_checkpoint = use_checkpoint

# build blocks

# even-indexed blocks use W-MSA, odd-indexed blocks use SW-MSA

# so the output features contain both local window attention and cross-window attention

self.blocks = nn.ModuleList([

SwinTransformerBlock(

dim=dim,

num_heads=num_heads,

window_size=window_size,

shift_size=0 if (i % 2 == 0) else window_size // 2,

mlp_ratio=mlp_ratio,

qkv_bias=qkv_bias,

qk_scale=qk_scale,

drop=drop,

attn_drop=attn_drop,

drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path,

norm_layer=norm_layer)

for i in range(depth)])

# patch merging layer

# only the first three stages perform patch merging; the last stage does not

if downsample is not None:

self.downsample = downsample(dim=dim, norm_layer=norm_layer)

else:

self.downsample = None

def forward(self, x, H, W):

""" Forward function.

Args:

x: Input feature, tensor size (B, H*W, C).

H, W: Spatial resolution of the input feature.

"""

# calculate attention mask for SW-MSA

Hp = int(np.ceil(H / self.window_size)) * self.window_size

Wp = int(np.ceil(W / self.window_size)) * self.window_size

img_mask = torch.zeros((1, Hp, Wp, 1), device=x.device) # 1 Hp Wp 1

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

cnt = 0

for h in h_slices:

for w in w_slices:

img_mask[:, h, w, :] = cnt

cnt += 1

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

for blk in self.blocks:

blk.H, blk.W = H, W

if self.use_checkpoint:

x = checkpoint.checkpoint(blk, x, attn_mask)

else:

x = blk(x, attn_mask)

if self.downsample is not None:

x_down = self.downsample(x, H, W)

Wh, Ww = (H + 1) // 2, (W + 1) // 2

return x, H, W, x_down, Wh, Ww

else:

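return x, H, W, x, H, W

BasicLayer builds one stage of Swin Transformer. It contains transformer blocks with regular windows (W-MSA) and with shifted windows (SW-MSA), plus a PatchMerging layer, so it can be read as "Swin Transformer block + patch merging" in the architecture diagram.

In the forward pass, even-indexed blocks run W-MSA and odd-indexed blocks run SW-MSA. For example, the first stage has one W-MSA block and one SW-MSA block; W-MSA is computed first and SW-MSA second, so the output features contain both local window attention and cross-window attention. The patch merging layer is only executed in the first three stages. Next, let's pull this part of the code out and walk through it.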
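The alternation of W-MSA and SW-MSA and the halving of the resolution can be summarised in a few lines. This is only a sketch of the schedule, assuming the default depths (2, 2, 6, 2) and the (H + 1) // 2 rule from BasicLayer.forward; `stage_schedule` is a made-up name.

```python
def stage_schedule(h, w, depths=(2, 2, 6, 2), window_size=7, embed_dim=96):
    """List, per stage, the channel dim, input resolution and per-block shift size."""
    stages = []
    for i, depth in enumerate(depths):
        # Even-indexed blocks: shift 0 (W-MSA); odd-indexed: window_size // 2 (SW-MSA).
        shifts = [0 if b % 2 == 0 else window_size // 2 for b in range(depth)]
        has_downsample = i < len(depths) - 1
        stages.append({
            "dim": embed_dim * 2 ** i,
            "resolution": (h, w),
            "shift_sizes": shifts,
            "downsample": has_downsample,
        })
        if has_downsample:
            # PatchMerging halves each side, rounding up for odd sizes.
            h, w = (h + 1) // 2, (w + 1) // 2
    return stages


for stage in stage_schedule(48, 56):
    print(stage)
```

Starting from the 48 x 56 token grid of the running example, the four stages see resolutions 48 x 56, 24 x 28, 12 x 14 and 6 x 7.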

def window_partition(x, window_size):

"""

Args:

x: (B, H, W, C)

window_size (int): window size

Returns:

windows: (num_windows*B, window_size, window_size, C)

"""

# B, H, W, C = x.shape

# x = x.view(B, H // window_size, window_size, W // window_size, window_size, C)

# windows = x.permute(0, 1, 3, 2, 4, 5).contiguous().view(-1, window_size, window_size, C)

H, W = x.shape

x = x.view(H // window_size, window_size, W // window_size, window_size)

windows = x.permute(0, 2, 1, 3).contiguous().view(-1,window_size, window_size)

return windows

Hp = 14

Wp = 14

window_size = 7

shift_size = window_size //2

# img_mask = torch.zeros((1, Hp, Wp, 1)) # 1 Hp Wp 1

img_mask = torch.zeros((Hp, Wp))

h_slices = (slice(0, -window_size),

slice(-window_size, -shift_size),

slice(-shift_size, None))

w_slices = (slice(0, -window_size),

slice(-window_size, -shift_size),

slice(-shift_size, None))

cnt = 0

for h in h_slices:

for w in w_slices:

# img_mask[:, h, w, :] = cnt

img_mask[h, w] = cnt

cnt += 1

print(img_mask)

# tensor([[0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# [0., 0., 0., 0., 0., 0., 0.,| 1., 1., 1., 1., 2., 2., 2.],

# - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

# [3., 3., 3., 3., 3., 3., 3.,| 4., 4., 4., 4., 5., 5., 5.],

# [3., 3., 3., 3., 3., 3., 3.,| 4., 4., 4., 4., 5., 5., 5.],

# [3., 3., 3., 3., 3., 3., 3.,| 4., 4., 4., 4., 5., 5., 5.],

# [3., 3., 3., 3., 3., 3., 3.,| 4., 4., 4., 4., 5., 5., 5.],

# [6., 6., 6., 6., 6., 6., 6.,| 7., 7., 7., 7., 8., 8., 8.],

# [6., 6., 6., 6., 6., 6., 6.,| 7., 7., 7., 7., 8., 8., 8.],

# [6., 6., 6., 6., 6., 6., 6.,| 7., 7., 7., 7., 8., 8., 8.]])

mask_windows = window_partition(img_mask, window_size) # nW, window_size, window_size

mask_windows = mask_windows.view(-1, window_size * window_size)

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

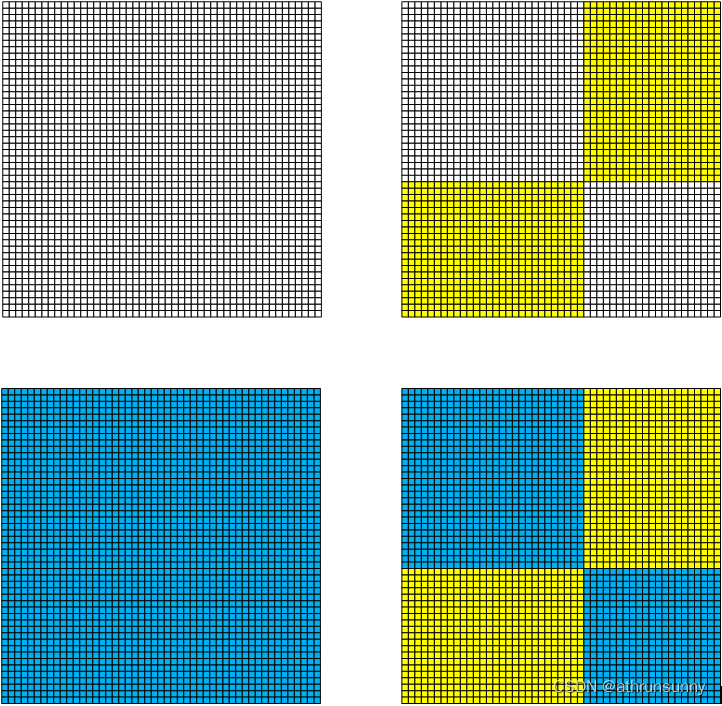

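attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

This code generates the attention mask. The tensors in the source have extra dimensions and are not very intuitive, so here they are reduced to low-dimensional tensors before printing, which is much easier to read. The height and width are set to twice window_size, which is just enough to see the whole operation, and shift_size is left unchanged. The printed img_mask shows that the mask splits into four windows (separated by the | and - marks), which together contain nine labeled regions.

The window_partition function then cuts img_mask along those windows, i.e. it splits the tensor into N small [window_size, window_size] windows, so the shape is now [4, 7, 7]. It is then viewed as [4, 49], unsqueezed along the two matching dimensions and subtracted. Entries that are not 0 are filled with -100 and the rest stay 0, so the final attn_mask has shape [4, 49, 49], which matches the size of the attention map in the self-attention layer later on.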
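The same mask can be rebuilt with nested Python lists, which makes the three steps (label the regions, cut into windows, compare labels pairwise) explicit. This is an illustrative re-implementation under the 14 x 14 toy setting above, not the repository code; `build_attn_mask` is a hypothetical name.

```python
def build_attn_mask(hp, wp, window_size, shift_size):
    """Return a list of nW matrices, each (ws*ws) x (ws*ws), holding 0.0 or -100.0."""

    def spans(n):
        # Same three slices as in the source: [0, -ws), [-ws, -shift), [-shift, end).
        return [range(0, n - window_size),
                range(n - window_size, n - shift_size),
                range(n - shift_size, n)]

    # Step 1: give each of the 3 x 3 regions its own label.
    img_mask = [[0] * wp for _ in range(hp)]
    cnt = 0
    for rows in spans(hp):
        for cols in spans(wp):
            for r in rows:
                for c in cols:
                    img_mask[r][c] = cnt
            cnt += 1

    # Step 2: cut into non-overlapping windows and flatten each to ws*ws labels.
    windows = []
    for top in range(0, hp, window_size):
        for left in range(0, wp, window_size):
            windows.append([img_mask[top + r][left + c]
                            for r in range(window_size)
                            for c in range(window_size)])

    # Step 3: two positions may attend to each other only if their labels match.
    return [[[0.0 if a == b else -100.0 for b in labels] for a in labels]
            for labels in windows]


mask = build_attn_mask(14, 14, window_size=7, shift_size=3)
print(len(mask), len(mask[0]), len(mask[0][0]))  # 4 49 49
```

The top-left window holds a single region, so its mask is all zeros; the other three windows mix several regions and therefore contain -100 entries.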

The concrete form is shown in the figure.

Where:

# white block
# [0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.,
# 0., 0., 0., 0., 0., 0., 0.],

# blue block
# [ 0., 0., 0., 0., -100., -100., -100.,
# 0., 0., 0., 0., -100., -100., -100.,
# 0., 0., 0., 0., -100., -100., -100.,
# 0., 0., 0., 0., -100., -100., -100.,
# -100., -100., -100., -100., 0., 0., 0.,
# -100., -100., -100., -100., 0., 0., 0.,
# -100., -100., -100., -100., 0., 0., 0.,],

# yellow block
# [-100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,
# -100., -100., -100., -100., -100., -100., -100.,]

For the running example, the attn_mask actually produced has shape [56, 49, 49], where 56 = (Hp / window_size) * (Wp / window_size) = (49 / 7) * (56 / 7).

Next comes the transformer block.
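The window count follows directly from the padded resolution. A short sketch, assuming window size 7 and the 48 x 56 token grid of the running example (`num_windows` is a made-up helper):

```python
import math


def num_windows(h, w, window_size=7, batch=1):
    """Return (Hp, Wp, windows per image, windows per batch)."""
    # Pad each side up to a multiple of the window size.
    hp = math.ceil(h / window_size) * window_size
    wp = math.ceil(w / window_size) * window_size
    n_w = (hp // window_size) * (wp // window_size)
    return hp, wp, n_w, n_w * batch


print(num_windows(48, 56, window_size=7, batch=2))  # (49, 56, 56, 112)
```

The 56 is the first dimension of attn_mask, and 56 * 2 = 112 is the first dimension of x_windows once the batch of 2 is folded in.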

class SwinTransformerBlock(nn.Module):

""" Swin Transformer Block.

Args:

dim (int): Number of input channels.

num_heads (int): Number of attention heads.

window_size (int): Window size.

shift_size (int): Shift size for SW-MSA.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float, optional): Stochastic depth rate. Default: 0.0

act_layer (nn.Module, optional): Activation layer. Default: nn.GELU

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

"""

def __init__(self, dim, num_heads, window_size=7, shift_size=0,

mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0.,

act_layer=nn.GELU, norm_layer=nn.LayerNorm):

super().__init__()

self.dim = dim

self.num_heads = num_heads

# window_size defaults to 7

self.window_size = window_size

# for SW-MSA, shift_size = 7 // 2 = 3

# for W-MSA, shift_size = 0

self.shift_size = shift_size

# ratio of the hidden dimension of the MLP (the feed-forward network after attention) to the embedding dimension

self.mlp_ratio = mlp_ratio

assert 0 <= self.shift_size < self.window_size, "shift_size must in 0-window_size"

self.norm1 = norm_layer(dim)

# local window multi head self attention

self.attn = WindowAttention(

dim, window_size=to_2tuple(self.window_size), num_heads=num_heads,

qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

self.norm2 = norm_layer(dim)

mlp_hidden_dim = int(dim * mlp_ratio)

self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop)

self.H = None

self.W = None

def forward(self, x, mask_matrix):

""" Forward function.

Args:

x: Input feature, tensor size (B, H*W, C).

H, W: Spatial resolution of the input feature.

mask_matrix: Attention mask for cyclic shift.

"""

# x has shape [2, 2688, 96]

B, L, C = x.shape

H, W = self.H, self.W

assert L == H * W, "input feature has wrong size"

shortcut = x

# apply LayerNorm, then reshape x to [2, 48, 56, 96]

x = self.norm1(x)

x = x.view(B, H, W, C)

# pad feature maps to multiples of window size

# the feature map has to be padded to a multiple of the window size; after padding the shape is [2, 49, 56, 96]

pad_l = pad_t = 0

pad_r = (self.window_size - W % self.window_size) % self.window_size

pad_b = (self.window_size - H % self.window_size) % self.window_size

x = F.pad(x, (0, 0, pad_l, pad_r, pad_t, pad_b))

_, Hp, Wp, _ = x.shape

# cyclic shift

if self.shift_size > 0:

# for SW-MSA, cyclically shift the feature map

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

attn_mask = mask_matrix

else:

shifted_x = x

attn_mask = None

# partition windows

# x_windows has shape [112, 7, 7, 96]

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

x_windows = x_windows.view(-1, self.window_size * self.window_size, C) # nW*B, window_size*window_size, C

# W-MSA/SW-MSA

attn_windows = self.attn(x_windows, mask=attn_mask) # nW*B, window_size*window_size, C

# merge windows

attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)

# shifted_x has shape [2, 49, 56, 96]

shifted_x = window_reverse(attn_windows, self.window_size, Hp, Wp) # B H' W' C

# reverse cyclic shift

if self.shift_size > 0:

x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

else:

x = shifted_x

if pad_r > 0 or pad_b > 0:

# crop back to the input size

x = x[:, :H, :W, :].contiguous()

x = x.view(B, H * W, C)

# FFN

x = shortcut + self.drop_path(x)

x = x + self.drop_path(self.mlp(self.norm2(x)))

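return x

SwinTransformerBlock consists of LN, W-MSA/SW-MSA, and an MLP. In W-MSA the input is [2, 2688, 96]. It is reshaped to [2, 48, 56, 96] and padded to [2, 49, 56, 96], then window_partition splits it into window_size × window_size windows, giving a shape of [112, 7, 7, 96]. Before entering the self-attention layer it is flattened to [112, 49, 96]. The self-attention layer itself is the standard self-attention structure, so I won't go into it here; if you are not familiar with it, see my other post: ref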
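To make the shape bookkeeping concrete, here is a small sketch that pads a [2, 48, 56, 96] tensor and splits it into 7 × 7 windows. The two helpers are re-implemented here for illustration, assuming they behave as their names and the shape comments in the block suggest; treat them as a sketch rather than the project's exact code.

```python
import torch
import torch.nn.functional as F


def window_partition(x, window_size):
    """Split (B, H, W, C) into (num_windows*B, window_size, window_size, C)."""
    B, H, W, C = x.shape
    x = x.view(B, H // window_size, window_size, W // window_size, window_size, C)
    return x.permute(0, 1, 3, 2, 4, 5).contiguous().view(-1, window_size, window_size, C)


def window_reverse(windows, window_size, H, W):
    """Inverse of window_partition: back to (B, H, W, C)."""
    B = windows.shape[0] // ((H // window_size) * (W // window_size))
    x = windows.view(B, H // window_size, W // window_size, window_size, window_size, -1)
    return x.permute(0, 1, 3, 2, 4, 5).contiguous().view(B, H, W, -1)


window_size = 7
x = torch.randn(2, 48, 56, 96)  # (B, H, W, C), as after norm1 and view

# Pad H and W up to multiples of the window size: 48 -> 49, 56 stays 56.
pad_r = (window_size - 56 % window_size) % window_size
pad_b = (window_size - 48 % window_size) % window_size
x_pad = F.pad(x, (0, 0, 0, pad_r, 0, pad_b))

windows = window_partition(x_pad, window_size)
tokens = windows.view(-1, window_size * window_size, 96)
restored = window_reverse(windows, window_size, 49, 56)

print(x_pad.shape)    # torch.Size([2, 49, 56, 96])
print(windows.shape)  # torch.Size([112, 7, 7, 96])
print(tokens.shape)   # torch.Size([112, 49, 96])
print(torch.equal(restored, x_pad))  # True
```

The 112 comes from (49 / 7) × (56 / 7) = 56 windows per image times a batch size of 2.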

The code that computes self-attention is as follows:

class WindowAttention(nn.Module):

""" Window based multi-head self attention (W-MSA) module with relative position bias.

It supports both of shifted and non-shifted window.

Args:

dim (int): Number of input channels.

window_size (tuple[int]): The height and width of the window.

num_heads (int): Number of attention heads.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set

attn_drop (float, optional): Dropout ratio of attention weight. Default: 0.0

proj_drop (float, optional): Dropout ratio of output. Default: 0.0

"""

def __init__(self, dim, window_size, num_heads, qkv_bias=True, qk_scale=None, attn_drop=0., proj_drop=0.):

super().__init__()

self.dim = dim

self.window_size = window_size # Wh, Ww

self.num_heads = num_heads

head_dim = dim // num_heads

self.scale = qk_scale or head_dim ** -0.5

# define a parameter table of relative position bias

self.relative_position_bias_table = nn.Parameter(

torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads)) # 2*Wh-1 * 2*Ww-1, nH

# get pair-wise relative position index for each token inside the window

coords_h = torch.arange(self.window_size[0])

coords_w = torch.arange(self.window_size[1])

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop)

trunc_normal_(self.relative_position_bias_table, std=.02)

self.softmax = nn.Softmax(dim=-1)

def forward(self, x, mask=None):

""" Forward function.

Args:

x: input features with shape of (num_windows*B, N, C)

mask: (0/-inf) mask with shape of (num_windows, Wh*Ww, Wh*Ww) or None

"""

B_, N, C = x.shape

qkv = self.qkv(x).reshape(B_, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)

q, k, v = qkv[0], qkv[1], qkv[2] # make torchscript happy (cannot use tensor as tuple)

q = q * self.scale

attn = (q @ k.transpose(-2, -1))

relative_position_bias = self.relative_position_bias_table[self.relative_position_index.view(-1)].view(

self.window_size[0] * self.window_size[1], self.window_size[0] * self.window_size[1], -1) # Wh*Ww,Wh*Ww,nH

relative_position_bias = relative_position_bias.permute(2, 0, 1).contiguous() # nH, Wh*Ww, Wh*Ww

attn = attn + relative_position_bias.unsqueeze(0)

if mask is not None:

nW = mask.shape[0]

attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)

attn = attn.view(-1, self.num_heads, N, N)

attn = self.softmax(attn)

else:

attn = self.softmax(attn)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B_, N, C)

x = self.proj(x)

x = self.proj_drop(x)

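return x

One detail here: after the attention scores q @ k^T are computed and before the softmax, a learnable relative_position_bias is added. Its parameter table is initialized from a truncated normal distribution (std=0.02) and is looked up through relative_position_index.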
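The index arithmetic in __init__ is easier to follow on a tiny window. Below is a minimal sketch for a 2 × 2 window using plain Python lists instead of tensors; the variable names are mine, and the loop is only meant to mirror the broadcasting subtraction, the two offsets, and the row multiplier in the code above.

```python
# Toy 2x2 window (Wh = Ww = 2), computed with plain Python lists.
Wh, Ww = 2, 2
coords = [(h, w) for h in range(Wh) for w in range(Ww)]  # flattened token coordinates

index = []
for (hi, wi) in coords:          # query token
    row = []
    for (hj, wj) in coords:      # key token
        dh = hi - hj + (Wh - 1)  # row offset, shifted to start from 0
        dw = wi - wj + (Ww - 1)  # column offset, shifted to start from 0
        row.append(dh * (2 * Ww - 1) + dw)  # flatten (dh, dw) into one table index
    index.append(row)

for row in index:
    print(row)
# [4, 3, 1, 0]
# [5, 4, 2, 1]
# [7, 6, 4, 3]
# [8, 7, 5, 4]

table_rows = (2 * Wh - 1) * (2 * Ww - 1)
print(table_rows)  # 9
```

Every pair of tokens with the same relative offset shares one index (the diagonal is always 4 here), so the bias table only needs (2*Wh-1) * (2*Ww-1) rows per head rather than one entry per token pair.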

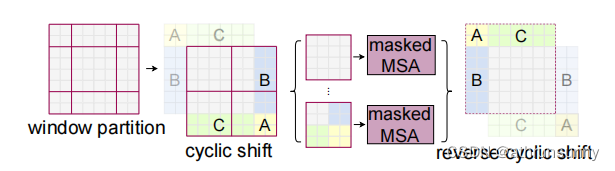

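Back to the transformer block. So that the windows can exchange information with each other, the algorithm introduces shifted windows. Implementing the shift as a cyclic roll keeps the number of windows unchanged, so the cross-window connections are obtained without having to compute any additional windows. When computing SW-MSA, torch.roll is first used to roll the input [2, 49, 56, 96] along dimensions 1 and 2 (that is, height and width).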

Here is a small example, again with reduced dimensions so that the data layout is easy to see:

import torch

a = torch.arange(0, 49).reshape((7, 7))

print(a)

b = torch.roll(a, shifts=(-3, -3), dims=(0, 1))

print(b)

# tensor([[ 0, 1, 2, 3, 4, 5, 6],

# [ 7, 8, 9, 10, 11, 12, 13],

# [14, 15, 16, 17, 18, 19, 20],

# [21, 22, 23, 24, 25, 26, 27],

# [28, 29, 30, 31, 32, 33, 34],

# [35, 36, 37, 38, 39, 40, 41],

# [42, 43, 44, 45, 46, 47, 48]])

# tensor([[24, 25, 26, 27, |21, 22, 23],

# [31, 32, 33, 34, |28, 29, 30],

# [38, 39, 40, 41, |35, 36, 37],

# [45, 46, 47, 48, |42, 43, 44],

# -----------------+------------

# [ 3, 4, 5, 6, | 0, 1, 2],

# [10, 11, 12, 13, | 7, 8, 9],

# [17, 18, 19, 20, |14, 15, 16]])

This corresponds to the middle part of this figure in the paper:

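Inside the self-attention layer, the operations are essentially the same as for W-MSA. The only addition is the previously generated mask, which is added to the attention scores before the softmax so that tokens that were not neighbours before the roll do not attend to each other.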
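The mask is built outside this block. As a rough sketch of how such a mask can be constructed and applied: label each region of the rolled feature map with an id, cut the id map into windows, and give every token pair with different ids a large negative bias. The toy sizes (8 × 8 map, window 4, shift 2), the value -100.0, and the variable names are assumptions chosen for illustration.

```python
import torch

window_size, shift_size = 4, 2
Hp = Wp = 8  # padded feature-map size, a multiple of window_size

# Label each region of the (already rolled) map with a distinct id.
img_mask = torch.zeros(1, Hp, Wp, 1)
slices = (slice(0, -window_size),
          slice(-window_size, -shift_size),
          slice(-shift_size, None))
cnt = 0
for h in slices:
    for w in slices:
        img_mask[:, h, w, :] = cnt
        cnt += 1

# Cut the id map into windows: (nW, window_size * window_size).
nW = (Hp // window_size) * (Wp // window_size)
mask_windows = (img_mask
                .view(1, Hp // window_size, window_size, Wp // window_size, window_size, 1)
                .permute(0, 1, 3, 2, 4, 5)
                .reshape(nW, window_size * window_size))

# Pairs with different ids get a large negative bias; pairs with the same id keep 0.
attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)
attn_mask = attn_mask.masked_fill(attn_mask != 0, -100.0)

# Apply it the same way WindowAttention.forward does, on dummy all-zero scores.
B, num_heads, N = 2, 3, window_size * window_size
attn = torch.zeros(B * nW, num_heads, N, N)
attn = attn.view(B, nW, num_heads, N, N) + attn_mask.unsqueeze(1).unsqueeze(0)
attn = attn.view(-1, num_heads, N, N).softmax(dim=-1)

print(attn_mask.shape)                  # torch.Size([4, 16, 16])
print(bool((attn_mask[0] == 0).all()))  # True: the first window holds a single region
print(attn[3, 0, 0])                    # weight is spread over the 4 tokens of the same region only
```

In the last window the first token shares its region with four tokens (itself included), so after the softmax each of those gets a weight of about 0.25 and the remaining twelve get practically zero.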

Finally, there is PatchMerging:

class PatchMerging(nn.Module):

""" Patch Merging Layer

Args:

dim (int): Number of input channels.

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

"""

def __init__(self, dim, norm_layer=nn.LayerNorm):

super().__init__()

self.dim = dim

self.reduction = nn.Linear(4 * dim, 2 * dim, bias=False)

self.norm = norm_layer(4 * dim)

def forward(self, x, H, W):

""" Forward function.

Args:

x: Input feature, tensor size (B, H*W, C).

H, W: Spatial resolution of the input feature.

"""

B, L, C = x.shape

assert L == H * W, "input feature has wrong size"

x = x.view(B, H, W, C)

# padding

pad_input = (H % 2 == 1) or (W % 2 == 1)

if pad_input:

x = F.pad(x, (0, 0, 0, W % 2, 0, H % 2))

# patch merging: halve the spatial resolution

# 0::2 takes every second element starting from index 0

# 1::2 takes every second element starting from index 1

x0 = x[:, 0::2, 0::2, :] # B H/2 W/2 C

x1 = x[:, 1::2, 0::2, :] # B H/2 W/2 C

x2 = x[:, 0::2, 1::2, :] # B H/2 W/2 C

x3 = x[:, 1::2, 1::2, :] # B H/2 W/2 C

x = torch.cat([x0, x1, x2, x3], -1) # B H/2 W/2 4*C

x = x.view(B, -1, 4 * C) # B H/2*W/2 4*C

x = self.norm(x)

# project from 4 * dim down to 2 * dim: the resolution is halved and the channel count is doubled

x = self.reduction(x)

return x

PatchMerging works somewhat like the Focus operation in YOLOv5: it halves the height and width of the feature map and doubles the number of channels.
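A quick shape check of the same steps, written as a standalone sketch rather than through the class. The [2, 2688, 96] input with H=48 and W=56 is taken from the shapes used earlier in this post; the freshly created LayerNorm and Linear layers are untrained stand-ins used only to show the dimensions.

```python
import torch
import torch.nn as nn

B, H, W, C = 2, 48, 56, 96
x = torch.randn(B, H * W, C).view(B, H, W, C)

# Gather the four pixels of every 2x2 neighbourhood into the channel dimension.
x0 = x[:, 0::2, 0::2, :]  # top-left
x1 = x[:, 1::2, 0::2, :]  # bottom-left
x2 = x[:, 0::2, 1::2, :]  # top-right
x3 = x[:, 1::2, 1::2, :]  # bottom-right
merged = torch.cat([x0, x1, x2, x3], dim=-1).view(B, -1, 4 * C)

# Untrained stand-ins for self.norm and self.reduction.
norm = nn.LayerNorm(4 * C)
reduction = nn.Linear(4 * C, 2 * C, bias=False)
out = reduction(norm(merged))

print(merged.shape)  # torch.Size([2, 672, 384])
print(out.shape)     # torch.Size([2, 672, 192])
```

The token count drops from 48 × 56 = 2688 to 24 × 28 = 672, while the channels go from 96 to 384 after concatenation and then to 192 after the linear projection.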

That covers the main structure of Swin Transformer.
