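In this chapter, building on the analysis from the previous one, we prepare the troublesome arguments of the hand-eye calibration function `calibrateHandEye`.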

    R, T = cv2.calibrateHandEye(R_all_end_to_base_1, T_all_end_to_base_1, R_all_chess_to_cam_1, T_all_chess_to_cam_1)  # hand-eye calibration

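I. The first two arguments are the rotation matrices and translation vectors of the robot gripper relative to the robot base frame, i.e. `R_all_end_to_base_1` and `T_all_end_to_base_1`.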

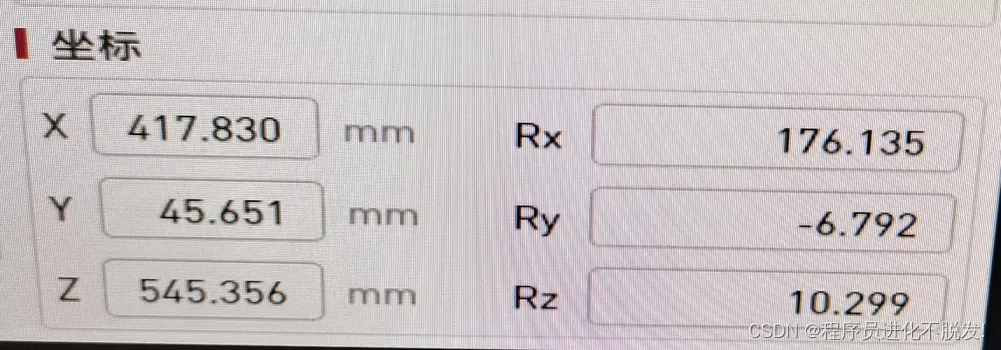

We can compute them from the six values reported by the robot arm: three position coordinates and three Euler angles.

Example code:

    # -*- coding: utf-8 -*-
    import ast
    import time
    from math import cos, sin, pi

    import numpy as np
    from elephant import elephant_command  # the author's robot-arm driver module

    erobot = elephant_command()

    # Constants from the original script (not used below)
    X0 = 100.0
    Y0 = 360.0
    Z0 = 375.0  # mm
    RX0 = 180.0
    RY0 = 0.0
    RZ0 = -22.0
    ZG = 180.0  # mm


    # Build a rotation matrix from Euler angles (radians), R = Rz @ Ry @ Rx
    def myRPY2R_robot(x, y, z):
        Rx = np.array([[1, 0, 0], [0, cos(x), -sin(x)], [0, sin(x), cos(x)]])
        Ry = np.array([[cos(y), 0, sin(y)], [0, 1, 0], [-sin(y), 0, cos(y)]])
        Rz = np.array([[cos(z), -sin(z), 0], [sin(z), cos(z), 0], [0, 0, 1]])
        R = Rz @ Ry @ Rx
        return R


    # Build the 4x4 transform from a pose: Euler angles in degrees, then the translation
    def pose_robot(x, y, z, Tx, Ty, Tz):
        thetaX = x / 180 * pi
        thetaY = y / 180 * pi
        thetaZ = z / 180 * pi
        R = myRPY2R_robot(thetaX, thetaY, thetaZ)
        t = np.array([[Tx], [Ty], [Tz]])
        RT1 = np.column_stack([R, t])  # append t as the fourth column
        RT1 = np.vstack((RT1, np.array([0, 0, 0, 1])))  # append the homogeneous row
        # RT1 = np.linalg.inv(RT1)
        return RT1


    # Read the pose relative to the base: position, then rotation angles,
    # e.g. [563.777086,-264.753645,216.192196,171.417423,0.173813,54.855032]
    # while True:
    #     time.sleep(0.5)
    #     a = erobot.get_coords()
    #     print(a.decode())

    # get_coords() is expected to return bytes such as b'[x, y, z, rx, ry, rz]'
    a = erobot.get_coords().decode()
    print(a)
    print(type(a))
    b = ast.literal_eval(a)  # parse the list literal without eval()
    print(b)
    print(type(b))

    R_all_end_to_base_1 = []
    T_all_end_to_base_1 = []
    # b = [x, y, z, rx, ry, rz]; pose_robot takes the angles first, then the position
    RT = pose_robot(b[3], b[4], b[5], b[0], b[1], b[2])
    R_all_end_to_base_1.append(RT[:3, :3])
    T_all_end_to_base_1.append(RT[:3, 3].reshape((3, 1)))
    print(R_all_end_to_base_1)
    print("*" * 100)
    print(T_all_end_to_base_1)
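
Before feeding such matrices into the calibration, it can help to confirm that the Euler-angle conversion really yields a valid rotation. The sketch below repeats the `Rz @ Ry @ Rx` order of `myRPY2R_robot` using only the standard library and checks two properties of a rotation matrix (determinant 1 and orthogonality) on the sample pose quoted in the comment. The helper names are hypothetical, and whether this axis order matches your robot's Euler convention is an assumption that this check cannot verify.

```python
from math import cos, sin, radians


def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def euler_deg_to_matrix(rx_deg, ry_deg, rz_deg):
    """Rotation matrix R = Rz @ Ry @ Rx, same order as myRPY2R_robot."""
    x, y, z = radians(rx_deg), radians(ry_deg), radians(rz_deg)
    Rx = [[1, 0, 0], [0, cos(x), -sin(x)], [0, sin(x), cos(x)]]
    Ry = [[cos(y), 0, sin(y)], [0, 1, 0], [-sin(y), 0, cos(y)]]
    Rz = [[cos(z), -sin(z), 0], [sin(z), cos(z), 0], [0, 0, 1]]
    return matmul3(Rz, matmul3(Ry, Rx))


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def orthogonality_error(m):
    """Largest absolute entry of (m @ m^T - I)."""
    mt = [[m[j][i] for j in range(3)] for i in range(3)]
    p = matmul3(m, mt)
    return max(abs(p[i][j] - (1.0 if i == j else 0.0))
               for i in range(3) for j in range(3))


# Sample pose from the comment in the example: [x, y, z, rx, ry, rz]
pose = [563.777086, -264.753645, 216.192196, 171.417423, 0.173813, 54.855032]
R = euler_deg_to_matrix(pose[3], pose[4], pose[5])
print("det(R) =", det3(R))
print("max |R R^T - I| =", orthogonality_error(R))
```

Both printed values should be very close to 1 and 0 respectively; anything else points to a mistake in the matrices themselves.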

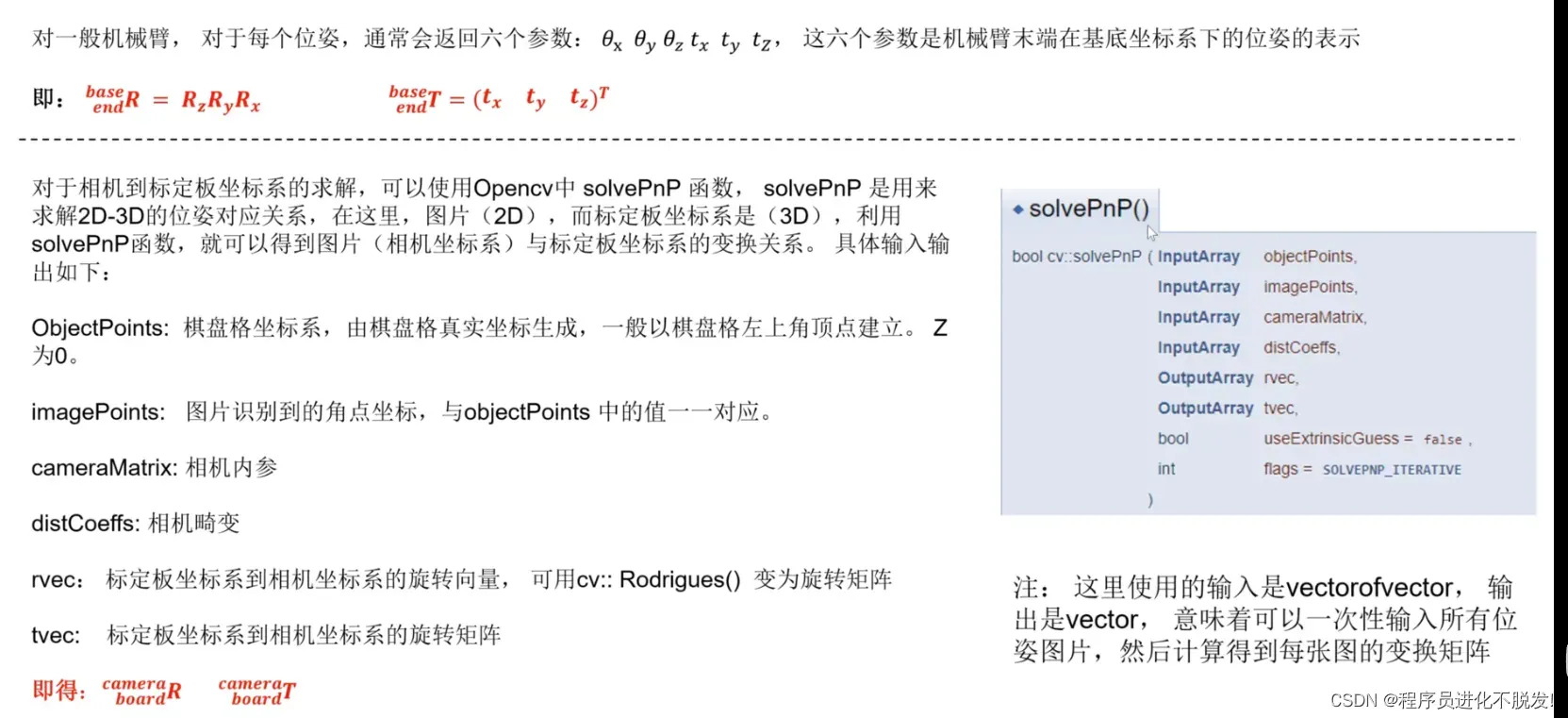

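II. Next come `R_all_chess_to_cam_1` and `T_all_chess_to_cam_1`. Together they are the camera extrinsics: the homogeneous transform of the calibration board relative to the camera (a binocular camera in my setup). The functions needed here are:

**cv2.findChessboardCorners**: chessboard corner detection

**cv2.calibrateCamera**: camera calibration

**cv2.solvePnP**: Perspective-n-Point pose estimation

They fit together as follows: the results of `cv2.findChessboardCorners` and `cv2.calibrateCamera` are processed and passed to `cv2.solvePnP`, and the output of `cv2.solvePnP` is then converted into the so-called camera extrinsics.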
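
For orientation, the three functions are tied together by the standard pinhole projection model, written here without lens distortion. The symbols are introduced only for this note: $(X, Y, Z)$ is a board point (with $Z = 0$ on a planar chessboard), $(u, v)$ is the detected corner in pixels, $K$ is the intrinsic matrix returned by `cv2.calibrateCamera`, $R$ and $t$ are the board-to-camera rotation and translation estimated by `cv2.solvePnP`, and $s$ is a scale factor.

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \begin{bmatrix} R & t \end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & \gamma & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

The pair $(R, t)$ stacked into a 4×4 homogeneous matrix is what the text calls the camera extrinsics.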

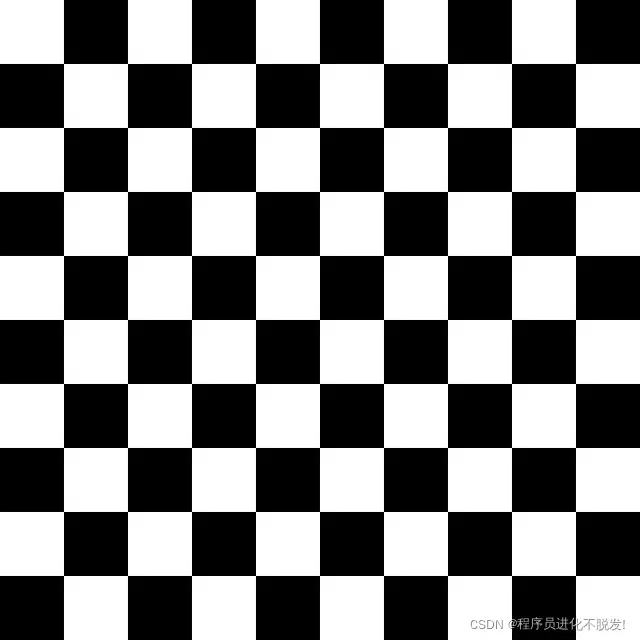

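1. Preparing the corners

The corners are found with the corner detection function `cv2.findChessboardCorners`.

As in the figure above, the data received from the robot arm at this moment is [434.578026,-49.310804,524.948340,-178.730009,-4.475156,-15.869448]. This has nothing to do with the corners; it only records the current pose of the arm's end effector relative to the base.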

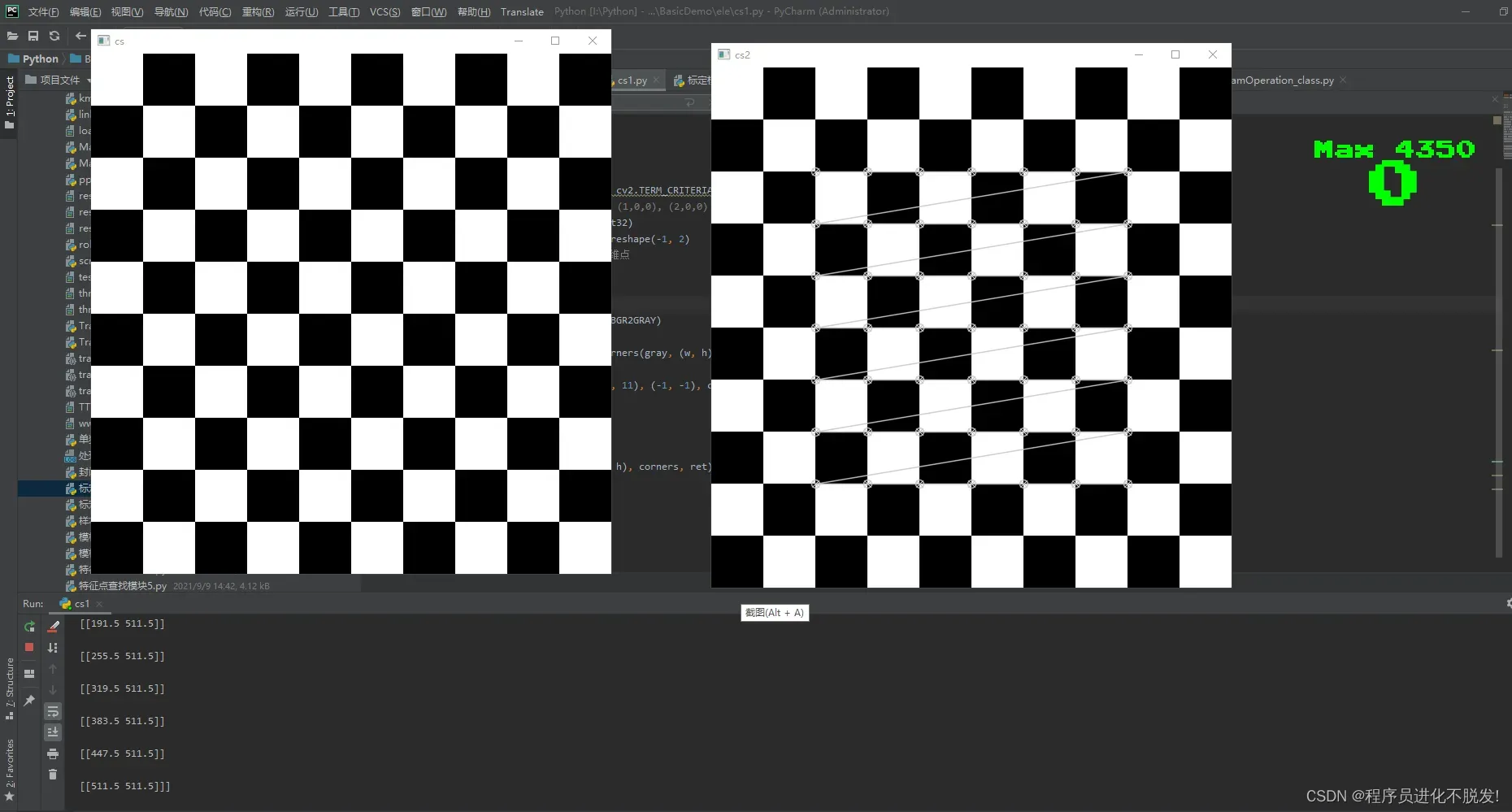

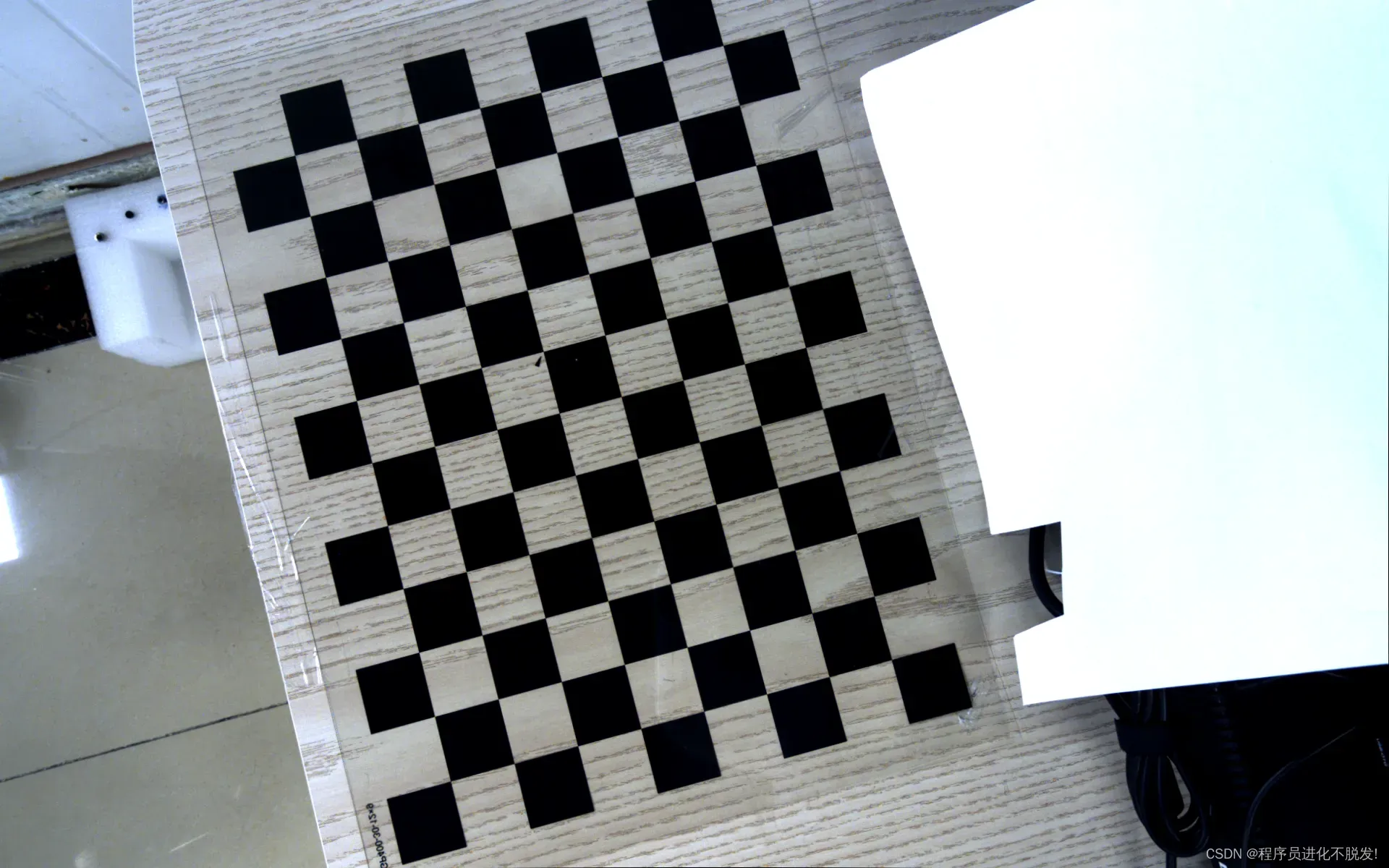

The image captured by the camera at this point looks like this (note that it has not been undistorted yet):

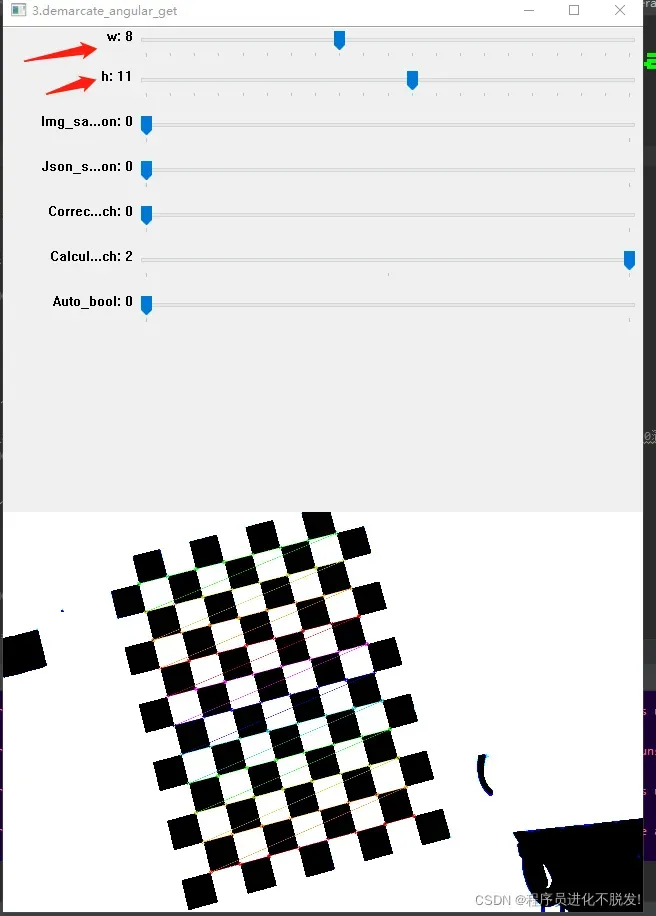

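We then search for corners with `cv2.findChessboardCorners`. In my tests a pattern size of 8×11 or 11×8 finds usable corners. The window in the figure below is a trackbar window I wrapped around the function for debugging; the function does not display it by itself.

As shown in the figure above:

Size along x: 8

Size along y: 11

Square size: 30 mm

The corner detection code and the corresponding image follow. If it fails with the error below, no corners matching the given `w` and `h` were found: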

    Traceback (most recent call last):
      File "I:/Python/BasicDemo/ele/cs1.py", line 20, in <module>
        cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
    cv2.error: OpenCV(4.5.3) C:\Users\runneradmin\AppData\Local\Temp\pip-req-build-_xlv4eex\opencv\modules\imgproc\src\cornersubpix.cpp:58: error: (-215:Assertion failed) count >= 0 in function 'cv::cornerSubPix'

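The image above is `3.png`.

`cornerSubPix()` further refines the detected corners so that their positions reach sub-pixel accuracy.

Function signature:

    void cv::cornerSubPix(
        cv::InputArray image,          // input image
        cv::InputOutputArray corners,  // corners (both input and output)
        cv::Size winSize,              // the search window is N x N with N = winSize * 2 + 1
        cv::Size zeroZone,             // like winSize but always smaller; Size(-1, -1) means it is ignored
        cv::TermCriteria criteria      // criteria for stopping the refinement
    );

Parameters:

- `image`: the input image, the same image on which the corners were detected (for example the one passed to `cv::goodFeaturesToTrack()`).
- `corners`: the detected corners; both input and output.
- `winSize`: half the side length of the search window used for the sub-pixel refinement. The window is N×N with N = winSize × 2 + 1.
- `zeroZone`: similar to `winSize` but always smaller. It is half the size of the dead zone in the middle of the search window that is excluded from the computation, and is usually disabled with `Size(-1, -1)`.
- `criteria`: the criteria for stopping the iteration. Possible values are `cv::TermCriteria::MAX_ITER` and `cv::TermCriteria::EPS` (either one or both). The former stops once the maximum number of iterations is reached; the latter stops once the corner position moves by less than the given epsilon. Both are specified through the `cv::TermCriteria()` constructor.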
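
To make the two less obvious parameters concrete, here is a small standard-library sketch. It is not OpenCV's implementation: `search_window` only evaluates the N = winSize × 2 + 1 rule, and `iterate_until` is a toy one-dimensional refinement (a hypothetical helper) that stops the same way a combined EPS + MAX_ITER criterion does, i.e. when the update becomes smaller than `eps` or when `max_iter` steps have been taken.

```python
def search_window(win_size):
    """Full search window for a half-size winSize: N = 2 * winSize + 1 per axis."""
    return tuple(2 * half + 1 for half in win_size)


def iterate_until(start, target, max_iter, eps):
    """Toy refinement: move halfway towards target on every step.

    Stops when the step is smaller than eps or after max_iter steps,
    mirroring TERM_CRITERIA_EPS + TERM_CRITERIA_MAX_ITER.
    """
    x = start
    iteration = 0
    for iteration in range(1, max_iter + 1):
        step = 0.5 * (target - x)
        x += step
        if abs(step) < eps:
            break
    return x, iteration


print(search_window((11, 11)))              # winSize used in this article
print(iterate_until(0.0, 10.0, 30, 0.001))  # stops on eps
print(iterate_until(0.0, 10.0, 5, 0.001))   # stops on max_iter
```

With the criteria `(30, 0.001)` used in this article, the toy loop ends well before 30 steps because the update shrinks below `eps` first; with a cap of 5 it ends on the iteration limit instead.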

    import cv2
    import numpy as np

    w = 7
    h = 7
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

    # Chessboard points in the world frame, e.g. (0,0,0), (1,0,0), (2,0,0) ..., (w-1,h-1,0);
    # Z stays 0, so only the X and Y columns are filled in
    objp = np.zeros((w * h, 3), np.float32)
    objp[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)

    objpoints = []  # 3-D points in the world frame
    imgpoints = []  # 2-D points in the image plane

    img_path = "3.png"
    img = cv2.imread(img_path)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    cv2.imshow("cs", gray)

    ret, corners = cv2.findChessboardCorners(gray, (w, h), None)
    print(corners)
    if not ret:
        # Without this check, cornerSubPix fails with the "count >= 0" assertion shown above
        raise SystemExit("no corners found for pattern size (%d, %d)" % (w, h))

    cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
    objpoints.append(objp)
    imgpoints.append(corners)

    # Draw the corners on the image
    cv2.drawChessboardCorners(gray, (w, h), corners, ret)
    cv2.imshow("cs2", gray)
    cv2.waitKey(0)

2. Running the calibration

This step uses the camera calibration function `cv2.calibrateCamera`.

The following error means that no corners were found:

    cv2.error: OpenCV(4.5.3) C:\Users\runneradmin\AppData\Local\Temp\pip-req-build-_xlv4eex\opencv\modules\calib3d\src\calibration.cpp:3694: error: (-215:Assertion failed) nimages > 0 in function 'cv::calibrateCameraRO'

The calibration call is:

    # Calibrate
    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)


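Here `ret` is the RMS reprojection error, `mtx` is the camera intrinsic matrix, and `dist` holds the distortion coefficients. `rvecs` are the rotation vectors from the chessboard's world frame to the camera frame and must be converted with the Rodrigues transform to obtain rotation matrices; `tvecs` are the corresponding translation vectors.

Of these, `mtx` is the camera intrinsic matrix we are after. It is passed to `solvePnP` in the next step, and `solvePnP` then yields the last two arguments of the hand-eye calibration function.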
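
Since the rotation vectors have to go through the Rodrigues transform, here is a minimal standard-library sketch of that conversion, assuming the usual axis-angle convention (the vector's direction is the rotation axis, its length the angle in radians). In the real pipeline `cv2.Rodrigues(rvec)[0]` does this job; the function below is only an illustration of the formula, not OpenCV's code.

```python
from math import cos, sin, sqrt, pi


def rodrigues(rvec):
    """Rotation vector (axis * angle, radians) -> 3x3 rotation matrix."""
    rx, ry, rz = rvec
    theta = sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        # No rotation: return the identity
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta  # unit rotation axis
    c, s, v = cos(theta), sin(theta), 1.0 - cos(theta)
    return [
        [c + kx * kx * v,      kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v,      ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


# A quarter turn about the z axis maps the x axis onto the y axis
R = rodrigues((0.0, 0.0, pi / 2))
for row in R:
    print([round(value, 6) for value in row])
```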

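Below is the corner detection and calibration code as I adapted it, part by part:

    # How to use the calibration module: it processes the images stored in the folder
    # given by "save_img_path": "Demarcate_img", mainly to build the first two
    # arguments of calibrateCamera():
    # objectPoints: points in the world frame; in C++ a vector<vector<Point3f>>.
    # imagePoints: the corresponding image points; like objectPoints, a vector<vector<Point2f>>.
    # They are stored in two lists.
    # // core code at line 1568 of Trackbar_class
    # So the main purpose of this module is to collect calibration samples in
    # Demarcate_img that are as accurate and as numerous as possible.
    # Note: when Calculate_switch is set to 1, the program applies the (w, h) of the
    # sample currently displayed to every calibration image in Demarcate_img,
    # so make sure all images in the folder use the same w, h as the one displayed!
    def out_demarcate_param(w, h, save_img_path, criteria):
        """Parse the stored calibration images and return the calibration parameters."""
        objp = np.zeros((w * h, 3), np.float32)
        objp[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)
        # World and image coordinates of the chessboard corners
        objpoints = []  # 3-D points in the world frame
        imgpoints = []  # 2-D points in the image plane
        images = glob.glob(save_img_path + '/*.bmp')  # requires `import glob`
        print("1040 starting calibration analysis......")
        for fname in images:
            print("calibration image: " + str(fname))
            img = cv2.imread(fname)
            try:
                gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            except cv2.error:
                gray = img
            # Find the chessboard corners
            ret, corners = cv2.findChessboardCorners(gray, (w, h), None)
            # Store the point pairs when enough corners were found
            if ret == True:
                cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
                objpoints.append(objp)
                imgpoints.append(corners)
        # Calibrate
        ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)
        print("1056 calibration finished, returning the parameters")
        return ret, mtx, dist, rvecs, tvecs, objpoints, imgpoints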

    def eliminate_distortion(ret, mtx, dist, rvecs, tvecs, img2, objpoints, imgpoints):
        # Undistort the image
        pr_ll("cs_down")  # pr_ll appears to be the author's own print helper (not shown)
        h, w = img2.shape[:2]
        pr_ll(mtx)
        pr_ll(mtx.dtype)
        pr_ll(type(mtx))
        pr_ll(dist)
        pr_ll(dist.dtype)
        pr_ll(type(dist))
        pr_ll(w)
        pr_ll(h)
        pr_ll("cs_up")
        newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 0, (w, h))  # free scaling parameter
        dst = cv2.undistort(img2, mtx, dist, None, newcameramtx)
        # Crop the image to the ROI computed above
        # x, y, w, h = roi
        # dst = dst[y:y+h, x:x+w]
        # Reprojection error
        total_error = 0
        for i in range(len(objpoints)):
            imgpoints2, _ = cv2.projectPoints(objpoints[i], rvecs[i], tvecs[i], mtx, dist)
            error = cv2.norm(imgpoints[i], imgpoints2, cv2.NORM_L2) / len(imgpoints2)
            total_error += error
        # print("1093 total error: " + str(total_error / len(objpoints)))
        return dst

    def demarcate_angular_get(img, update_param_dict, fixed_param_dict, img_datas=None):
        """Detect corners for calibration and drive the collection of calibration data."""
        wind_hint_show_bool, show_img = wind_hint_show_fun(fixed_param_dict)
        global global_save_img_button
        global global_save_json_button
        global global_demarcate_param_list
        global global_w2
        global global_h2
        w = update_param_dict["w"]
        h = update_param_dict["h"]
        save_img_button = update_param_dict["Img_save_button"]
        # Save the result to a JSON file (the calibration has to be computed first)
        save_json_button = update_param_dict["Json_save_button"]
        # Undistort the image with the correction module
        correct_switch = update_param_dict["Correct_switch"]
        # Compute the calibration. The error
        # cv2.error: OpenCV(4.5.3) C:\Users\runneradmin\AppData\Local\Temp\pip-req-build-_xlv4eex\opencv\modules\calib3d\src\calibration.cpp:3694: error: (-215:Assertion failed) nimages > 0 in function 'cv::calibrateCameraRO'
        # means that the corner search went wrong
        calculate_switch = update_param_dict["Calculate_switch"]
        # Fixed path for the stored images
        save_img_path = fixed_param_dict["save_img_path"]
        # Fixed path of the JSON file
        demarcate_json_path = fixed_param_dict["demarcate_json_path"]
        # When true, search for the pattern size automatically
        Auto_bool = update_param_dict["Auto_bool"]
        # Termination criteria for the corner refinement
        criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
        # Chessboard pattern size
        # w = 9
        # h = 6
        # w = 12
        # h = 9
        # Chessboard points in the world frame, e.g. (0,0,0), (1,0,0), (2,0,0) ..., (8,5,0);
        # Z stays 0, so only the X and Y columns are filled in
        objp = np.zeros((w * h, 3), np.float32)
        objp[:, :2] = np.mgrid[0:w, 0:h].T.reshape(-1, 2)
        # World and image coordinates of the chessboard corners
        objpoints = []  # 3-D points in the world frame
        imgpoints = []  # 2-D points in the image plane
        show_img = img.copy()
        try:
            gray = cv2.cvtColor(show_img, cv2.COLOR_BGR2GRAY)
        except cv2.error:
            gray = show_img
        # Defaults so that `ret` is defined even when the search below raises
        ret, corners = False, None
        if Auto_bool == 1:
            # Find the chessboard corners with the automatically stepped size
            try:
                ret, corners = cv2.findChessboardCorners(gray, (global_w2, global_h2), None)
            except cv2.error:
                pass
        else:
            # Find the chessboard corners with the size set by the user
            ret, corners = cv2.findChessboardCorners(gray, (w, h), None)
        # Store the point pairs when enough corners were found
        if ret == True:
            if Auto_bool == 1:
                print("corners found: " + str(global_w2) + "," + str(global_h2))
            cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
            objpoints.append(objp)
            imgpoints.append(corners)
            # Draw the corners on the image
            cv2.drawChessboardCorners(show_img, (w, h), corners, ret)
        else:
            if Auto_bool == 1:
                # Step through candidate pattern sizes
                if global_h2 <= 12:
                    if global_w2 <= 12:
                        global_w2 = global_w2 + 1
                    else:
                        global_w2 = 4
                        global_h2 = global_h2 + 1
                    print("trying pattern size: " + str(global_w2) + "," + str(global_h2))
            else:
                print("no corners found")
        if save_img_button != global_save_img_button:
            t = time.localtime()
            t_sting = (str(t.tm_year) + "_" + str(t.tm_mon) + "_" + str(t.tm_mday) + "_"
                       + str(t.tm_hour) + "_" + str(t.tm_min) + "_" + str(t.tm_sec) + "_"
                       + str(t.tm_wday) + ".bmp")
            path_name = save_img_path + "/" + t_sting
            cv2.imwrite(path_name, img)
            global_save_img_button = save_img_button
            # print("saved image: " + path_name)
        if calculate_switch == 1:
            # When enabled, the images already saved in the folder are analysed
            # and the results are kept in a program variable
            print("1018 entering the calibration computation")
            for data in out_demarcate_param(w, h, save_img_path, criteria):
                global_demarcate_param_list.append(data)
        elif calculate_switch == 0:
            # Clear the calibration data
            global_demarcate_param_list = []
        else:
            pass
        if correct_switch == 1:
            # Undistortion enabled
            if global_demarcate_param_list != []:
                ret = global_demarcate_param_list[0]
                mtx = global_demarcate_param_list[1]
                dist = global_demarcate_param_list[2]
                rvecs = global_demarcate_param_list[3]
                tvecs = global_demarcate_param_list[4]
                objpoints = global_demarcate_param_list[5]
                imgpoints = global_demarcate_param_list[6]
                show_img = eliminate_distortion(ret, mtx, dist, rvecs, tvecs, img, objpoints, imgpoints)
            else:
                pr_ll("test: loading the configuration file")
                data_dict = set_setting_file("Distortion_correct.json")
                ret = data_dict["ret"]
                mtx = list_to_np_list(data_dict["mtx"], type_name="float64")
                dist = list_to_np_list(data_dict["dist"], type_name="float64")
                rvecs = list_to_np_list(data_dict["rvecs"])
                tvecs = list_to_np_list(data_dict["tvecs"])
                objpoints = list_to_np_list(data_dict["objpoints"])
                imgpoints = list_to_np_list(data_dict["imgpoints"])
                show_img = eliminate_distortion(ret, mtx, dist, rvecs, tvecs, img, objpoints, imgpoints)
        if save_json_button != global_save_json_button:
            # Save the current calibration data to the JSON file
            global_save_json_button = save_json_button
            if global_demarcate_param_list != []:
                param_dict = {}
                pr_ll("mtx")
                pr_ll("mtx")
                param_dict["w"] = w
                param_dict["h"] = h
                param_dict["ret"] = global_demarcate_param_list[0]
                param_dict["mtx"] = global_demarcate_param_list[1].tolist()                  # numpy.ndarray
                param_dict["dist"] = np_list_to_list(global_demarcate_param_list[2])         # numpy.ndarray
                param_dict["rvecs"] = np_list_to_list(global_demarcate_param_list[3])        # list of numpy.ndarray
                param_dict["tvecs"] = np_list_to_list(global_demarcate_param_list[4])        # list of numpy.ndarray
                param_dict["objpoints"] = np_list_to_list(global_demarcate_param_list[5])    # list of numpy.ndarray
                param_dict["imgpoints"] = np_list_to_list(global_demarcate_param_list[6])    # list of numpy.ndarray
                save_setting_file(demarcate_json_path, param_dict)
            else:
                logger_flow.warning("1037 compute the calibration parameters first")
        return show_img, show_img, img_datas

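3. Solving the camera pose with the PnP (Perspective-n-Point) method

Reference: solvePnP, solving the camera pose (R, t) from 2D and 3D points

Given the feature points (here the chessboard corners) in world coordinates (3D), their pixel coordinates (2D), the camera intrinsic matrix, and the camera distortion coefficients, these four inputs are enough to solve the extrinsics (R, T) between the camera and the marker.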
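
To see how these four inputs relate, the sketch below projects a board point into the image for a given pose, which is the forward direction of the problem that `solvePnP` inverts. It ignores lens distortion, and the intrinsic matrix, pose, and helper name are made-up illustration values, not the calibration results from this article.

```python
def project_point(K, R, t, point):
    """Project a 3-D board point into pixel coordinates (no lens distortion)."""
    # Camera-frame coordinates: Xc = R @ X + t
    xc, yc, zc = (sum(R[i][k] * point[k] for k in range(3)) + t[i]
                  for i in range(3))
    # Perspective division followed by the intrinsic matrix (including skew K[0][1])
    u = K[0][0] * xc / zc + K[0][1] * yc / zc + K[0][2]
    v = K[1][1] * yc / zc + K[1][2]
    return u, v


# Illustrative values only: focal length 1000 px, principal point (640, 360)
K = [[1000.0, 0.0, 640.0],
     [0.0, 1000.0, 360.0],
     [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # board parallel to the sensor
t = (0.0, 0.0, 500.0)                                    # board 500 mm in front of the camera

print(project_point(K, R, t, (0.0, 0.0, 0.0)))   # board origin
print(project_point(K, R, t, (30.0, 0.0, 0.0)))  # one 30 mm square to the right
```

`solvePnP` searches for the `R` and `t` that make such projections agree with the detected corners as closely as possible.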

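This is the second-to-last step: using `solvePnP` to prepare the last two arguments of the calibration function.

Here we need:

1. `objectPoints`: the 3D coordinate array built from the number of squares along x, the number of squares along y, and the square size (in mm), with the top-left corner of the chessboard as the origin. Because the chessboard can be treated as a plane, the z coordinate is 0.

2. The 2D array built from the corners returned by the corner detection function. (You can review these yourself beforehand and store them in a JSON or XML file, to avoid the incomplete-corner problem described below when an image is poor.)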
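
The first item can be sketched without OpenCV. The function below (a hypothetical helper) builds the planar point list with the same index arithmetic that `get_RT_from_chessboard` uses, `((x_num - j - 1) * len, (y_num - i - 1) * len)`, so the coordinates count down and the last generated point is the origin. Which physical corner that corresponds to depends on the order in which the detector returns the corners, so treat the orientation as an assumption to check against your own images.

```python
def board_object_points(x_num, y_num, square_len):
    """Return x_num * y_num planar points (X, Y, 0), generated row by row."""
    points = []
    for i in range(y_num):
        for j in range(x_num):
            points.append(((x_num - j - 1) * square_len,
                           (y_num - i - 1) * square_len,
                           0.0))
    return points


pts = board_object_points(11, 8, 30)  # board used in this article: 11 x 8, 30 mm squares
print(len(pts))   # 88
print(pts[0])     # (300, 210, 0.0)
print(pts[-1])    # (0, 0, 0.0)
```

The number of generated points must equal the number of detected corners, otherwise `solvePnP` rejects the input.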

    # Compute the camera extrinsics from a chessboard image
    def get_RT_from_chessboard(img_path, chess_board_x_num, chess_board_y_num, K, chess_board_len):
        '''
        :param img_path: path of the image to read
        :param chess_board_x_num: number of chessboard squares along x
        :param chess_board_y_num: number of chessboard squares along y
        :param K: camera intrinsic matrix
        :param chess_board_len: size of one chessboard square, mm
        :return: camera extrinsics as a 4x4 matrix
        '''
        img = cv2.imread(img_path)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        size = gray.shape[::-1]
        ret, corners = cv2.findChessboardCorners(gray, (chess_board_x_num, chess_board_y_num), None)
        if not ret:
            raise RuntimeError("no chessboard corners found in " + img_path)
        # print(corners)
        # 2 x N array of pixel coordinates
        corner_points = np.zeros((2, corners.shape[0]), dtype=np.float64)
        for i in range(corners.shape[0]):
            corner_points[:, i] = corners[i, 0, :]
        # print(corner_points)
        # 3 x N array of board coordinates; the z row stays 0
        object_points = np.zeros((3, chess_board_x_num * chess_board_y_num), dtype=np.float64)
        flag = 0
        for i in range(chess_board_y_num):
            for j in range(chess_board_x_num):
                # Use the function arguments instead of the hard-coded 11 and 8
                object_points[:2, flag] = np.array([(chess_board_x_num - j - 1) * chess_board_len,
                                                    (chess_board_y_num - i - 1) * chess_board_len])
                flag += 1
        # print(object_points)
        retval, rvec, tvec = cv2.solvePnP(object_points.T, corner_points.T, K, distCoeffs=None)
        # print(rvec.reshape((1,3)))
        # Rotation vector -> rotation matrix, then build the 4x4 homogeneous matrix
        RT = np.column_stack(((cv2.Rodrigues(rvec))[0], tvec))
        RT = np.vstack((RT, np.array([0, 0, 0, 1])))
        # print(retval, rvec, tvec)
        # print(RT)
        return RT

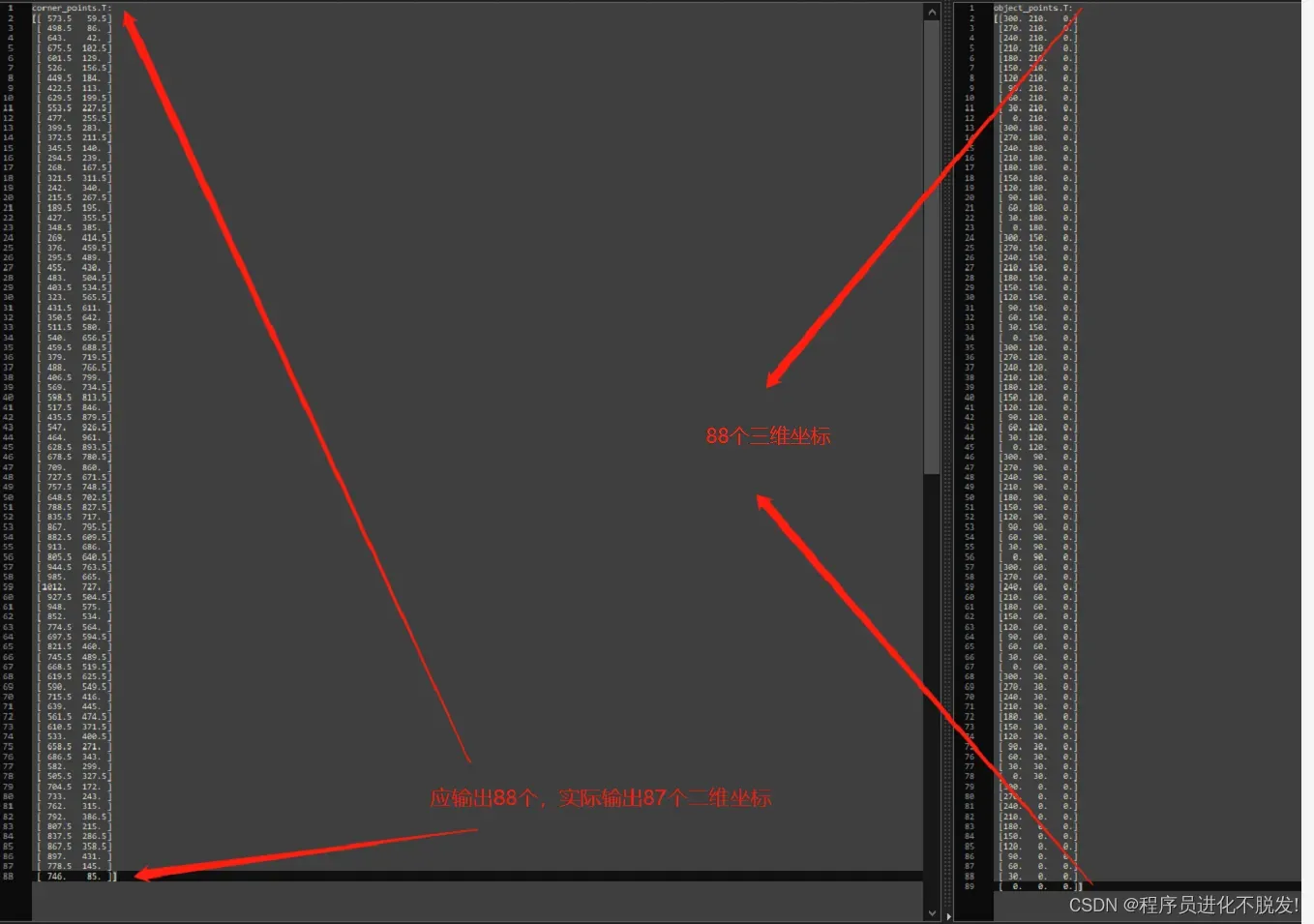

Running it produced the following error:

      File "I:/Python/BasicDemo/ele/cs1.py", line 116, in get_RT_from_chessboard
        retval, rvec, tvec = cv2.solvePnP(object_points.T, corner_points.T, K, distCoeffs=dist)
    cv2.error: OpenCV(4.5.3) C:\Users\runneradmin\AppData\Local\Temp\pip-req-build-_xlv4eex\opencv\modules\calib3d\src\solvepnp.cpp:833: error: (-215:Assertion failed) ( (npoints >= 4) || (npoints == 3 && flags == SOLVEPNP_ITERATIVE && useExtrinsicGuess) || (npoints >= 3 && flags == SOLVEPNP_SQPNP) ) && npoints == std::max(ipoints.checkVector(2, CV_32F), ipoints.checkVector(2, CV_64F)) in function 'cv::solvePnPGeneric'

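This means that two point sets do not match: the 3D points generated in code from the calibration board's (here a chessboard's) x count, y count, and square size (lower right), and the 2D array built from the corners returned by the corner detection function (lower left). In my case the corner detection function returned one corner too few, which mainly depends on the image samples you prepare.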

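4. Feeding everything into the hand-eye calibration function `calibrateHandEye()`

After all the steps above, the four arguments of the function are finally ready. Passing them in yields a rotation matrix and a translation vector. Stack the two column-wise and append the row [0, 0, 0, 1] to obtain the homogeneous form:

    RT = np.column_stack((R, T))
    RT = np.vstack((RT, np.array([0, 0, 0, 1])))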

Rotation matrix from the hand-eye result

[[ 0.91155556 -0.05663482 -0.40725785]

[-0.07167205 0.95341986 -0.29300801]

[ 0.40488217 0.29628208 0.86503604]]

Translation vector from the hand-eye result

[[ 57.60044963]

[-101.07768643]

[ 124.24813674]]

Transform of the camera relative to the end effector:

[[ 9.11555562e-01 -5.66348167e-02 -4.07257848e-01 5.76004496e+01]

[-7.16720453e-02 9.53419858e-01 -2.93008007e-01 -1.01077686e+02]

[ 4.04882175e-01 2.96282081e-01 8.65036041e-01 1.24248137e+02]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

The commented code follows; prepare the JSON files and the matching images yourself.

    import json
    from math import cos, sin, pi

    import cv2
    import numpy as np

    # Camera intrinsics (Daheng camera)
    K = np.array([[4283.95148301687, -0.687179973528103, 2070.79900757240],
                  [0, 4283.71915784510, 1514.87274457919],
                  [0, 0, 1]], dtype=np.float64)
    # K = np.array([[1358.1241306355312, 0.0, 979.3369840601881],
    #               [0.0, 1373.7959382800464, 746.2664607155928],
    #               [0.0, 0.0, 1.0]], dtype=np.float64)
    dist = np.array([0.0, 0.0, 0.0, 0.0])
    # dist = np.array([[0.027380026193468753, -0.08801521475213679, 0.0011824388616034057,
    #                   -0.0008063100320946023, 0.18819181491200773]], dtype=np.float64)

    chess_board_x_num = 11  # number of chessboard squares along x
    chess_board_y_num = 8   # number of chessboard squares along y
    chess_board_len = 30    # size of one chessboard square, mm


    # Build a rotation matrix from Euler angles (radians), R = Rz @ Ry @ Rx
    def myRPY2R_robot(x, y, z):
        Rx = np.array([[1, 0, 0], [0, cos(x), -sin(x)], [0, sin(x), cos(x)]])
        Ry = np.array([[cos(y), 0, sin(y)], [0, 1, 0], [-sin(y), 0, cos(y)]])
        Rz = np.array([[cos(z), -sin(z), 0], [sin(z), cos(z), 0], [0, 0, 1]])
        R = Rz @ Ry @ Rx
        return R


    # Build the 4x4 transform from a pose: Euler angles in degrees, then the translation
    def pose_robot(x, y, z, Tx, Ty, Tz):
        thetaX = x / 180 * pi
        thetaY = y / 180 * pi
        thetaZ = z / 180 * pi
        R = myRPY2R_robot(thetaX, thetaY, thetaZ)
        t = np.array([[Tx], [Ty], [Tz]])
        RT1 = np.column_stack([R, t])  # append t as the fourth column
        RT1 = np.vstack((RT1, np.array([0, 0, 0, 1])))
        # RT1 = np.linalg.inv(RT1)
        return RT1


    # Compute the camera extrinsics from a chessboard image
    def get_RT_from_chessboard(img_path, chess_board_x_num, chess_board_y_num, K, chess_board_len):
        '''
        :param img_path: path of the image to read
        :param chess_board_x_num: number of chessboard squares along x
        :param chess_board_y_num: number of chessboard squares along y
        :param K: camera intrinsic matrix
        :param chess_board_len: size of one chessboard square, mm
        :return: camera extrinsics as a 4x4 matrix
        '''
        img = cv2.imread(img_path)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        ret, corners = cv2.findChessboardCorners(gray, (chess_board_x_num, chess_board_y_num), None)
        if not ret:
            raise RuntimeError("no chessboard corners found in " + img_path)
        # 2 x N array of pixel coordinates
        corner_points = np.zeros((2, corners.shape[0]), dtype=np.float64)
        for i in range(corners.shape[0]):
            corner_points[:, i] = corners[i, 0, :]
        # 3 x N array of board coordinates; the z row stays 0
        object_points = np.zeros((3, chess_board_x_num * chess_board_y_num), dtype=np.float64)
        flag = 0
        for i in range(chess_board_y_num):
            for j in range(chess_board_x_num):
                # Use the function arguments instead of the hard-coded 11 and 8
                object_points[:2, flag] = np.array([(chess_board_x_num - j - 1) * chess_board_len,
                                                    (chess_board_y_num - i - 1) * chess_board_len])
                flag += 1
        print("Solving the camera pose from the 2D and 3D corner points of this sample")
        print("\n")
        retval, rvec, tvec = cv2.solvePnP(object_points.T, corner_points.T, K, distCoeffs=dist)
        # Rotation vector -> rotation matrix, then build the 4x4 homogeneous matrix
        RT = np.column_stack(((cv2.Rodrigues(rvec))[0], tvec))
        RT = np.vstack((RT, np.array([0, 0, 0, 1])))
        return RT


    def set_setting_file(path):
        """Load a settings dict from the first line of a JSON file; return {} on failure."""
        try:
            with open(path) as json_file:
                # The last two characters of the line are dropped before parsing,
                # presumably to match how the author's files were written
                setting_dict_string = json_file.readline()[:-2]
            return json.loads(setting_dict_string)
        except (OSError, ValueError):
            return {}


    def read_xyz_Rxyz(good_picture, folder, ele_data_dict=None):
        """Read the robot pose stored in the JSON file that belongs to each image."""
        if ele_data_dict is None:
            ele_data_dict = {}
        for i in good_picture:
            ele_data_dict[i] = set_setting_file(folder + '/' + str(i) + '.json')
        return ele_data_dict


    folder = r"save_cail_data"  # folder holding the chessboard images

    # Oddly, corners are detected in some chessboard images but not in others;
    # the running time of get_RT_from_chessboard gives a hint.
    # good_picture = [2, 3, 4, 5, 6, 7, 8, 9]
    # good_picture = [1, 3, 10, 11, 12]
    good_picture = [2, 3, 4, 5, 7, 8, 9]  # images in which the chessboard corners can be detected
    ele_data_dict = read_xyz_Rxyz(good_picture, folder)
    file_num = len(good_picture)

    # Board-to-camera transforms
    R_all_chess_to_cam_1 = []
    T_all_chess_to_cam_1 = []
    for i in good_picture:
        image_path = folder + '/' + str(i) + '.bmp'
        print("Parsing the corner information of " + image_path)
        RT = get_RT_from_chessboard(image_path, chess_board_x_num, chess_board_y_num, K, chess_board_len)
        # RT = np.linalg.inv(RT)
        R_all_chess_to_cam_1.append(RT[:3, :3])
        T_all_chess_to_cam_1.append(RT[:3, 3].reshape((3, 1)))

    # End-to-base transforms
    R_all_end_to_base_1 = []
    T_all_end_to_base_1 = []
    for i in good_picture:
        print("Reading the JSON file for image " + str(i))
        # pose_robot takes the angles first, then the position (same order as in the first example)
        RT = pose_robot(ele_data_dict[i]["Rx"], ele_data_dict[i]["Ry"], ele_data_dict[i]["Rz"],
                        ele_data_dict[i]["x"], ele_data_dict[i]["y"], ele_data_dict[i]["z"])
        R_all_end_to_base_1.append(RT[:3, :3])
        T_all_end_to_base_1.append(RT[:3, 3].reshape((3, 1)))

    print("Rotation matrices and translation vectors relative to the base frame are ready......")
    print("Entering the hand-eye calibration function......")
    print("\n")

    # Hand-eye calibration
    R, T = cv2.calibrateHandEye(R_all_end_to_base_1, T_all_end_to_base_1, R_all_chess_to_cam_1, T_all_chess_to_cam_1)
    print("Rotation matrix from the hand-eye result")
    print(R)
    print("\n")
    print("Translation vector from the hand-eye result")
    print(T)
    RT = np.column_stack((R, T))
    RT = np.vstack((RT, np.array([0, 0, 0, 1])))  # this is the cam-to-end transform
    print("\n")
    print('Transform of the camera relative to the end effector:')
    print(RT)

    # Verification: in principle the result should differ only slightly between samples
    for i in range(len(good_picture)):
        # End-to-base transform: stack R and t column-wise, then append the homogeneous row
        RT_end_to_base = np.column_stack((R_all_end_to_base_1[i], T_all_end_to_base_1[i]))
        RT_end_to_base = np.vstack((RT_end_to_base, np.array([0, 0, 0, 1])))
        # Homogeneous matrix of the calibration board relative to the camera
        RT_chess_to_cam = np.column_stack((R_all_chess_to_cam_1[i], T_all_chess_to_cam_1[i]))
        RT_chess_to_cam = np.vstack((RT_chess_to_cam, np.array([0, 0, 0, 1])))
        # Hand-eye transform
        RT_cam_to_end = np.column_stack((R, T))
        RT_cam_to_end = np.vstack((RT_cam_to_end, np.array([0, 0, 0, 1])))
        # Pose of the fixed chessboard relative to the robot base frame
        RT_chess_to_base = RT_end_to_base @ RT_cam_to_end @ RT_chess_to_cam
        # The product is inverted before printing, as in the original script
        RT_chess_to_base = np.linalg.inv(RT_chess_to_base)
        print('Run', i)
        print(RT_chess_to_base[:3, :])
        print('')

Output

I:\NI\Anaconda\envs\cv2\python.exe I:/Python/BasicDemo/ele/cs2.py

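Parsing the corner information of save_cail_data/2.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/3.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/4.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/5.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/7.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/8.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Parsing the corner information of save_cail_data/9.bmp

Solving the camera pose from the 2D and 3D corner points of this sample

Reading the JSON file for image 2

Reading the JSON file for image 3

Reading the JSON file for image 4

Reading the JSON file for image 5

Reading the JSON file for image 7

Reading the JSON file for image 8

Reading the JSON file for image 9

Rotation matrices and translation vectors relative to the base frame are ready......

Entering the hand-eye calibration function......

Rotation matrix from the hand-eye result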

[[ 0.91155556 -0.05663482 -0.40725785]

[-0.07167205 0.95341986 -0.29300801]

[ 0.40488217 0.29628208 0.86503604]]

Translation vector from the hand-eye result

[[ 57.60044963]

[-101.07768643]

[ 124.24813674]]

Transform of the camera relative to the end effector:

[[ 9.11555562e-01 -5.66348167e-02 -4.07257848e-01 5.76004496e+01]

[-7.16720453e-02 9.53419858e-01 -2.93008007e-01 -1.01077686e+02]

[ 4.04882175e-01 2.96282081e-01 8.65036041e-01 1.24248137e+02]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

Run 0

[[ 2.33572044e-01 -9.10009016e-01 3.42531300e-01 7.72449880e+02]

[ 4.00302828e-01 -2.31041758e-01 -8.86779201e-01 -1.56513760e+02]

[ 8.86116102e-01 3.44243079e-01 3.10314286e-01 -1.77002869e+03]]

Run 1

[[ 2.78244828e-01 -8.84523147e-01 3.74431060e-01 7.78947806e+02]

[ 1.83357762e-01 -3.33742558e-01 -9.24659849e-01 -1.17473049e+02]

[ 9.42846619e-01 3.25936661e-01 6.93220441e-02 -1.76238460e+03]]

Run 2

[[ 2.46706389e-01 -9.14939020e-01 3.19409686e-01 8.03039487e+02]

[ 2.14992414e-01 -2.69710750e-01 -9.38634313e-01 -1.28440399e+02]

[ 9.44941384e-01 3.00237741e-01 1.30165583e-01 -1.76815689e+03]]

Run 3

[[ 5.89266715e-01 -6.25720741e-01 5.11114756e-01 6.42562840e+02]

[ 2.32373222e-01 -4.74629044e-01 -8.48958159e-01 -1.33993275e+02]

[ 7.73800636e-01 6.19032168e-01 -1.34282353e-01 -1.71947250e+03]]

Run 4

[[ 5.47363417e-01 -3.14921335e-01 7.75382384e-01 6.04746992e+02]

[ 6.50659114e-01 -4.22559881e-01 -6.30940460e-01 -2.26083803e+02]

[ 5.26342100e-01 8.49863342e-01 -2.63873836e-02 -1.68417190e+03]]

Run 5

[[ 7.82415161e-01 -2.89829020e-01 5.51203824e-01 5.75035151e+02]

[ 3.91139161e-01 -4.60040208e-01 -7.97102982e-01 -1.80266317e+02]

[ 4.84599498e-01 8.39262859e-01 -2.46578953e-01 -1.66563927e+03]]

Run 6

[[ 6.79225047e-01 -2.15407133e-01 7.01607513e-01 5.84337739e+02]

[ 5.82864803e-01 -4.22614543e-01 -6.94021303e-01 -2.20238484e+02]

[ 4.46006678e-01 8.80338977e-01 -1.61497147e-01 -1.66336005e+03]]

Process finished with exit code 0
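
The verification loop expects the printed board pose to stay nearly constant across samples. One rough way to put a number on that, offered here as a sketch rather than as part of the original script, is the per-axis range (maximum minus minimum) of the translation column over the seven printed matrices. The values below are copied from the output above; the helper name is hypothetical, and the unit is the same as in the printed matrices.

```python
# Translation columns (last column) of the seven printed 3x4 matrices
translations = [
    (772.449880, -156.513760, -1770.02869),
    (778.947806, -117.473049, -1762.38460),
    (803.039487, -128.440399, -1768.15689),
    (642.562840, -133.993275, -1719.47250),
    (604.746992, -226.083803, -1684.17190),
    (575.035151, -180.266317, -1665.63927),
    (584.337739, -220.238484, -1663.36005),
]


def axis_ranges(points):
    """Per-axis spread (max - min) over a list of 3-D points."""
    return tuple(max(p[k] for p in points) - min(p[k] for p in points)
                 for k in range(3))


ranges = axis_ranges(translations)
print("per-axis range of the translation:", ranges)
```

The smaller these ranges are, the more consistent the calibration is across samples; a large spread is a cue to recheck the inputs, for example the pose order and the corner detection of individual images.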

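Copyright notice: this is an original article by the blogger 程序员进化不脱发!. Copyright belongs to the original author; if it infringes, please contact us for removal.

Original link: https://blog.csdn.net/weixin_43134049/article/details/122922816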