【人工智能】国产开源大模型聊天 AquilaChat 快速开始上手实战&效果评测

文章目录

- 【人工智能】国产开源大模型聊天 AquilaChat 快速开始上手实战&效果评测

- 禅与计算机程序设计艺术:评测结论 —— AquilaChat 在写作水平上跟ChatGLM-6B差不多,但是 AquilaChat 多编程语言写代码能力还是比较弱。。

- 输入:

- 输出:

- 输入:

- 报错输出:

- AquilaChat 快速开始

- 环境依赖安装

- 安装相关依赖

- 具体使用方式 / How to use

- Fork 源代码:

- 1. 推理/Inference

- 效果测试

- 输入1:

- 输出1:

- 输入2:

- 输出2:

- 2. 可监督微调/Supervised Fine-tuning(SFT)

- Step 1: 配置模型/ Setup Checkpoints

- Step 2: 修改参数/ Modify Parameters

- Step 3: 启动可监督微调/Start SFT

- AquilaChat 简介 / AquilaChat Overview

- Aquila 模型细节 / Aquila Model details

- 训练数据集/Training data

- 证书/License

禅与计算机程序设计艺术:评测结论 —— AquilaChat 在写作水平上跟ChatGLM-6B差不多,但是 AquilaChat 多编程语言写代码能力还是比较弱。。

AquilaChat 写代码水平还不行(应该是代码数据集训练不够),问技术性问题(比如说:用golang实现冒泡排序算法)会有一定的概率报错:

File "/home/me/ai/FlagAI/flagai/model/predictor/aquila.py", line 41, in aquila_generate

next_token = sample_top_p(probs, top_p)

File "/home/me/ai/FlagAI/flagai/model/predictor/aquila.py", line 84, in sample_top_p

next_token = torch.multinomial(probs_sort, num_samples=1)

RuntimeError: probability tensor contains either `inf`, `nan` or element < 0):

输入:

{“prompt”:“冒泡排序算法代码”}

输出:

下面是冒泡排序的 Python 代码实现:

def bubble_sort(array):

n = len(array)

# 遍历所有数组元素

for i in range(n):

# 最后 i 个元素不需要遍历

for j in range(n-i-1):

# 如果当前元素大于下一个元素,则交换它们

if array[j] > array[j+1]:

# 交换元素

array[j], array[j+1] = array[j+1], array[j]

return array

输入:

{“prompt”:“用golang实现冒泡排序算法”}

报错输出:

prompt is 用golang实现冒泡排序算法

INFO: 127.0.0.1:41664 - "POST / HTTP/1.1" 500 Internal Server Error

ERROR: Exception in ASGI application

Traceback (most recent call last):

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/uvicorn/protocols/http/h11_impl.py", line 428, in run_asgi

result = await app( # type: ignore[func-returns-value]

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/uvicorn/middleware/proxy_headers.py", line 78, in __call__

return await self.app(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/fastapi/applications.py", line 282, in __call__

await super().__call__(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/applications.py", line 122, in __call__

await self.middleware_stack(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/middleware/errors.py", line 184, in __call__

raise exc

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/middleware/errors.py", line 162, in __call__

await self.app(scope, receive, _send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/middleware/exceptions.py", line 79, in __call__

raise exc

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/middleware/exceptions.py", line 68, in __call__

await self.app(scope, receive, sender)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/fastapi/middleware/asyncexitstack.py", line 20, in __call__

raise e

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/fastapi/middleware/asyncexitstack.py", line 17, in __call__

await self.app(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/routing.py", line 718, in __call__

await route.handle(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/routing.py", line 276, in handle

await self.app(scope, receive, send)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/starlette/routing.py", line 66, in app

response = await func(request)

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/fastapi/routing.py", line 241, in app

raw_response = await run_endpoint_function(

File "/home/me/ai/FlagAI/venv/lib/python3.10/site-packages/fastapi/routing.py", line 167, in run_endpoint_function

return await dependant.call(**values)

File "/home/me/ai/FlagAI/examples/Aquila/Aquila-chat/generate_chat.py", line 52, in create_item

out = aquila_generate(tokenizer,

File "/home/me/ai/FlagAI/flagai/model/predictor/aquila.py", line 41, in aquila_generate

next_token = sample_top_p(probs, top_p)

File "/home/me/ai/FlagAI/flagai/model/predictor/aquila.py", line 84, in sample_top_p

next_token = torch.multinomial(probs_sort, num_samples=1)

RuntimeError: probability tensor contains either `inf`, `nan` or element < 0

AquilaChat 快速开始

环境依赖安装

python ./setup.py install

其中, setup.py代码如下:

# Copyright © 2022 BAAI. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License")

from setuptools import find_packages, setup

setup(

name="flagai",

version="v1.7.1",

description="FlagAI aims to help researchers and developers to freely train and test large-scale models for NLP/CV/VL tasks.",

long_description=open("README.md", encoding="utf-8").read(),

long_description_content_type="text/markdown",

author="FlagAI-Open",

author_email="open@baai.ac.cn",

url="https://github.com/FlagAI-Open/FlagAI",

packages=find_packages(exclude="tests"), # same as name

license="Apache 2.0",

include_package_data=True,

python_requires=">=3.8",

install_requires=[

'nltk==3.6.7',

'sentencepiece==0.1.96',

'boto3==1.21.42',

'pandas==1.3.5',

'jieba==0.42.1',

'scikit-learn==1.0.2',

'tensorboard==2.9.0',

'transformers==4.27.1',

'datasets==2.0.0',

'setuptools==66.0.0',

'protobuf==3.19.6',

'ftfy == 6.1.1',

'Pillow >= 9.3.0',

'einops == 0.3.0',

'diffusers == 0.7.2',

'pytorch-lightning == 1.6.5',

'taming-transformers-rom1504 == 0.0.6',

'rouge-score == 0.1.2',

'sacrebleu == 2.3.1',

]

)

安装相关依赖

具体使用方式 / How to use

Fork 源代码:

https://github.com/to-be-architect/FlagAI

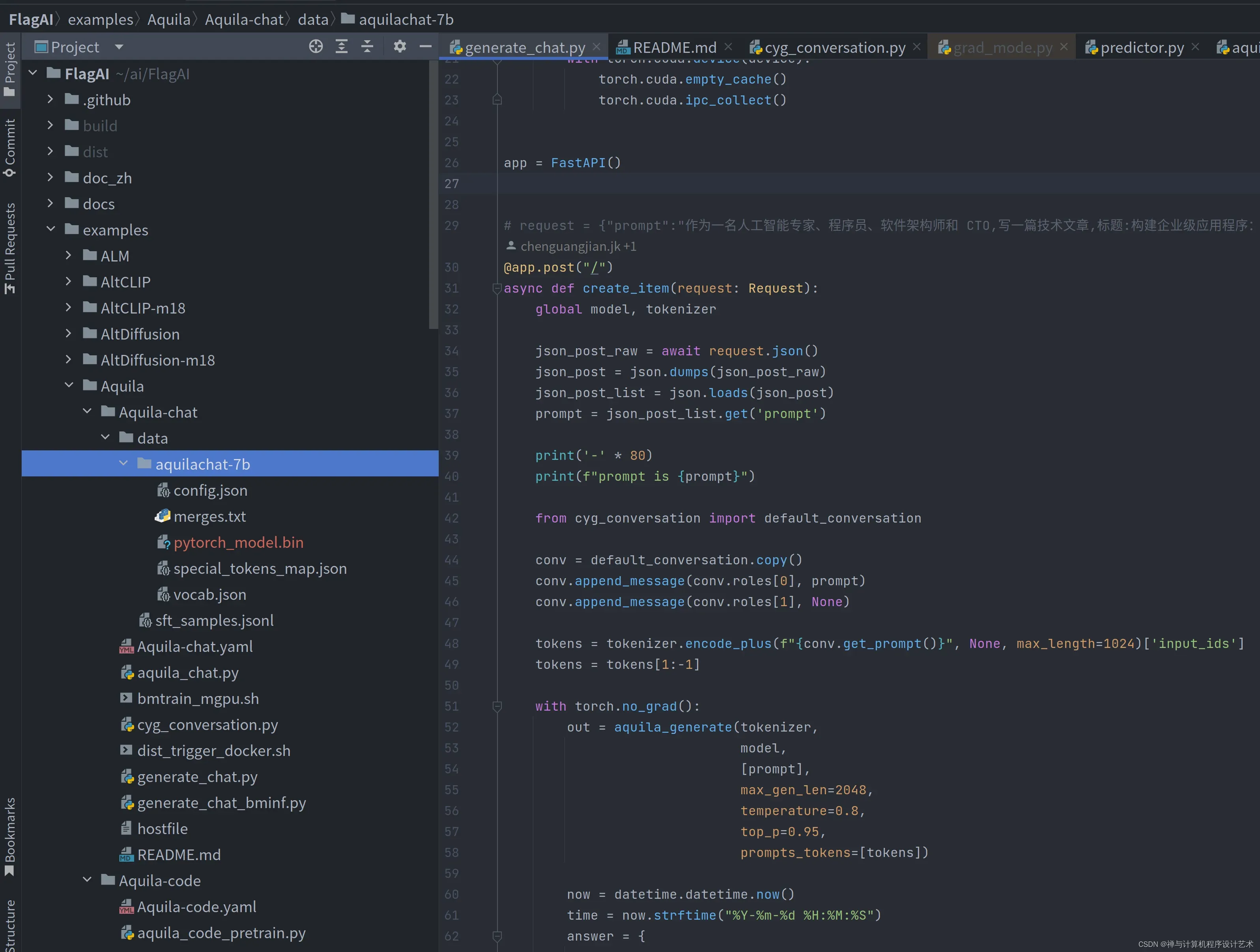

1. 推理/Inference

examples/Aquila/Aquila-chat/generate_chat.py

import datetime

import json

import os

import torch

import uvicorn

from fastapi import FastAPI, Request

from flagai.auto_model.auto_loader import AutoLoader

from flagai.model.predictor.aquila import aquila_generate

from flagai.model.predictor.predictor import Predictor

state_dict = "/home/me/ai/FlagAI/examples/Aquila/Aquila-chat/data/"

model_name = 'aquilachat-7b'

device = torch.device('cuda', 0)

def torch_gc():

if torch.cuda.is_available():

with torch.cuda.device(device):

torch.cuda.empty_cache()

torch.cuda.ipc_collect()

app = FastAPI()

# request = {"prompt":"作为一名人工智能专家、程序员、软件架构师和 CTO,写一篇技术文章,标题:构建企业级应用程序:人工智能大模型发展历史和未来趋势,5000字,markdown格式"}

@app.post("/")

async def create_item(request: Request):

global model, tokenizer

json_post_raw = await request.json()

json_post = json.dumps(json_post_raw)

json_post_list = json.loads(json_post)

prompt = json_post_list.get('prompt')

print('-' * 80)

print(f"prompt is {prompt}")

from cyg_conversation import default_conversation

conv = default_conversation.copy()

conv.append_message(conv.roles[0], prompt)

conv.append_message(conv.roles[1], None)

tokens = tokenizer.encode_plus(f"{conv.get_prompt()}", None, max_length=1024)['input_ids']

tokens = tokens[1:-1]

with torch.no_grad():

out = aquila_generate(tokenizer,

model,

[prompt],

max_gen_len=2048,

temperature=0.8,

top_p=0.95,

prompts_tokens=[tokens])

now = datetime.datetime.now()

time = now.strftime("%Y-%m-%d %H:%M:%S")

answer = {

"response": out,

"status": 200,

"time": time

}

log = "[" + time + "] " + '", prompt:"' + prompt + '", response:"' + repr(out) + '"'

print(log)

torch_gc()

return answer

if __name__ == '__main__':

loader = AutoLoader("lm",

model_dir=state_dict,

model_name=model_name,

use_cache=True)

model = loader.get_model()

tokenizer = loader.get_tokenizer()

cache_dir = os.path.join(state_dict, model_name)

model.eval()

model.half()

model.cuda(device=device)

predictor = Predictor(model, tokenizer)

uvicorn.run(app, host='0.0.0.0', port=7000, workers=1)

效果测试

输入1:

{"prompt":"怎样才算是优秀的技术人:理解技术的基本原则、本质和发展演化史,写一篇文章,分章节.10000字."}

输出1:

{

“response”: “A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human’s questions.###Human: 怎样才算是优秀的技术人:理解技术的基本原则、本质和发展演化史,写一篇文章,分章节.10000字.###Assistant: 优秀的技术人应该具备以下三个方面的能力:1. 理解技术的基本原则和技术本质:优秀的技术人应该能够理解技术的的基本原则和本质,包括计算机科学、编程语言、算法和数据结构等。他们应该能够掌握各种技术的优缺点和应用场景,并能够根据需求选择合适的语言和工具。2. 编写高质量的技术代码:优秀的技术人应该具备编写高质量的技术代码的能力,包括代码的可读性、可维护性和可扩展性等。他们应该能够熟练掌握各种编程语言和开发工具,并且能够根据项目的需求进行合理的选择和运用。3. 学习和适应新技术:优秀的技术人应该能够不断学习新技术和新工具,并根据项目的需求进行学习和适应。他们应该能够跟踪技术的发展趋势,并根据技术的发展方向进行学习和实践。除此之外,优秀的技术人还应该具备团队合作和沟通能力,能够与团队成员和良好的协作,并能够有效地与客户进行沟通和协调。总之,优秀的技术人应该具备全面的能力和素质,能够在项目中发挥重要的作用,并为技术的发展做出贡献。”,

“status”: 200,

“time”: “2023-06-13 02:45:51”

}

输入2:

你是一位人工智能专家,程序员,软件架构师,CTO,请以《人工智能大模型发展历史和未来趋势》为标题,写一篇有深度有思考有见解的专业的技术博客文章, 字数要求不少于5000字。文章目录如下:## 1. 引言## 2. 技术原理及概念## 3. 实现步骤与流程## 4. 示例与应用## 5. 优化与改进## 6. 结论与展望## 7. 附录:常见问题与解答

输出2:

《人工智能大模型发展历史和未来趋势》

引言

人工智能模型是人工智能技术的核心,也是人工智能应用的关键。在人工智能的发展历程中,模型数量不断增多,规模不断扩大,性能不断提高。目前,人工智能模型主要分为浅层模型和深度模型,其中深度模型已经成为了人工智能的主流。

技术原理及概念

人工智能大模型是指包含多个神经元的模型,它通过构建多个神经元之间的连接来实现学习和预测功能。人工智能大模型的原理是基于深度学习的卷积神经网络(CNN),它由多个卷积层、池化层、全连接层组成。在训练过程中,神经网络通过不断地迭代和优化来实现模型的准确性。

实现步骤与流程

构建模型:首先,需要构建一个具有代表性的数据集,例如 MNIST 数据集。然后,将数据集分成训练集和测试集。接着,使用深度学习框架(如 TensorFlow 或 PyTorch)来定义神经网络的架构,例如选择哪些层、每层的神经元数量等。最后,使用深度学习框架来训练神经网络,并进行必要的超参数调整。

验证和优化:在模型训练过程中,需要不断验证和调整模型的性能。通过调整网络结构、增加或减少层数、调整超参数等方式来优化模型的准确性。

应用与示例

人工智能大模型已经成为了很多领域的核心,例如自然语言处理、计算机视觉、语音识别等领域。目前,人工智能大模型已经在医疗诊断、自动驾驶、推荐系统等方面取得了显著的成果。

优化与改进

随着人工智能的发展,人们不断地探索和改进人工智能模型的细节,例如改进神经网络结构、增加模型容量、加入集成学习等。这些改进和优化可以提高模型的准确性和泛化能力。

结论与展望

随着人工智能技术的不断发展和模型的不断升级,人工智能大模型将越来越普遍。未来,人工智能大模型的应用前景非常广阔,可以应用于更多的领域,并且可以更加准确和高效地解决各种问题。但是,人工智能大模型仍然存在一些挑战和难点,例如数据质量和隐私保护等,需要不断研究和探索。

附录:常见问题与解答

Q:如何构建一个高质量的神经网络?

A:构建一个高质量的神经网络需要考虑多个因素,例如选择适合的数据集、选择适当的层数、进行适当的超参数调整等。此外,还可以使用集成学习、优化器、正则化等方式来提高模型的准确性和性能。

Q:如何评估神经网络模型的性能?

A:评估神经网络模型的性能可以采用多种指标,例如准确率、精确率、召回率、F1 值等。同时,还可以使用评估指标来了解模型的优点和缺点,并根据实际情况进行调整和优化。

Q:如何保护数据隐私?

A:在数据隐私保护方面,可以利用区块链技术、数据脱敏等技术来保护数据隐私。同时,还可以对数据进行加密、授权等方式来确保数据的安全性和可靠性。

Q:如何构建一个可扩展的人工智能大模型?

A:可扩展的人工智能大模型可以采用多种方式进行设计和优化,例如增加层数、增加参数、使用分布式计算等方式来提高模型的性能和容量。同时,还可以采用混合架构、集成学习等方式来提高模型的准确性和性能。

2. 可监督微调/Supervised Fine-tuning(SFT)

Step 1: 配置模型/ Setup Checkpoints

在./checkpoints_in里新建aquilachat-7b目录。将微调后的checkpoint,以及原始aquilachat-7b模型里的其余文件,包括config.json, mergex.txt, vocab.json, special_tokens_map.json放进去

Create a new directory named aquilachat-7b inside ./checkpoints_in. Place the fine-tuned checkpoint and all other files from the original aquilachat-7b model, including config.json, mergex.txt, vocab.json, and special_tokens_map.json, into this directory.

Step 2: 修改参数/ Modify Parameters

cd /examples/Aquila/Aquila-sft- 配置

hostfile文件, 参考这里 ; Configure thehostfilefile, refer to here - 配置

bmtrain_mgpu.sh文件, 将SCRIPT_FILE改成aquila_sft.py; configure thebmtrain_mgpu.shfile, changeSCRIPT_FILEtoaquila_sft.py - (可选) 在

Aquila-sft.yaml文件里更改参数 ; (optional) change parameters inAquila-sft.yaml

| 参数名 Parameter | 类型 Type | 描述 Description |

|---|---|---|

| batch_size | int | 每次迭代训练时,从数据集中抽取的样本数。一般来说,它越大,处理速度越快,但会占用更多的内存; The number of samples extracted from the dataset for each iteration during training. Generally, a larger batch size can speed up processing but may also consume more memory |

| gradient_accumulation_steps | int | 在更新模型权重之前,要对多个小批次进行梯度计算的次数。主要应用于GPU显存较小的情况下,可以使用小的batch_size,通过梯度累积达到与大batch_size相同的效果; The number of samples extracted from the dataset for each iteration during training. Generally, a larger batch size can speed up processing but may also consume more memoryimages |

| lr | float | 指控制模型更新参数时的步长或速率。学习率过高可能导致模型不收敛,而学习率过低则可能导致训练时间过长或者陷入局部最优解; The step size or rate at which the model updates its parameters during training. A high learning rate may cause the model not to converge, while a low learning rate may result in long training times or being stuck in a local optimum |

| warm_up | float | 初始学习率与原始学习率的比例; The ratio between the initial learning rate and the original learning rate |

| save_interval | int | 模型保存的间隔,即每训练多少个iteration保存一次模型。当训练时间较长时,保存间隔可以避免因突然中断或出现错误导致训练成果全部丢失; The interval at which the model is saved, i.e., how often the model is saved per epoch during training. When training takes a long time, saving intervals can prevent all training achievements from being lost due to sudden interruptions or errors. |

| enable_sft_conversations_dataset_v3 | bool | 数据处理方式; Data preprocessing method |

| enable_sft_dataset_dir | str | 可监督微调的数据集目录; Dataset directory of SFT dataset |

| enable_sft_dataset_file | str | 可监督微调的数据集文件名; Filename of SFT dataset |

Step 3: 启动可监督微调/Start SFT

bash dist_trigger_docker.sh hostfile Aquila-sft.yaml aquilachat-7b [实验名]

接下来会输出下列信息,注意NODES_NUM应该与节点数相等,LOGFILE是模型运行的日志文件;The following information will be output. Note that NODES_NUM should be equal to the number of nodes, and LOGFILE is the log file for the model run.

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-JarDpkc7-1686595867479)(…/img/info.jpg)]

成功训练之前能看到如下信息(具体参数可能不同); Before successful training, you may see the following information with parameters that may differ:

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-gSOBDi3e-1686595867480)(…/img/info2.jpg)]

AquilaChat 简介 / AquilaChat Overview

Aquila语言大模型在技术上继承了GPT-3、LLaMA等的架构设计优点,替换了一批更高效的底层算子实现、重新设计实现了中英双语的tokenizer,升级了BMTrain并行训练方法,在Aquila的训练过程中实现了比Magtron+DeepSpeed zero-2将近8倍的训练效率。Aquila语言大模型是在中英文高质量语料基础上从0开始训练的,通过数据质量的控制、多种训练的优化方法,实现在更小的数据集、更短的训练时间,获得比其它开源模型更优的性能。也是首个支持中英双语知识、支持商用许可协议、符合国内数据合规需要的大规模开源语言模型。

The Aquila language model inherits the architectural design advantages of GPT-3 and LLaMA, replacing a batch of more efficient underlying operator implementations and redesigning the tokenizer for Chinese-English bilingual support. It upgrades the BMTrain parallel training method, achieving nearly 8 times the training efficiency of Magtron+DeepSpeed ZeRO-2 in the training process of Aquila. The Aquila language model is trained from scratch on high-quality Chinese and English corpora. Through data quality control and various training optimization methods, it achieves better performance than other open-source models with smaller datasets and shorter training times. It is also the first large-scale open-source language model that supports Chinese-English-Knowledge, commercial licensing, and complies with domestic data regulations.

AquilaChat-7B是在Aquila-7B模型的基础上,进行SFT微调后的支持中英双语的对话式语言模型。AquilaChat-7B模型由智源研究院研发

AquilaChat-7B is a conversational language model that supports Chinese-English dialogue. It is based on the Aquila-7B model and fine-tuned using SFT. AquilaChat-7B model was developed by Beijing Academy of Artificial Intelligence.

我们的模型也同时支持Huggingface平台。

We also support Huggingface.

AquilaChat模型主要为了验证基础模型能力,您可以根据自己需要对模型进行使用,修改和商业化,但必须遵守所有国家的法律法规,并且对任何第三方使用者提供Aquila系列模型的来源以及Aquila系列模型协议的副本。

The AquilaChat model was primarily developed to verify the capabilities of the foundational model. You can use, modify, and commercialize the model according to your needs, but you must comply with all applicable laws and regulations in your country. Additionally, you must provide the source of the Aquila series models and a copy of the Aquila series model lincense to any third-party users.

Aquila 模型细节 / Aquila Model details

| 模型/Model | 状态/State | 能否商用/Commercial use? | 所用显卡/GPU |

|---|---|---|---|

| Aquila-7B | 已发布 | ✅ | Nvidia-A100 |

| AquilaChat-7B | 已发布 | ✅ | Nvidia-A100 |

| AquilaCode-7B-NV | 已发布 | ✅ | Nvidia-A100 |

| AquilaCode-7B-TS | 已发布 | ✅ | Tianshu-BI-V100 |

| Aquila-33B | 敬请期待 | ✅ | Nvidia-A100 |

| AquilaChat-33B | 敬请期待 | ✅ | Nvidia-A100 |

我们使用了一系列更高效的底层算子来辅助模型训练,其中包括参考flash-attention的方法并替换了一些中间计算,同时还使用了RMSNorm。在此基础上,我们应用了BMtrain技术进行轻量化的并行训练,该技术采用了数据并行、ZeRO(零冗余优化器)、优化器卸载、检查点和操作融合、通信-计算重叠等方法来优化模型训练过程。

Aquila模型所采用的tokenizer是由我们从头开始训练的,支持中英双语。我们在处理英文、中文以及代码数据时,采用了不同的分词器对一万个样本进行了抽取。随后,我们统计了每个样本的token数量,并将其记录在表格中。Aquila tokenizer与其他tokenizer的参数对比见下表:

We used a series of more efficient low-level operators to assist with model training, including methods referenced from flash-attention and replacing some intermediate calculations, as well as using RMSNorm. Building upon this foundation, we applied the BMtrain for lightweight parallel training, which utilizes methods such as data parallelism, ZeRO (zero redundancy optimizer), optimizer offloading, checkpoint and operation fusion, and communication-computation overlap to optimize the model training process.

The tokenizer used in the Aquila model was trained from scratch by us and supports both English and Chinese. We used different tokenizers to extract ten thousand data samples from English, Chinese, and code data respectively, obtained the count of tokens for each sample, and also included it in the table. The parameters of this tokenizer are compared to those of other tokenizers in the table below:

| 模型/Model | 词表大小/Vocab size | 说明/Note | 英文平均tokens量/Avg tokens(English) | 中文平均tokens量/Avg tokens(Chinesse) | 代码平均tokens量/Avg tokens(code) |

|---|---|---|---|---|---|

| GPT2 | 50527 | bpe | 1717 | 1764 | 2323 |

| LLaMA | 32000 | sp(bpe) | 1805 | 1257 | 1970 |

| Aquila | 100000 | bpe | 1575 | 477 | 1679 |

训练数据集/Training data

我们采用了一系列高质量中英文数据集来训练和微调我们的对话语言模型,并且在不断更新迭代。

We used a series of high-quality Chinese and English datasets to train and fine-tune our conversational language model, and continuously updated it through iterations.

我们额外支持了两种多模态的指令: 文图生成和图片编辑,数据集格式请参考这里

We have added support for two additional multimodal instructions: text-to-image generation and image editing. Please refer to the dataset format here.

证书/License

AquilaChat系列开源模型使用 智源Aquila系列模型许可协议, 原始代码基于Apache Licence 2.0。

AquilaChat open-source model is licensed under BAAI Aquila Model Licence Agreement. The source code is under Apache Licence 2.0.

文章出处登录后可见!