基本思想:一直想学rk3588的视频编解码,奈何没有设备,最近获得机会,利用空闲时间好好研究一番,正好手中的深度相机oak camera支持视频编码,逐想用软解编码和瑞芯微的mpp硬解码去走一波,本实验使用的poe-rj45接口和usb低电压接口测试

测试数据

硬件:rk3588s开发板

oak-d s2深度相机(usb接口)

技术:

rk3588s mpp硬解码

oak h264编码(最高帧率60fps)

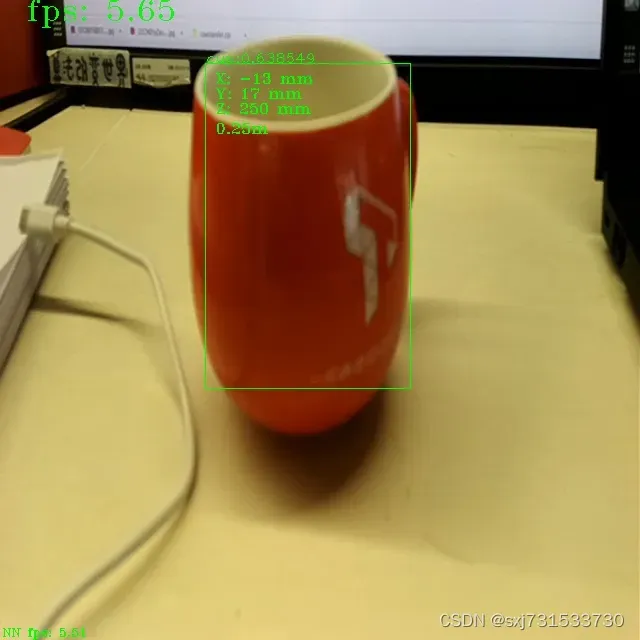

yolov7-tiny 单目标检测

硬件频率设置:

cpu 频率 408000

dmc频率 2112000000

npu频率 1000000000

目标检测准确的情况下,测试数据如下:

解码 总帧率 56-60fps

解码+640推理(15ms)总帧率 32fps

解码+416推理(7ms) 总帧率 47fps

解码+320推理(4ms) 总帧率 48-54fps

首先准备测试视频,截取20s的视频链接: https://pan.baidu.com/s/1_3wff-xizk4G1xBluTtsmQ?pwd=fmc9 提取码: fmc9

ubuntu@ubuntu:~/test_rk3588_mpp$ ffmpeg -ss 00:00:00 -i 1920x1080.mp4 -to 00:00:20 -c:v copy -c:a copy 1920x1080_min.mp4

预先转h264

ubuntu@ubuntu:~/test_rk3588_mpp$ ffmpeg -i 1920x1080_min.mp4 -codec copy -bsf: h264_mp4toannexb -f h264 1920x1080_min.h264一、进入系统

ubuntu@ubuntu:~$ ssh firefly@192.168.71.30

The authenticity of host '192.168.71.30 (192.168.71.30)' can't be established.

ECDSA key fingerprint is SHA256:EABsn4kWnfnqvYNreTaJf6QPvgKnvJPYSFBDsQglD8k.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.71.30' (ECDSA) to the list of known hosts.

firefly@192.168.71.30's password:

_____ _ __ _

| ___(_)_ __ ___ / _| |_ _

| |_ | | '__/ _ \ |_| | | | |

| _| | | | | __/ _| | |_| |

|_| |_|_| \___|_| |_|\__, |

|___/

Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.10.110-rt53 aarch64)

* Documentation: http://wiki.t-firefly.com

* Management: http://www.t-firefly.com

System information as of Sun Jun 26 04:18:31 UTC 2022

System load: 0.86 0.93 0.67 Up time: 18:42 hours Local users: 2

Memory usage: 34 % of 3464MB IP: 192.168.71.30

Usage of /: 1% of 23G

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

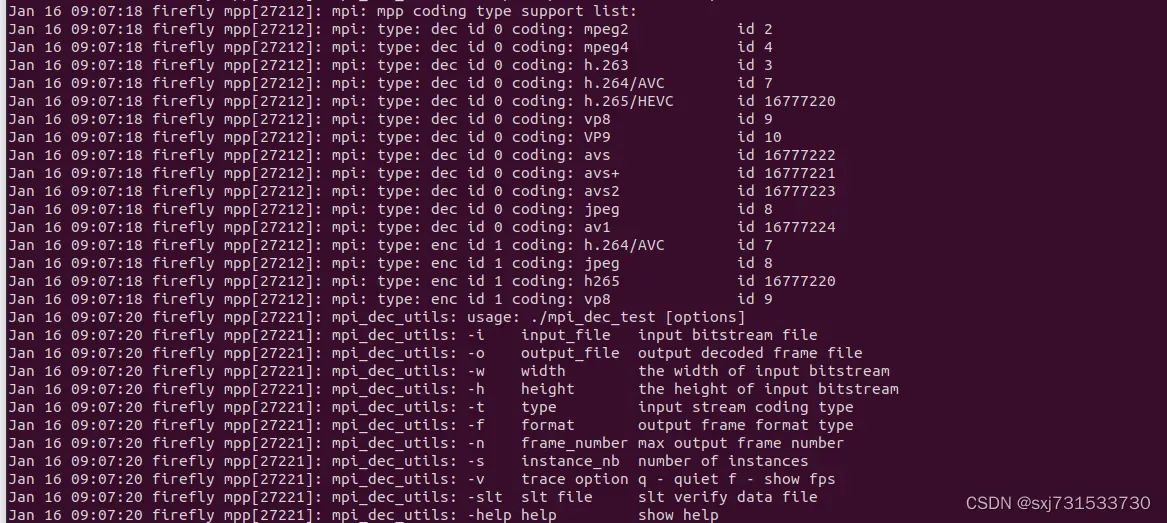

1)下载源码和测试mpp

测试命令

firefly@firefly:~$ git clone https://github.com/rockchip-linux/mpp.git

firefly@firefly:~$ cd mpp

firefly@firefly:~/mpp$ mkidr build

firefly@firefly:~/mpp/build$ cmake ..&make&sudo make install

firefly@firefly:~/mpp/build/test$ sudo ./mpi_dec_test -t 7 -i 1920x1080_min.h264 -f 4 -h 1080 -w 1920 -o 1920x1080_min.nv12

firefly@firefly:~/mpp/build/test$ ffplay -f rawvideo -video_size 1920*1080 -pixel_format nv12 1920x1080_min.nv12

可以播放

监控日志

firefly@firefly:~$ watch -n 1 tail -f /var/log/syslog

....

Every 1.0s: tail -f /var/log/syslog firefly: Mon Jan 16 07:43:58 2023

Jan 16 07:43:56 firefly mpp[12221]: mpi_dec_test: 0x5587aa7160 decode get frame 1896

Jan 16 07:43:57 firefly mpp[12221]: mpi_dec_test: 0x5587aa7160 decode get frame 1897

Jan 16 07:43:57 firefly mpp[12221]: mpi_dec_test: 0x5587aa7160 decode get frame 1898

Jan 16 07:43:57 firefly mpp[12221]: mpi_dec_test: 0x5587aa7160 decode get frame 18992)下载源码和测试(摘自官方测试)ff_media

# 根据操作系统获取源码

git clone https://gitlab.com/firefly-linux/ff_media -b ubuntu20.04

# 安装所需环境

apt install gcc g++ make cmake

apt install libasound2-dev

apt install libopencv-dev

# 编译

cd ff_media

mkdir build; cd build

cmake ../

make

# 运行 demo

## 直接运行 demo 可以查看帮助信息

INFO: usage: Usage: ./demo <Input source> [Options]

Options:

-i, --input Input image size

-o, --output Output image size, default same as input

-a, --inputfmt Input image format, default MJPEG

-b, --outputfmt Output image format, default NV12

-c, --count Instance count, default 1

-d, --drmdisplay Drm display, set display plane, set 0 to auto find plane, default disabled

-e, --encodetype Encode encode, set encode type, default disabled

-f, --file Enable save dec output data to file, set filename, default disabled

-p, --port Enable rtsp stream, set push port, depend on encode enabled, default disabled

-m, --enmux Enable save encode data to mp4 file, depend on encode enabled, default disabled

-r, --rotate Image rotation degree, default 0

0: none

1: vertical mirror

2: horizontal mirror

90: 90 degree

180: 180 degree

270: 270 degree

## 示范:输入是分辨率为 1080p 的 rtsp 摄像头,把解码图像缩放为 720p 输出到显示器上。

./demo 1920x1080.h264 -o 1280x720 -d 0

#打开usb相机

./demo /dev/video12 -i 640x480 -o 640x480 -d 0 测试没问题,速度很快,下一步需要结合官方提供的demo和例子,修改自己可以用的代码

二、先用笔记本测试ffmpeg软解码h264

1)测试软解码在54fps

cmake_minimum_required(VERSION 3.16)

project(untitled1)

find_package(OpenCV REQUIRED)

set(CMAKE_CXX_STANDARD 14)

add_executable(untitled1 main.cpp)

target_link_libraries(untitled1

${OpenCV_LIBS}

-lavformat -lavcodec -lswscale -lavutil -lz

)测试代码

#include <stdio.h>

#include <string>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

extern "C"

{

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libswscale/swscale.h"

#include "libavutil/avutil.h"

#include "libavutil/imgutils.h"

};

#include <chrono>

#include <iomanip>

using namespace std;

using namespace chrono;

int main(int argc, char *argv[]) {

const char *path = "/home/ubuntu/test_rk3588_mpp/1920x1080.h264";

// AVDictionary *optional=NULL;

// av_dict_set(&optional,"stimeout","6000000",0);

// av_dict_set(&optional,"rstp_transport","tcp",0);

// av_dict_set(&optional,"buffer_size","1024000",0);

// av_dict_set(&optional,"max_delay","500000",0);

AVFormatContext *pFormat = NULL;

int ret = avformat_open_input(&pFormat, path, NULL, NULL);

if (ret < 0) {

perror("avformat_open_input");

avformat_free_context(pFormat);

return -1;

}

// av_dict_free(&optional);

printf("avformat_open_input successfully\n");

ret = avformat_find_stream_info(pFormat, NULL);

if (ret < 0) {

perror("avformat_find_stream_info\n");

return -1;

}

printf("avformat_find_stream_info successfully\n");

int time = pFormat->duration;

int mbittime = (time / 100000) / 60;

int mminttime = (time / 100000) % 60;

printf("video time: %d'm %d's\n", mbittime, mminttime);

av_dump_format(pFormat, 0, path, 0);

int videoindex = -1;

for (int i = 0; i < pFormat->nb_streams; i++) {

if (pFormat->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

videoindex = i;

break;

}

}

if (videoindex == -1) {

printf("don't find video stream\n");

return -1;

}

AVCodecParameters *codecParameters = pFormat->streams[videoindex]->codecpar;

printf("video width %d\n", codecParameters->width);

printf("video height %d\n", codecParameters->height);

AVCodec *pCodec = avcodec_find_decoder(codecParameters->codec_id);

AVCodecContext *pCodecCtx = avcodec_alloc_context3(pCodec);

ret = avcodec_open2(pCodecCtx, pCodec, NULL);

if (ret < 0) {//打开解码器

printf("Could not open codec.\n");

return -1;

}

AVFrame *picture = av_frame_alloc();

picture->width = codecParameters->width;

picture->height = codecParameters->height;

picture->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(picture, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

printf("picture->linesize[0] %d\n", picture->linesize[0]);

AVFrame *pFrame = av_frame_alloc();

pFrame->width = codecParameters->width;

pFrame->height = codecParameters->height;

pFrame->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(pFrame, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

AVFrame *pFrameRGB = av_frame_alloc();

pFrameRGB->width = codecParameters->width;

pFrameRGB->height = codecParameters->height;

pFrameRGB->format = AV_PIX_FMT_RGB24;

ret = av_frame_get_buffer(pFrameRGB, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

int picture_size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, codecParameters->width, codecParameters->height,

1);//计算这个格式的图片,需要多少字节来存储

uint8_t *out_buff = (uint8_t *) av_malloc(picture_size * sizeof(uint8_t));

av_image_fill_arrays(picture->data, picture->linesize, out_buff, AV_PIX_FMT_YUV420P, codecParameters->width,

codecParameters->height, 1);

//这个函数 是缓存转换格式,可以不用 以为上面已经设置了AV_PIX_FMT_YUV420P

SwsContext *img_convert_ctx = sws_getContext(codecParameters->width, codecParameters->height, AV_PIX_FMT_YUV420P,

codecParameters->width, codecParameters->height, AV_PIX_FMT_RGB24, 4,

NULL, NULL, NULL);

AVPacket *packet = (AVPacket *) av_malloc(sizeof(AVPacket));

auto startTime = steady_clock::now();

int counter = 0;

float fps = 0;

while (av_read_frame(pFormat, packet) >= 0) {

if (packet->stream_index == videoindex) {

ret = avcodec_send_packet(pCodecCtx, packet);

if (ret < 0) {

printf("avcodec_send_packet error\n");

continue;

}

av_packet_unref(packet);

int got_picture = avcodec_receive_frame(pCodecCtx, pFrame);

if (got_picture < 0) {

printf("avcodec_receive_frame error\n");

continue;

}

sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,

codecParameters->height,

pFrameRGB->data, pFrameRGB->linesize);

cv::Mat mRGB(cv::Size(codecParameters->width, codecParameters->height), CV_8UC3);

mRGB.data = (unsigned char *) pFrameRGB->data[0];

cv::Mat mBGR;

cv::cvtColor(mRGB, mBGR, cv::COLOR_RGB2BGR);

counter++;

auto currentTime = steady_clock::now();

auto elapsed = duration_cast<duration<float>>(currentTime - startTime);

if (elapsed > seconds(1)) {

fps = counter / elapsed.count();

counter = 0;

startTime = currentTime;

}

std::stringstream fpsStr;

fpsStr << "NN fps: " << std::fixed << std::setprecision(2) << fps;

cv::putText(mBGR, fpsStr.str(), cv::Point(2, 20), cv::FONT_HERSHEY_TRIPLEX, 0.4, cv::Scalar(0,255,0));

cv::imshow("demo", mBGR);

cv::waitKey(1);

}

}

av_frame_free(&picture);

av_frame_free(&pFrame);

av_frame_free(&pFrameRGB);

avformat_free_context(pFormat);

return 0;

}测试结果

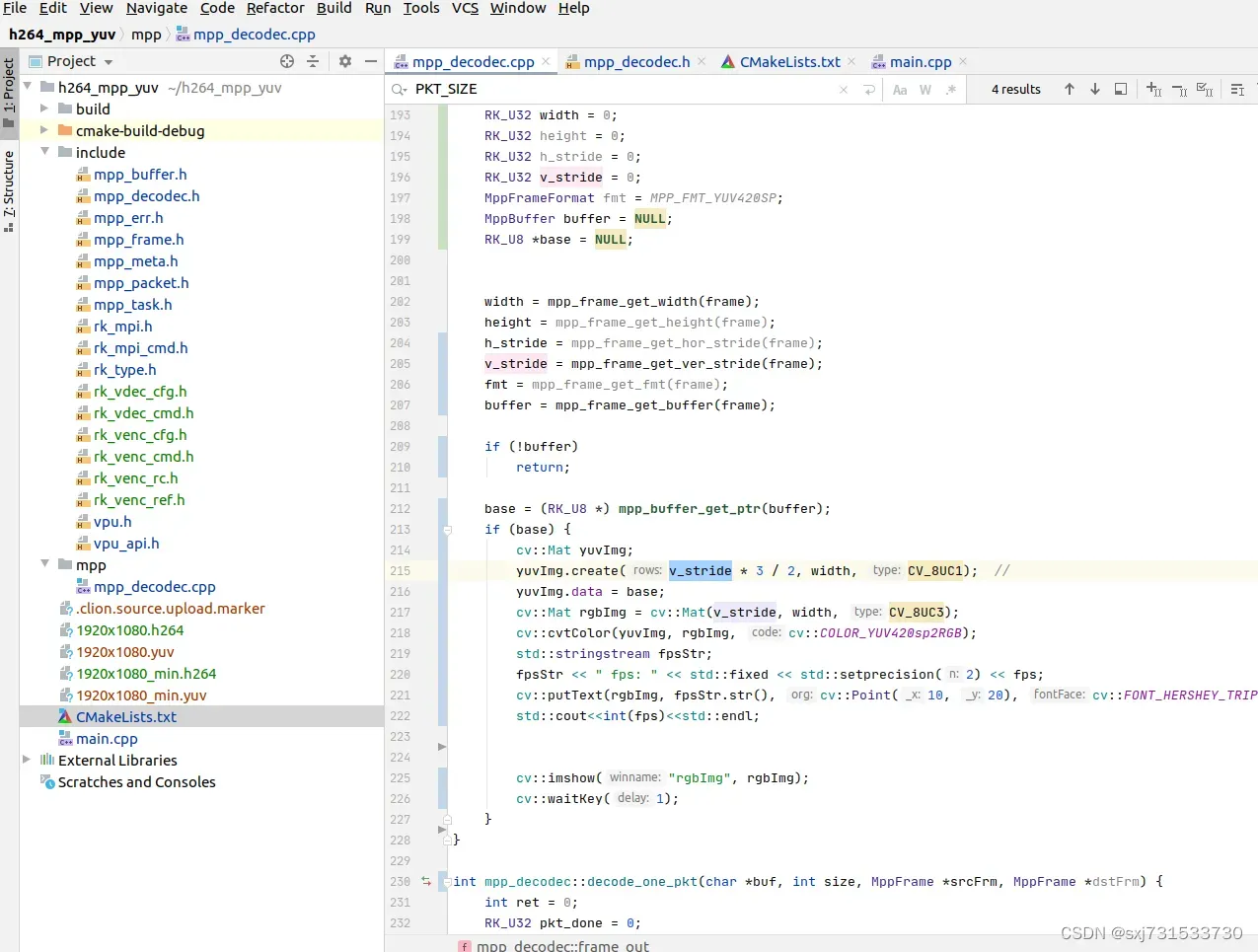

2)修改第一版使用硬件解码h264然后显示,测试帧率

https://github.com/sxj731533730/h264_mpp_yuv

在rk3588 硬件测试mpp解码速度在200fps

测试结果

三、先以ffmpeg软解码去解析oak的视频帧,测试代码,以yolov7-tiny模型为例子

cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(depthai)

set(CMAKE_CXX_STANDARD 11)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/utility)

#链接Opencv库

find_package(depthai CONFIG REQUIRED)

add_executable(depthai main.cpp include/utility/utility.cpp)

target_link_libraries(depthai ${OpenCV_LIBS} depthai::opencv -lavformat -lavcodec -lswscale -lavutil -lz)main.cpp

#include <stdio.h>

#include <string>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

extern "C"

{

#include <libavformat/avformat.h>

#include <libavcodec/avcodec.h>

#include <libavutil/imgutils.h>

#include <libswscale/swscale.h>

}

#include "utility.hpp"

#include <vector>

#include "depthai/depthai.hpp"

static std::atomic<bool> newConfig{false};

using namespace std;

using namespace std::chrono;

using namespace cv;

typedef struct {

int width;

int height;

} YoloSize;

typedef struct {

std::string name;

int stride;

std::vector<YoloSize> anchors;

} YoloLayerData;

class BoxInfo {

public:

int x1, y1, x2, y2, label, id;

float score;

};

static inline float sigmoid(float x) {

return static_cast<float>(1.f / (1.f + exp(-x)));

}

double GetIOU(cv::Rect_<float> bb_test, cv::Rect_<float> bb_gt) {

float in = (bb_test & bb_gt).area();

float un = bb_test.area() + bb_gt.area() - in;

if (un < DBL_EPSILON)

return 0;

return (double) (in / un);

}

std::vector<BoxInfo> decode_infer(vector<float> data_pt, int stride, int net_size, int num_classes,

const std::vector<YoloSize> &anchors, float threshold, int idx) {

const int s[3] = {20, 40, 80};

std::vector<BoxInfo> result;

int batchs, channels, height, width, pred_item;

batchs = 1;

channels = 3;

height = s[idx];

width = s[idx];

pred_item = 6;

float data_ptr[batchs * channels * height * width * pred_item];

//std::cout << batchs * channels * height * width * pred_item << std::endl << data_pt.size() << std::endl;

for (int i = 0; i < data_pt.size(); i++) {

data_ptr[i] = data_pt[i];

}

for (int bi = 0; bi < batchs; bi++) {

auto batch_ptr = data_ptr + bi * (channels * height * width * pred_item);

for (int ci = 0; ci < channels; ci++) {

auto channel_ptr = batch_ptr + ci * (height * width * pred_item);

for (int hi = 0; hi < height; hi++) {

auto height_ptr = channel_ptr + hi * (width * pred_item);

for (int wi = 0; wi < width; wi++) {

auto width_ptr = height_ptr + wi * pred_item;

auto cls_ptr = width_ptr + 5;

auto confidence = sigmoid(width_ptr[4]);

for (int cls_id = 0; cls_id < num_classes; cls_id++) {

float score = sigmoid(cls_ptr[cls_id]) * confidence;

if (score > threshold) {

float cx = (sigmoid(width_ptr[0]) * 2.f - 0.5f + wi) * (float) stride;

float cy = (sigmoid(width_ptr[1]) * 2.f - 0.5f + hi) * (float) stride;

float w = pow(sigmoid(width_ptr[2]) * 2.f, 2) * anchors[ci].width;

float h = pow(sigmoid(width_ptr[3]) * 2.f, 2) * anchors[ci].height;

BoxInfo box;

box.x1 = std::max(0, std::min(net_size, int((cx - w / 2.f))));

box.y1 = std::max(0, std::min(net_size, int((cy - h / 2.f))));

box.x2 = std::max(0, std::min(net_size, int((cx + w / 2.f))));

box.y2 = std::max(0, std::min(net_size, int((cy + h / 2.f))));

box.score = score;

box.label = cls_id;

result.push_back(box);

}

}

}

}

}

}

return result;

}

void nms(std::vector<BoxInfo> &input_boxes, float NMS_THRESH) {

std::sort(input_boxes.begin(), input_boxes.end(), [](BoxInfo a, BoxInfo b) { return a.score > b.score; });

std::vector<float> vArea(input_boxes.size());

for (int i = 0; i < int(input_boxes.size()); ++i) {

vArea[i] = (input_boxes.at(i).x2 - input_boxes.at(i).x1 + 1)

* (input_boxes.at(i).y2 - input_boxes.at(i).y1 + 1);

}

for (int i = 0; i < int(input_boxes.size()); ++i) {

for (int j = i + 1; j < int(input_boxes.size());) {

float xx1 = std::max(input_boxes[i].x1, input_boxes[j].x1);

float yy1 = std::max(input_boxes[i].y1, input_boxes[j].y1);

float xx2 = std::min(input_boxes[i].x2, input_boxes[j].x2);

float yy2 = std::min(input_boxes[i].y2, input_boxes[j].y2);

float w = std::max(float(0), xx2 - xx1 + 1);

float h = std::max(float(0), yy2 - yy1 + 1);

float inter = w * h;

float ovr = inter / (vArea[i] + vArea[j] - inter);

if (ovr >= NMS_THRESH) {

input_boxes.erase(input_boxes.begin() + j);

vArea.erase(vArea.begin() + j);

} else {

j++;

}

}

}

}

void scale_coords(std::vector<BoxInfo> &boxes, int w_from, int h_from, int w_to, int h_to) {

float w_ratio = float(w_to) / float(w_from);

float h_ratio = float(h_to) / float(h_from);

for (auto &box: boxes) {

box.x1 *= w_ratio;

box.x2 *= w_ratio;

box.y1 *= h_ratio;

box.y2 *= h_ratio;

}

return;

}

cv::Mat draw_box(cv::Mat &cv_mat, std::vector<BoxInfo> &boxes, const std::vector<std::string> &labels,

unsigned char colors[][3]) {

for (auto box : boxes) {

int width = box.x2 - box.x1;

int height = box.y2 - box.y1;

cv::Point p = cv::Point(box.x1, box.y1);

cv::Rect rect = cv::Rect(box.x1, box.y1, width, height);

cv::rectangle(cv_mat, rect, cv::Scalar(colors[box.label][0], colors[box.label][1], colors[box.label][2]));

string text = labels[box.label] + ":" + std::to_string(box.score);

cv::putText(cv_mat, text, p, cv::FONT_HERSHEY_PLAIN, 1,

cv::Scalar(colors[box.label][0], colors[box.label][1], colors[box.label][2]));

}

return cv_mat;

}

int main(int argc, char **argv) {

std::vector<YoloLayerData> yolov7_layers{

{"324", 32, {{116, 90}, {156, 198}, {373, 326}}},

{"304", 16, {{30, 61}, {62, 45}, {59, 119}}},

{"output", 8, {{12, 16}, {16, 30}, {33, 23}}},

};

vector<float> origin_rect_cof;

std::vector<YoloLayerData> &layers = yolov7_layers;

float threshold = 0.4;

float nms_threshold = 0.5;

int i = 0;

std::vector<std::string> labels = {

"cup"

};

unsigned char colors[][3] = {

{0, 255, 0}

};

float target_width = 640;

float target_height = 640;

dai::Pipeline pipeline;

auto camRgb = pipeline.create<dai::node::ColorCamera>();

auto rgbOut = pipeline.create<dai::node::XLinkOut>();

rgbOut->setStreamName("rgbOut");

//camRgb->video.link(rgbOut->input);

camRgb->setPreviewSize(640, 640);

camRgb->setBoardSocket(dai::CameraBoardSocket::RGB);

camRgb->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

camRgb->setInterleaved(false);

camRgb->setColorOrder(dai::ColorCameraProperties::ColorOrder::RGB);

//camRgb->setFps(20);

camRgb->setPreviewKeepAspectRatio(false);

auto monoLeft = pipeline.create<dai::node::MonoCamera>();

auto monoRight = pipeline.create<dai::node::MonoCamera>();

auto stereo = pipeline.create<dai::node::StereoDepth>();

auto spatialDataCalculator = pipeline.create<dai::node::SpatialLocationCalculator>();

auto Encoder = pipeline.create<dai::node::VideoEncoder>();

Encoder->setDefaultProfilePreset(camRgb->getVideoSize(), camRgb->getFps(),

dai::VideoEncoderProperties::Profile::H265_MAIN);

//camRgb->video.link(Encoder->input);

//定义

//auto cam = pipeline.create<dai::node::XLinkIn>();

//camRgb->setStreamName("inFrame");

auto net = pipeline.create<dai::node::NeuralNetwork>();

dai::OpenVINO::Blob blob("../model_300_300/best_cup.blob");

net->setBlob(blob);

net->input.setBlocking(false);

//基本熟练明白oak的函数使用了

camRgb->preview.link(net->input);

camRgb->setFps(20);

//camRgb->setVideoSize(1920, 1080);

auto xoutDepth = pipeline.create<dai::node::XLinkOut>();

auto xoutSpatialData = pipeline.create<dai::node::XLinkOut>();

auto xinSpatialCalcConfig = pipeline.create<dai::node::XLinkIn>();

xoutDepth->setStreamName("depth");

xoutSpatialData->setStreamName("spatialData");

xinSpatialCalcConfig->setStreamName("spatialCalcConfig");

monoLeft->setResolution(dai::MonoCameraProperties::SensorResolution::THE_400_P);

monoLeft->setBoardSocket(dai::CameraBoardSocket::LEFT);

monoRight->setResolution(dai::MonoCameraProperties::SensorResolution::THE_400_P);

monoRight->setBoardSocket(dai::CameraBoardSocket::RIGHT);

stereo->setDefaultProfilePreset(dai::node::StereoDepth::PresetMode::HIGH_ACCURACY);

stereo->setLeftRightCheck(true);

stereo->setDepthAlign(dai::CameraBoardSocket::RGB);

stereo->setExtendedDisparity(true);

// LR-check is required for depth alignment

dai::Point2f topLeft(0.4f, 0.4f);

dai::Point2f bottomRight(0.6f, 0.6f);

dai::SpatialLocationCalculatorConfigData config;

config.depthThresholds.lowerThreshold = 100;

config.depthThresholds.upperThreshold = 10000;

config.roi = dai::Rect(topLeft, bottomRight);

spatialDataCalculator->inputConfig.setWaitForMessage(false);

spatialDataCalculator->initialConfig.addROI(config);

// Linking

monoLeft->out.link(stereo->left);

monoRight->out.link(stereo->right);

spatialDataCalculator->passthroughDepth.link(xoutDepth->input);

stereo->depth.link(spatialDataCalculator->inputDepth);

spatialDataCalculator->out.link(xoutSpatialData->input);

xinSpatialCalcConfig->out.link(spatialDataCalculator->inputConfig);

//定义输出

auto xlinkoutpreviewOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutpreviewOut->setStreamName("out264");

Encoder->bitstream.link(xlinkoutpreviewOut->input);

//定义输出

auto xlinkParserOut = pipeline.create<dai::node::XLinkOut>();

xlinkParserOut->setStreamName("parseOut");

auto xlinkoutOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutOut->setStreamName("out");

auto xlinkoutpassthroughOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutpassthroughOut->setStreamName("passthrough");

net->out.link(xlinkParserOut->input);

net->passthrough.link(xlinkoutpassthroughOut->input);

auto manip = pipeline.create<dai::node::ImageManip>();

manip->initialConfig.setResize(target_width, target_height);

manip->initialConfig.setFrameType(dai::ImgFrame::Type::NV12);

camRgb->preview.link(manip->inputImage);

manip->out.link(Encoder->input);

//结构推送相机

dai::Device device(pipeline,true);

//取帧显示

// auto inqueue = device.getInputQueue("inFrame");//maxsize 代表缓冲数据

auto detqueue = device.getOutputQueue("parseOut", 8, false);//maxsize 代表缓冲数据

auto passthrough = device.getOutputQueue("passthrough", 8, false);//maxsize 代表缓冲数据

auto depthQueue = device.getOutputQueue("depth", 8, false);

auto spatialCalcQueue = device.getOutputQueue("spatialData", 8, false);

auto spatialCalcConfigInQueue = device.getInputQueue("spatialCalcConfig");

auto videoqueue = device.getOutputQueue("out264", camRgb->getFps(), false);

bool printOutputLayersOnce = true;

auto color = cv::Scalar(0, 255, 0);

auto startTime = steady_clock::now();

int counter = 0;

float fps = 0;

int width = target_width;

int height = target_height;

AVCodec *pCodec = avcodec_find_decoder(AV_CODEC_ID_H265);

AVCodecContext *pCodecCtx = avcodec_alloc_context3(pCodec);

int ret = avcodec_open2(pCodecCtx, pCodec, NULL);

if (ret < 0) {//打开解码器

char buf[1024] = {0};

av_strerror(ret, buf, sizeof(buf) - 1);

cerr << buf << endl;

return -1;

}

AVFrame *picture = av_frame_alloc();

picture->width = width;

picture->height = height;

picture->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(picture, 1);

if (ret < 0) {

char buf[1024] = {0};

av_strerror(ret, buf, sizeof(buf) - 1);

cerr << buf << endl;

return -1;

}

AVFrame *pFrame = av_frame_alloc();

pFrame->width = width;

pFrame->height = height;

pFrame->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(pFrame, 1);

if (ret < 0) {

char buf[1024] = {0};

av_strerror(ret, buf, sizeof(buf) - 1);

cerr << buf << endl;

return -1;

}

AVFrame *pFrameRGB = av_frame_alloc();

pFrameRGB->width = width;

pFrameRGB->height = height;

pFrameRGB->format = AV_PIX_FMT_RGB24;

ret = av_frame_get_buffer(pFrameRGB, 1);

if (ret < 0) {

char buf[1024] = {0};

av_strerror(ret, buf, sizeof(buf) - 1);

cerr << buf << endl;

return -1;

}

int picture_size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height,

1);//计算这个格式的图片,需要多少字节来存储

uint8_t *out_buff = (uint8_t *) av_malloc(picture_size * sizeof(uint8_t));

av_image_fill_arrays(picture->data, picture->linesize, out_buff, AV_PIX_FMT_YUV420P, width,

height, 1);

//这个函数 是缓存转换格式,可以不用 以为上面已经设置了AV_PIX_FMT_YUV420P

SwsContext *img_convert_ctx = sws_getContext(width, height, AV_PIX_FMT_YUV420P,

width, height, AV_PIX_FMT_RGB24, 4,

NULL, NULL, NULL);

AVPacket *packet = av_packet_alloc();

// auto startTime = steady_clock::now();

// int counter = 0;

// float fps = 0;

while (true) {

auto inDepth = depthQueue->get<dai::ImgFrame>();

//auto ImgFrame = videoqueue->get<dai::ImgFrame>();

// auto ImgFrame = passthrough->get<dai::ImgFrame>();

//auto frame = ImgFrame->getCvFrame();

//printf("h264\n");

//videoFile.write((char *) (h265Packet->getData().data()), h265Packet->getData().size());

auto h264Packet = videoqueue->get<dai::ImgFrame>();

packet->data = (uint8_t *) h264Packet->getData().data(); //这里填入一个指向完整H264数据帧的指针

packet->size = h264Packet->getData().size(); //这个填入H265 数据帧的大小

packet->stream_index = 0;

cv::Mat frame;

ret = avcodec_send_packet(pCodecCtx, packet);

//printf("avcodec_send_packet ret=%d \n", ret);

if (ret < 0) {

char buf[1024] = {0};

av_strerror(ret, buf, sizeof(buf) - 1);

cerr << buf << endl;

continue;

}

av_packet_unref(packet);

while (ret >= 0) {

ret = avcodec_receive_frame(pCodecCtx, pFrame);

//printf("avcodec_receive_frame ret=%d \n", ret);

av_frame_is_writable(pFrame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF || ret < 0) {

// if (ret < 0) {

// char buf[1024] = {0};

// av_strerror(ret, buf, sizeof(buf) - 1);

// cerr << buf << endl;

// continue;

// }

break;

}

sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,

height,

pFrameRGB->data, pFrameRGB->linesize);

cv::Mat mRGB(cv::Size(width, height), CV_8UC3);

mRGB.data = (unsigned char *) pFrameRGB->data[0];

cv::Mat mBGR;

cv::cvtColor(mRGB, mBGR, cv::COLOR_RGB2BGR);

frame = mBGR;

}

if(frame.empty()){

continue;

}

target_height = frame.rows * 1.0;

target_width = frame.cols * 1.0;

cv::Mat depthFrameColor;

cv::Mat depthFrame = inDepth->getFrame(); // depthFrame values are in millimeters

cv::normalize(depthFrame, depthFrameColor, 255, 0, cv::NORM_INF, CV_8UC1);

cv::equalizeHist(depthFrameColor, depthFrameColor);

cv::applyColorMap(depthFrameColor, depthFrameColor, cv::COLORMAP_HOT);

auto inNN = detqueue->get<dai::NNData>();

if (printOutputLayersOnce && inNN) {

std::cout << "Output layer names: ";

for (const auto &ten : inNN->getAllLayerNames()) {

std::cout << ten << ", ";

}

printOutputLayersOnce = false;

}

std::vector<BoxInfo> result;

std::vector<BoxInfo> boxes;

auto output = inNN->getLayerFp16(inNN->getAllLayerNames()[2]);

boxes = decode_infer(output, layers[2].stride, 640, labels.size(), layers[2].anchors, threshold, 2);

result.insert(result.begin(), boxes.begin(), boxes.end());

auto output_534 = inNN->getLayerFp16(inNN->getAllLayerNames()[0]);

boxes = decode_infer(output_534, layers[1].stride, 640, labels.size(), layers[1].anchors, threshold, 1);

result.insert(result.begin(), boxes.begin(), boxes.end());

auto output_554 = inNN->getLayerFp16(inNN->getAllLayerNames()[1]);

boxes = decode_infer(output_554, layers[0].stride, 640, labels.size(), layers[0].anchors, threshold, 0);

result.insert(result.begin(), boxes.begin(), boxes.end());

nms(result, nms_threshold);

scale_coords(result, 640, 640, frame.cols, frame.rows);

cv::Mat frame_show = draw_box(frame, result, labels, colors);

counter++;

auto currentTime = steady_clock::now();

auto elapsed = duration_cast<duration<float>>(currentTime - startTime);

if (elapsed > seconds(1)) {

fps = counter / elapsed.count();

counter = 0;

startTime = currentTime;

}

std::stringstream fpsStr;

fpsStr << "NN fps: " << std::fixed << std::setprecision(2) << fps;

printf("fps %f\n", fps);

cv::putText(frame, fpsStr.str(), cv::Point(2, frame_show.rows - 4), cv::FONT_HERSHEY_TRIPLEX, 0.4,

cv::Scalar(0, 255, 0));

for (auto &item:result) {

topLeft.x = item.x1 * depthFrameColor.cols / target_width / depthFrameColor.cols;

topLeft.y = item.y1 * depthFrameColor.rows / target_height / depthFrame.rows;

bottomRight.x = (item.x2 * depthFrameColor.cols / target_width) / depthFrameColor.cols;

bottomRight.y = (item.y2 * depthFrameColor.rows / target_height) / depthFrameColor.rows;

//std::cout<<topLeft.x<<" "<<topLeft.y<<" "<<bottomRight.x<<" "<<bottomRight.y<<" "<<std::endl;

config.roi = dai::Rect(topLeft, bottomRight);

dai::SpatialLocationCalculatorConfig cfg;

cfg.addROI(config);

spatialCalcConfigInQueue->send(cfg);

auto spatialData = spatialCalcQueue->get<dai::SpatialLocationCalculatorData>()->getSpatialLocations();

for (auto depthData : spatialData) {

auto roi = depthData.config.roi;

roi = roi.denormalize(depthFrameColor.cols, depthFrameColor.rows);

auto xmin = (int) roi.topLeft().x;

auto ymin = (int) roi.topLeft().y;

auto xmax = (int) roi.bottomRight().x;

auto ymax = (int) roi.bottomRight().y;

auto depthMin = depthData.depthMin;

auto depthMax = depthData.depthMax;

auto coords = depthData.spatialCoordinates;

auto distance = std::sqrt(coords.x * coords.x + coords.y * coords.y + coords.z * coords.z);

auto fontType = cv::FONT_HERSHEY_TRIPLEX;

std::stringstream depthDistance;

depthDistance.precision(2);

depthDistance << fixed << static_cast<float>(distance / 1000.0f) << "m";

cv::putText(depthFrameColor, depthDistance.str(), cv::Point(item.x1 + 10, item.y1 + 70), fontType, 0.5,

color);

std::stringstream rgbDistance;

rgbDistance.precision(2);

rgbDistance << fixed << static_cast<float>(distance / 1000.0f) << "m";

cv::putText(frame, rgbDistance.str(), cv::Point(item.x1 + 10, item.y1 + 70), fontType, 0.5, color);

cv::rectangle(frame, cv::Rect(cv::Point(item.x1, item.y1), cv::Point(item.x2, item.y2)), color,

cv::FONT_HERSHEY_SIMPLEX);

std::stringstream rgb_depthX, depthX;

rgb_depthX << "X: " << (int) depthData.spatialCoordinates.x << " mm";

cv::putText(frame, rgb_depthX.str(), cv::Point(item.x1 + 10, item.y1 + 20), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

std::stringstream rgb_depthY, depthY;

rgb_depthY << "Y: " << (int) depthData.spatialCoordinates.y << " mm";

cv::putText(frame, rgb_depthY.str(), cv::Point(item.x1 + 10, item.y1 + 35), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

std::stringstream rgb_depthZ, depthZ;

rgb_depthZ << "Z: " << (int) depthData.spatialCoordinates.z << " mm";

cv::putText(frame, rgb_depthZ.str(), cv::Point(item.x1 + 10, item.y1 + 50), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

cv::rectangle(depthFrameColor, cv::Rect(cv::Point(xmin, ymin), cv::Point(xmax, ymax)), color,

cv::FONT_HERSHEY_SIMPLEX);

depthX << "X: " << (int) depthData.spatialCoordinates.x << " mm";

cv::putText(depthFrameColor, depthX.str(), cv::Point(xmin + 10, ymin + 20), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

depthY << "Y: " << (int) depthData.spatialCoordinates.y << " mm";

cv::putText(depthFrameColor, depthY.str(), cv::Point(xmin + 10, ymin + 35), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

depthZ << "Z: " << (int) depthData.spatialCoordinates.z << " mm";

cv::putText(depthFrameColor, depthZ.str(), cv::Point(xmin + 10, ymin + 50), cv::FONT_HERSHEY_TRIPLEX,

0.5, color);

}

// Show the frame

}

//cv::imshow("depth", depthFrameColor);

//cv::imshow("demo", frame_show);

//int key = cv::waitKey(1);

//if (key == 'q' || key == 'Q') return 0;

}

return 0;

}这里使用rk3588和oak相结合,存在两个问题,多个usb电流都在1a内,只有一个type-c转usb的电流在2a内,oak的基础电流要求900ma,峰值电流要求在1.5a左右,这就限制了oak的目标检测和推理数据传输,所以需要使用编码方式压缩数据量传输,即使使用usb的线进行数据传输,这个实验使用usb3.0的接口,但是由于电流无法满足要求,只能让oak限制使用2.0的速率dai::Device device(pipeline,true);使用设置帧率20fps进行目标检测,帧率过高容易导致失真,且使用压缩比h264还高效的1/2进行压缩数据量进行传输,在rk3588测试数据

/home/firefly/Downloads/cmake-build-debug/depthai

[2023-02-03 00:38:03.204] [warning] VideoEncoder setDefaultProfilePreset: passing 'width'/ 'height' is deprecated. The size is auto-determined from first frame

[194430101139FA1200] [7.1.1] [1.595] [NeuralNetwork(7)] [warning] Network compiled for 4 shaves, maximum available 12, compiling for 6 shaves likely will yield in better performance

[swscaler @ 0x5592956f50] No accelerated colorspace conversion found from yuv420p to rgb24.

[hevc_rkmpp @ 0x5593179e90] Decoder noticed an info change (640x640), format=0

rga_api version 1.8.1_[4]

Output layer names: 304, 324, output, fps 0.989732

fps 0.989732

fps 0.989732

fps 2.422750

fps 2.422750

fps 2.422750

fps 2.422750pc测试数据 11th Gen Intel® Core™ i5-11260H @ 2.60GHz × 12 Ubuntu 20.04.4 LTS 64-bit Mesa Intel® UHD Graphics (TGL GT1) / Mesa Intel® UHD Graphics (TGL GT1),去掉帧率限制和usb2.0的接口限制

/home/ubuntu/nanodet/oak_detect_head/cmake-build-debug/depthai

[2023-02-03 08:51:03.664] [warning] VideoEncoder setDefaultProfilePreset: passing 'width'/ 'height' is deprecated. The size is auto-determined from first frame

[19443010F1F6F51200] [3.1] [37.694] [NeuralNetwork(7)] [warning] Network compiled for 4 shaves, maximum available 12, compiling for 6 shaves likely will yield in better performance

Output layer names: 304, 324, output, fps 0.000000

fps 1.764677

fps 6.032386

fps 6.032386

fps 6.032386

fps 6.032386

fps 6.032386

fps 6.032386

fps 6.032386

fps 6.255304

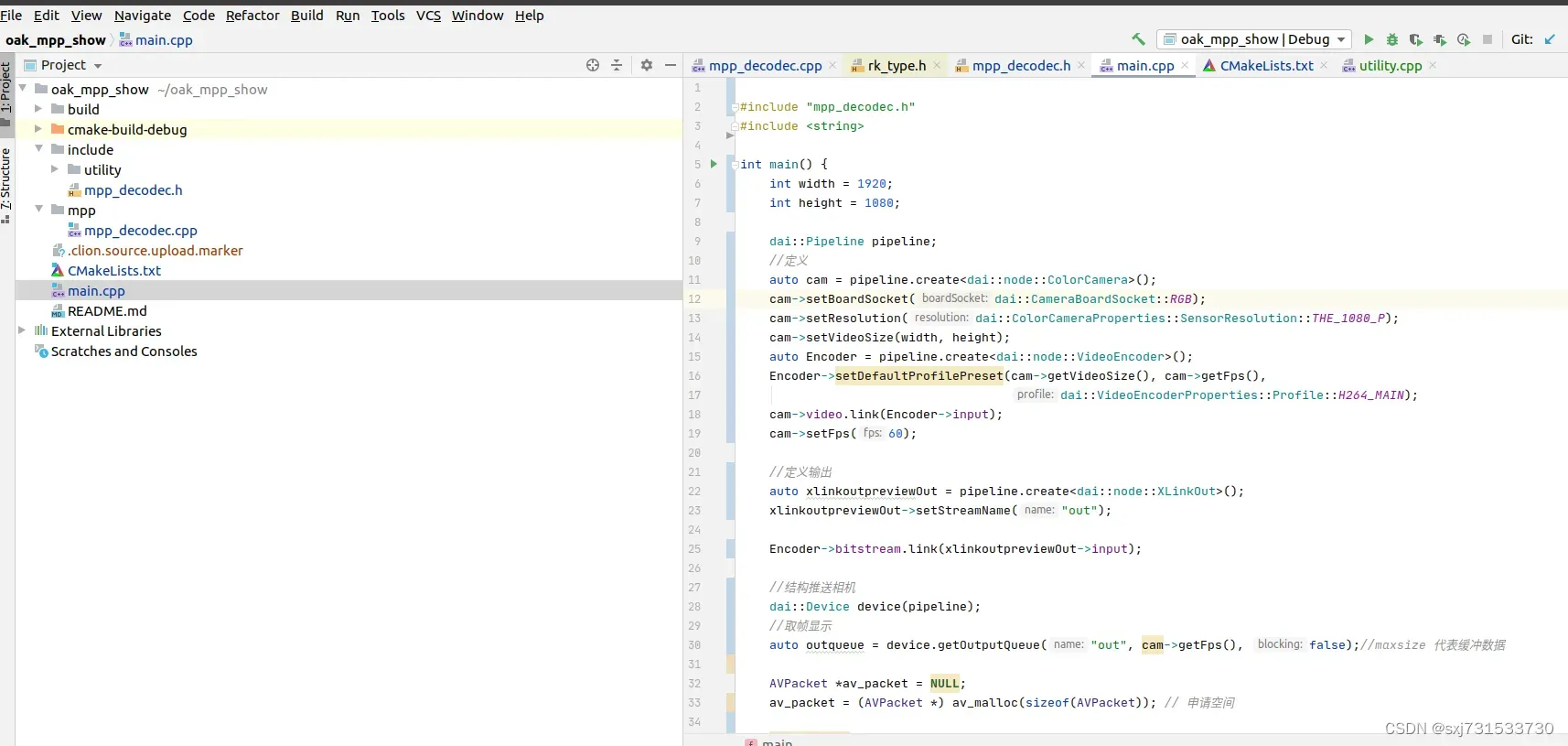

fps 6.2553042)以mpp硬解码去解析oak的视频帧,修改官方的demo代码,先执行

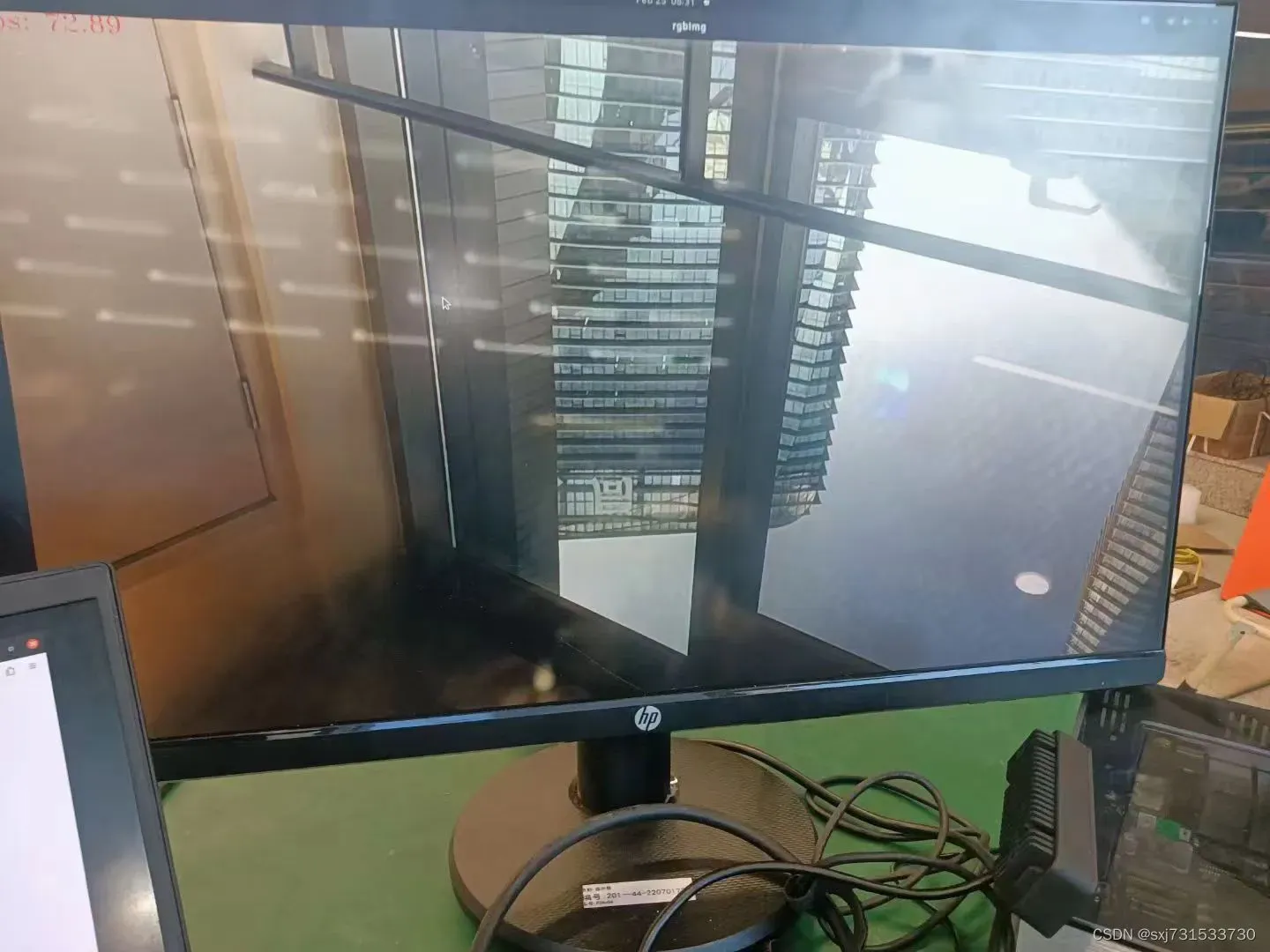

firefly@firefly:~$ sudo cp -r /usr/include/libdrm/* /usr/include/这里提供提供一个单纯的使用mpp解码的oak的编码产生的h264数据https://github.com/sxj731533730/oak_mpp_show.git,真的很快42FPS

测试结果

这就很强了,在开发板的电压和oak峰值限制条件下,使用编码方式降低数据传输量,又用rk的mpp硬件解码加速处理数据,yyds (这里以本地写图片cv.imwrite测试结果,cv.show显示画面好像掉帧率),进一步修正代码,提速检测

3)修改上述代码使用yolov7-tiny的oak编码-mpp硬件解码-写图片本地,也还是不太快在12fps,看样子限制条件在于oak模型推理速度(模型推理太慢了oak vpu)

参考

Welcome to Core-3588J Manual — Firefly Wiki

Firefly-Linux / ff_media · GitLab

https://github.com/yijiesun/mpp_drm_rga_demo

4. 软件开发相关 — Firefly Wiki

文章出处登录后可见!