- 🍊 I spent a whole afternoon writing this review. I think it works well as an introductory tutorial for beginners; please read on.

- 🍊 Featured column: super-resolution reconstruction, code environment setup, and knowledge summary

- 🍊 Author: 墨理, who received a master's degree in 2020 and currently works on image algorithms and AI engineering

📘 Basic Information

- Blind Image Super-resolution with Elaborate Degradation Modeling on Noise and Kernel

- https://github.com/zsyOAOA/BSRDM

- https://arxiv.org/pdf/2107.00986.pdf

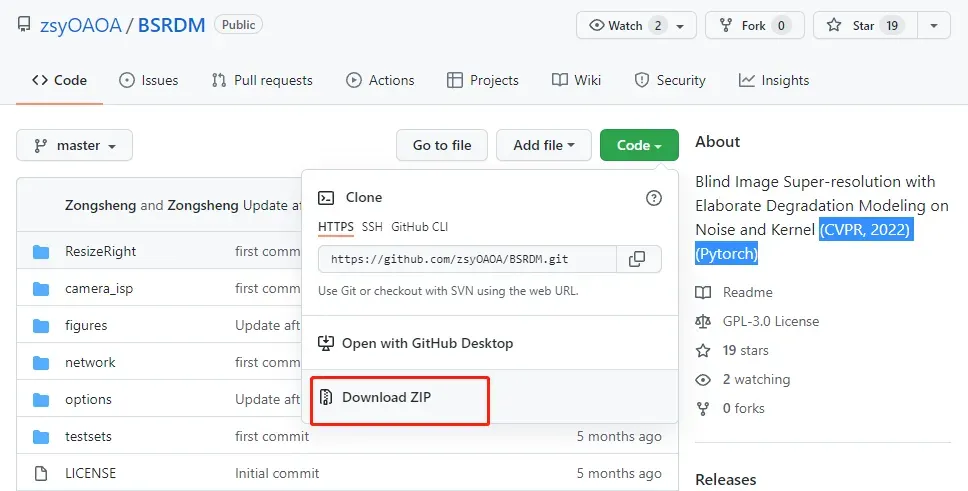

📘 Download the Code

Abstract (the problem the paper addresses)

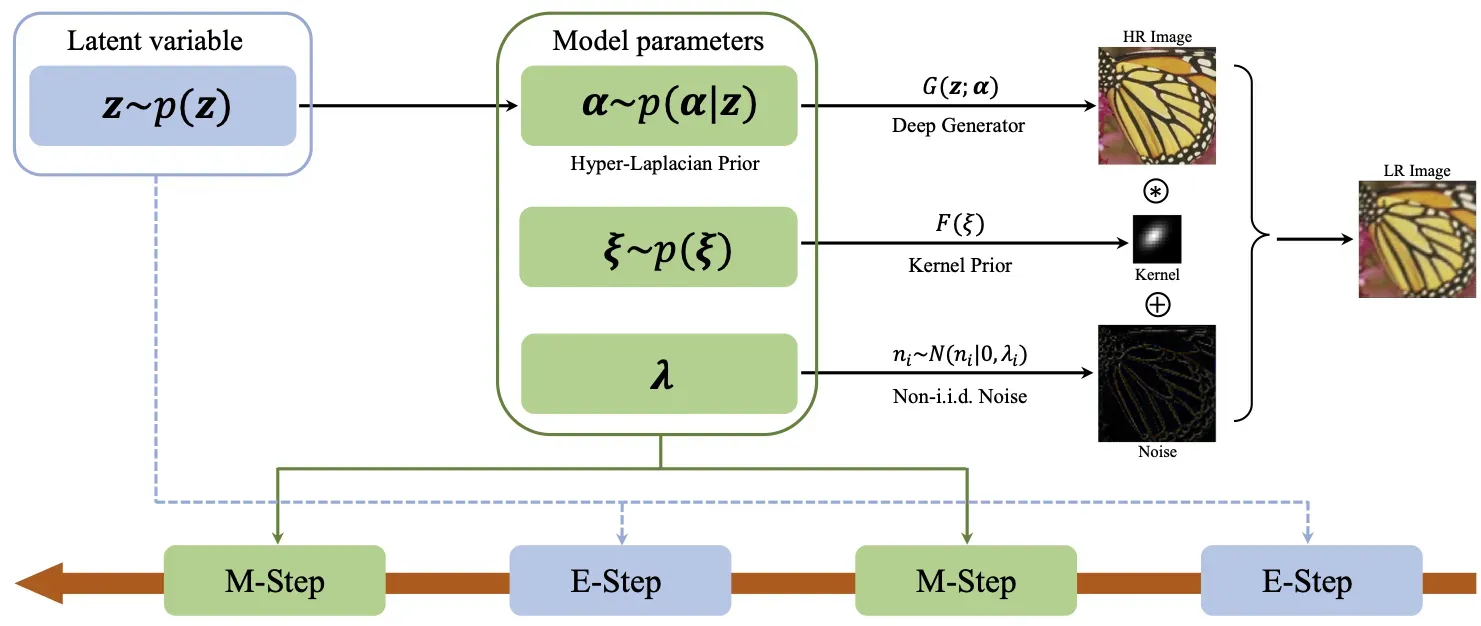

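Although model-based blind single image super-resolution (SISR) has achieved great success, most methods do not fully consider image degradation. First, they always assume that image noise follows an independent and identically distributed (i.i.d.) Gaussian or Laplacian distribution, which largely underestimates the complexity of real noise. Second, the commonly used kernel priors (e.g., normalization, sparsity) are not sufficient to guarantee a rational kernel solution, which degrades the performance of the subsequent SISR task. To address these issues, this paper proposes a model-based blind SISR method under a probabilistic framework that elaborately models image degradation from the perspectives of both noise and blur kernel. Specifically, instead of the traditional i.i.d. noise assumption, a patch-based non-i.i.d. noise model is proposed to handle complicated real noise, which is expected to increase the model's degrees of freedom for representing noise. As for the blur kernel, we construct a concise yet effective kernel generator and plug it into the proposed blind SISR method as an explicit kernel prior (EKP). To solve the proposed model, a theoretically grounded Monte Carlo EM algorithm is specifically designed. Comprehensive experiments demonstrate the superiority of our method over current state-of-the-art methods on both synthetic and real datasets.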
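To make the degradation concrete: blind SR commonly assumes y = (x ⊗ k)↓s + n, i.e. the HR image x is blurred by a kernel k, downsampled by the scale factor s, and corrupted by noise n. The NumPy sketch below synthesizes an LR image this way under stated assumptions: `anisotropic_gaussian_kernel` is a hypothetical helper (not the kernel code from BSRDM), "direct" downsampling is read as keeping every s-th pixel (the demo prints `downsampler : direct`, but I have not checked its exact definition), and the noise is i.i.d. Gaussian with σ = 2.55/255, assuming `noise_level` is on a 0-255 scale. It illustrates the model only; it is not the BSRDM data pipeline.

```python
import numpy as np


def anisotropic_gaussian_kernel(size=21, sigma_x=2.0, sigma_y=1.0, theta=0.0):
    """Hypothetical helper: a normalized, rotated 2-D Gaussian blur kernel (use an odd size)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    c, s = np.cos(theta), np.sin(theta)
    xr = c * xx + s * yy          # coordinates rotated by theta
    yr = -s * xx + c * yy
    k = np.exp(-0.5 * ((xr / sigma_x) ** 2 + (yr / sigma_y) ** 2))
    return k / k.sum()            # kernel entries sum to 1


def blur(img, k):
    """Filter a 2-D image with k using reflect padding; the output keeps img's shape.

    This is correlation, which equals convolution for centrally symmetric
    kernels such as a Gaussian.
    """
    ph, pw = k.shape[0] // 2, k.shape[1] // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for i in range(k.shape[0]):
        for j in range(k.shape[1]):
            out += k[i, j] * padded[i:i + h, j:j + w]
    return out


def degrade(hr, kernel, sf=2, noise_level=2.55, seed=0):
    """y = (x * k) downsampled by sf + n, for a grayscale image in [0, 1]."""
    lr = blur(hr, kernel)[::sf, ::sf]   # assumed 'direct' downsampling: keep every sf-th pixel
    rng = np.random.default_rng(seed)
    return lr + rng.normal(0.0, noise_level / 255.0, size=lr.shape)


hr = np.random.default_rng(1).random((64, 64))
k = anisotropic_gaussian_kernel(theta=np.pi / 4)
lr = degrade(hr, k, sf=2)
print(lr.shape)  # (32, 32)
```

Because the kernel sums to 1, a constant image passes through the blur unchanged, which is a quick sanity check for any kernel you plug in.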

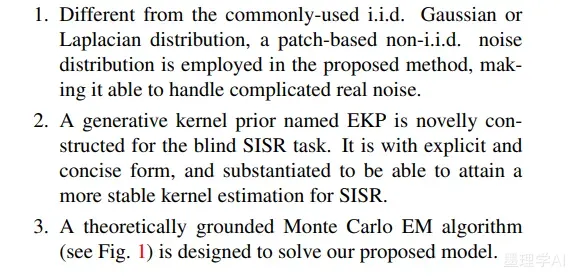

Main contributions (the contributions of this work are three-fold)

Core design of the paper: the Monte Carlo EM (expectation-maximization) algorithm
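In a Monte Carlo EM algorithm, the intractable E-step expectation is approximated with samples of the latent variables, and the M-step then updates the model parameters using those samples. The demo's printed options include `langevin_steps : 5` and `delta : 1.0`, which suggests Langevin dynamics is used for that sampling; the exact BSRDM update is not reproduced here. The sketch below only shows the generic unadjusted Langevin update z ← z − (δ²/2)∇E(z) + δε, ε ~ N(0, I), on a toy energy E(z) = ‖z − μ‖²/2 whose target is N(μ, I). Both `langevin_sample` and the toy energy are hypothetical.

```python
import numpy as np


def langevin_sample(z, grad_energy, n_steps=5, delta=1.0, rng=None):
    """Unadjusted Langevin dynamics: z <- z - (delta**2 / 2) * grad E(z) + delta * eps."""
    rng = np.random.default_rng() if rng is None else rng
    for _ in range(n_steps):
        eps = rng.standard_normal(z.shape)
        z = z - 0.5 * delta ** 2 * grad_energy(z) + delta * eps
    return z


mu = np.array([2.0, -1.0])


def grad_energy(z):
    """Gradient of the toy energy E(z) = ||z - mu||^2 / 2 (target: N(mu, I))."""
    return z - mu


rng = np.random.default_rng(0)
z0 = np.zeros((5000, 2))                      # 5000 independent chains
samples = langevin_sample(z0, grad_energy, n_steps=50, delta=1.0, rng=rng)
print(samples.mean(axis=0))                   # close to mu = [2, -1]
```

Without a Metropolis correction, a large step biases the samples: for this toy energy with δ = 1 the chain's stationary variance is 4/3 rather than 1. In an MCEM loop, such samples would feed the M-step's gradient update of the network parameters.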

Image quality metrics: full-reference (a ground-truth image is required): PSNR, SSIM, LPIPS; no-reference: NIQE, NRQM, PI.

- 🍊 Computing PSNR and SSIM for super-resolution reconstruction (PyTorch implementation)
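For quick reference, a minimal NumPy sketch of the two classic full-reference metrics. It follows common conventions that are assumptions here and may differ from the BSRDM evaluation code: grayscale images in [0, 255], optional cropping of a `border` (often the scale factor), and SSIM with an 11×11 Gaussian window (σ = 1.5) and constants K1 = 0.01, K2 = 0.03 from the original SSIM definition. Many SR papers compute these on the Y channel of YCbCr, which is not done here.

```python
import numpy as np


def psnr(img1, img2, border=0, data_range=255.0):
    """PSNR in dB; crops `border` pixels from each side (often set to the scale factor)."""
    a = np.asarray(img1, dtype=np.float64)
    b = np.asarray(img2, dtype=np.float64)
    if border > 0:
        a = a[border:-border, border:-border]
        b = b[border:-border, border:-border]
    mse = np.mean((a - b) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def _gaussian_window(size=11, sigma=1.5):
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()


def _filter_valid(img, g):
    """Separable 'valid' filtering with the 1-D window g (rows, then columns)."""
    n = len(g)
    h, w = img.shape
    tmp = sum(g[i] * img[:, i:w - n + 1 + i] for i in range(n))
    return sum(g[i] * tmp[i:h - n + 1 + i, :] for i in range(n))


def ssim(img1, img2, data_range=255.0):
    """Mean SSIM of two grayscale images (11x11 Gaussian window, sigma=1.5)."""
    a = np.asarray(img1, dtype=np.float64)
    b = np.asarray(img2, dtype=np.float64)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    g = _gaussian_window()
    mu_a, mu_b = _filter_valid(a, g), _filter_valid(b, g)
    var_a = _filter_valid(a * a, g) - mu_a ** 2
    var_b = _filter_valid(b * b, g) - mu_b ** 2
    cov = _filter_valid(a * b, g) - mu_a * mu_b
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return float(np.mean(num / den))


rng = np.random.default_rng(0)
gt = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
noisy = np.clip(gt + rng.normal(0.0, 5.0, gt.shape), 0, 255)
print(psnr(gt, noisy, border=2), ssim(gt, noisy))
```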

Learned Perceptual Image Patch Similarity (LPIPS) metric

- 🍊 https://zhuanlan.zhihu.com/p/206470186

- 🍊 https://github.com/richzhang/PerceptualSimilarity
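LPIPS compares deep features rather than pixels: for each layer l, the feature maps are unit-normalized along the channel axis, scaled channel-wise by weights w_l, and the squared differences are averaged over space and summed over layers. The sketch below computes only that final formula, following the LPIPS paper, from feature maps you already have. The random feature maps and uniform weights are placeholders; the real metric uses pretrained AlexNet/VGG features and trained weights, which the `lpips` package from the repository above provides.

```python
import numpy as np


def normalize_channels(feat, eps=1e-10):
    """Unit-normalize a (C, H, W) feature map along the channel axis."""
    norm = np.sqrt(np.sum(feat ** 2, axis=0, keepdims=True))
    return feat / (norm + eps)


def lpips_distance(feats_x, feats_y, weights):
    """LPIPS-style distance from per-layer features.

    feats_x, feats_y: lists of (C_l, H_l, W_l) arrays; weights: list of (C_l,) arrays.
    """
    total = 0.0
    for fx, fy, w in zip(feats_x, feats_y, weights):
        diff = normalize_channels(fx) - normalize_channels(fy)
        weighted = (w[:, None, None] * diff) ** 2     # ||w_l * (y_hat - y0_hat)||^2 per pixel
        total += weighted.sum(axis=0).mean()          # sum over channels, mean over H and W
    return float(total)


rng = np.random.default_rng(0)
shapes = [(64, 16, 16), (128, 8, 8)]                  # placeholder feature shapes
fx = [rng.standard_normal(s) for s in shapes]
fy = [f + 0.1 * rng.standard_normal(f.shape) for f in fx]
w = [np.ones(s[0]) for s in shapes]                   # placeholder weights
print(lpips_distance(fx, fx, w), lpips_distance(fx, fy, w))
```

Lower is better: identical features give 0, and unlike PSNR the value depends on which network and weights produce the features.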

📘 Environment Setup

```bash
git clone https://github.com/zsyOAOA/BSRDM.git
## or download the zip and extract it
unzip BSRDM-master.zip
cd BSRDM-master/
```

My server environment:

- Illustrated tutorial: installing CUDA 11.2 for the current user on Ubuntu 18 and configuring cuDNN 8.1 (a complete guide)

```bash
## Server
cat /etc/issue
Ubuntu 18.04.5 LTS \n \l

## CUDA version
nvcc -V
Cuda compilation tools, release 11.2, V11.2.67
Build cuda_11.2.r11.2/compiler.29373293_0

# GPUs
Quadro RTX 5000 16G x 4

# RAM
128G
```

This setup fully meets the code's requirements.

- 🍊 Ubuntu 18.04, CUDA 11.0

- 🍊 Python 3.8.11, PyTorch 1.7.1

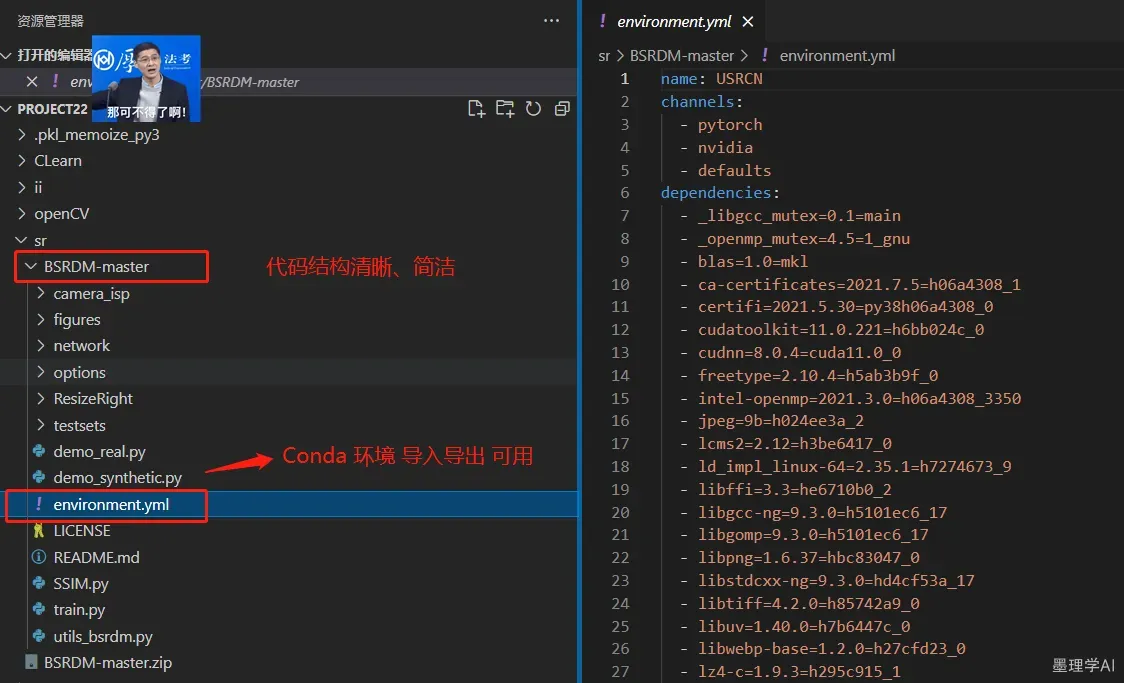

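On the server, after extracting the code, set up the environment with Conda.

- 🍊 Switching Anaconda/conda to mirrors in China: configuration on Windows and Linux, and adding the Tsinghua mirror (a complete guide)

- 🍊 With well-configured conda mirrors, the environment setup takes a little over 10 minutes.

- 🍊 The code is clearly organized, without much redundancy (the directory tree is listed at the end of this post).

The environment setup commands are as follows: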

```bash
conda env create -f environment.yml
conda activate USRCN
```
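After `conda activate USRCN`, it can help to confirm what the environment actually provides before running the demos (the official requirements above list Python 3.8.11 and PyTorch 1.7.1). A minimal sketch, saved for example as a hypothetical `check_env.py`; it only reads version information and does not import PyTorch unless it is installed.

```python
import importlib.util
import sys


def describe_env():
    """Collect Python, PyTorch and CUDA information for the active environment."""
    info = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    if importlib.util.find_spec("torch") is None:
        info["torch"] = None                          # PyTorch is not installed here
        return info
    import torch
    info["torch"] = torch.__version__
    info["cuda_build"] = torch.version.cuda           # CUDA version PyTorch was built with
    info["cuda_available"] = torch.cuda.is_available()
    info["gpu_count"] = torch.cuda.device_count()
    return info


if __name__ == "__main__":
    for key, value in describe_env().items():
        print(f"{key}: {value}")
```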

📘 Running the Demo

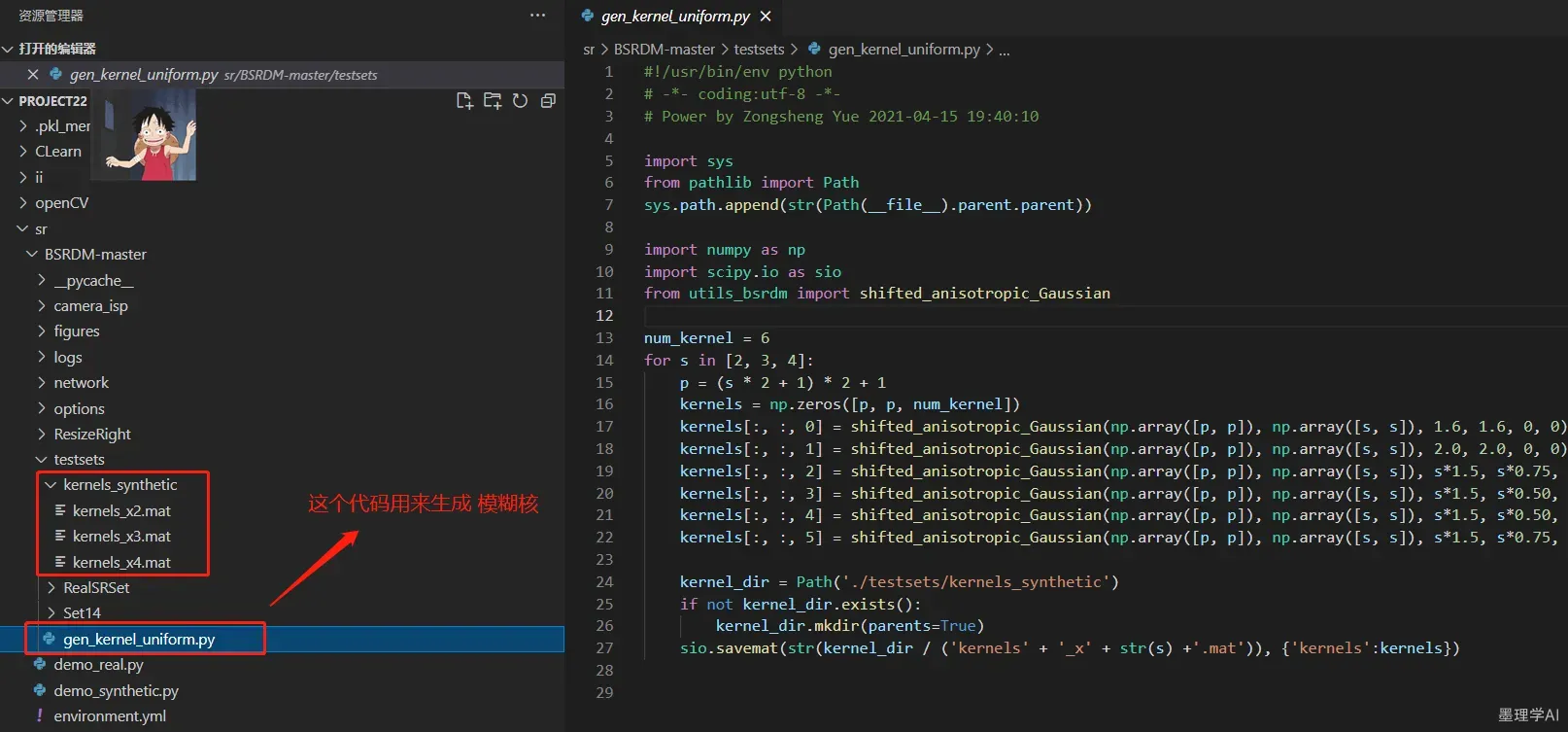

1. The six synthesized blur kernels used in the paper are available via the link in the official README, which also points to how they were generated.

2. To test BSRDM under camera sensor noise, run this command:

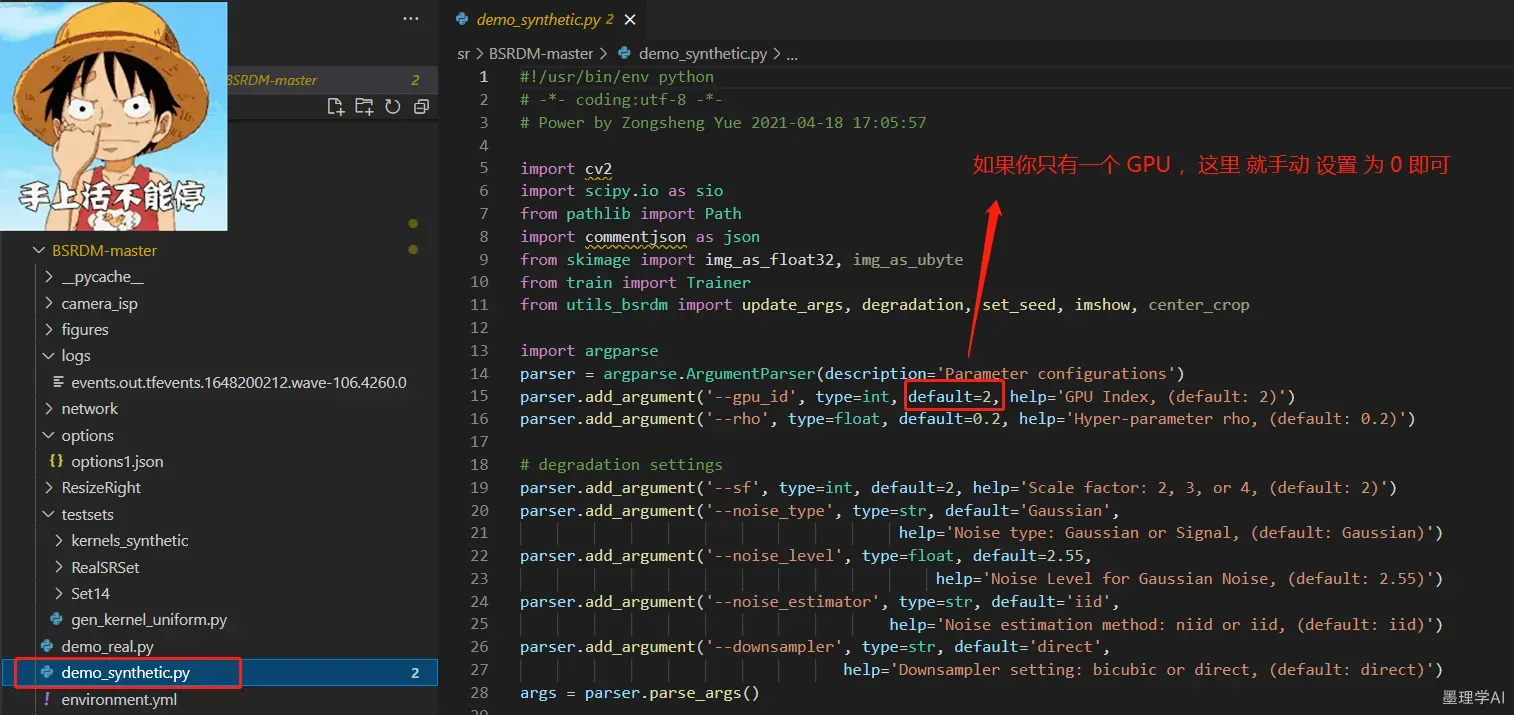

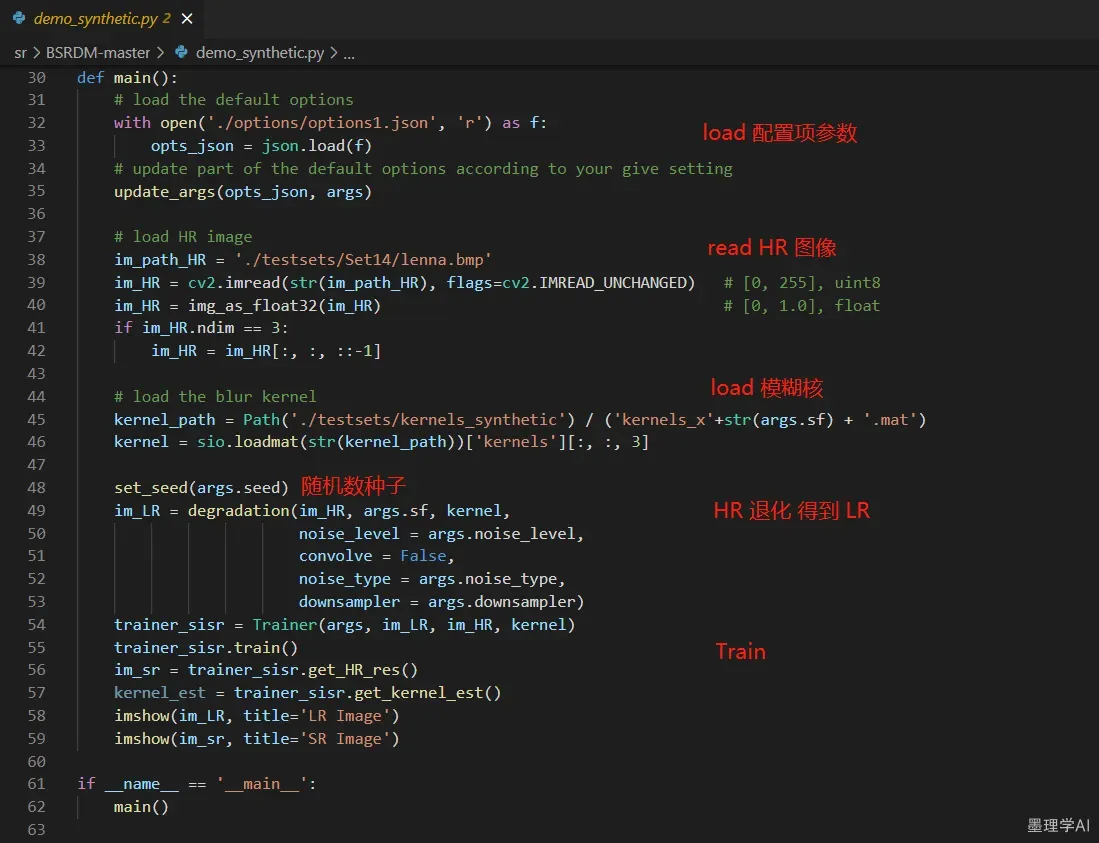

Running the code: set the GPU ID first (the other scripts work the same way, so this is not repeated below).
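The printed options below include `gpu_id : 2`. A common way to pin a PyTorch script to one GPU is to set `CUDA_VISIBLE_DEVICES` before CUDA is initialized; the selected card then appears as `cuda:0`. The sketch assumes a `--gpu_id` argument like the one printed, but I have not verified how the BSRDM demos consume it, so treat the parser as hypothetical. Prefixing the command, e.g. `CUDA_VISIBLE_DEVICES=2 python demo_synthetic.py ...`, achieves the same from the shell.

```python
import argparse
import os


def select_gpu(argv=None):
    """Parse a hypothetical --gpu_id flag and expose only that GPU to CUDA."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--gpu_id", type=int, default=0)
    args, _unknown = parser.parse_known_args(argv)   # ignore the script's other flags
    # Must run before torch initializes CUDA; the chosen GPU then appears as cuda:0.
    os.environ["CUDA_VISIBLE_DEVICES"] = str(args.gpu_id)
    return args.gpu_id


if __name__ == "__main__":
    print("Using GPU", select_gpu())
```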

The main functions and structure of the code are as follows:

- GPU memory usage: 3103 MiB

```bash
python demo_synthetic.py --sf 2 --noise_type signal --noise_estimator niid
```

- The output is as follows:

```
python demo_synthetic.py --sf 2 --noise_type signal --noise_estimator niid
/home/墨理学AI/anaconda3/envs/USRCN/lib/python3.8/site-packages/Cython/Compiler/Main.py:369: FutureWarning: ...
## Note the FutureWarning here. Such warnings usually come from library version differences; they do not stop the code from running and can generally be ignored.
gpu_id : 2
rho : 0.2
sf : 2
noise_type : signal
noise_level : 2.55
noise_estimator : niid
downsampler : direct
kernel_shift : left
gamma : 0.67
internal_iter_M : 50
window_variance : 15
langevin_steps : 5
delta : 1.0
seed : 1000
max_iters : 400
log_dir : ./logs
lr_G : 0.002
lr_K : 0.005
disp : 1
print_freq : 20
max_grad_norm_G : 10
input_chn : 8
n_scales : 3
down_chn_G : [96, 96, 96]
up_chn_G : [96, 96, 96]
skip_chn_G : 16
use_bn_G : True
2022-03-25 17:03:25.849277: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0
Number of parameters in Generator: 766.21K
Initiliazing the generator...
Initiliazing the kernel...
Iter:0020/0400, Loss:7.571/7.422/0.149, PSNR:28.11, SSIM:0.7661, normG:3.26e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0040/0400, Loss:5.043/4.883/0.161, PSNR:29.51, SSIM:0.8021, normG:1.65e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0060/0400, Loss:0.626/0.464/0.162, PSNR:30.14, SSIM:0.8156, normG:2.00e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0080/0400, Loss:0.557/0.399/0.159, PSNR:30.52, SSIM:0.8233, normG:6.41e-01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0100/0400, Loss:0.534/0.375/0.159, PSNR:30.63, SSIM:0.8254, normG:2.56e-01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0120/0400, Loss:0.935/0.774/0.161, PSNR:30.22, SSIM:0.8244, normG:1.52e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0140/0400, Loss:0.644/0.482/0.163, PSNR:30.66, SSIM:0.8254, normG:3.75e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0160/0400, Loss:0.758/0.592/0.166, PSNR:30.62, SSIM:0.8248, normG:1.41e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0180/0400, Loss:0.722/0.556/0.165, PSNR:30.61, SSIM:0.8246, normG:5.19e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0200/0400, Loss:0.606/0.440/0.167, PSNR:30.85, SSIM:0.8256, normG:1.28e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0220/0400, Loss:0.720/0.550/0.170, PSNR:30.76, SSIM:0.8239, normG:1.30e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0240/0400, Loss:0.682/0.511/0.171, PSNR:30.91, SSIM:0.8244, normG:1.10e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0260/0400, Loss:0.712/0.541/0.171, PSNR:30.97, SSIM:0.8248, normG:9.99e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0280/0400, Loss:0.624/0.450/0.174, PSNR:30.95, SSIM:0.8224, normG:2.67e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0300/0400, Loss:0.640/0.466/0.174, PSNR:30.95, SSIM:0.8223, normG:8.37e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0320/0400, Loss:0.826/0.649/0.176, PSNR:30.68, SSIM:0.8183, normG:1.10e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0340/0400, Loss:0.602/0.427/0.176, PSNR:30.97, SSIM:0.8212, normG:4.31e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0360/0400, Loss:0.561/0.383/0.178, PSNR:30.95, SSIM:0.8189, normG:1.89e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0380/0400, Loss:0.553/0.373/0.180, PSNR:30.91, SSIM:0.8174, normG:4.44e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0400/0400, Loss:0.559/0.378/0.181, PSNR:30.84, SSIM:0.8153, normG:6.78e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
```
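Each log line reports the iteration, a loss whose first value is the sum of the other two (e.g. 7.571 = 7.422 + 0.149), PSNR, SSIM, the generator gradient norm against `max_grad_norm_G` (10), and the two learning rates. To track convergence across runs, the values can be pulled out with a regular expression. The parser below is a hypothetical helper written against the log format shown above, fed here with three lines copied from that output.

```python
import re

LOG_PATTERN = re.compile(
    r"Iter:(\d+)/(\d+), Loss:([\d.]+)/([\d.]+)/([\d.]+), "
    r"PSNR:([\d.]+), SSIM:([\d.]+)"
)


def parse_log(lines):
    """Return (iteration, total_loss, psnr, ssim) tuples from BSRDM-style log lines."""
    records = []
    for line in lines:
        m = LOG_PATTERN.search(line)
        if m:
            records.append((int(m.group(1)), float(m.group(3)),
                            float(m.group(6)), float(m.group(7))))
    return records


log = """\
Iter:0020/0400, Loss:7.571/7.422/0.149, PSNR:28.11, SSIM:0.7661, normG:3.26e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0260/0400, Loss:0.712/0.541/0.171, PSNR:30.97, SSIM:0.8248, normG:9.99e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0400/0400, Loss:0.559/0.378/0.181, PSNR:30.84, SSIM:0.8153, normG:6.78e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
""".splitlines()

records = parse_log(log)
best = max(records, key=lambda r: r[2])   # first record with the highest PSNR
print(best)  # (260, 0.712, 30.97, 0.8248)
```

In the full signal-noise run above, PSNR peaks at 30.97 (iterations 260 and 340) and ends at 30.84, so the last iteration is not necessarily the best one.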

For Gaussian noise, run this command:

```bash
python demo_synthetic.py --sf 2 --noise_type Gaussian --noise_level 2.55
```

- GPU memory usage: 3103 MiB

- The output is as follows:

```
python demo_synthetic.py --sf 2 --noise_type Gaussian --noise_level 2.55
gpu_id : 2
rho : 0.2
sf : 2
noise_type : Gaussian
noise_level : 2.55
noise_estimator : iid
downsampler : direct
kernel_shift : left
gamma : 0.67
internal_iter_M : 50
window_variance : 15
langevin_steps : 5
delta : 1.0
seed : 1000
max_iters : 400
log_dir : ./logs
lr_G : 0.002
lr_K : 0.005
disp : 1
print_freq : 20
max_grad_norm_G : 10
input_chn : 8
n_scales : 3
down_chn_G : [96, 96, 96]
up_chn_G : [96, 96, 96]
skip_chn_G : 16
use_bn_G : True
2022-03-25 17:23:30.937200: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0
Number of parameters in Generator: 766.21K
Initiliazing the generator...
Initiliazing the kernel...
Iter:0020/0400, Loss:5.443/5.311/0.132, PSNR:27.78, SSIM:0.7560, normG:3.69e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0040/0400, Loss:2.502/2.357/0.145, PSNR:29.53, SSIM:0.7940, normG:1.80e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0060/0400, Loss:0.522/0.377/0.145, PSNR:30.50, SSIM:0.8159, normG:2.93e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0080/0400, Loss:0.449/0.306/0.143, PSNR:30.93, SSIM:0.8262, normG:8.00e-01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0100/0400, Loss:0.412/0.270/0.143, PSNR:31.25, SSIM:0.8331, normG:3.34e-01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0120/0400, Loss:1.394/1.250/0.144, PSNR:29.94, SSIM:0.8190, normG:1.17e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0140/0400, Loss:0.688/0.542/0.146, PSNR:31.27, SSIM:0.8324, normG:3.83e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0160/0400, Loss:0.645/0.500/0.146, PSNR:31.72, SSIM:0.8428, normG:9.17e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0180/0400, Loss:0.939/0.795/0.144, PSNR:31.31, SSIM:0.8420, normG:1.17e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0200/0400, Loss:0.563/0.418/0.146, PSNR:32.11, SSIM:0.8486, normG:3.13e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0220/0400, Loss:0.568/0.421/0.147, PSNR:32.50, SSIM:0.8554, normG:1.94e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0240/0400, Loss:0.555/0.408/0.147, PSNR:32.72, SSIM:0.8602, normG:6.04e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0260/0400, Loss:1.757/1.611/0.147, PSNR:31.45, SSIM:0.8603, normG:4.53e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0280/0400, Loss:0.977/0.831/0.146, PSNR:32.21, SSIM:0.8557, normG:1.16e+01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0300/0400, Loss:0.720/0.572/0.148, PSNR:32.80, SSIM:0.8625, normG:9.10e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0320/0400, Loss:0.707/0.559/0.147, PSNR:32.91, SSIM:0.8654, normG:8.81e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0340/0400, Loss:0.599/0.451/0.147, PSNR:33.18, SSIM:0.8681, normG:4.61e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0360/0400, Loss:0.606/0.457/0.149, PSNR:33.30, SSIM:0.8710, normG:8.60e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0380/0400, Loss:0.552/0.403/0.149, PSNR:33.41, SSIM:0.8724, normG:9.64e-01/1.00e+01, lrG/K:2.00e-03/5.00e-03
Iter:0400/0400, Loss:0.544/0.395/0.149, PSNR:33.47, SSIM:0.8737, normG:2.81e+00/1.00e+01, lrG/K:2.00e-03/5.00e-03
```
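Besides the noise type, the two runs differ in `noise_estimator` (`niid` vs `iid`). To illustrate the distinction the abstract draws: an i.i.d. model fits one noise variance to the whole residual, while a patch-based non-i.i.d. model lets the variance vary across the image. The sketch below estimates a per-pixel variance as the mean squared residual over a k×k window and compares Gaussian negative log-likelihoods on a residual whose noise level differs between the left and right halves. The window size 15 is only borrowed from the printed `window_variance : 15`; this is an illustration, not BSRDM's estimator.

```python
import numpy as np


def box_mean(img, k):
    """Mean over a k x k window (k odd) with reflect padding, via an integral image."""
    p = k // 2
    padded = np.pad(img, p, mode="reflect")
    s = np.cumsum(np.cumsum(padded, axis=0), axis=1)
    s = np.pad(s, ((1, 0), (1, 0)))               # leading zero row/column
    h, w = img.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def iid_variance(residual):
    """i.i.d. model: one variance for the whole image."""
    return float(np.mean(residual ** 2))


def niid_variance(residual, window=15, floor=1e-8):
    """Non-i.i.d. sketch: per-pixel variance from the local mean squared residual."""
    return np.maximum(box_mean(residual ** 2, window), floor)


def gaussian_nll(residual, var):
    """Mean negative log-likelihood under N(0, var), up to an additive constant."""
    return float(np.mean(0.5 * residual ** 2 / var + 0.5 * np.log(var)))


rng = np.random.default_rng(0)
sigma = np.tile(np.where(np.arange(64) < 32, 0.01, 0.05), (64, 1))   # left vs right half
residual = rng.normal(0.0, 1.0, (64, 64)) * sigma
nll_iid = gaussian_nll(residual, iid_variance(residual))
nll_niid = gaussian_nll(residual, niid_variance(residual))
print(nll_iid, nll_niid)   # the spatially varying model fits this residual better
```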

Evaluation on Real Data

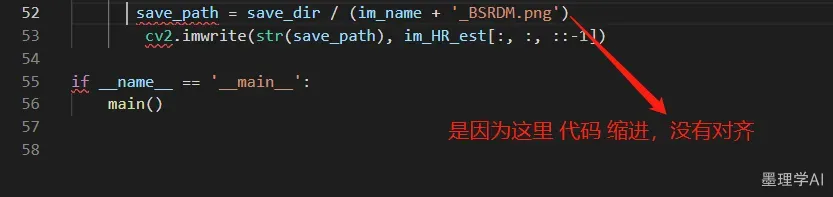

You may run into the following error (the version of the code you download may not have it):

```
python demo_real.py --sf 2
  File "demo_real.py", line 52
    save_path = save_dir / (im_name + '_BSRDM.png')
                                                   ^
IndentationError: unindent does not match any outer indentation level
```
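An `IndentationError` like this is raised at compile time, so it can be located without running the demo or touching the GPU. A minimal sketch using the built-in `compile`; the helper names `find_indentation_error` and `check_file` are hypothetical. The standard-library command `python -m tabnanny demo_real.py` is another way to flag ambiguous tab/space indentation.

```python
from pathlib import Path


def find_indentation_error(source, filename="<string>"):
    """Compile (but do not run) Python source; return (lineno, message) or None."""
    try:
        compile(source, filename, "exec")
    except IndentationError as err:      # TabError is a subclass and is caught too
        return err.lineno, err.msg
    return None


def check_file(path):
    """Check a script such as demo_real.py without executing it."""
    path = Path(path)
    return find_indentation_error(path.read_text(encoding="utf-8"), str(path))


bad = "def f():\n        x = 1\n    return x\n"
print(find_indentation_error(bad))
```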

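- Fix: align the indentation of that line with the surrounding code.

- GPU memory usage: 3255 MiB

- Finishes in a little over 10 minutes (the time varies with the server GPU's load)

- The output is as follows: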

```
python demo_real.py --sf 2
01/20: Image: Lincoln , sf: 2, rho=0.2
02/20: Image: building , sf: 2, rho=0.2
03/20: Image: butterfly , sf: 2, rho=0.2
04/20: Image: butterfly2 , sf: 2, rho=0.2
05/20: Image: chip , sf: 2, rho=0.2
06/20: Image: comic1 , sf: 2, rho=0.2
07/20: Image: comic2 , sf: 2, rho=0.2
08/20: Image: comic3 , sf: 2, rho=0.2
09/20: Image: computer , sf: 2, rho=0.2
10/20: Image: dog , sf: 2, rho=0.2
11/20: Image: dped_crop00061 , sf: 2, rho=0.2
12/20: Image: foreman , sf: 2, rho=0.2
13/20: Image: frog , sf: 2, rho=0.1
14/20: Image: oldphoto2 , sf: 2, rho=0.2
15/20: Image: oldphoto3 , sf: 2, rho=0.4
16/20: Image: oldphoto6 , sf: 2, rho=0.2
17/20: Image: painting , sf: 2, rho=0.1
18/20: Image: pattern , sf: 2, rho=0.2
19/20: Image: ppt3 , sf: 2, rho=0.1
20/20: Image: tiger , sf: 2, rho=0.1
```

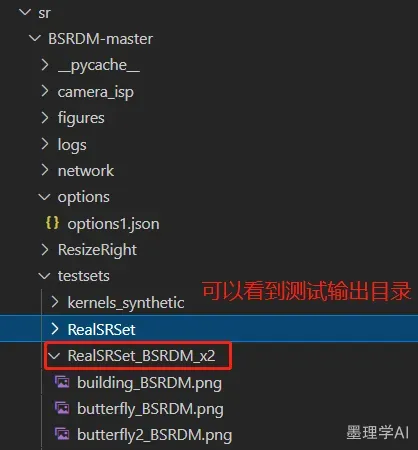

📕 Results on the Real Dataset

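If you are interested, you can further evaluate RealSRSet and RealSRSet_BSRDM_x2 with quantitative metrics; I do not record that here. Since RealSRSet contains only real LR images without ground-truth HR counterparts, only no-reference metrics such as NIQE, NRQM, or PI apply.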

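- 🍊 Computing PSNR and SSIM for super-resolution reconstruction (PyTorch implementation)

- HR (the original high-resolution image)

- LR (the low-resolution image obtained by degrading and downsampling)

- SR (the image produced by super-resolution reconstruction)

- GT (ground truth, generally understood as the original image; if you see it differently, please add a note in the comments)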
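Since `demo_real.py` saves each result as `im_name + '_BSRDM.png'` (see line 52 in the traceback above), LR inputs and SR outputs can be paired by file stem, e.g. for side-by-side viewing or for running a no-reference metric on both. A minimal `pathlib` sketch; the folder names come from this repository's `testsets` directory, while the helper `pair_results` is hypothetical.

```python
from pathlib import Path


def pair_results(lr_dir, sr_dir, suffix="_BSRDM"):
    """Map each LR file name to its SR result <stem><suffix>.png, if that file exists."""
    pairs = {}
    for lr_path in sorted(Path(lr_dir).iterdir()):
        if not lr_path.is_file():
            continue
        sr_path = Path(sr_dir) / f"{lr_path.stem}{suffix}.png"
        if sr_path.exists():
            pairs[lr_path.name] = sr_path
    return pairs


lr_dir = Path("testsets/RealSRSet")
sr_dir = Path("testsets/RealSRSet_BSRDM_x2")
if lr_dir.is_dir():                      # run from the repository root
    for name, sr_path in pair_results(lr_dir, sr_dir).items():
        print(name, "->", sr_path.name)
```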

In this test, the LR images were reconstructed at 2×; the results are as follows:

- 🍊 LR

- 🍊 SR

📕 Visualization

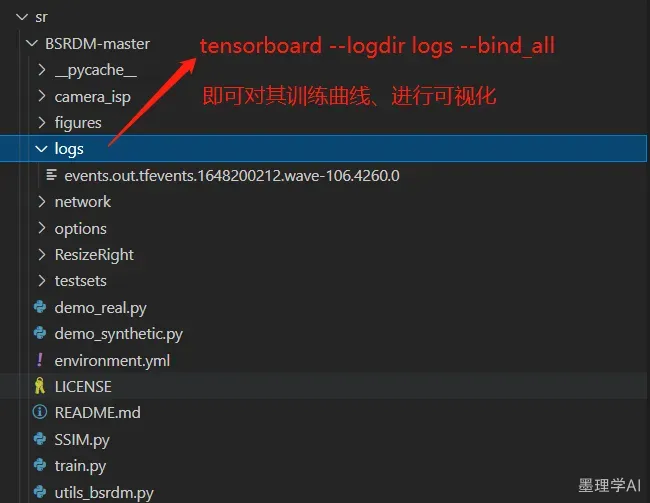

```bash
tensorboard --logdir logs --bind_all
```

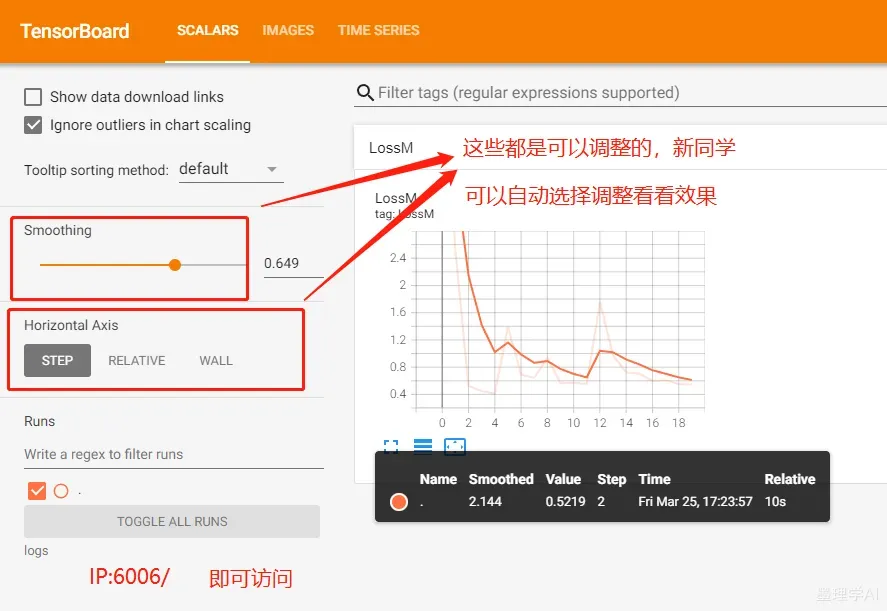

The result of running it:

```
tensorboard --logdir logs --bind_all
# The following output indicates that the visualization is working
2022-03-25 18:29:04.270270: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0
TensorBoard 2.6.0 at http://墨理学AI-106:6006/ (Press CTRL+C to quit)
```

Open http://<server IP>:6006 in a browser to view the visualization.

📘 Training

- The training part has not been officially released yet.

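📕 Source Code and Paper

The official code may be updated later; here I only provide the source code that corresponds to this post:

More will be added later; in the meantime, you can follow 墨理学AI.

Keyword: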

20220325

The code directory structure is as follows:

```
tree -L 2
.
├── camera_isp
│   ├── __init__.py
│   ├── ISP_implement_cbd.py
│   ├── noise_synthetic
│   └── __pycache__
├── demo_real.py
├── demo_synthetic.py
├── environment.yml
├── figures
│   ├── degradation.png
│   └── framework.jpg
├── LICENSE
├── logs
│   └── events.out.tfevents.1648200212.wave-106.4260.0
├── network
│   ├── common.py
│   ├── __init__.py
│   ├── non_local_dot_product.py
│   ├── __pycache__
│   └── skip.py
├── options
│   └── options1.json
├── __pycache__
│   ├── SSIM.cpython-38.pyc
│   ├── train.cpython-38.pyc
│   └── utils_bsrdm.cpython-38.pyc
├── README.md
├── ResizeRight
│   ├── interp_methods.py
│   ├── LICENSE
│   ├── __pycache__
│   ├── README.md
│   └── resize_right.py
├── SSIM.py
├── testsets
│   ├── gen_kernel_uniform.py
│   ├── kernels_synthetic
│   ├── RealSRSet
│   ├── RealSRSet_BSRDM_x2
│   └── Set14
├── train.py
└── utils_bsrdm.py

16 directories, 26 files
```

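📕 Takeaways from This Paper

- It brings traditional machine-learning techniques (probabilistic modeling, Monte Carlo EM) into an innovative network design, which requires some grounding in image-processing fundamentals.

- This line of work is one of the research directions within SISR and super-resolution.

- I will add more thoughts later if I have any.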

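📙 Learn AI Together

墨理's blog has many more practical posts on deep-learning environment setup, computer vision, object detection, SISR, image inpainting, style transfer, and other areas; feel free to browse.

- 🎉 As one of the bloggers with the most practical AI content online, ❤️ making every moment count ❤️

- ❤️ You must have worked hard every day; I wish you success in overcoming difficulties and a bright future.

- 🍊 Deep learning model training and inference: recommended reading order for the basic environment-setup posts (basic installation, carefully curated)

- 🍊 Likes 👍, bookmarks ⭐, and comments 📝 are my greatest motivation to keep writing and updating quality posts!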
