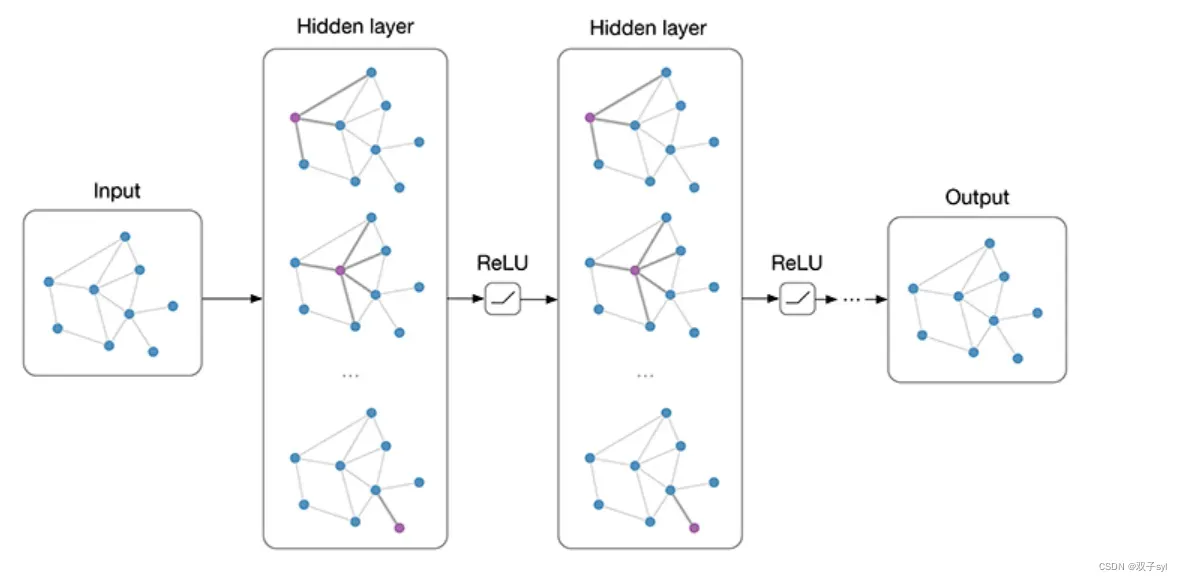

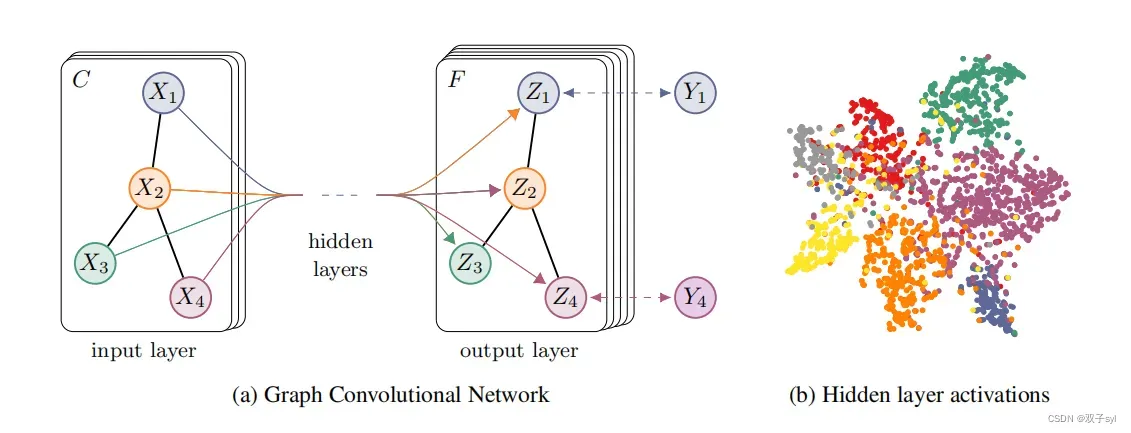

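This post looks at a multi-layer graph convolutional network (GCN) with a layer-wise propagation rule. A GCN is a neural network architecture that operates directly on graph-structured data. It is powerful enough that even a randomly initialized two-layer GCN can produce useful feature representations of the nodes in a graph.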

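This post draws on the original GCN paper and the author's code, and is written as personal study notes. The original author's GitHub repository is: https://github.com/tkipf/pygcn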

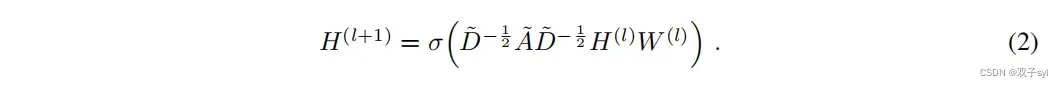

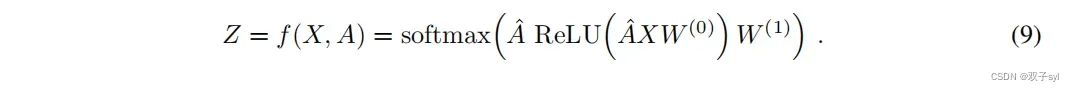

Original figures and core formulas from the paper

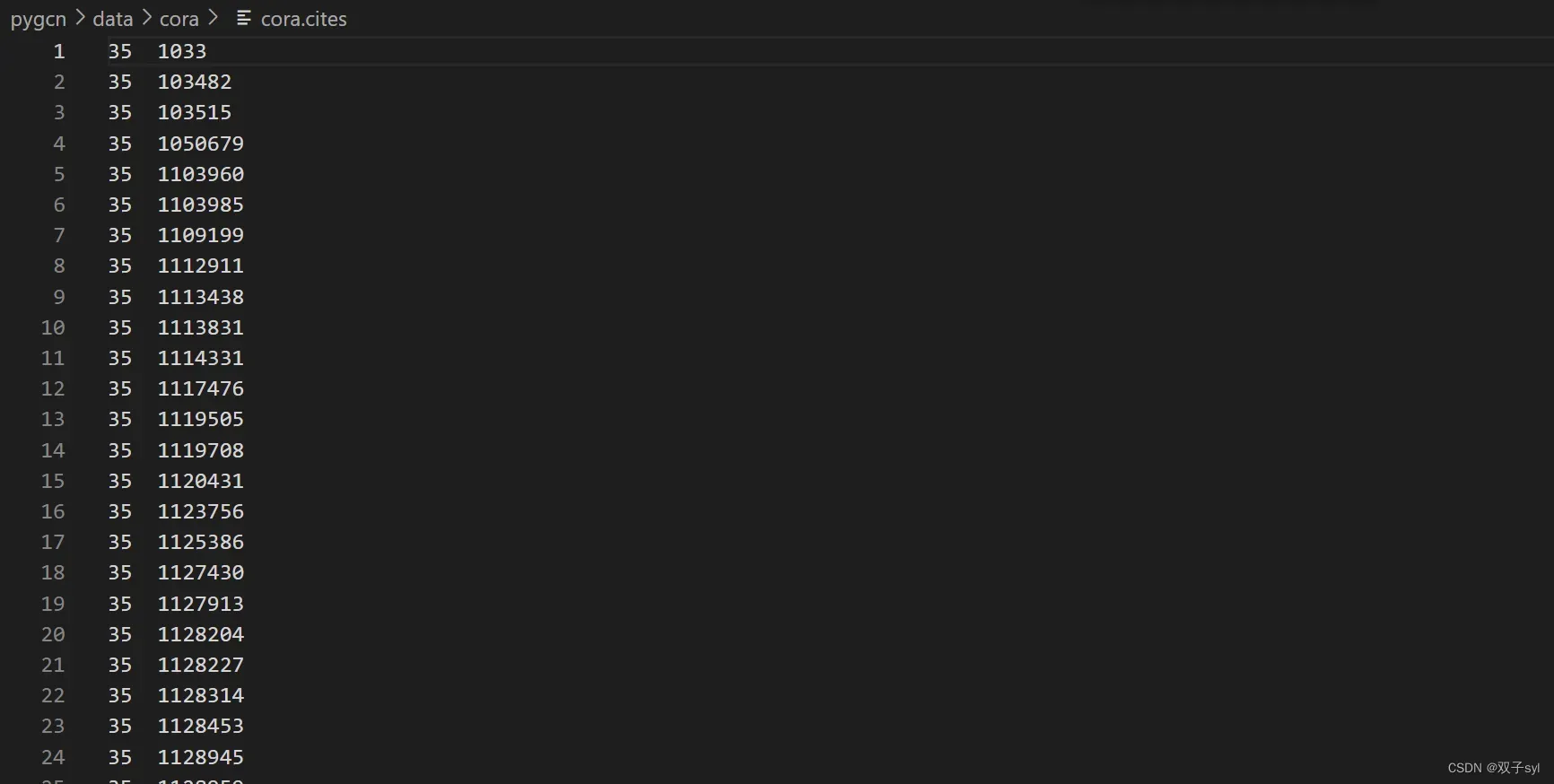

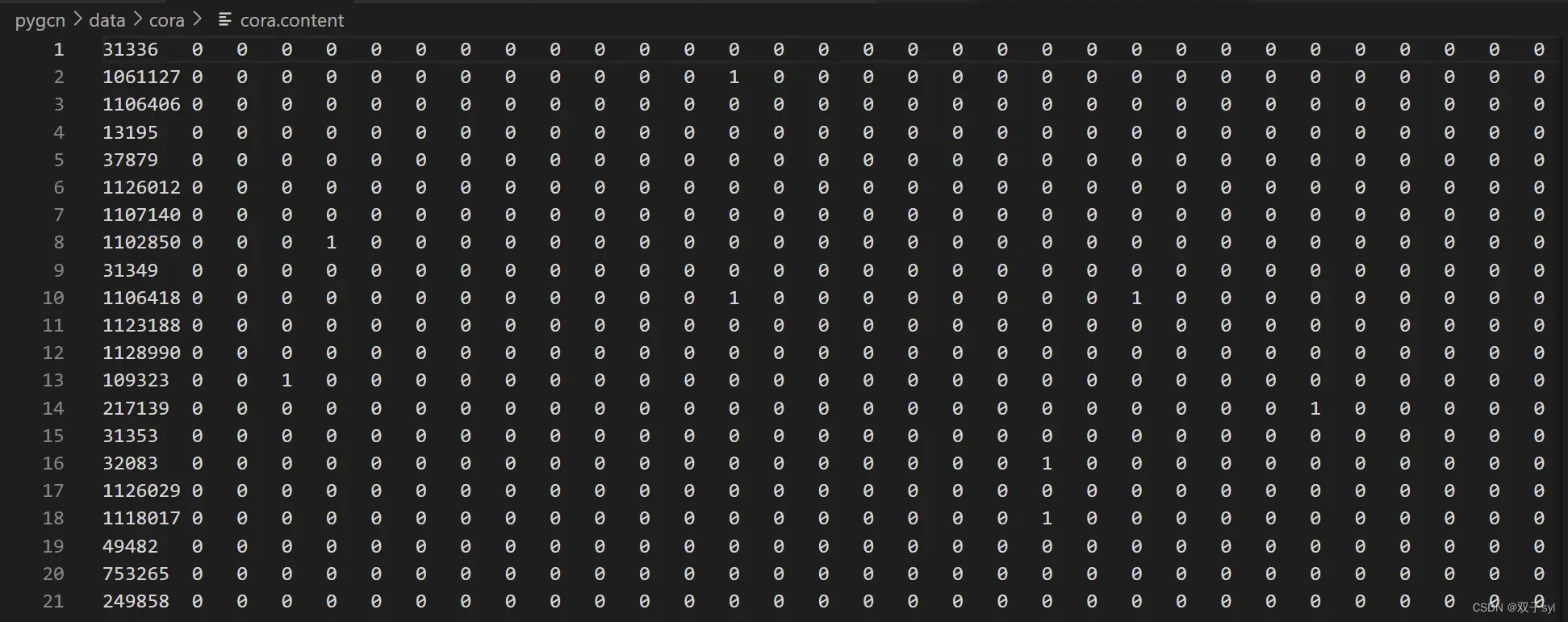

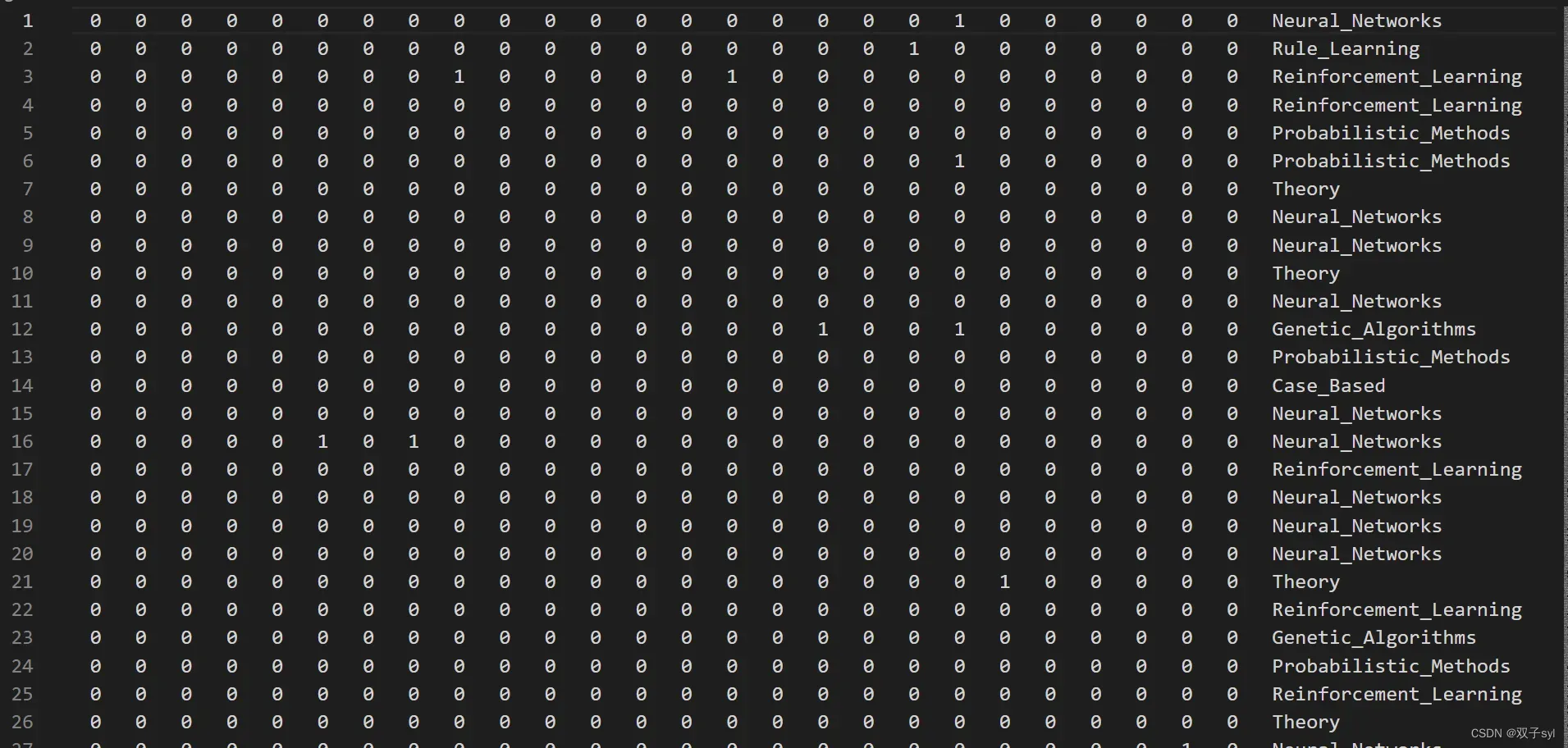
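
For reference, here are the two core formulas written out in LaTeX, following the notation of the paper (https://arxiv.org/abs/1609.02907): $A$ is the adjacency matrix, $I_N$ the identity, $H^{(l)}$ the activations of layer $l$ (with $H^{(0)} = X$), and $W^{(l)}$ the layer's weight matrix. The first is the layer-wise propagation rule, the second is the two-layer model used for node classification.

$$
H^{(l+1)} = \sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right), \qquad \tilde{A} = A + I_N, \quad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}
$$

$$
Z = f(X, A) = \mathrm{softmax}\left(\hat{A}\, \mathrm{ReLU}\left(\hat{A} X W^{(0)}\right) W^{(1)}\right), \qquad \hat{A} = \tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}}
$$

Note that the pygcn code analysed in this post uses the simpler row normalization $\tilde{D}^{-1} \tilde{A}$ in place of the symmetric $\hat{A}$.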

Data

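cora.cites is shown in the figure below; each line is one citation edge between two paper IDs.

cora.content describes each node: the first column is the paper ID, the middle columns are the node's features, and the last column is its class label.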
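
To make the column layout concrete, here is a minimal sketch that parses a made-up three-row sample in the same layout as the .content file. The sample rows and the four-feature width are assumptions for illustration only; the real file has many more feature columns.

```python
import io

import numpy as np

# Hypothetical sample in the .content layout:
# <paper_id> <binary word features ...> <class_label>
sample = io.StringIO(
    "31336\t0\t1\t0\t1\tNeural_Networks\n"
    "1061127\t1\t0\t0\t0\tRule_Learning\n"
    "13195\t0\t0\t1\t1\tNeural_Networks\n"
)

rows = np.genfromtxt(sample, dtype=np.dtype(str))

ids = rows[:, 0].astype(np.int32)            # first column: node IDs
features = rows[:, 1:-1].astype(np.float32)  # middle columns: features
labels = rows[:, -1]                         # last column: class names

print(ids)
print(features.shape)  # (3, 4)
print(labels)
```

The same three slices (`[:, 0]`, `[:, 1:-1]`, `[:, -1]`) are what load_data() applies to the full file.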

Code walkthrough: utils

utils.py

The explanation is in the code comments.

The load_data() function

    import os

    import numpy as np
    import scipy.sparse as sp
    import torch


    def load_data(path=os.path.join(os.getcwd(), 'pygcn', 'data', 'cora'), dataset="cora"):
        """Load citation network dataset (cora only for now)"""
        print('Loading {} dataset...'.format(dataset))

        idx_features_labels = np.genfromtxt("{}/{}.content".format(path, dataset),
                                            dtype=np.dtype(str))
        # Features: the slice 1:-1 is half-open, i.e. the second column through the second-to-last
        features = sp.csr_matrix(idx_features_labels[:, 1:-1], dtype=np.float32)
        labels = encode_onehot(idx_features_labels[:, -1])  # one-hot labels from the last column

        # build graph
        idx = np.array(idx_features_labels[:, 0], dtype=np.int32)  # node (paper) IDs
        # Map each raw paper ID to its row index, e.g.
        # {31336: 0, 1061127: 1, 1106406: 2, 13195: 3}; enumerate yields (i, j) = (0, 31336), ...
        idx_map = {j: i for i, j in enumerate(idx)}
        edges_unordered = np.genfromtxt("{}/{}.cites".format(path, dataset),  # load the edge list
                                        dtype=np.int32)
        # Re-express every edge in row indices: map() looks up each raw ID (e.g. 31336)
        # in idx_map and replaces it with its index (e.g. 0).
        # map() usage: https://blog.csdn.net/weixin_43641920/article/details/122111417
        edges = np.array(list(map(idx_map.get, edges_unordered.flatten())),
                         dtype=np.int32).reshape(edges_unordered.shape)
        adj = sp.coo_matrix((np.ones(edges.shape[0]), (edges[:, 0], edges[:, 1])),  # adjacency matrix from the edges
                            shape=(labels.shape[0], labels.shape[0]),
                            dtype=np.float32)

        # build symmetric adjacency matrix: fill in the transposed entries so that
        # the directed graph becomes an undirected one
        # Explanation: https://blog.csdn.net/iamjingong/article/details/97392571
        # Worked example: see test.py
        adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)

        features = normalize(features)  # row-normalize the features
        adj = normalize(adj + sp.eye(adj.shape[0]))  # row-normalize A + I

        # Node indices of the training, validation and test samples
        idx_train = range(140)
        idx_val = range(200, 500)
        idx_test = range(500, 1500)

        # Convert the NumPy / SciPy data to torch tensors
        features = torch.FloatTensor(np.array(features.todense()))
        labels = torch.LongTensor(np.where(labels)[1])  # one-hot rows -> class indices

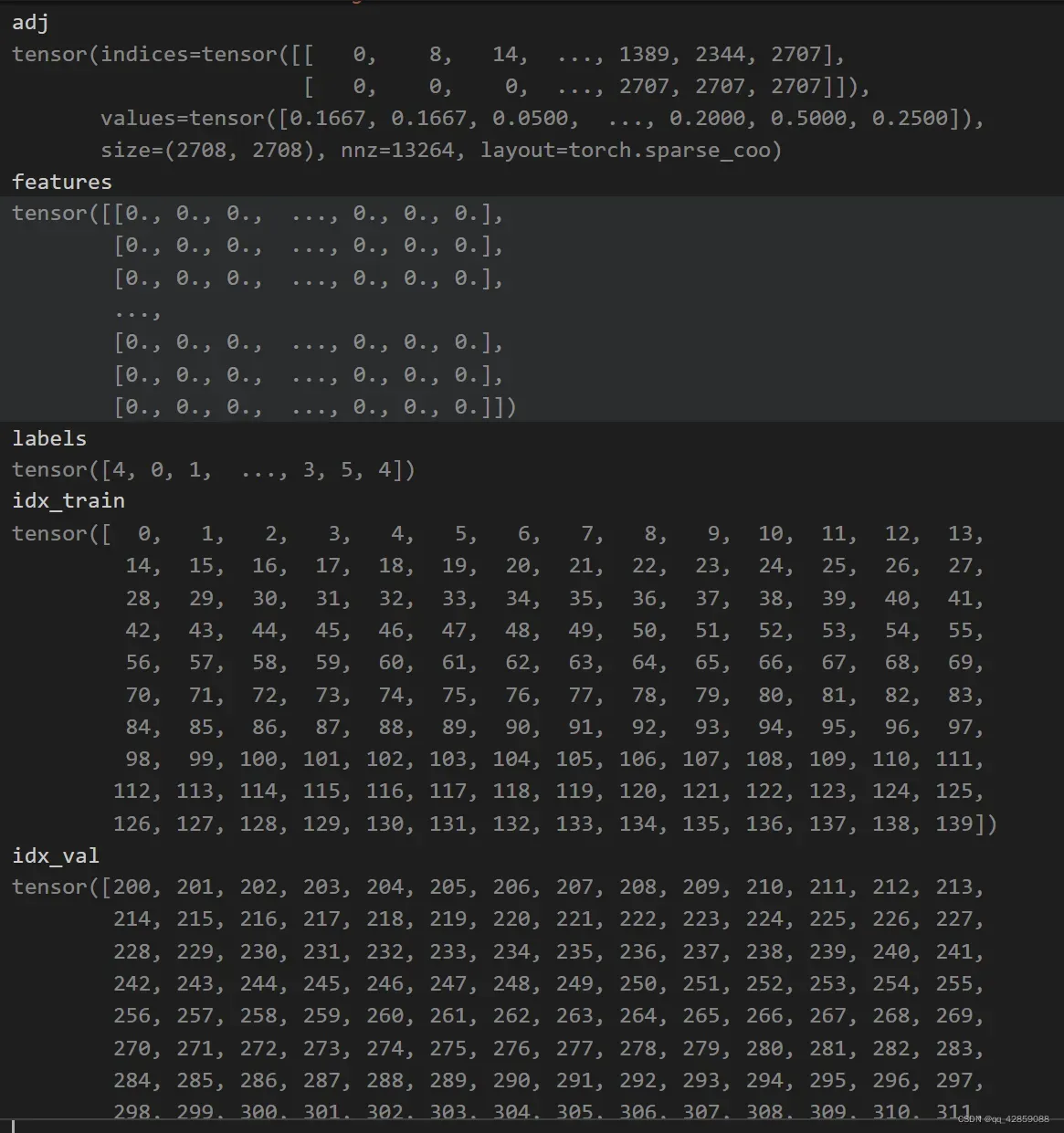

        adj = sparse_mx_to_torch_sparse_tensor(adj)

        idx_train = torch.LongTensor(idx_train)
        idx_val = torch.LongTensor(idx_val)
        idx_test = torch.LongTensor(idx_test)

        return adj, features, labels, idx_train, idx_val, idx_test

Usage of the map function: https://blog.csdn.net/weixin_43641920/article/details/122111417

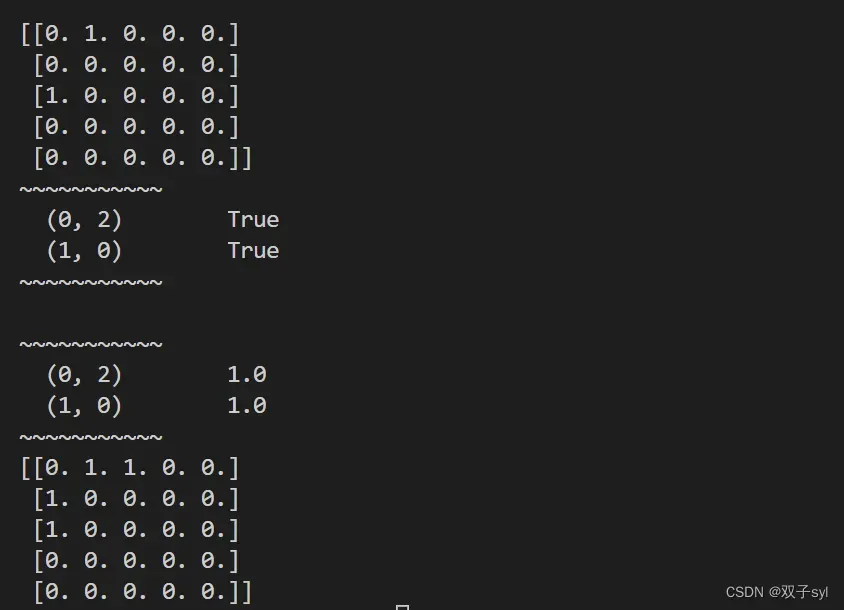
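
As a small illustration of how load_data() uses map together with idx_map, the sketch below remaps two made-up edges from raw paper IDs to row indices. The IDs and edges are hypothetical; only the remapping expression is taken from the code above.

```python
import numpy as np

# Hypothetical raw paper IDs in file order, and two citation edges between them
idx = np.array([31336, 1061127, 1106406, 13195], dtype=np.int32)
edges_unordered = np.array([[31336, 13195],
                            [1106406, 1061127]], dtype=np.int32)

# Raw ID -> row index
idx_map = {j: i for i, j in enumerate(idx)}

# map() calls idx_map.get on every flattened ID;
# reshape restores the (n_edges, 2) layout.
edges = np.array(list(map(idx_map.get, edges_unordered.flatten())),
                 dtype=np.int32).reshape(edges_unordered.shape)
print(edges)  # [[0 3]
              #  [2 1]]
```

One thing to keep in mind: `dict.get` returns None for an ID that is missing from idx_map, so an edge that mentions an unknown paper would most likely make the conversion to an int32 array fail.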

Converting an asymmetric adjacency matrix into a symmetric one: https://blog.csdn.net/iamjingong/article/details/97392571

The normalize function
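
The symmetrization expression is easier to read on a tiny example. The sketch below applies it to a made-up directed graph and checks that the result equals the element-wise maximum of the matrix and its transpose. The graph itself is an assumption chosen for illustration.

```python
import numpy as np
import scipy.sparse as sp

# Hypothetical directed graph on 3 nodes: edges 0->1, 1->2 and 2->1
rows = np.array([0, 1, 2])
cols = np.array([1, 2, 1])
adj = sp.coo_matrix((np.ones(3), (rows, cols)), shape=(3, 3), dtype=np.float32)

mask = adj.T > adj  # True where the reverse edge is larger than the forward one
sym = adj + adj.T.multiply(mask) - adj.multiply(mask)

print(sym.toarray())

# For a 0/1 matrix this is the same as taking max(A, A^T) element-wise
same_as_max = (sym.toarray() == adj.maximum(adj.T).toarray()).all()
print(same_as_max)
```

Edges that already exist in both directions (1->2 and 2->1 here) are left alone, while one-directional edges (0->1) get their reverse added.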

    def normalize(mx):
        """Row-normalize sparse matrix"""
        rowsum = np.array(mx.sum(1))  # sum of each row (the node degree when mx is A + I)
        r_inv = np.power(rowsum, -1).flatten()  # reciprocal of each row sum
        r_inv[np.isinf(r_inv)] = 0.  # all-zero rows give inf; replace it with 0
        r_mat_inv = sp.diags(r_inv)  # diagonal matrix D^-1
        mx = r_mat_inv.dot(mx)  # D^-1 * A: a simplified, non-symmetric normalization
        return mx

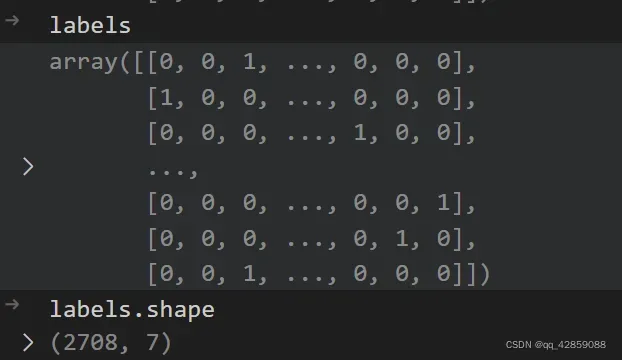
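
A quick way to see what row normalization does is to run it on a three-node graph. This is a minimal sketch: the function body repeats the steps of normalize above (with the divide-by-zero warning silenced), and the path graph is a made-up example.

```python
import numpy as np
import scipy.sparse as sp


def normalize(mx):
    """Row-normalize a sparse matrix (same steps as the function above)."""
    rowsum = np.array(mx.sum(1))
    with np.errstate(divide="ignore"):  # an all-zero row would give 1/0
        r_inv = np.power(rowsum, -1.0).flatten()
    r_inv[np.isinf(r_inv)] = 0.0
    return sp.diags(r_inv).dot(mx)


# Hypothetical undirected path graph 0 - 1 - 2
adj = sp.csr_matrix(np.array([[0, 1, 0],
                              [1, 0, 1],
                              [0, 1, 0]], dtype=np.float32))
a_hat = normalize(adj + sp.eye(adj.shape[0]))

print(a_hat.toarray())
print(a_hat.sum(1))  # every row sums to 1
```

Each row of the result averages a node with its neighbours. The result is no longer symmetric (entry [0, 1] is 1/2 while entry [1, 0] is 1/3), which is the difference from the symmetric normalization used in the paper.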

The encode_onehot function

See onehot.py below.

    def encode_onehot(labels):
        classes = set(labels)
        classes_dict = {c: np.identity(len(classes))[i, :] for i, c in
                        enumerate(classes)}
        labels_onehot = np.array(list(map(classes_dict.get, labels)),
                                 dtype=np.int32)
        return labels_onehot
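
One detail worth knowing: encode_onehot iterates over a set of strings, and the iteration order of such a set can differ between Python processes, so the class-to-column assignment is not guaranteed to be identical from run to run. The sketch below is a variant that sorts the class names first; the function name encode_onehot_sorted and the sample labels are hypothetical, not part of pygcn.

```python
import numpy as np


def encode_onehot_sorted(labels):
    """Variant of encode_onehot (hypothetical name) with a reproducible class order."""
    classes = sorted(set(labels))  # fixed alphabetical order instead of set order
    eye = np.identity(len(classes), dtype=np.int32)
    classes_dict = {c: eye[i] for i, c in enumerate(classes)}
    return np.array([classes_dict[c] for c in labels], dtype=np.int32)


labels = np.array(["Theory", "Neural_Networks", "Theory", "Case_Based"])
onehot = encode_onehot_sorted(labels)
print(onehot)
```

With sorting, "Case_Based" always gets column 0, "Neural_Networks" column 1 and "Theory" column 2 in this sample.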

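The accuracy function

    def accuracy(output, labels):
        preds = output.max(1)[1].type_as(labels)  # index of the largest score in each row = predicted class
        correct = preds.eq(labels).double()  # 1.0 where the prediction matches the label
        correct = correct.sum()
        return correct / len(labels)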

The sparse_mx_to_torch_sparse_tensor function

    def sparse_mx_to_torch_sparse_tensor(sparse_mx):
        """Convert a scipy sparse matrix to a torch sparse tensor."""
        sparse_mx = sparse_mx.tocoo().astype(np.float32)
        # vstack stacks the row and column indices into a (2, nnz) array
        indices = torch.from_numpy(
            np.vstack((sparse_mx.row, sparse_mx.col)).astype(np.int64))
        values = torch.from_numpy(sparse_mx.data)
        shape = torch.Size(sparse_mx.shape)
        # Legacy constructor; torch.sparse_coo_tensor(indices, values, shape) is the current equivalent
        return torch.sparse.FloatTensor(indices, values, shape)

COO sparse matrices: https://blog.csdn.net/yhb1047818384/article/details/78996906
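
The torch.sparse.FloatTensor constructor is a legacy interface. Below is a minimal sketch of the same conversion written with torch.sparse_coo_tensor, run on a made-up 2x2 matrix. The function name to_torch_sparse is hypothetical.

```python
import numpy as np
import scipy.sparse as sp
import torch


def to_torch_sparse(sparse_mx):
    """Same conversion as above, using torch.sparse_coo_tensor."""
    sparse_mx = sparse_mx.tocoo().astype(np.float32)
    # COO format stores one (row, col) pair per nonzero value: shape (2, nnz)
    indices = torch.from_numpy(
        np.vstack((sparse_mx.row, sparse_mx.col)).astype(np.int64))
    values = torch.from_numpy(sparse_mx.data)
    return torch.sparse_coo_tensor(indices, values, sparse_mx.shape)


mx = sp.csr_matrix(np.array([[0.0, 0.5],
                             [1.0, 0.0]]))
t = to_torch_sparse(mx)
print(t)
print(t.to_dense())
```

Printing the sparse tensor shows the indices and values separately, which makes the COO layout visible.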

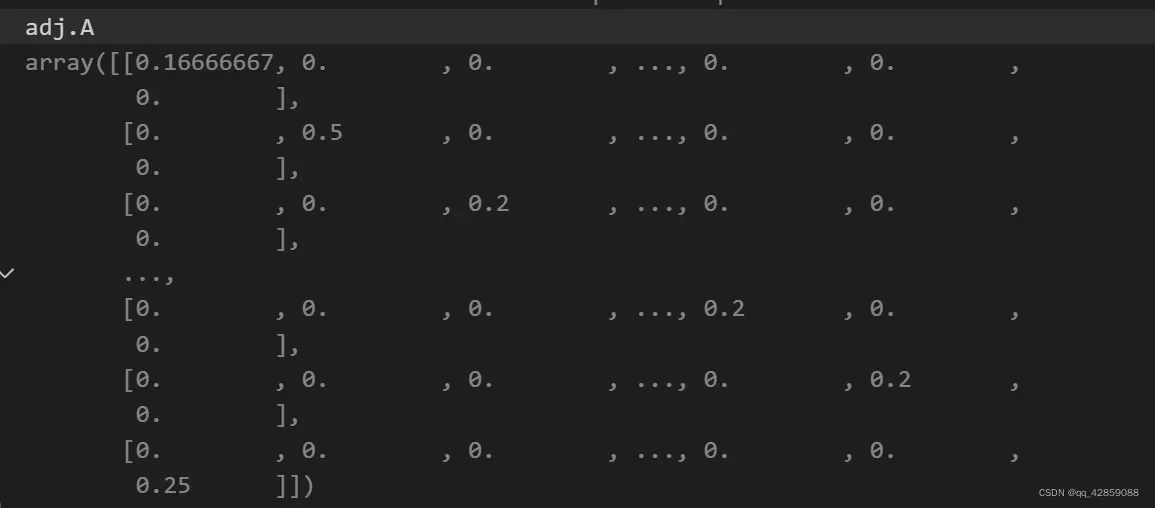

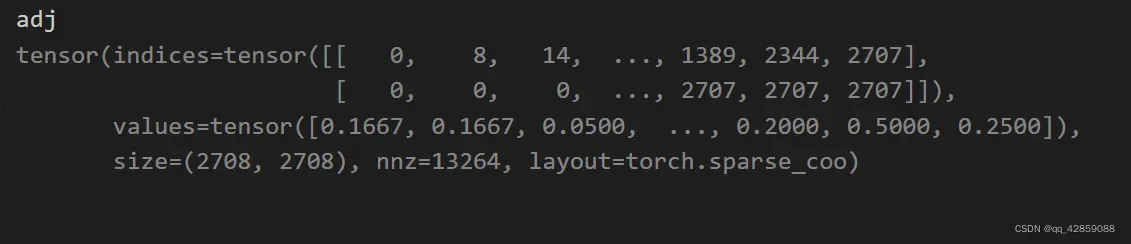

adj before the transformation

adj after the transformation

test.py

    import torch
    import numpy as np
    import scipy.sparse as sp

    i = torch.LongTensor([[0, 1, 1], [2, 0, 2]])
    j = i.t()  # transposed view; not used below
    adj = sp.coo_matrix((np.ones(i.shape[0]), (i[:, 0], i[:, 1])),  # build the adjacency matrix from the edges
                        shape=(5, 5),
                        dtype=np.float32)
    print(adj.toarray())  # adj.A in the original post; toarray() is the spelling current SciPy supports
    print('~~~~~~~~~~~')
    print(adj.T > adj)
    print('~~~~~~~~~~~')
    print(adj.multiply(adj.T > adj))
    print('~~~~~~~~~~~')
    print(adj.T.multiply(adj.T > adj))
    print('~~~~~~~~~~~')
    adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)
    print(adj.toarray())

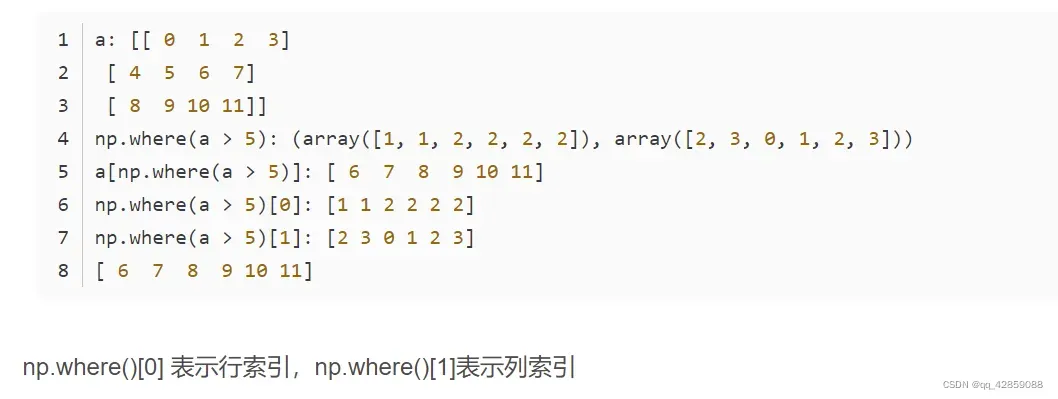

where.py

    import numpy as np

    a = np.arange(12).reshape(3, 4)
    print('a:', a)
    print('np.where(a > 5):', np.where(a > 5))
    print('a[np.where(a > 5)]:', a[np.where(a > 5)])
    print('np.where(a > 5)[0]:', np.where(a > 5)[0])
    print('np.where(a > 5)[1]:', np.where(a > 5)[1])
    print(a[np.where(a > 5)[0], np.where(a > 5)[1]])

https://blog.csdn.net/ysh1026/article/details/109559981
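
This is why load_data() can write np.where(labels)[1] to turn one-hot rows back into class indices: np.where returns the row and column coordinates of the nonzero entries, and each one-hot row has exactly one. A minimal sketch with a made-up label matrix:

```python
import numpy as np

# Hypothetical one-hot labels for 4 nodes and 3 classes
onehot = np.array([[0, 0, 1],
                   [1, 0, 0],
                   [0, 1, 0],
                   [0, 0, 1]], dtype=np.int32)

row_idx, col_idx = np.where(onehot)  # coordinates of the nonzero entries
print(row_idx)  # [0 1 2 3]
print(col_idx)  # [2 0 1 2]

# For one-hot rows the column coordinates are the class indices,
# which is the same result argmax gives
print(np.array_equal(col_idx, onehot.argmax(axis=1)))  # True
```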

onehot.py

    import numpy as np
    import os


    def encode_onehot(labels):
        classes = set(labels)  # a set has no duplicates, so this collects the distinct classes
        # Build a dict from each class to one row of the identity matrix:
        # np.identity(len(classes))[i, :] takes row i of the identity matrix,
        # and in "for i, c in enumerate(classes)" i is the index and c is the class
        classes_dict = {c: np.identity(len(classes))[i, :] for i, c in
                        enumerate(classes)}
        labels_onehot = np.array(list(map(classes_dict.get, labels)),
                                 dtype=np.int32)
        return labels_onehot


    path = os.path.join(os.getcwd(), 'pygcn', 'data', 'cora')
    dataset = "cora"
    idx_features_labels = np.genfromtxt("{}/{}.content".format(path, dataset),
                                        dtype=np.dtype(str))
    labels = encode_onehot(idx_features_labels[:, -1])  # one-hot labels

Code walkthrough: train

The explanation is in the code comments.

train.py

    from __future__ import division
    from __future__ import print_function
    from multiprocessing import cpu_count

    import time
    import argparse
    import numpy as np

    import torch
    import torch.nn.functional as F
    import torch.optim as optim

    from utils import load_data, accuracy
    from models import GCN

    # Training settings
    parser = argparse.ArgumentParser()
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='Disables CUDA training.')
    parser.add_argument('--fastmode', action='store_true', default=False,
                        help='Validate during training pass.')
    parser.add_argument('--seed', type=int, default=42, help='Random seed.')
    parser.add_argument('--epochs', type=int, default=1000,
                        help='Number of epochs to train.')
    parser.add_argument('--lr', type=float, default=0.01,
                        help='Initial learning rate.')
    parser.add_argument('--weight_decay', type=float, default=5e-4,
                        help='Weight decay (L2 loss on parameters).')
    parser.add_argument('--hidden', type=int, default=16,
                        help='Number of hidden units.')
    parser.add_argument('--dropout', type=float, default=0.5,
                        help='Dropout rate (1 - keep probability).')

    args = parser.parse_args()
    args.cuda = (not args.no_cuda) and torch.cuda.is_available()

    # Seed the three random number generators: NumPy, torch on CPU, torch on GPU
    np.random.seed(args.seed)
    torch.manual_seed(args.seed)
    if args.cuda:
        torch.cuda.manual_seed(args.seed)

    # Load data
    adj, features, labels, idx_train, idx_val, idx_test = load_data()

    # Model and optimizer: build the two-layer GCN and initialize its parameters
    model = GCN(nfeat=features.shape[1],
                nhid=args.hidden,  # layer sizes: 1433 -> 16 -> 7
                nclass=labels.max().item() + 1,
                # labels.max() is tensor(6) and labels.max().item() is 6, so nclass = 7
                dropout=args.dropout)

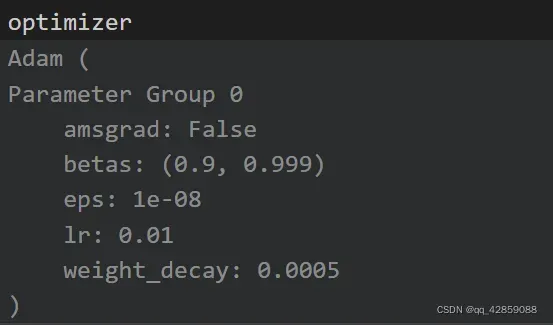

    optimizer = optim.Adam(model.parameters(),
                           lr=args.lr, weight_decay=args.weight_decay)  # Adam is worth a closer look when time permits

    # Move the model and all tensors to the GPU when CUDA is enabled
    if args.cuda:
        model.cuda()
        features = features.cuda()
        adj = adj.cuda()
        labels = labels.cuda()
        idx_train = idx_train.cuda()
        idx_val = idx_val.cuda()
        idx_test = idx_test.cuda()


    def train(epoch):
        t = time.time()
        model.train()
        optimizer.zero_grad()  # clear the gradients left over from the previous step
        output = model(features, adj)  # forward pass (GraphConvolution forward) with inputs (features, adj)

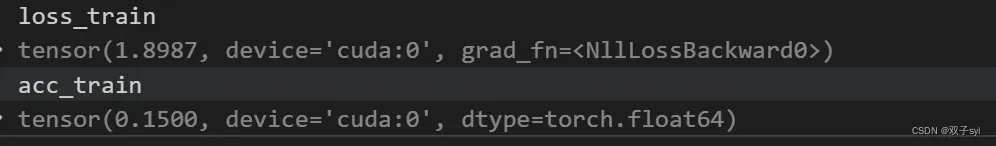

        loss_train = F.nll_loss(output[idx_train], labels[idx_train])
        acc_train = accuracy(output[idx_train], labels[idx_train])
        loss_train.backward()
        optimizer.step()

        if not args.fastmode:
            # Evaluate validation set performance separately,
            # deactivates dropout during validation run.
            model.eval()
            output = model(features, adj)

        loss_val = F.nll_loss(output[idx_val], labels[idx_val])
        acc_val = accuracy(output[idx_val], labels[idx_val])
        print('Epoch: {:04d}'.format(epoch+1),
              'loss_train: {:.4f}'.format(loss_train.item()),
              'acc_train: {:.4f}'.format(acc_train.item()),
              'loss_val: {:.4f}'.format(loss_val.item()),
              'acc_val: {:.4f}'.format(acc_val.item()),
              'time: {:.4f}s'.format(time.time() - t))
        print('Epoch: %d' % epoch)

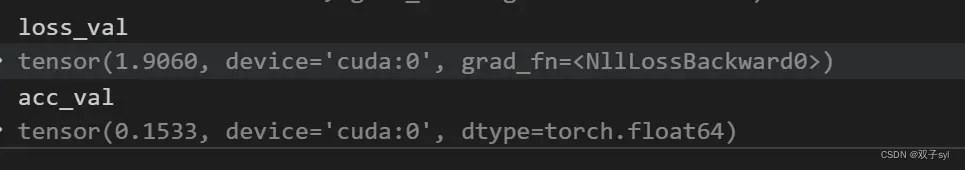

    def test():
        model.eval()
        output = model(features, adj)
        loss_test = F.nll_loss(output[idx_test], labels[idx_test])
        acc_test = accuracy(output[idx_test], labels[idx_test])
        print("Test set results:",
              "loss= {:.4f}".format(loss_test.item()),
              "accuracy= {:.4f}".format(acc_test.item()))


    # Train model
    t_total = time.time()
    for epoch in range(args.epochs):
        train(epoch)
    print("Optimization Finished!")
    print("Total time elapsed: {:.4f}s".format(time.time() - t_total))

    # Testing
    test()
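
Two things in train.py are worth a small experiment: the model is run on all nodes but the loss only uses the rows selected by idx_train, and F.nll_loss is applied to log-softmax outputs, which together amount to a cross-entropy loss. The sketch below checks the second point on made-up scores. All tensors here are hypothetical stand-ins, not the Cora data.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(6, 3)  # hypothetical raw scores: 6 nodes, 3 classes
labels = torch.tensor([0, 2, 1, 1, 0, 2])
idx_train = torch.arange(4)  # pretend only the first 4 nodes are labelled

log_probs = F.log_softmax(logits, dim=1)  # the kind of output GCN.forward returns

# Loss over the labelled rows only, as in train()
loss_nll = F.nll_loss(log_probs[idx_train], labels[idx_train])

# Cross-entropy on the raw scores gives the same value
loss_ce = F.cross_entropy(logits[idx_train], labels[idx_train])

print(loss_nll.item(), loss_ce.item())
```

Indexing with idx_train after the forward pass is what makes the training semi-supervised: unlabelled nodes still take part in the graph convolution, they just do not contribute to the loss.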

layers.py

    import math

    import torch

    from torch.nn.parameter import Parameter
    from torch.nn.modules.module import Module


    class GraphConvolution(Module):
        """
        Simple GCN layer, similar to https://arxiv.org/abs/1609.02907
        """

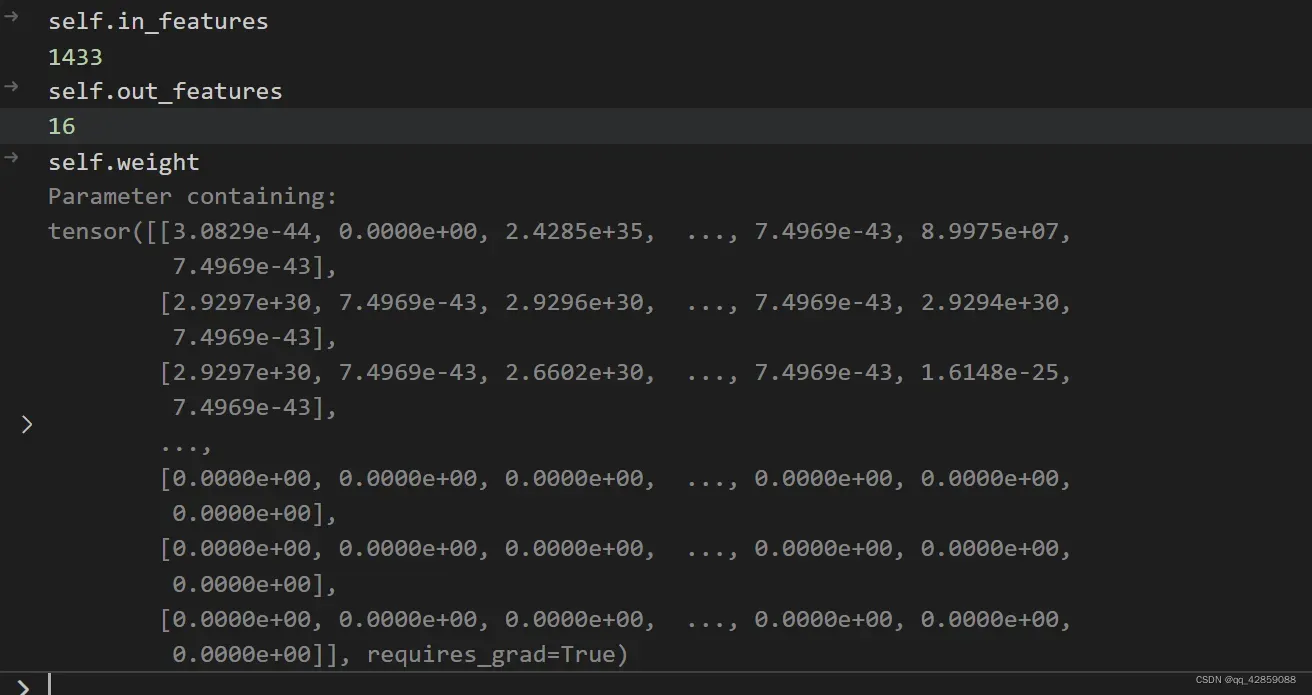

        def __init__(self, in_features, out_features, bias=True):
            super(GraphConvolution, self).__init__()
            self.in_features = in_features
            self.out_features = out_features
            self.weight = Parameter(torch.FloatTensor(in_features, out_features))  # weight matrix of shape (in_features, out_features)
            if bias:
                self.bias = Parameter(torch.FloatTensor(out_features))  # bias vector of length out_features
            else:
                self.register_parameter('bias', None)
            self.reset_parameters()

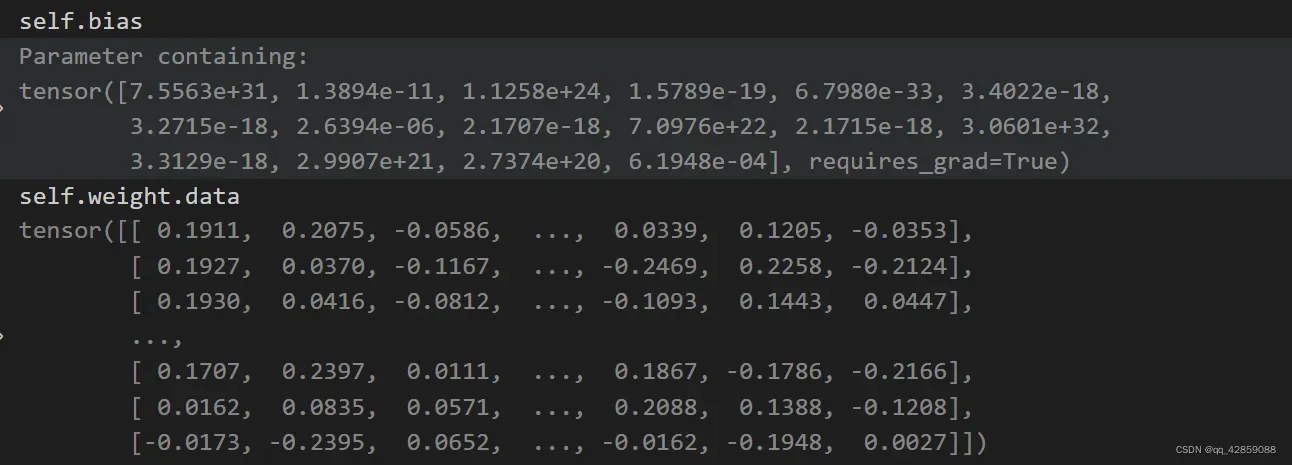

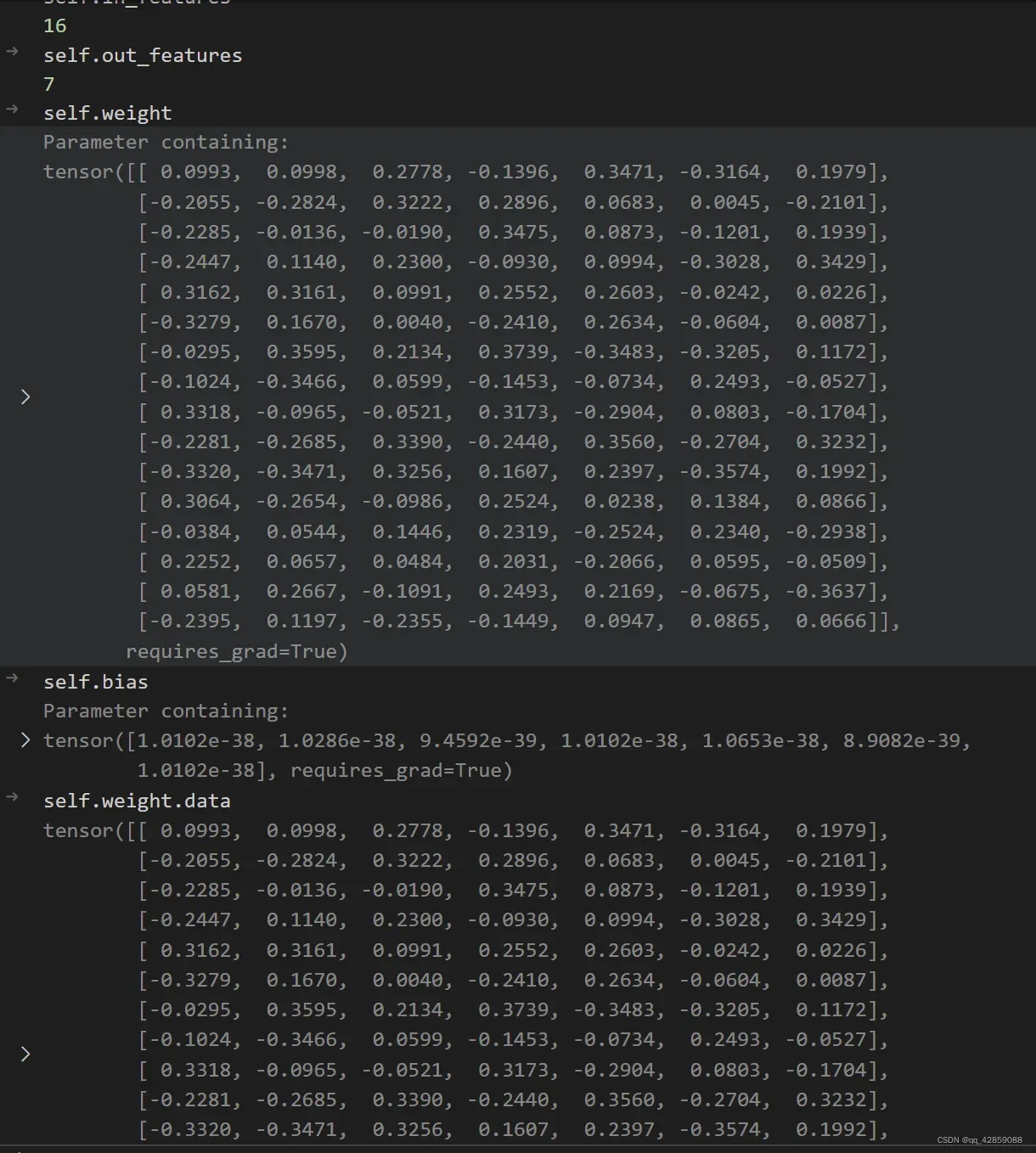

        def reset_parameters(self):
            stdv = 1. / math.sqrt(self.weight.size(1))  # 1 / sqrt(out_features)
            self.weight.data.uniform_(-stdv, stdv)  # random initialization from U(-stdv, stdv)
            if self.bias is not None:
                self.bias.data.uniform_(-stdv, stdv)

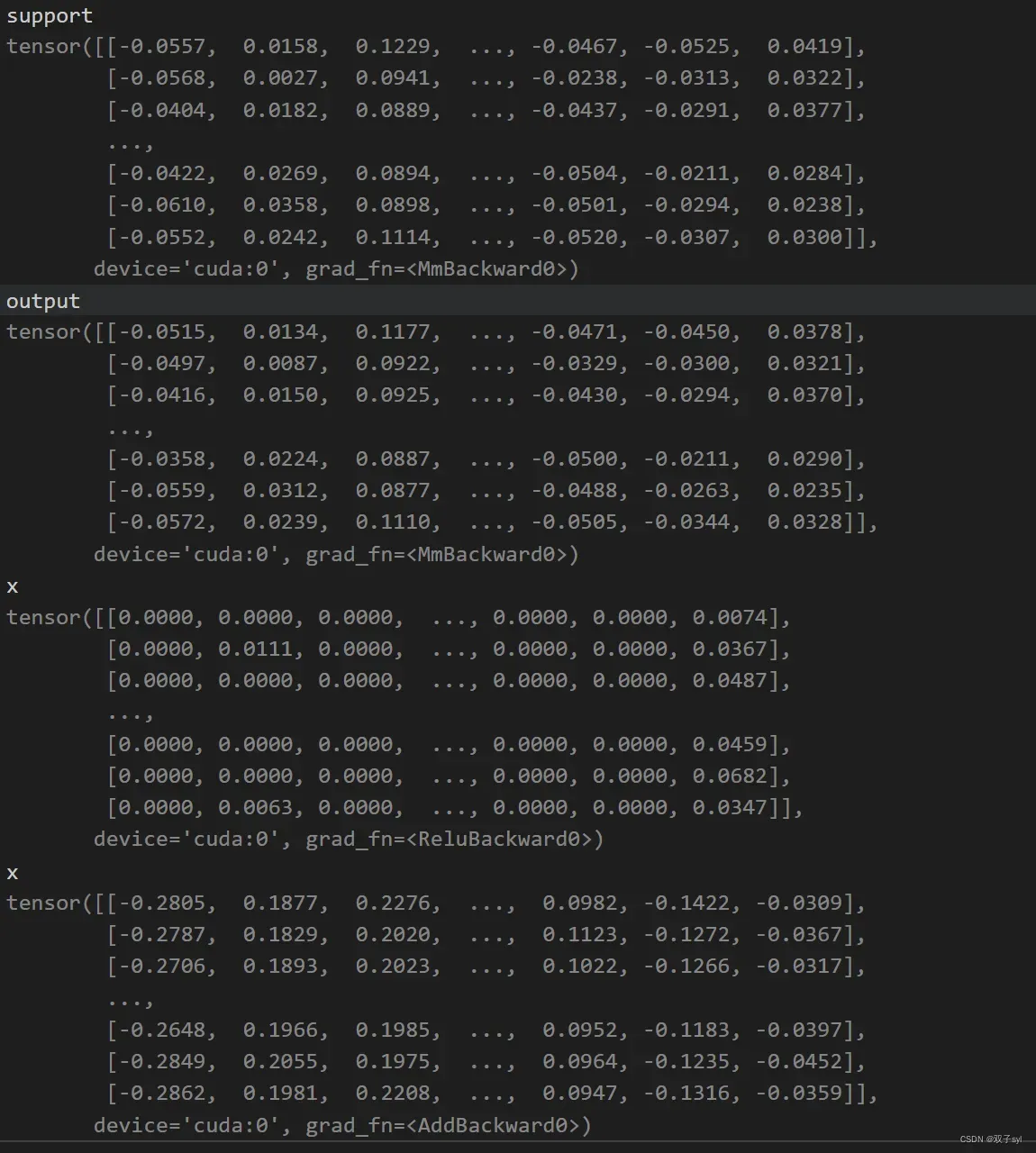

        def forward(self, input, adj):

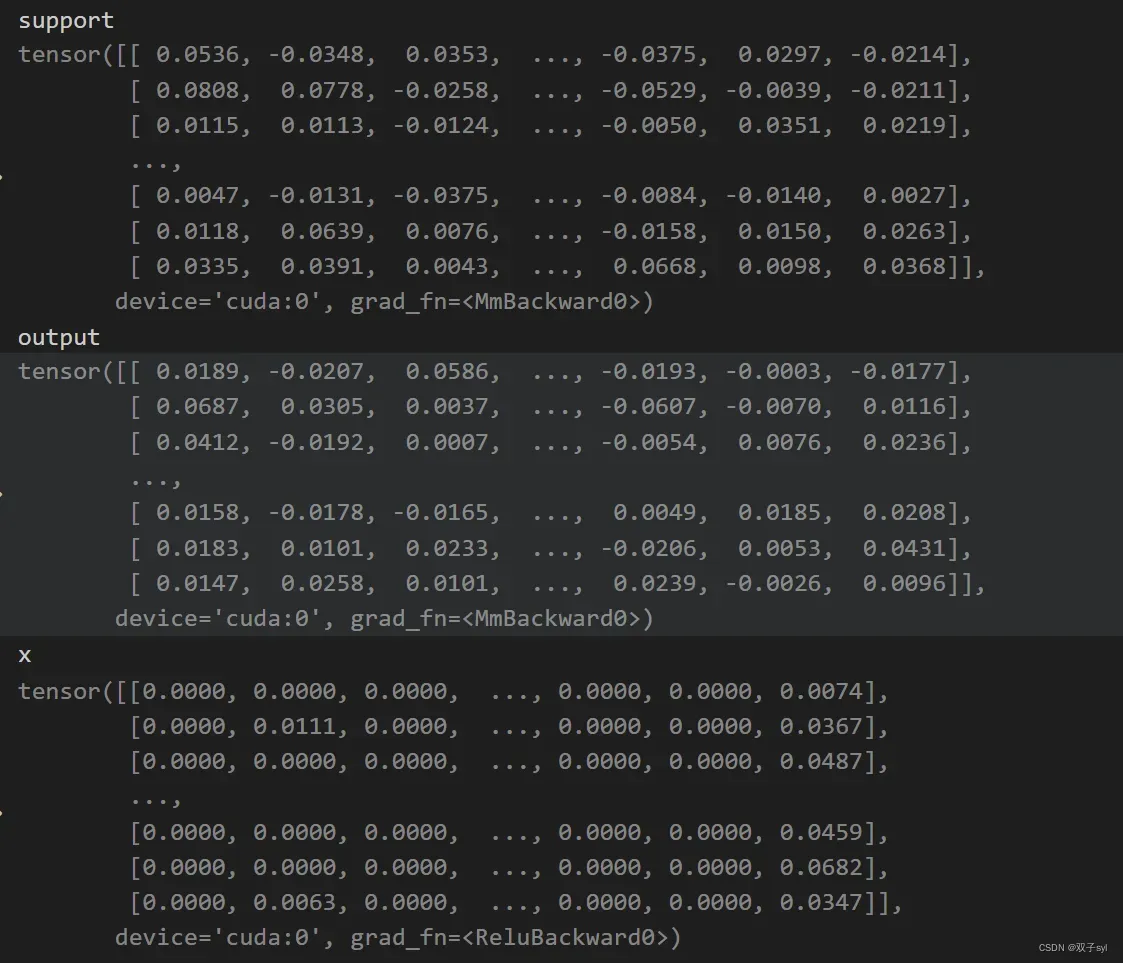

            support = torch.mm(input, self.weight)  # GraphConvolution forward: input * weight, i.e. X W
            output = torch.spmm(adj, support)  # sparse-dense product adj * support; same result as mm, but adj is sparse
            if self.bias is not None:
                return output + self.bias
            else:
                return output

        def __repr__(self):
            return self.__class__.__name__ + ' (' \
                   + str(self.in_features) + ' -> ' \
                   + str(self.out_features) + ')'
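
To see what one GraphConvolution layer computes without any PyTorch machinery, here is a minimal NumPy sketch of the forward pass with the same initialization range as reset_parameters. The graph, the sizes and the random inputs are made up for illustration.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
n_nodes, in_features, out_features = 3, 4, 2

# Row-normalized A + I for a hypothetical path graph 0 - 1 - 2
a_hat = np.array([[1 / 2, 1 / 2, 0],
                  [1 / 3, 1 / 3, 1 / 3],
                  [0, 1 / 2, 1 / 2]])
x = rng.random((n_nodes, in_features))  # made-up node features

# Same range as reset_parameters: U(-stdv, stdv) with stdv = 1 / sqrt(out_features)
stdv = 1.0 / math.sqrt(out_features)
weight = rng.uniform(-stdv, stdv, size=(in_features, out_features))
bias = rng.uniform(-stdv, stdv, size=out_features)

support = x @ weight  # X W: project every node's features
output = a_hat @ support + bias  # A_hat (X W) + b: average over each node's neighbourhood

print(output.shape)  # (3, 2)
```

Multiplying by the weight first and by the adjacency matrix second gives the same result as the other order, but the intermediate matrix has out_features columns instead of in_features, which is smaller whenever the layer reduces the dimension.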

models.py

    import torch.nn as nn
    import torch.nn.functional as F
    from layers import GraphConvolution


    class GCN(nn.Module):
        def __init__(self, nfeat, nhid, nclass, dropout):  # initialization
            super(GCN, self).__init__()

            self.gc1 = GraphConvolution(nfeat, nhid)  # first GCN layer
            self.gc2 = GraphConvolution(nhid, nclass)  # second GCN layer
            self.dropout = dropout

        def forward(self, x, adj):
            x = F.relu(self.gc1(x, adj))  # first layer, followed by ReLU
            x = F.dropout(x, self.dropout, training=self.training)  # randomly drop part of the hidden features (training only)
            x = self.gc2(x, adj)  # second layer

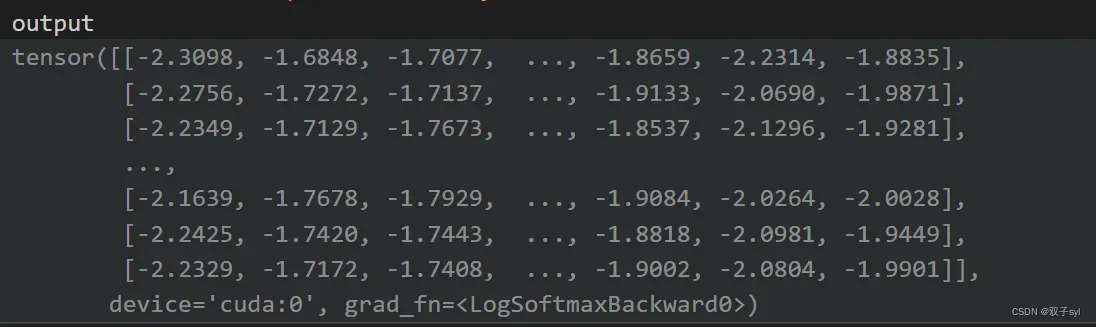

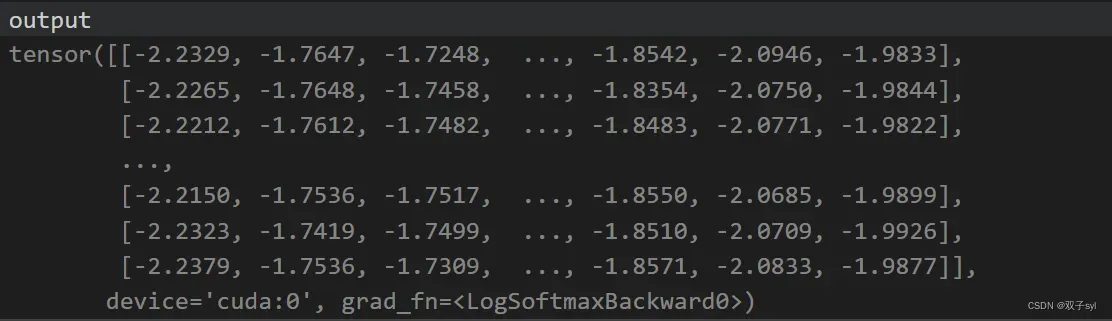

            return F.log_softmax(x, dim=1)  # log-softmax over the classes; train.py pairs it with F.nll_loss
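
The training=self.training argument is what ties the dropout line to model.train() and model.eval() in train.py. A minimal sketch of the two behaviours of F.dropout, on a made-up tensor of ones:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.ones(2, 8)  # hypothetical hidden features

# Eval mode (model.eval()): the input passes through unchanged
kept = F.dropout(x, p=0.5, training=False)

# Train mode (model.train()): each entry is either zeroed
# or scaled by 1 / (1 - p) = 2
dropped = F.dropout(x, p=0.5, training=True)

print(kept)
print(dropped)
```

The rescaling during training keeps the expected value of each feature the same in both modes, so no extra correction is needed at evaluation time.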

Debugging

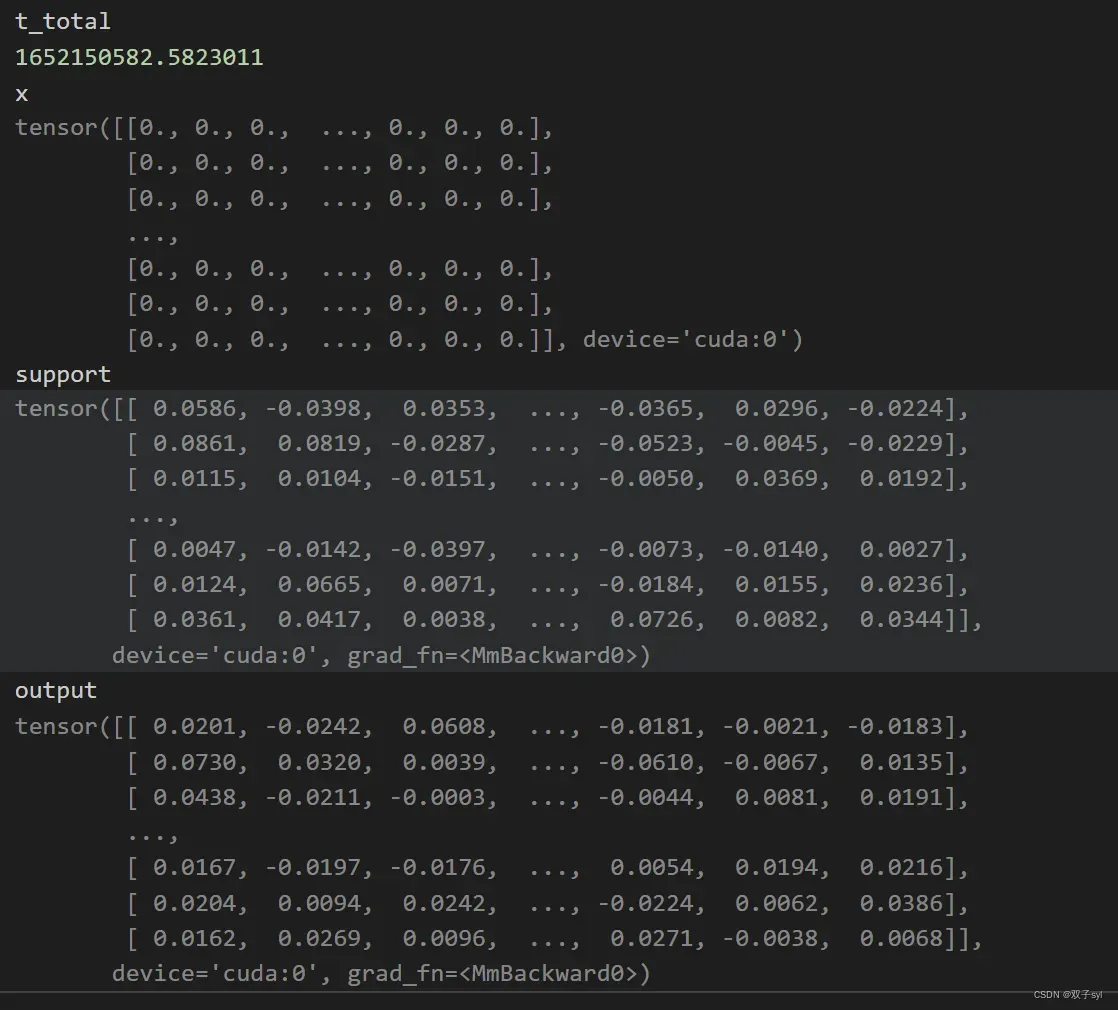

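Result of the data processing

Initialization result of the first layer in layers.py

Initialization result of the second layer in layers.py

Optimizer result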

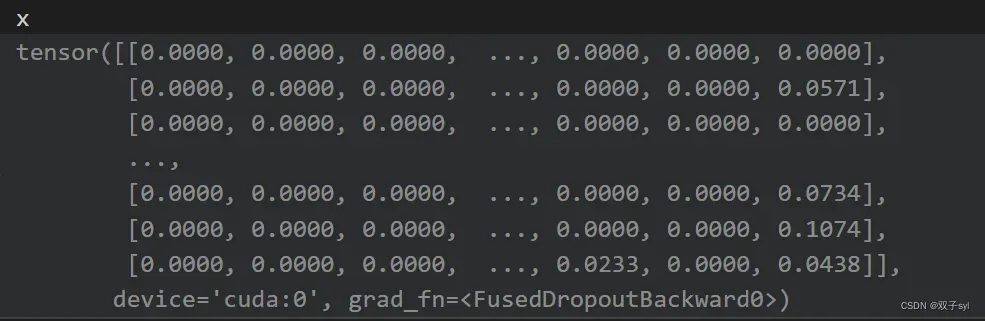

First training pass, x = F.relu(self.gc1(x, adj))  # first GCN layer, followed by ReLU

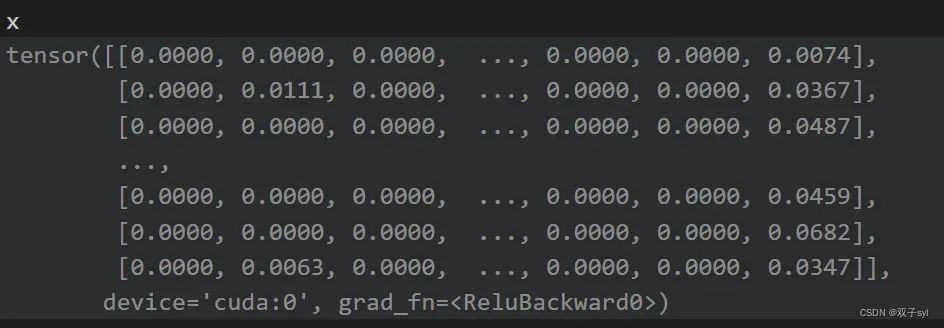

x = F.dropout(x, self.dropout, training=self.training)  # randomly drop part of the features

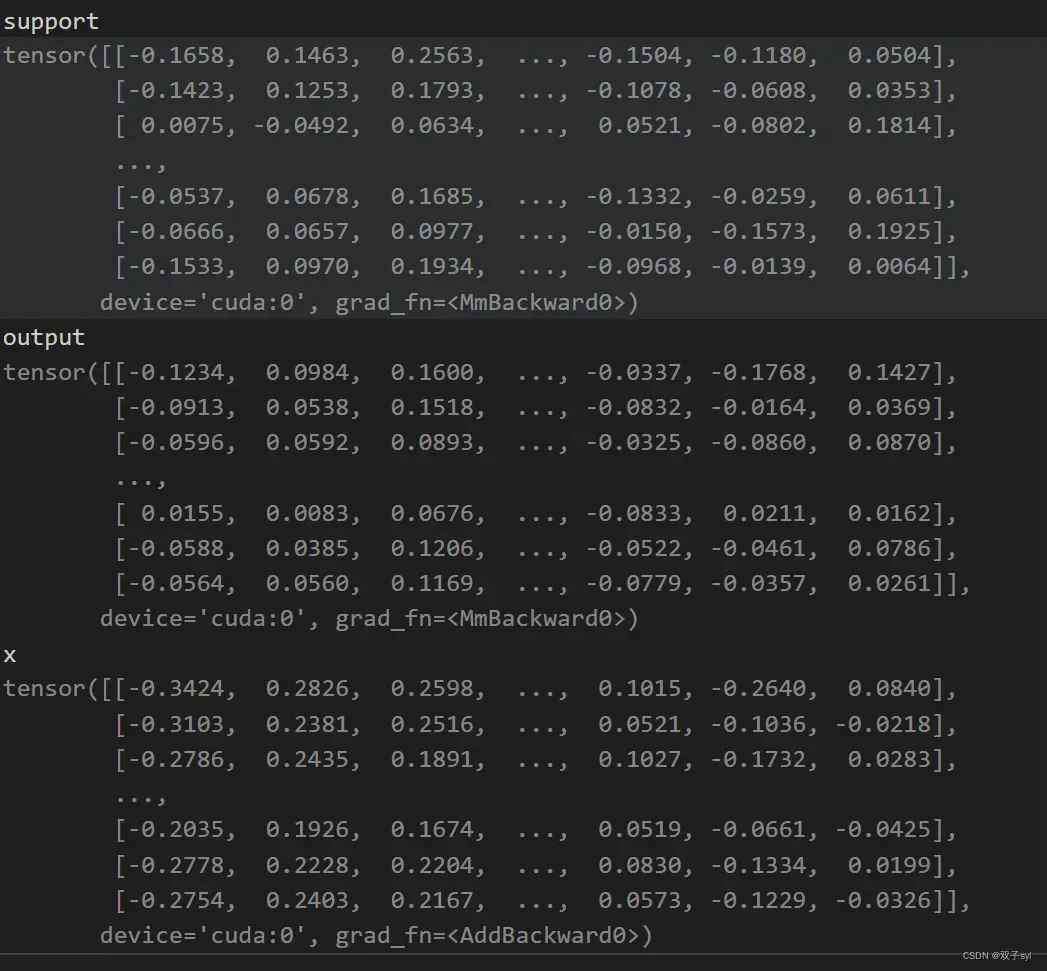

x = self.gc2(x, adj)  # second GCN layer

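Result of the first training pass

Result of the first validation (eval) pass

First GCN layer

After dropout

Second GCN layer

Output of the validation (eval) pass

Test results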

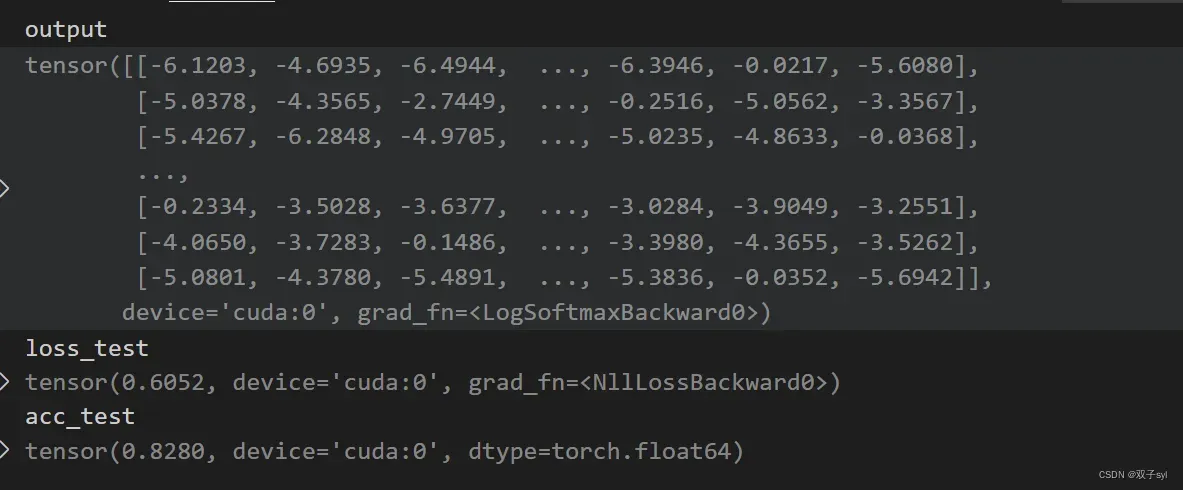

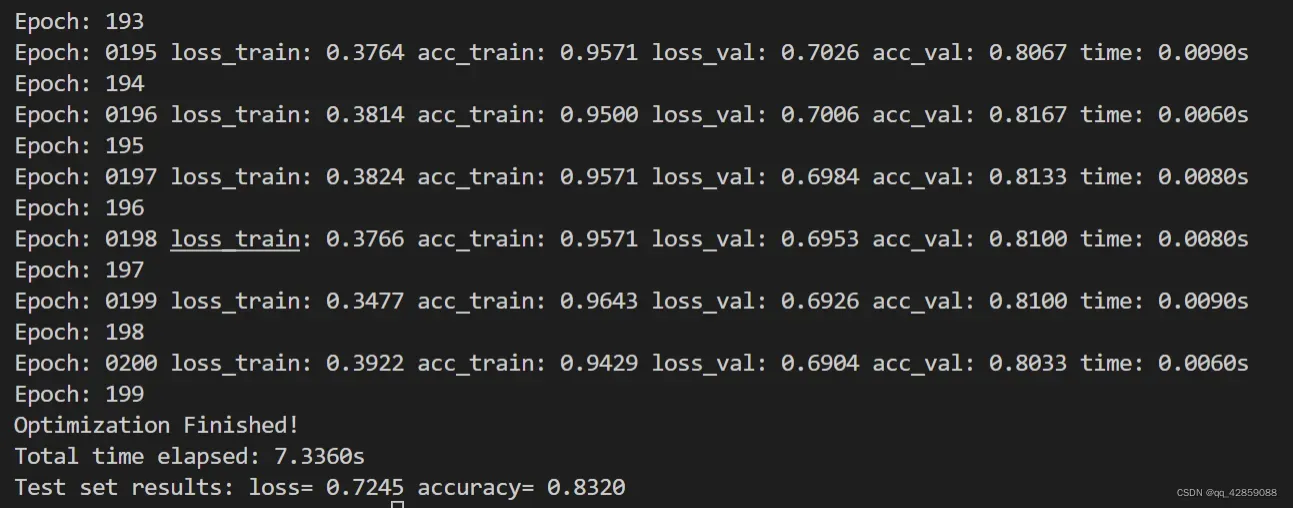

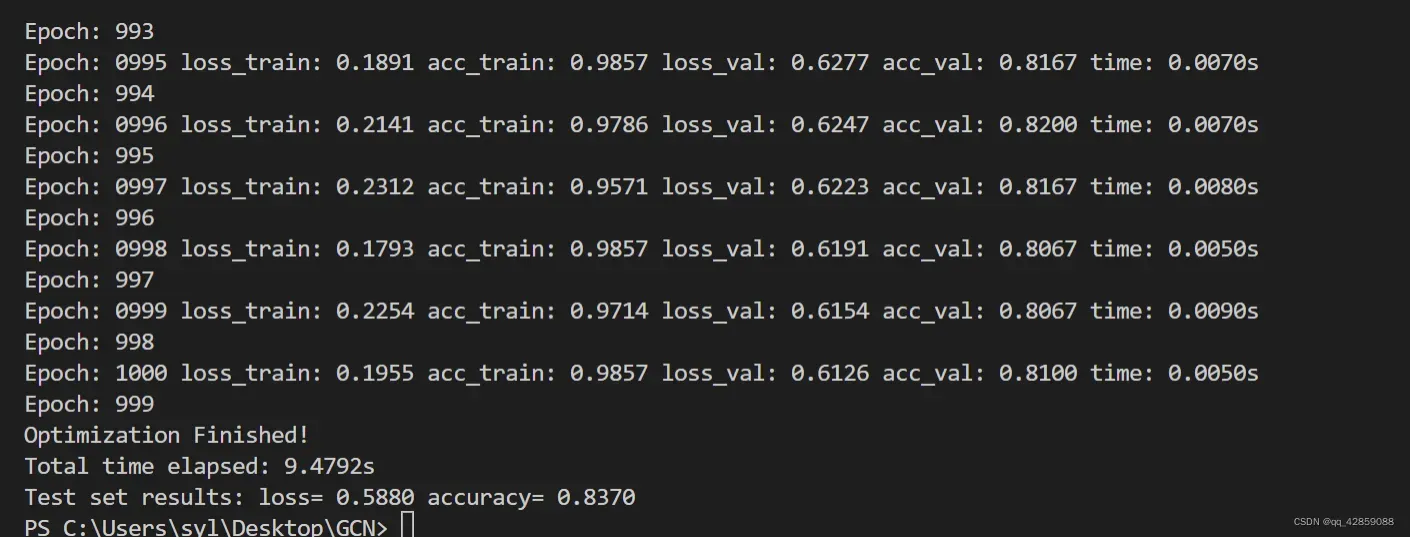

Experimental results

Results after 200 training epochs

Results after 1000 training epochs
