Learning source: 日撸 Java 三百行 (Days 71-80, BP neural network), 闵帆's blog on CSDN

Understanding the code:

layerNumNodes

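Describes the basic structure of the network. For example, [3, 4, 6, 2] means there are three input ports, i.e. each data instance has three attributes, and two output ports, i.e. the data has two classes. In between are two hidden layers with 4 and 6 nodes respectively.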

layerNodeValues

The value of each node in the network. In the example above, layerNodeValues.length is 4, with layerNodeValues[0].length = 3, layerNodeValues[1].length = 4, layerNodeValues[2].length = 6, and layerNodeValues[3].length = 2.
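The relation between layerNumNodes and the shape of layerNodeValues can be checked with a small sketch. The class name LayerShapeSketch and the helper allocateNodeValues are hypothetical names used only for this illustration. They mirror the allocation done in the constructor but are not part of the original class.

```java
public class LayerShapeSketch {
    /** Allocates one row of node values per layer, sized by layerNumNodes. */
    static double[][] allocateNodeValues(int[] layerNumNodes) {
        double[][] values = new double[layerNumNodes.length][];
        for (int l = 0; l < layerNumNodes.length; l++) {
            values[l] = new double[layerNumNodes[l]];
        }
        return values;
    }

    public static void main(String[] args) {
        // The structure used as the running example in the text.
        int[] layerNumNodes = { 3, 4, 6, 2 };
        double[][] layerNodeValues = allocateNodeValues(layerNumNodes);
        System.out.println("Number of layers: " + layerNodeValues.length);
        for (int l = 0; l < layerNodeValues.length; l++) {
            System.out.println("Layer " + l + " has " + layerNodeValues[l].length + " nodes");
        }
    }
}
```

The same shape applies to layerNodeErrors, since every node carries exactly one value and one error.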

layerNodeErrors

The error on each node in the network. It has the same shape as layerNodeValues.

edgeWeights

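The weight of each edge. The edges between two adjacent layers form a many-to-many relation (a two-dimensional array), so the edges across all layers form a three-dimensional array. In the example above, layer 0 has (3+1)*4 = 16 edges (the +1 is the offset, i.e. the bias term), layer 1 has (4+1)*6 = 30 edges, and layer 2 has (6+1)*2 = 14 edges.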
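The edge counts above follow from one formula per layer: (nodes in this layer + 1) * (nodes in the next layer). A minimal sketch of that arithmetic is given here. EdgeCountSketch and countEdges are hypothetical names introduced for illustration only.

```java
public class EdgeCountSketch {
    /**
     * Returns the number of edges leaving each layer, counting one extra
     * source node per layer for the offset (bias).
     */
    static int[] countEdges(int[] layerNumNodes) {
        int[] counts = new int[layerNumNodes.length - 1];
        for (int l = 0; l < counts.length; l++) {
            counts[l] = (layerNumNodes[l] + 1) * layerNumNodes[l + 1];
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] counts = countEdges(new int[] { 3, 4, 6, 2 });
        for (int l = 0; l < counts.length; l++) {
            // Prints 16, 30 and 14 for layers 0, 1 and 2.
            System.out.println("Layer " + l + ": " + counts[l] + " edges");
        }
    }
}
```

This matches the allocation edgeWeights[l] = new double[layerNumNodes[l] + 1][layerNumNodes[l + 1]] in the constructor, where the last row holds the offset weights.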

edgeWeightsDelta

Used to adjust the edge weights. It has the same shape as edgeWeights.

Forward prediction

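1. Initialize the input layer.

2. For each subsequent layer, compute every neuron's weighted sum of the previous layer's values plus the offset, then activate it with the sigmoid function.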
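The two steps can be sketched for a single layer as follows. This is a simplified illustration under the same weight layout as the class (the last row of the weight matrix holds the offset), not the original method. ForwardLayerSketch, sigmoid and forwardLayer are hypothetical names, and the weights in main are arbitrary example values.

```java
public class ForwardLayerSketch {
    /** The sigmoid activation function. */
    static double sigmoid(double z) {
        return 1 / (1 + Math.exp(-z));
    }

    /**
     * Computes the outputs of one layer. weights has input.length + 1 rows;
     * the last row is the offset, whose input is always +1.
     */
    static double[] forwardLayer(double[] input, double[][] weights) {
        int numOutputs = weights[0].length;
        double[] output = new double[numOutputs];
        for (int j = 0; j < numOutputs; j++) {
            // Start from the offset weight.
            double z = weights[input.length][j];
            // Add the weighted sum over all incoming edges.
            for (int i = 0; i < input.length; i++) {
                z += weights[i][j] * input[i];
            }
            output[j] = sigmoid(z);
        }
        return output;
    }

    public static void main(String[] args) {
        double[] input = { 1.0, 2.0 };
        // Two inputs plus one offset row, two output nodes.
        double[][] weights = { { 0.5, -0.5 }, { 0.25, 0.0 }, { 0.1, 0.2 } };
        double[] output = forwardLayer(input, weights);
        System.out.println(output[0] + ", " + output[1]);
    }
}
```

With all weights set to zero, every output is sigmoid(0) = 0.5, which is a convenient sanity check.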

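Backward weight adjustment

The adjustment combines two terms. One is momentum: continue some distance in the direction of the previous weight change. The other is the learning-rate term, i.e. the gradient-descent step.

Code: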
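A minimal sketch of this update for a single edge, following the rule delta = mobp * delta + learningRate * error * value and then weight += delta. MomentumUpdateSketch and step are hypothetical names, and the numbers in main are chosen only so that the arithmetic is easy to follow by hand.

```java
public class MomentumUpdateSketch {
    /**
     * Performs one update of a single edge.
     *
     * @return An array { newWeight, newDelta }.
     */
    static double[] step(double weight, double delta, double mobp, double learningRate,
            double error, double value) {
        // Momentum part plus gradient-descent part.
        double newDelta = mobp * delta + learningRate * error * value;
        return new double[] { weight + newDelta, newDelta };
    }

    public static void main(String[] args) {
        double mobp = 0.5;
        double learningRate = 0.25;
        double error = 2.0; // Error of the node at the end of the edge.
        double value = 4.0; // Value of the node at the start of the edge.

        // First step: no previous change, so only the gradient part acts.
        double[] first = step(1.0, 0.0, mobp, learningRate, error, value);
        System.out.println("weight = " + first[0] + ", delta = " + first[1]);

        // Second step: half of the previous change is carried over.
        double[] second = step(first[0], first[1], mobp, learningRate, error, value);
        System.out.println("weight = " + second[0] + ", delta = " + second[1]);
    }
}
```

In the first step the change is 0.25 * 2 * 4 = 2, so the weight moves from 1 to 3. In the second step the change grows to 0.5 * 2 + 2 = 3 and the weight becomes 6, which shows how momentum speeds up movement while the gradient keeps pointing the same way.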

package machinelearning.ann;

/**
 * Back-propagation neural networks. The code comes from
 *
 * @author Hui Xiao
 */
public class SimpleAnn extends GeneralAnn {

    /**
     * The value of each node that changes during the forward process. The first
     * dimension stands for the layer, and the second stands for the node.
     */
    public double[][] layerNodeValues;

    /**
     * The error on each node that changes during the back-propagation process.
     * The first dimension stands for the layer, and the second stands for the
     * node.
     */
    public double[][] layerNodeErrors;

    /**
     * The weights of edges. The first dimension stands for the layer, the
     * second stands for the node index of the layer, and the third dimension
     * stands for the node index of the next layer.
     */
    public double[][][] edgeWeights;

    /**
     * The change of edge weights. It has the same size as edgeWeights.
     */
    public double[][][] edgeWeightsDelta;

    /**
     ********************
     * The first constructor.
     *
     * @param paraFilename
     *            The arff filename.
     * @param paraLayerNumNodes
     *            The number of nodes for each layer (may be different).
     * @param paraLearningRate
     *            Learning rate.
     * @param paraMobp
     *            Momentum coefficient.
     ********************
     */
    public SimpleAnn(String paraFilename, int[] paraLayerNumNodes, double paraLearningRate,
            double paraMobp) {
        super(paraFilename, paraLayerNumNodes, paraLearningRate, paraMobp);

        // Step 1. Across layer initialization.
        layerNodeValues = new double[numLayers][];
        layerNodeErrors = new double[numLayers][];
        edgeWeights = new double[numLayers - 1][][];
        edgeWeightsDelta = new double[numLayers - 1][][];

        // Step 2. Inner layer initialization.
        for (int l = 0; l < numLayers; l++) {
            layerNodeValues[l] = new double[layerNumNodes[l]];
            layerNodeErrors[l] = new double[layerNumNodes[l]];

            // One less layer because each edge crosses two layers.
            if (l + 1 == numLayers) {
                break;
            } // of if

            // In layerNumNodes[l] + 1, the last one is reserved for the offset.
            edgeWeights[l] = new double[layerNumNodes[l] + 1][layerNumNodes[l + 1]];
            edgeWeightsDelta[l] = new double[layerNumNodes[l] + 1][layerNumNodes[l + 1]];
            for (int j = 0; j < layerNumNodes[l] + 1; j++) {
                for (int i = 0; i < layerNumNodes[l + 1]; i++) {
                    // Initialize weights.
                    edgeWeights[l][j][i] = random.nextDouble();
                } // Of for i
            } // Of for j
        } // Of for l
    } // Of the constructor

    /**
     ********************
     * Forward prediction.
     *
     * @param paraInput
     *            The input data of one instance.
     * @return The data at the output end.
     ********************
     */
    public double[] forward(double[] paraInput) {
        // Initialize the input layer.
        for (int i = 0; i < layerNodeValues[0].length; i++) {
            layerNodeValues[0][i] = paraInput[i];
        } // Of for i

        // Calculate the node values of each layer.
        double z;
        for (int l = 1; l < numLayers; l++) {
            for (int j = 0; j < layerNodeValues[l].length; j++) {
                // Initialize according to the offset, which is always +1
                z = edgeWeights[l - 1][layerNodeValues[l - 1].length][j];
                // Weighted sum on all edges for this node.
                for (int i = 0; i < layerNodeValues[l - 1].length; i++) {
                    z += edgeWeights[l - 1][i][j] * layerNodeValues[l - 1][i];
                } // Of for i

                // Sigmoid activation.
                // This line should be changed for other activation functions.
                layerNodeValues[l][j] = 1 / (1 + Math.exp(-z));
            } // Of for j
        } // Of for l

        return layerNodeValues[numLayers - 1];
    } // Of forward

    /**
     ********************
     * Back propagation and change the edge weights.
     *
     * @param paraTarget
     *            For 3-class data, it is [0, 0, 1], [0, 1, 0] or [1, 0, 0].
     ********************
     */
    public void backPropagation(double[] paraTarget) {
        // Step 1. Initialize the output layer error.
        int l = numLayers - 1;
        for (int j = 0; j < layerNodeErrors[l].length; j++) {
            layerNodeErrors[l][j] = layerNodeValues[l][j] * (1 - layerNodeValues[l][j])
                    * (paraTarget[j] - layerNodeValues[l][j]);
        } // Of for j

        // Step 2. Back-propagation even for l == 0
        while (l > 0) {
            l--;
            // Layer l, for each node.
            for (int j = 0; j < layerNumNodes[l]; j++) {
                double z = 0.0;
                // For each node of the next layer.
                for (int i = 0; i < layerNumNodes[l + 1]; i++) {
                    if (l > 0) {
                        z += layerNodeErrors[l + 1][i] * edgeWeights[l][j][i];
                    } // Of if

                    // Weight adjusting.
                    edgeWeightsDelta[l][j][i] = mobp * edgeWeightsDelta[l][j][i]
                            + learningRate * layerNodeErrors[l + 1][i] * layerNodeValues[l][j];
                    edgeWeights[l][j][i] += edgeWeightsDelta[l][j][i];
                    if (j == layerNumNodes[l] - 1) {
                        // Weight adjusting for the offset part.
                        edgeWeightsDelta[l][j + 1][i] = mobp * edgeWeightsDelta[l][j + 1][i]
                                + learningRate * layerNodeErrors[l + 1][i];
                        edgeWeights[l][j + 1][i] += edgeWeightsDelta[l][j + 1][i];
                    } // Of if
                } // Of for i

                // Record the error according to the differential of Sigmoid.
                // This line should be changed for other activation functions.
                layerNodeErrors[l][j] = layerNodeValues[l][j] * (1 - layerNodeValues[l][j]) * z;
            } // Of for j
        } // Of while
    } // Of backPropagation

    /**
     ********************
     * Test the algorithm.
     ********************
     */
    public static void main(String[] args) {
        int[] tempLayerNodes = { 4, 8, 8, 3 };
        SimpleAnn tempNetwork = new SimpleAnn("D:/data/iris.arff", tempLayerNodes, 0.01,
                0.6);

        for (int round = 0; round < 5000; round++) {
            tempNetwork.train();
        } // Of for n

        double tempAccuracy = tempNetwork.test();
        System.out.println("The accuracy is: " + tempAccuracy);
    } // Of main
} // Of class SimpleAnn

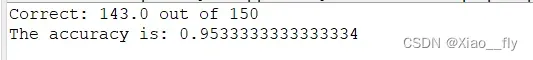

Screenshot:
