1 Install the Python library

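Install the `openai` library with pip. The model itself runs on OpenAI's servers, so the local Python version only has to satisfy the `openai` package; the examples in this article were run with Python 3.10.10. The Python example in section 4 uses the pre-1.0 interface of the package (`openai.ChatCompletion`), so install a 0.x release:

```bash
pip install "openai<1.0"
```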
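The chat example in section 4 calls `openai.ChatCompletion`, which only works in the 0.x releases of the `openai` package. A minimal sketch for checking which release is installed before running it; `installed_openai_version` and `uses_legacy_api` are hypothetical helper names, not part of the library.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_openai_version() -> Optional[str]:
    """Return the installed version string of the openai package, or None if it is missing."""
    try:
        return version("openai")
    except PackageNotFoundError:
        return None


def uses_legacy_api(version_string: str) -> bool:
    """True for 0.x releases, which still provide openai.ChatCompletion."""
    major = int(version_string.split(".")[0])
    return major < 1


if __name__ == "__main__":
    print("Python:", sys.version.split()[0])
    v = installed_openai_version()
    if v is None:
        print("openai is not installed")
    else:
        print("openai:", v, "(0.x interface)" if uses_legacy_api(v) else "(1.x interface)")
```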

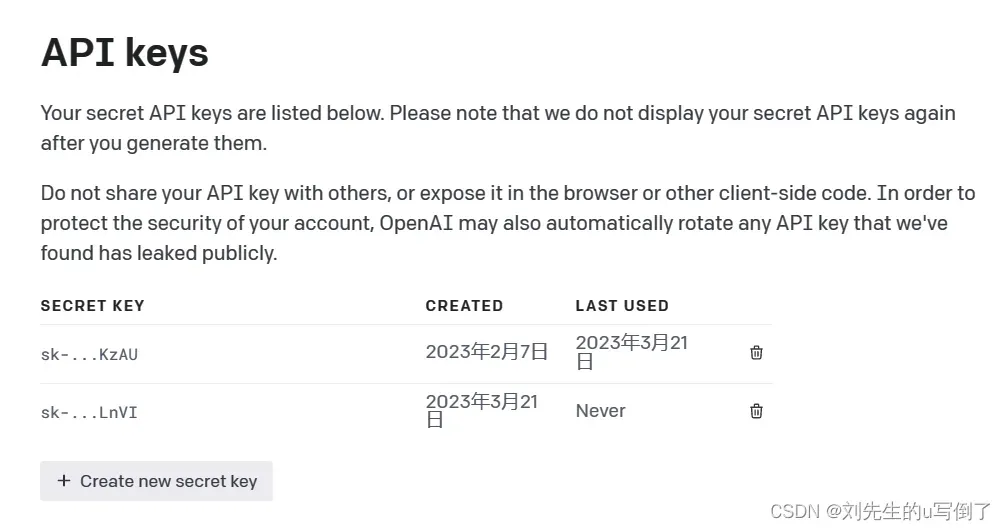

2 Create an API key

First register an account on the OpenAI website, then open https://platform.openai.com/account/api-keys to create an API key.

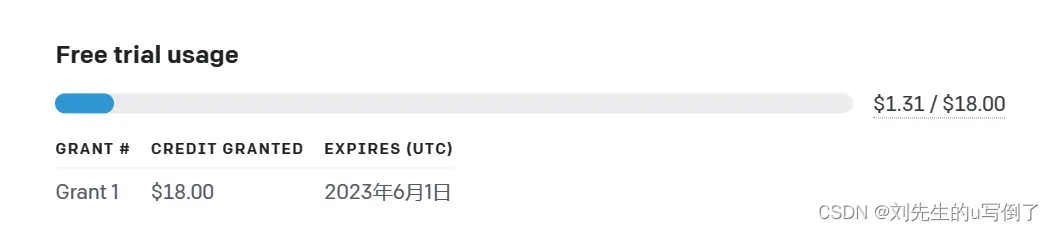

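At the time of writing, each newly registered account came with $18 of free credit. Every API call is deducted from that balance, and note that the credit also has an expiry date.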
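Because every call is billed against the account balance, it is safer not to paste the key into source files. A minimal sketch that reads it from the `OPENAI_API_KEY` environment variable (the same variable the curl example in section 4 uses); `load_api_key` is a hypothetical helper, not an `openai` function.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from an environment variable and fail loudly if it is unset."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell before running the script"
        )
    return key
```

Set the variable once in your shell, for example `export OPENAI_API_KEY="your key"`, and the key never has to appear in the code.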

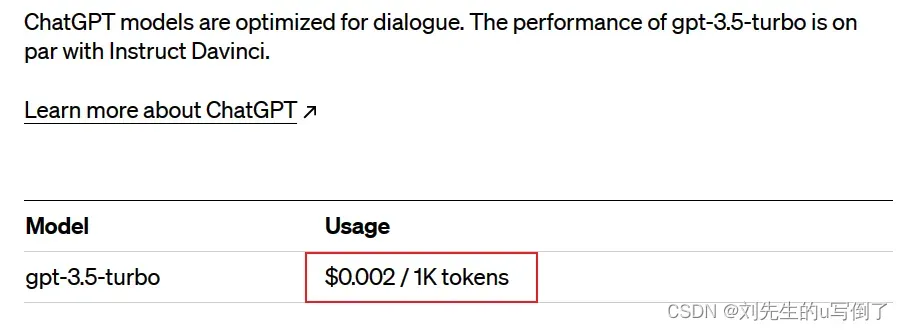

3 Pricing

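At the time of writing, calling gpt-3.5-turbo costs $0.002 per 1,000 tokens [1].

OpenAI's pricing page also explains what a token is:

> Multiple models, each with different capabilities and price points. Prices are per 1,000 tokens. You can think of tokens as pieces of words, where 1,000 tokens is about 750 words. This paragraph is 35 tokens.

In other words, 1,000 tokens is roughly 750 English words, and the quoted paragraph itself is only 35 tokens. For light use, the cost is not something to worry about much.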
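These numbers are enough for a back-of-the-envelope cost estimate. A minimal sketch, assuming the $0.002 per 1,000 tokens rate quoted above (check the pricing page for current rates) and the rule of thumb of about 750 English words per 1,000 tokens; the function names are hypothetical.

```python
PRICE_PER_1K_TOKENS_USD = 0.002  # gpt-3.5-turbo rate quoted above; may have changed since
TOKENS_PER_WORD = 1000 / 750     # rule of thumb from the pricing page (English text)


def estimate_tokens(word_count: int) -> int:
    """Rough token count for English text, from a word count."""
    return round(word_count * TOKENS_PER_WORD)


def estimate_cost_usd(total_tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS_USD) -> float:
    """Cost of one request, given its total (prompt + completion) tokens."""
    return total_tokens / 1000 * price_per_1k


# The Python example in section 4 reports total_tokens = 956
print(f"{estimate_cost_usd(956):.6f} USD")  # 0.001912 USD
# Roughly how many such requests the $18 trial credit covers
print(int(18 / estimate_cost_usd(956)))  # 9414
```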

4 Calling the API

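OpenAI's API reference [3] shows two ways to call the API: sending an HTTP request directly (here with curl) and using the Python package.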

【1】Using curl

```bash
curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Say this is a test!"}],
    "temperature": 0.7
  }'
```
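The same HTTP request can be sent from Python without any third-party package. A minimal sketch using only the standard library's `urllib.request`; it mirrors the URL, headers, and JSON body of the curl command above, and `build_chat_request` / `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, temperature: float = 0.7) -> urllib.request.Request:
    """Build the same POST request as the curl example (nothing is sent yet)."""
    body = {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body into a dict."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

To actually call the API, run `send_chat_request(build_chat_request(os.environ["OPENAI_API_KEY"], "Say this is a test!"))` after `import os`. Splitting construction from sending makes it easy to inspect the request before spending any tokens.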

The response looks like this:

```json
{
  "id": "chatcmpl-abc123",
  "object": "chat.completion",
  "created": 1677858242,
  "model": "gpt-3.5-turbo-0301",
  "usage": {
    "prompt_tokens": 13,
    "completion_tokens": 7,
    "total_tokens": 20
  },
  "choices": [
    {
      "message": {
        "role": "assistant",
        "content": "\n\nThis is a test!"
      },
      "finish_reason": "stop",
      "index": 0
    }
  ]
}
```
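Getting the reply text and the token usage out of this JSON takes only a few dictionary lookups. A minimal sketch, assuming the response body has already been decoded into a Python `dict` (for example with `json.loads`); `summarize_response` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def summarize_response(response: Dict[str, Any]) -> Dict[str, Any]:
    """Pull the first choice's text, its finish reason, and the token usage from a chat completion."""
    choice = response["choices"][0]
    return {
        "reply": choice["message"]["content"].strip(),  # the sample reply starts with "\n\n"
        # "stop": natural end (or a stop sequence); "length" would mean the reply was cut off
        "finish_reason": choice["finish_reason"],
        "total_tokens": response["usage"]["total_tokens"],
    }


sample = json.loads("""
{"id": "chatcmpl-abc123", "object": "chat.completion", "created": 1677858242,
 "model": "gpt-3.5-turbo-0301",
 "usage": {"prompt_tokens": 13, "completion_tokens": 7, "total_tokens": 20},
 "choices": [{"message": {"role": "assistant", "content": "\\n\\nThis is a test!"},
              "finish_reason": "stop", "index": 0}]}
""")
print(summarize_response(sample))
# {'reply': 'This is a test!', 'finish_reason': 'stop', 'total_tokens': 20}
```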

【2】Using the Python package

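```python
import os

import openai  # this example uses the pre-1.0 interface (openai<1.0)

# Read the key from the environment rather than hard-coding it in the script
openai.api_key = os.getenv("OPENAI_API_KEY")

messages = []

# The system message describes how the assistant should behave
system_message = input("What type of chatbot do you want me to be? ")
messages.append({"role": "system", "content": system_message})

# The user message is the question for this turn
message = input("Enter your question: ")
messages.append({"role": "user", "content": message})

response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=messages,
)

# Full response object (id, usage, choices, ...)
print(response)

# Just the text of the first reply
reply = response["choices"][0]["message"]["content"]
print(reply)
```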
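The code above targets the 0.x releases of the `openai` package; from version 1.0 on, `openai.ChatCompletion` no longer works and requests go through a client object instead. A minimal sketch of the same request against the 1.x interface, assuming `openai>=1.0` is installed and `OPENAI_API_KEY` is set; `build_messages`, `ask`, and `main` are hypothetical helpers, and the `openai` import sits inside `main` only so the helpers can be reused without the package.

```python
from typing import Any, Dict, List

Message = Dict[str, str]


def build_messages(system_message: str, user_message: str) -> List[Message]:
    """System message first (sets behavior), then the user's question."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_message},
    ]


def ask(client: Any, messages: List[Message], model: str = "gpt-3.5-turbo") -> str:
    """Send the conversation and return the first choice's text (openai>=1.0 client)."""
    response = client.chat.completions.create(model=model, messages=messages)
    # 1.x returns typed objects, so fields are attributes rather than dict keys
    return response.choices[0].message.content


def main() -> None:
    from openai import OpenAI  # openai>=1.0; the client reads OPENAI_API_KEY from the environment

    client = OpenAI()
    messages = build_messages(
        input("What type of chatbot do you want me to be? "),
        input("Enter your question: "),
    )
    print(ask(client, messages))
```

Call `main()` to run it interactively. Passing the client into `ask` keeps the request logic separate from client construction.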

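Printing `response` gives output like the following. The reply here is a Java implementation of the Snowflake distributed-ID algorithm; its Chinese text appears as `\uXXXX` escapes because the response object is printed as ASCII-escaped JSON, while `print(reply)` shows the decoded text.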

```json
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "\u4e0b\u9762\u662f\u4e00\u4e2aJava\u5b9e\u73b0\u7684\u96ea\u82b1\u7b97\u6cd5\u751f\u6210\u5206\u5e03\u5f0fID\u7684\u4ee3\u7801\uff1a\n\n```java\npublic class SnowflakeIdGenerator {\n // \u5f00\u59cb\u65f6\u95f4\u6233\uff0c\u53ef\u4ee5\u6839\u636e\u9700\u8981\u81ea\u884c\u4fee\u6539\n private final static long START_TIMESTAMP = 1480166465631L;\n \n // \u6bcf\u90e8\u5206\u5360\u7528\u7684\u4f4d\u6570\n private final static long SEQUENCE_BIT = 12; // \u5e8f\u5217\u53f7\u5360\u7528\u7684\u4f4d\u6570\n private final static long MACHINE_BIT = 5; // \u673a\u5668\u6807\u8bc6\u5360\u7528\u7684\u4f4d\u6570\n private final static long DATACENTER_BIT = 5;// \u6570\u636e\u4e2d\u5fc3\u5360\u7528\u7684\u4f4d\u6570\n \n // \u6bcf\u90e8\u5206\u7684\u6700\u5927\u503c\n private final static long MAX_DATACENTER_NUM = ~(-1L << DATACENTER_BIT);\n private final static long MAX_MACHINE_NUM = ~(-1L << MACHINE_BIT);\n private final static long MAX_SEQUENCE = ~(-1L << SEQUENCE_BIT);\n \n // \u6bcf\u90e8\u5206\u5411\u5de6\u7684\u4f4d\u79fb\n private final static long MACHINE_LEFT = SEQUENCE_BIT;\n private final static long DATACENTER_LEFT = SEQUENCE_BIT + MACHINE_BIT;\n private final static long TIMESTAMP_LEFT = DATACENTER_LEFT + DATACENTER_BIT;\n \n private long datacenterId; // \u6570\u636e\u4e2d\u5fc3\u6807\u8bc6\n private long machineId; // \u673a\u5668\u6807\u8bc6\n private long sequence = 0L; // \u5e8f\u5217\u53f7\n private long lastTimestamp = -1L; // \u4e0a\u6b21\u751f\u6210ID\u7684\u65f6\u95f4\u6233\n \n public SnowflakeIdGenerator(long datacenterId, long machineId) {\n if (datacenterId > MAX_DATACENTER_NUM || datacenterId < 0) {\n throw new IllegalArgumentException(\"Datacenter ID can't be greater than \" + MAX_DATACENTER_NUM + \" or less than 0\");\n }\n if (machineId > MAX_MACHINE_NUM || machineId < 0) {\n throw new IllegalArgumentException(\"Machine ID can't be greater than \" + MAX_MACHINE_NUM + \" or less than 0\");\n }\n this.datacenterId = datacenterId;\n this.machineId = machineId;\n }\n \n public synchronized long nextId() {\n long timestamp = timeGen();\n \n // \u5982\u679c\u5f53\u524d\u65f6\u95f4\u5c0f\u4e8e\u4e0a\u6b21ID\u751f\u6210\u7684\u65f6\u95f4\u6233\uff0c\u8bf4\u660e\u7cfb\u7edf\u65f6\u949f\u56de\u9000\u8fc7\uff0c\u8fd9\u4e2a\u65f6\u5019\u5e94\u5f53\u629b\u51fa\u5f02\u5e38\n if (timestamp < lastTimestamp) {\n throw new RuntimeException(\"Clock moved backwards. Refusing to generate id for \" + (lastTimestamp - timestamp) + \" milliseconds\");\n }\n \n // \u5982\u679c\u662f\u540c\u4e00\u65f6\u95f4\u751f\u6210\u7684\uff0c\u5219\u8fdb\u884c\u6beb\u79d2\u5185\u5e8f\u5217\u53f7\u9012\u589e\n if (lastTimestamp == timestamp) {\n sequence = (sequence + 1) & MAX_SEQUENCE;\n // \u5982\u679c\u6beb\u79d2\u5185\u5e8f\u5217\u53f7\u5df2\u7ecf\u8fbe\u5230\u6700\u5927\uff0c\u5219\u6beb\u79d2\u5185\u5e8f\u5217\u53f7\u91cd\u7f6e\u4e3a0\uff0c\u91cd\u65b0\u751f\u6210\u4e0b\u4e00\u4e2aID\n if (sequence == 0L) {\n timestamp = tilNextMillis(lastTimestamp);\n }\n }\n // \u65f6\u95f4\u6233\u6539\u53d8\uff0c\u6beb\u79d2\u5185\u5e8f\u5217\u53f7\u91cd\u7f6e\u4e3a0\n else {\n sequence = 0L;\n }\n \n // \u4e0a\u6b21\u751f\u6210ID\u7684\u65f6\u95f4\u622a\n lastTimestamp = timestamp;\n \n // \u79fb\u4f4d\u5e76\u901a\u8fc7\u6216\u8fd0\u7b97\u62fc\u5230\u4e00\u8d77\u7ec4\u621064\u4f4d\u7684ID\n return ((timestamp - START_TIMESTAMP) << TIMESTAMP_LEFT)\n | (datacenterId << DATACENTER_LEFT)\n | (machineId << MACHINE_LEFT)\n | sequence;\n }\n \n /*\n * \u5185\u90e8\u65b9\u6cd5\uff0c\u751f\u6210\u5f53\u524d\u65f6\u95f4\u6233\n */\n private long timeGen() {\n return System.currentTimeMillis();\n }\n \n /*\n * \u5185\u90e8\u65b9\u6cd5\uff0c\u7b49\u5f85\u4e0b\u4e00\u4e2a\u6beb\u79d2\u7684\u5230\u6765\n */\n private long tilNextMillis(long lastTimestamp) {\n long timestamp = timeGen();\n while (timestamp <= lastTimestamp) {\n timestamp = timeGen();\n }\n return timestamp;\n }\n}\n```\n\n\u8fd9\u4e2a\u7c7b\u7684\u4f7f\u7528\u65b9\u6cd5\u5982\u4e0b\uff1a\n\n```java\nSnowflakeIdGenerator idGenerator = new SnowflakeIdGenerator(1, 1);\nlong id = idGenerator.nextId();\n```",
        "role": "assistant"
      }
    }
  ],
  "created": 1680359622,
  "id": "chatcmpl-70WaUhGWydy5fBxdlrs9fhqMj7kqN",
  "model": "gpt-3.5-turbo-0301",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 923,
    "prompt_tokens": 33,
    "total_tokens": 956
  }
}
```

5 Notes

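About the `role` field of each entry in the request's `messages` list: there are three roles, `system`, `user`, and `assistant`.

- system: sets the assistant's behavior. OpenAI noted that gpt-3.5-turbo-0301 does not always pay strong attention to the system message, and that future models would be trained to follow it more closely.
- user: the question or instruction the user is sending in this turn.
- assistant: stores the assistant's previous replies (you can also write assistant messages yourself as examples of the behavior you want).

Taken together and in order, these messages form the conversation context that the model sees.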
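Each request is stateless, so the model only "remembers" earlier turns if they are sent again. A minimal sketch of a multi-turn conversation, assuming you keep the whole `messages` list and append every assistant reply to it before the next user turn; `chat_turn` and `legacy_openai_send` are hypothetical helpers, and `send` stands for whatever function actually calls the API.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def chat_turn(
    messages: List[Message],
    user_input: str,
    send: Callable[[List[Message]], str],
) -> str:
    """Add the user's turn, get a reply, and store it as an assistant message."""
    messages.append({"role": "user", "content": user_input})
    reply = send(messages)  # the full history goes out with every request
    messages.append({"role": "assistant", "content": reply})
    return reply


def legacy_openai_send(messages: List[Message]) -> str:
    """send() for the 0.x openai package used in section 4 (requires openai<1.0)."""
    import openai  # imported here so chat_turn can be used without the package

    response = openai.ChatCompletion.create(model="gpt-3.5-turbo", messages=messages)
    return response["choices"][0]["message"]["content"]
```

Start with `history = [{"role": "system", "content": "You are a helpful assistant."}]` and call `chat_turn(history, question, legacy_openai_send)` once per question. The history grows by two messages per turn, so the prompt tokens, and therefore the cost, grow as the conversation gets longer.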

References:

[1] https://openai.com/pricing

[2] https://platform.openai.com/account/api-keys

[3] https://platform.openai.com/docs/api-reference/introduction

[4] https://platform.openai.com/docs/introduction/overview
