1 使用卷积神经网络识别猫和狗数据集

1.1 理论基础

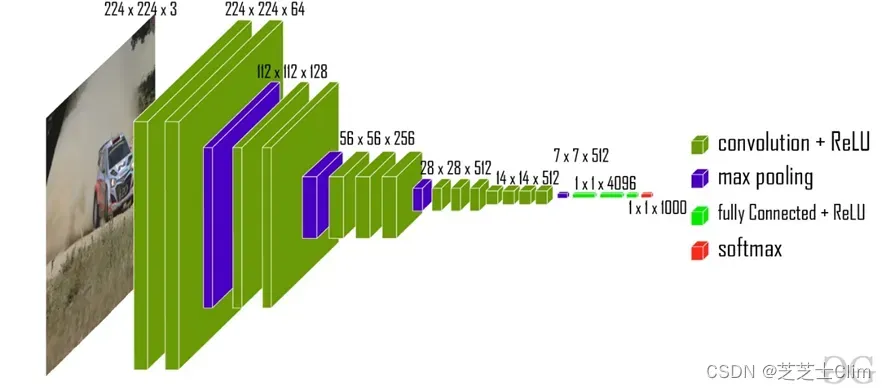

1.1.1 VGG架构

VGG16是由Karen Simonyan和Andrew Zisserman于2014年在论文“VERY DEEP CONVOLUTIONAL NETWORKS FOR LARGE SCALE IMAGE RECOGNITION”中提出的一种处理多分类、大范围图像识别问题的卷积神经网络架构,成功对ImageNet数据集的14万张图片进行了1000个类别的归类并有92.7%的准确率。

本项目即对Pytorch官方预训练的VGG16网络进行微调并完成了猫狗分类任务。实际的微调方法也十分简单,仅需将分类层的最后一层修改为(1x1x2)即可将分类结果从1000类修改为二分类。

图1 VGG16架构图

1.1.2 卷积神经网络

卷积神经网络是在深度神经网络的基础上改进的专用于图像识别的神经网络模型,其拥有四个主要特征层:卷积层、池化层、激活层、全连接层(深度神经网络)。其中卷积层通过采用卷积核对输入数据进行处理,提取输入数据的特定特征;池化层通过压缩图像数据大小实现神经网络运算的加速;激活层的作用与其在深度神经网络中一致,用于模拟人脑神经元的刺激结果;全连接层实际上即是深度神经网络,即图像数据经卷积层、池化层、激活层处理后输入深度神经网络进行分类运算。

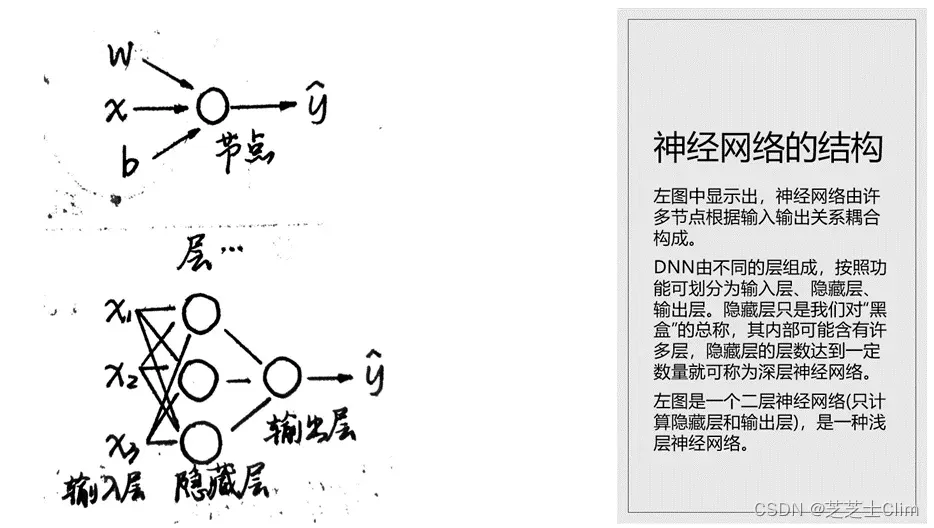

1.1.3 深度神经网络

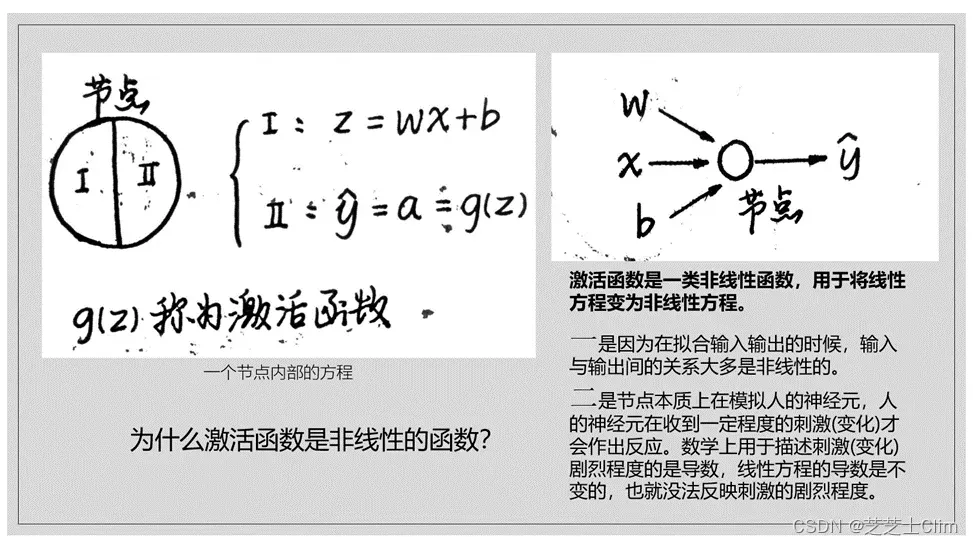

深度神经网络是深度学习的基础算法,下图为深度神经网络的部分介绍。

图2 深度神经网络的结构

图3 深度神经网络节点内部算法

2 代码

2.1 main.py

import torch

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

from training_settings import train, val

from vgg16 import vgg16

import os

# BATCH大小

BATCH_SIZE = 20

# 迭代次数

EPOCHS = 40

# 采用cpu还是gpu进行计算

DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# 学习率

modellr = 1e-4

# 数据预处理

path = "D:\\Storage\\ProgramData\\Python\\DistingushCD\\net\\data\\dc_2000"

transform = transforms.Compose([transforms.CenterCrop(224), transforms.ToTensor(),transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

# 数据加载

dataset_train = datasets.ImageFolder(path + '\\' + 'train', transform)

print('trainset:{}'.format(dataset_train.class_to_idx))

dataset_test = datasets.ImageFolder(path + '\\' + 'test', transform)

print('testset:{}'.format(dataset_test.class_to_idx))

train_loader = DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)

test_loader = DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

# 设置模型

model = vgg16(pretrained=True, progress=True, num_classes=2)

model = model.to(DEVICE)

# 设置优化器

optimizer = torch.optim.Adam(model.parameters(), lr=modellr)

# sculer = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1)

# 训练

for epoch in range(1, EPOCHS + 1):

train(model, DEVICE, train_loader, optimizer, epoch) # 训练过程函数

val(model, DEVICE, test_loader, optimizer) # 测试过程函数

# 储存模型

torch.save(model, 'D:\\Storage\\ProgramData\\Python\\DistingushCD\\netmodel.pth')

2.2 VGG16.py

import torch

import torch.nn as nn

from torch.hub import load_state_dict_from_url

model_urls = {

'vgg16': 'https://download.pytorch.org/models/vgg16-397923af.pth',

} # 下载模型

class VGG(nn.Module):

def __init__(

self,

features: nn.Module,

num_classes: int = 1000,

init_weights: bool = True

):

super(VGG, self).__init__()

self.features = features

self.avgpool = nn.AdaptiveAvgPool2d((7, 7))

self.classifier = nn.Sequential(

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, num_classes),

)

if init_weights:

self._initialize_weights()

def forward(self, x):

x = self.features(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.classifier(x)

return x

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01)

nn.init.constant_(m.bias, 0)

def make_layers(cfg, batch_norm=False):

layers = []

in_channels = 3

for v in cfg:

if v == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

else:

conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)

if batch_norm:

layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]

else:

layers += [conv2d, nn.ReLU(inplace=True)]

in_channels = v

return nn.Sequential(*layers)

cfgs = {

'D': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],

}

def vgg16(pretrained=True, progress=True, num_classes=2):

model = VGG(make_layers(cfgs['D']))

if pretrained:

state_dict = load_state_dict_from_url(model_urls['vgg16'], model_dir='./model',

progress=progress)

model.load_state_dict(state_dict)

if num_classes != 1000:

model.classifier = nn.Sequential(

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Linear(4096, num_classes),

)

return model

2.3 traning_settings.py

import torch

import torch.nn.functional as F

# cost函数设置

criterion = torch.nn.CrossEntropyLoss()

def train(model, device, train_loader, optimizer, epoch):

total_train = 0

for data in train_loader:

img, label = data

with torch.no_grad():

img = img.to(device)

label = label.to(device)

optimizer.zero_grad()

output = model(img)

train_loss = criterion(output, label).to(device)

train_loss.backward()

optimizer.step()

total_train += train_loss

print("Epoch:{}, Loss of training set:{:.5f}".format(epoch, total_train))

def val(model, device, test_lodaer, optimizer):

total_test = 0

total_accuracy = 0

total_num = len(test_lodaer.dataset)

for data in test_lodaer:

img, label = data

with torch.no_grad():

img = img.to(device)

label = label.to(device)

optimizer.zero_grad()

output = model(img)

test_loss = criterion(output, label).to(device)

total_test += test_loss

accuracy = (output.argmax(1) == label).sum()

total_accuracy += accuracy

print("Loss of testing set:{:.5f}, Accuracy of testing set:{:.1%}\n".format(total_test, total_accuracy/total_num))

2.4 prediction.py

import torch.utils.data.distributed

import torchvision.transforms as transforms

from torch.autograd import Variable

import os

from PIL import Image

classes = ('cat', 'dog')

transform_test = transforms.Compose([

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

])

DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.load('D:\\Storage\\ProgramData\\Python\\DistingushCD\\netmodel.pth')

model.eval()

model.to(DEVICE)

path = 'D:\\Storage\\ProgramData\\Python\\DistingushCD\\net\\data\\dc_2000\\to_test\\test1\\'

file = '1002.jpg'

img = Image.open(path + file)

img.show()

img = transform_test(img)

img.unsqueeze_(0)

img = Variable(img).to(DEVICE)

out = model(img)

# Predict

_, pred = torch.max(out.data, 1)

print('Image Name: {},\nprediction: It\'s a {}.'.format(file, classes[pred.data.item()]))

3 结果

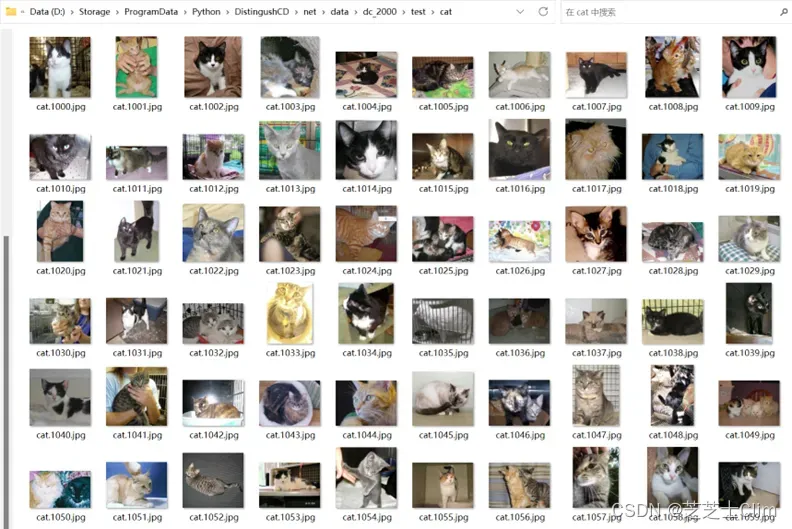

数据集预处理介绍

图4 测试集路径

图5 测试集图片命名

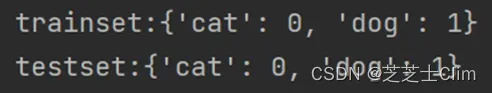

测试集和训练集路径地址和图片命名规则符合图5、图6,数据集和测试集通过DataLoader函数自动导入训练程序。

图6 训练集和测试集标签展示

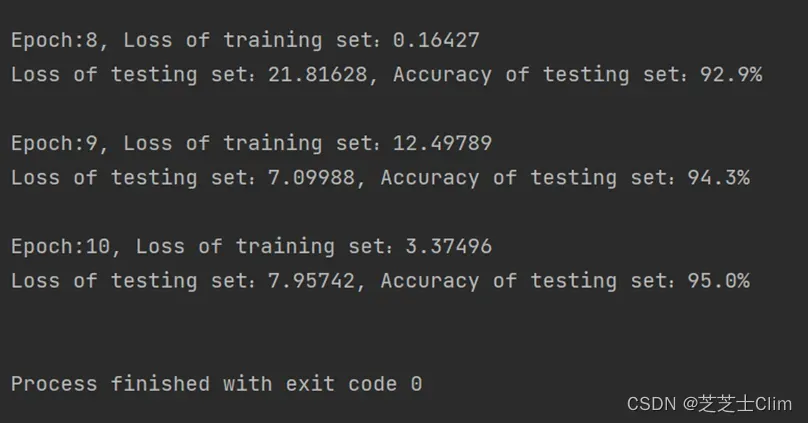

定量实验结果展示

图7 训练结果展示

训练结果采用测试集总损失值以及精确度衡量,通过图6可以看出模型训练后有较好的预测效果。

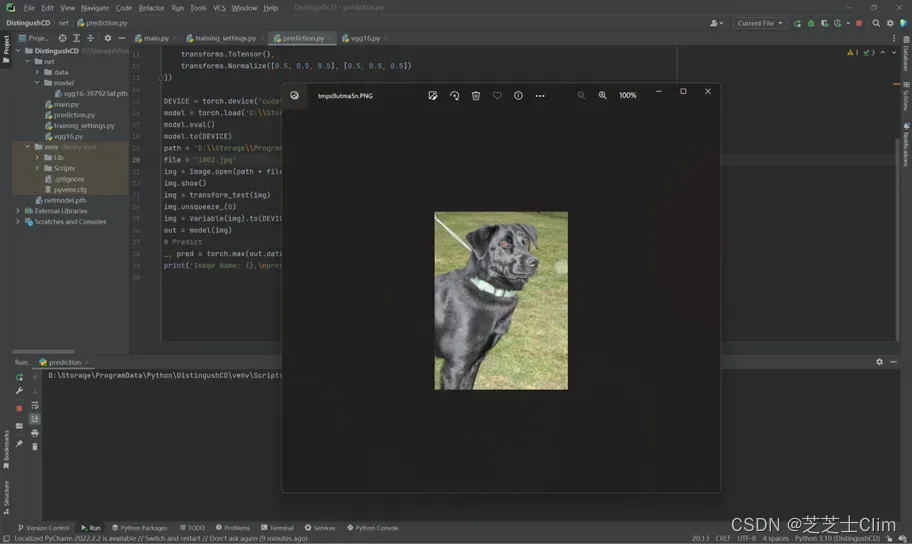

定性实验结果展示

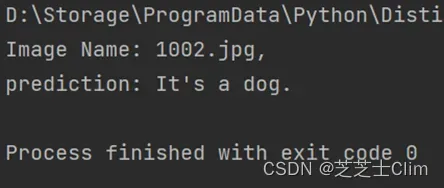

图8 在测试集中抽取一张“狗”照片进行预测

训练完毕后,通过编写新的脚本程序读取已储存的模型,并通过迁移学习预测输入的照片。

图9 图8所示照片的预测结果

通过已储存模型对图7所展示照片进行预测,可以成功预测出这是一张“狗”的照片。

分析实验结果

通过多次实验检测,通过微调VGG16训练的模型对猫狗分类有良好的预测结果,其测试集精确度达95%,能够基本完成项目目标。

文章出处登录后可见!