Probability background

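Conditional probability: let A and B be two events with $P(A)>0$. Then

$$P(B\mid A)=\frac{P(AB)}{P(A)}$$

is called the conditional probability of event B given that event A has occurred.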

Independence of events: if events A and B are independent, then $P(AB)=P(A)P(B)$, and (when $P(A)>0$) $P(B\mid A)=P(B)$.
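Both definitions can be checked by enumerating equally likely outcomes. The sketch below uses one roll of a fair six-sided die, an illustrative choice that is not in the original; `DieEvents`, `count`, `conditional` and `independent` are hypothetical names. Because all outcomes are equally likely, $P(B\mid A)=|AB|/|A|$, and independence can be tested exactly in integers as $|AB|\cdot|\Omega|=|A|\cdot|B|$.

```java
import java.util.function.IntPredicate;

/** Illustrative only: conditional probability and independence on one roll of a fair die. */
class DieEvents {
    static final int FACES = 6; // |Omega|, all outcomes equally likely

    /** Number of faces 1..6 that belong to the event. */
    static int count(IntPredicate event) {
        int n = 0;
        for (int face = 1; face <= FACES; face++) {
            if (event.test(face)) {
                n++;
            }
        }
        return n;
    }

    /** P(B | A) = P(AB) / P(A) = |AB| / |A|; A must be non-empty. */
    static double conditional(IntPredicate b, IntPredicate a) {
        return (double) count(a.and(b)) / count(a);
    }

    /** P(AB) = P(A) P(B), checked exactly as |AB| * |Omega| == |A| * |B|. */
    static boolean independent(IntPredicate a, IntPredicate b) {
        return count(a.and(b)) * FACES == count(a) * count(b);
    }

    public static void main(String[] args) {
        IntPredicate even = f -> f % 2 == 0;    // A = {2, 4, 6}
        IntPredicate atMostTwo = f -> f <= 2;   // B = {1, 2}
        IntPredicate atMostThree = f -> f <= 3; // C = {1, 2, 3}
        System.out.println(conditional(atMostTwo, even));   // about 0.3333, i.e. 1/3 = P(B)
        System.out.println(independent(even, atMostTwo));   // true
        System.out.println(independent(even, atMostThree)); // false: P(AC) = 1/6 but P(A)P(C) = 1/4
    }
}
```

Conditioning on "even" leaves the probability of $\{1,2\}$ unchanged, which is exactly what independence means; for $\{1,2,3\}$ it does not.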

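Bayes' formula: let $B_1,B_2,\dots,B_n$ be a complete group of events (a partition of the sample space), each with non-zero probability. Then for any event A with $P(A)>0$,

$$P(B_i\mid A)=\frac{P(A\mid B_i)\,P(B_i)}{\sum_{j=1}^{n}P(A\mid B_j)\,P(B_j)},\qquad i=1,\dots,n.$$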
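Bayes' formula is straightforward to evaluate numerically; its denominator is the law of total probability. The sketch below is a minimal illustration: `BayesFormula.posterior` is a hypothetical helper, and the machine and defect-rate numbers in `main` are made up for the example.

```java
/** Illustrative sketch of Bayes' formula over a complete event group B_1..B_n. */
class BayesFormula {
    /**
     * Returns P(B_i | A) for every i, given priors P(B_i) and likelihoods P(A | B_i).
     * The denominator is the law of total probability: P(A) = sum_j P(A | B_j) P(B_j).
     */
    static double[] posterior(double[] prior, double[] likelihood) {
        double evidence = 0;
        for (int j = 0; j < prior.length; j++) {
            evidence += likelihood[j] * prior[j];
        }
        if (evidence <= 0) {
            throw new IllegalArgumentException("P(A) must be positive");
        }
        double[] result = new double[prior.length];
        for (int i = 0; i < prior.length; i++) {
            result[i] = likelihood[i] * prior[i] / evidence;
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical: three machines make 50%, 30%, 20% of the parts with defect rates 1%, 2%, 5%.
        // Event A = "the part is defective"; which machine made it?
        double[] post = posterior(new double[] {0.5, 0.3, 0.2}, new double[] {0.01, 0.02, 0.05});
        System.out.println(java.util.Arrays.toString(post));
    }
}
```

With these numbers $P(A)=0.021$ and the posteriors are $5/21$, $6/21$ and $10/21$: the third machine is the most likely source of a defective part even though its prior is the smallest.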

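The Naive Bayes algorithm assumes that the features are independent of each other given the class. For a feature vector $\mathbf{x}=(x_1,\dots,x_d)$ it uses the formulas above to compute the posterior $P(c\mid\mathbf{x})$ of every class $c$ and outputs the class label with the largest posterior.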

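Under the attribute conditional independence assumption,

$$P(c\mid\mathbf{x})=\frac{P(c)\,P(\mathbf{x}\mid c)}{P(\mathbf{x})}=\frac{P(c)}{P(\mathbf{x})}\prod_{i=1}^{d}P(x_i\mid c).$$

Since $P(\mathbf{x})$ is the same for all classes, this gives the Bayes decision rule:

$$h_{nb}(\mathbf{x})=\arg\max_{c\in\mathcal{Y}}\;P(c)\prod_{i=1}^{d}P(x_i\mid c).$$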
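The decision rule translates directly into a loop over the classes. The sketch below assumes the probabilities are already known and that attribute values are coded as integer indices; `NaiveBayesRule.predict` and the numbers in `main` are hypothetical. It multiplies probabilities directly, which is fine for a handful of attributes; with many attributes the log form is needed to avoid underflow.

```java
/** Illustrative sketch of h(x) = argmax_c P(c) * prod_i P(x_i | c). */
class NaiveBayesRule {
    /**
     * prior[c] = P(c); cond[c][i][v] = P(x_i = v | c); x[i] = observed value index of attribute i.
     * P(x) is omitted because it is the same for every class.
     */
    static int predict(double[] prior, double[][][] cond, int[] x) {
        int best = 0;
        double bestScore = -1.0; // every score is >= 0, so the first class always replaces this
        for (int c = 0; c < prior.length; c++) {
            double score = prior[c];
            for (int i = 0; i < x.length; i++) {
                score *= cond[c][i][x[i]];
            }
            if (score > bestScore) {
                bestScore = score;
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical model: 2 classes, 2 binary attributes.
        double[] prior = {0.6, 0.4};
        double[][][] cond = {
            {{0.8, 0.2}, {0.5, 0.5}}, // class 0
            {{0.3, 0.7}, {0.1, 0.9}}, // class 1
        };
        System.out.println(predict(prior, cond, new int[] {0, 0})); // 0: 0.24 vs 0.012
        System.out.println(predict(prior, cond, new int[] {1, 1})); // 1: 0.06 vs 0.252
    }
}
```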

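- By the law of large numbers, when the training set contains enough independent and identically distributed samples, the prior $P(c)$ can be estimated by the frequency with which each class occurs. Let $D_c$ denote the set of class-$c$ samples in the training set $D$; then

  $$P(c)=\frac{|D_c|}{|D|};$$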

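- For a discrete attribute, let $D_{c,x_i}$ denote the set of samples in $D_c$ whose $i$-th attribute takes the value $x_i$; the conditional probability $P(x_i\mid c)$ can then be estimated as

  $$P(x_i\mid c)=\frac{|D_{c,x_i}|}{|D_c|};$$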

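- For a continuous attribute, use a probability density function instead. Assume $p(x_i\mid c)\sim\mathcal{N}(\mu_{c,i},\sigma_{c,i}^2)$, where $\mu_{c,i}$ and $\sigma_{c,i}^2$ are the mean and the variance of the $i$-th attribute over the class-$c$ samples; then

  $$p(x_i\mid c)=\frac{1}{\sqrt{2\pi}\,\sigma_{c,i}}\exp\left(-\frac{(x_i-\mu_{c,i})^2}{2\sigma_{c,i}^2}\right).$$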
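The three estimates can be sketched on plain arrays. As a concrete check, `OUTLOOK` and `PLAY` hold the outlook and play columns of the 14-instance weather data distributed with Weka, coded as sunny/overcast/rainy = 0/1/2 and yes/no = 0/1 (this integer coding is an assumption of the sketch). `NbEstimates` and its methods are hypothetical names; no smoothing is applied here.

```java
/** Illustrative maximum-likelihood estimates for naive Bayes (no smoothing). */
class NbEstimates {
    // Weather data, 14 days. Outlook: sunny = 0, overcast = 1, rainy = 2. Play: yes = 0, no = 1.
    static final int[] OUTLOOK = {0, 0, 1, 2, 2, 2, 1, 0, 0, 2, 0, 1, 1, 2};
    static final int[] PLAY = {1, 1, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 1};

    /** P(c) = |D_c| / |D| for labels coded 0..numClasses-1. */
    static double[] priors(int[] labels, int numClasses) {
        double[] p = new double[numClasses];
        for (int y : labels) {
            p[y]++;
        }
        for (int c = 0; c < numClasses; c++) {
            p[c] /= labels.length;
        }
        return p;
    }

    /** P(x_i = v | c) = |D_{c,x_i}| / |D_c| for one nominal attribute column. */
    static double conditional(int[] column, int[] labels, int c, int v) {
        int inClass = 0;
        int matching = 0;
        for (int k = 0; k < labels.length; k++) {
            if (labels[k] == c) {
                inClass++;
                if (column[k] == v) {
                    matching++;
                }
            }
        }
        return (double) matching / inClass;
    }

    /** Normal density with mean mu and standard deviation sigma, evaluated at x. */
    static double gaussianDensity(double x, double mu, double sigma) {
        double z = (x - mu) / sigma;
        return Math.exp(-0.5 * z * z) / (Math.sqrt(2 * Math.PI) * sigma);
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(priors(PLAY, 2))); // 9/14 and 5/14
        System.out.println(conditional(OUTLOOK, PLAY, 0, 0)); // P(sunny | yes) = 2/9
        System.out.println(conditional(OUTLOOK, PLAY, 1, 1)); // P(overcast | no) = 0.0
    }
}
```

Here $P(\text{yes})=9/14$ and $P(\text{sunny}\mid\text{yes})=2/9$, but $P(\text{overcast}\mid\text{no})=0$ because no overcast day is labelled "no": a single zero factor of this kind is what the Laplace correction addresses.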

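Note that if any single factor in the product is 0 (i.e. some attribute value never occurs together with some class in the training set), the whole product becomes 0, and classification breaks down: the information carried by all the other attributes is "wiped out" by one attribute value that the training set never showed. Laplace correction (Laplacian smoothing) avoids this. With $N$ the number of classes in $D$,

$$\hat{P}(c)=\frac{|D_c|+1}{|D|+N},$$

and similarly, with $N_i$ the number of possible values of the $i$-th attribute,

$$\hat{P}(x_i\mid c)=\frac{|D_{c,x_i}|+1}{|D_c|+N_i}.$$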
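Applied to the weather counts (14 days, 2 classes, 9 labelled "yes"; of the 5 "no" days, 3 are sunny, 0 overcast and 2 rainy; outlook has 3 values), the correction turns the zero estimate into a small positive one. `LaplaceSmoothing` is a hypothetical helper that mirrors the two formulas; the same arithmetic appears in calculateClassDistribution and calculateConditionalProbabilities.

```java
/** Illustrative helper mirroring the two Laplace-corrected formulas. */
class LaplaceSmoothing {
    /** (|D_c| + 1) / (|D| + N), where N is the number of classes. */
    static double prior(int classCount, int total, int numClasses) {
        return (classCount + 1.0) / (total + numClasses);
    }

    /** (|D_{c,x_i}| + 1) / (|D_c| + N_i), where N_i is the number of values of attribute i. */
    static double conditional(int valueCount, int classCount, int numValues) {
        return (valueCount + 1.0) / (classCount + numValues);
    }

    public static void main(String[] args) {
        System.out.println(prior(9, 14, 2)); // 0.625 = 10/16 for "yes"
        // Outlook given "no": sunny 3, overcast 0, rainy 2 out of 5 days.
        System.out.println(conditional(3, 5, 3)); // 0.5
        System.out.println(conditional(0, 5, 3)); // 0.125 instead of 0
        System.out.println(conditional(2, 5, 3)); // 0.375
    }
}
```

The smoothed conditionals for one class still sum to 1, because the $N_i$ added to the denominator matches the $+1$ added to each of the $N_i$ numerators.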

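Because a long chain of multiplications easily underflows, the computation is usually done with logarithms. Putting everything together, for nominal attributes:

$$h_{nb}(\mathbf{x})=\arg\max_{c\in\mathcal{Y}}\left(\log\hat{P}(c)+\sum_{i=1}^{d}\log\hat{P}(x_i\mid c)\right).$$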
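The underflow is easy to reproduce. In the hypothetical sketch below, 1000 factors of 0.001 (true product $10^{-3000}$) underflow to `0.0` in `double`, whose smallest positive value is about $4.9\times10^{-324}$, while the sum of logarithms stays finite and still ranks the two made-up classes correctly.

```java
/** Illustrative: direct products of small probabilities underflow, sums of logs do not. */
class UnderflowDemo {
    /** p multiplied by itself n times, computed directly in double precision. */
    static double product(double p, int n) {
        double result = 1.0;
        for (int i = 0; i < n; i++) {
            result *= p;
        }
        return result;
    }

    /** n * log(p), accumulated term by term like a log-domain classifier. */
    static double logSum(double p, int n) {
        double sum = 0.0;
        for (int i = 0; i < n; i++) {
            sum += Math.log(p);
        }
        return sum;
    }

    public static void main(String[] args) {
        // Two hypothetical classes whose 1000 conditional factors are 0.001 and 0.002.
        System.out.println(product(1e-3, 1000) + " vs " + product(2e-3, 1000)); // 0.0 vs 0.0: cannot be ranked
        System.out.println(logSum(1e-3, 1000) + " vs " + logSum(2e-3, 1000)); // about -6907.8 vs -6214.6
    }
}
```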

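For continuous attributes, after dropping the constant $-\tfrac12\log(2\pi)$, which is the same for every class:

$$h_{nb}(\mathbf{x})=\arg\max_{c\in\mathcal{Y}}\left(\log\hat{P}(c)+\sum_{i=1}^{d}\left(-\log\sigma_{c,i}-\frac{(x_i-\mu_{c,i})^2}{2\sigma_{c,i}^2}\right)\right).$$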
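Dropping $-\tfrac12\log(2\pi)$ is safe because that term is added once per attribute for every class, so it shifts all class scores by the same amount and leaves the argmax unchanged. The hypothetical sketch below compares the exact Gaussian log-density with the simplified per-attribute term used in classifyNumerical.

```java
/** Illustrative: exact Gaussian log-density versus the term used for numeric attributes. */
class GaussianLogScore {
    /** Exact log N(x; mu, sigma^2). */
    static double logDensity(double x, double mu, double sigma) {
        double d = x - mu;
        return -0.5 * Math.log(2 * Math.PI) - Math.log(sigma) - d * d / (2 * sigma * sigma);
    }

    /** Same term without the class-independent constant -0.5 * log(2 * pi). */
    static double simplifiedTerm(double x, double mu, double sigma) {
        double d = x - mu;
        return -Math.log(sigma) - d * d / (2 * sigma * sigma);
    }

    public static void main(String[] args) {
        double x = 1.3, mu = 0.5, sigma = 2.0;
        System.out.println(simplifiedTerm(x, mu, sigma) - logDensity(x, mu, sigma)); // about 0.9189
    }
}
```

For any inputs the difference is $\tfrac12\log(2\pi)\approx 0.9189$.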

Code:

```java
// Fields and helper class of NaiveBayes. The source file also needs:
// import java.io.FileReader;
// import java.util.Arrays;
// import weka.core.Instance;
// import weka.core.Instances;

/**
 * An inner class to store the parameters of one Gaussian distribution.
 */
private class GaussianParameters {
    double mu;
    double sigma;

    public GaussianParameters(double paraMu, double paraSigma) {
        mu = paraMu;
        sigma = paraSigma;
    }// Of the constructor

    @Override
    public String toString() {
        return "(" + mu + "," + sigma + ")";
    }// Of toString
}// Of GaussianParameters

/**
 * The data.
 */
Instances dataset;

/**
 * The number of classes.
 */
int numClasses;

/**
 * The number of instances.
 */
int numInstances;

/**
 * The number of conditional attributes.
 */
int numConditions;

/**
 * The predicted label of every instance.
 */
int[] predicts;

/**
 * Class distribution.
 */
double[] classDistribution;

/**
 * Class distribution with Laplacian smoothing.
 */
double[] classDistributionLaplacian;

/**
 * Counts of every attribute value for every class, used to compute the
 * conditional probabilities: [class][attribute][value].
 */
double[][][] conditionalCounts;

/**
 * The conditional probabilities with Laplacian smoothing.
 */
double[][][] conditionalProbabilitiesLaplacian;

/**
 * The Gaussian parameters: [class][attribute].
 */
GaussianParameters[][] gaussianParameters;

/**
 * Data type, either NOMINAL or NUMERICAL.
 */
int dataType;

/**
 * Nominal.
 */
public static final int NOMINAL = 0;

/**
 * Numerical.
 */
public static final int NUMERICAL = 1;
```

The constructor reads the data set:

```java
/**
 *********************
 * The constructor.
 *
 * @param paraFilename The given file.
 *********************
 */
public NaiveBayes(String paraFilename) {
    dataset = null;
    // try-with-resources closes the reader even if parsing fails.
    try (FileReader fileReader = new FileReader(paraFilename)) {
        dataset = new Instances(fileReader);
    } catch (Exception ee) {
        System.out.println("Cannot open the file: " + paraFilename + "\r\n" + ee);
        System.exit(1); // Non-zero status: the program failed.
    } // Of try

    // The last attribute is the class label; all others are conditional attributes.
    dataset.setClassIndex(dataset.numAttributes() - 1);
    numConditions = dataset.numAttributes() - 1;
    numInstances = dataset.numInstances();
    numClasses = dataset.attribute(numConditions).numValues();
}// Of the constructor
```

A setter for dataType:

```java
/**
 ********************
 * Set the data type.
 *
 * @param paraDataType NOMINAL or NUMERICAL.
 *********************
 */
public void setDataType(int paraDataType) {
    dataType = paraDataType;
}// Of setDataType
```

Computing $\hat{P}(c)$:

The array tempCounts records how many instances carry each class label, i.e. $|D_c|$ in the formulas above; classDistribution holds the plain frequency estimate and classDistributionLaplacian the Laplace-corrected one.

```java
/**
 ********************
 * Calculate the class distribution with Laplacian smoothing.
 *********************
 */
public void calculateClassDistribution() {
    classDistribution = new double[numClasses];
    classDistributionLaplacian = new double[numClasses];

    // tempCounts[c] = |D_c|, the number of instances of class c.
    double[] tempCounts = new double[numClasses];
    for (int i = 0; i < numInstances; i++) {
        int tempClassValue = (int) dataset.instance(i).classValue();
        tempCounts[tempClassValue]++;
    } // Of for i

    for (int i = 0; i < numClasses; i++) {
        // P(c) = |D_c| / |D|
        classDistribution[i] = tempCounts[i] / numInstances;
        // Laplace: (|D_c| + 1) / (|D| + N)
        classDistributionLaplacian[i] = (tempCounts[i] + 1) / (numInstances + numClasses);
    } // Of for i

    System.out.println("Class distribution: " + Arrays.toString(classDistribution));
    System.out.println("Class distribution Laplacian: " + Arrays.toString(classDistributionLaplacian));
}// Of calculateClassDistribution
```

For nominal attributes:

Computing $\hat{P}(x_i\mid c)$:

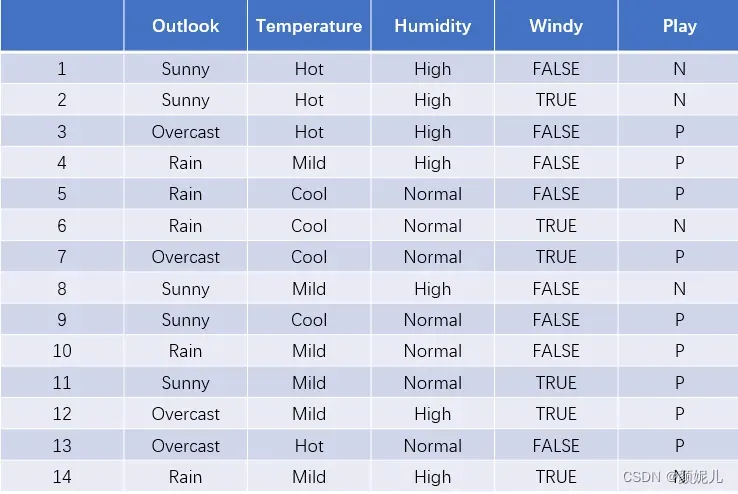

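Understanding conditionalCounts[][][] (the key and the tricky part): the first index i is the class, the second index j is the attribute, and the third index k is a value of that attribute. conditionalCounts[i][j][k] is the number of class-i instances whose attribute j takes its k-th value. The third dimension is ragged, because each attribute has its own number of values.

calculateConditionalProbabilities() fills this array in a single pass over the data (for example, the weather data) and then derives the Laplace-corrected probabilities from it.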
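A standalone sketch of the same ragged layout, independent of Weka: the hypothetical `ConditionalCountsDemo.buildCounts` takes integer-coded rows and labels, allocates one inner array per (class, attribute) pair whose length is that attribute's number of values, and counts in one pass. The five rows in `main` are made up.

```java
import java.util.Arrays;

/** Illustrative, Weka-free version of the counts[class][attribute][value] table. */
class ConditionalCountsDemo {
    /**
     * rows[r][j] = value index of attribute j in row r; labels[r] = class of row r;
     * numValues[j] = number of possible values of attribute j.
     */
    static double[][][] buildCounts(int[][] rows, int[] labels, int numClasses, int[] numValues) {
        double[][][] counts = new double[numClasses][numValues.length][];
        for (int c = 0; c < numClasses; c++) {
            for (int j = 0; j < numValues.length; j++) {
                counts[c][j] = new double[numValues[j]]; // ragged third dimension
            }
        }
        // One pass over the data: each row adds 1 to one cell per attribute.
        for (int r = 0; r < rows.length; r++) {
            for (int j = 0; j < numValues.length; j++) {
                counts[labels[r]][j][rows[r][j]]++;
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical data: attribute 0 has 3 values, attribute 1 has 2 values, 2 classes.
        int[][] rows = {{0, 1}, {2, 0}, {0, 0}, {1, 1}, {0, 1}};
        int[] labels = {0, 0, 1, 1, 0};
        double[][][] counts = buildCounts(rows, labels, 2, new int[] {3, 2});
        System.out.println(Arrays.deepToString(counts));
        // [[[2.0, 0.0, 1.0], [1.0, 2.0]], [[1.0, 1.0, 0.0], [1.0, 1.0]]]
    }
}
```

For every class and attribute the counts add up to the class size (3 for class 0, 2 for class 1), which is the $|D_c|$ used in the Laplace denominator.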

```java
/**
 ********************
 * Calculate the conditional probabilities with Laplacian smoothing. Only scan
 * the data set once.
 *********************
 */
public void calculateConditionalProbabilities() {
    conditionalCounts = new double[numClasses][numConditions][];
    conditionalProbabilitiesLaplacian = new double[numClasses][numConditions][];

    // Allocate space: the third dimension depends on the number of values of attribute j.
    for (int i = 0; i < numClasses; i++) {
        for (int j = 0; j < numConditions; j++) {
            int tempNumValues = dataset.attribute(j).numValues();
            conditionalCounts[i][j] = new double[tempNumValues];
            conditionalProbabilitiesLaplacian[i][j] = new double[tempNumValues];
        } // Of for j
    } // Of for i

    // Count the numbers: tempClassCounts[c] = |D_c|, conditionalCounts[c][j][v] = |D_{c,x_j=v}|.
    // Note: Weka stores a missing value as NaN, and (int) NaN is 0 in Java,
    // so a missing value is counted as the attribute's first value.
    int[] tempClassCounts = new int[numClasses];
    for (int i = 0; i < numInstances; i++) {
        int tempClass = (int) dataset.instance(i).classValue();
        tempClassCounts[tempClass]++;
        for (int j = 0; j < numConditions; j++) {
            int tempValue = (int) dataset.instance(i).value(j);
            conditionalCounts[tempClass][j][tempValue]++;
        } // Of for j
    } // Of for i

    // Now for the real probability with Laplacian smoothing: (|D_{c,x_j}| + 1) / (|D_c| + N_j).
    for (int i = 0; i < numClasses; i++) {
        for (int j = 0; j < numConditions; j++) {
            int tempNumValues = dataset.attribute(j).numValues();
            for (int k = 0; k < tempNumValues; k++) {
                conditionalProbabilitiesLaplacian[i][j][k] = (conditionalCounts[i][j][k] + 1)
                        / (tempClassCounts[i] + tempNumValues);
            } // Of for k
        } // Of for j
    } // Of for i

    System.out.println("Conditional counts: " + Arrays.deepToString(conditionalCounts));
}// Of calculateConditionalProbabilities
```

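Classification:

The class is chosen by computing $\log\hat{P}(c)+\sum_{i}\log\hat{P}(x_i\mid c)$ for every class and keeping the largest: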

```java
/**
 ********************
 * Classify an instance with nominal data.
 *********************
 */
public int classifyNominal(Instance paraInstance) {
    // Find the biggest one. Start from -infinity so that any finite score wins.
    double tempBiggest = Double.NEGATIVE_INFINITY;
    int resultBestIndex = 0;
    for (int i = 0; i < numClasses; i++) {
        // log P(c) + sum_j log P(x_j | c)
        double tempPseudoProbability = Math.log(classDistributionLaplacian[i]);
        for (int j = 0; j < numConditions; j++) {
            int tempAttributeValue = (int) paraInstance.value(j);
            tempPseudoProbability += Math.log(conditionalProbabilitiesLaplacian[i][j][tempAttributeValue]);
        } // Of for j

        if (tempBiggest < tempPseudoProbability) {
            tempBiggest = tempPseudoProbability;
            resultBestIndex = i;
        } // Of if
    } // Of for i

    return resultBestIndex;
}// Of classifyNominal
```

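For continuous attributes:

Every attribute has its own set of Gaussian parameters under every class, hence:

```java
gaussianParameters = new GaussianParameters[numClasses][numConditions];
```

$\mu_{c,i}$ is the mean: first sum the values of attribute $i$ over the instances labelled $c$, then divide by the number of such instances $|D_c|$ to get $\mu_{c,i}$. Then $\mu_{c,i}$ is used to compute the standard deviation

$$\sigma_{c,i}=\sqrt{\frac{1}{|D_c|}\sum_{\mathbf{x}\in D_c}\left(x_i-\mu_{c,i}\right)^2}.$$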
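The two-pass estimate can be sketched on plain arrays. `GaussianFit.fit` is a hypothetical helper; like calculateGaussianParameters it divides the squared deviations by $n$ (the maximum-likelihood estimate) rather than by $n-1$. The values in `main` are made up.

```java
/** Illustrative per-class Gaussian parameters for one numeric attribute. */
class GaussianFit {
    /**
     * Returns {mu, sigma} of the values whose label equals c.
     * sigma uses the 1/n (maximum-likelihood) variance.
     */
    static double[] fit(double[] values, int[] labels, int c) {
        double sum = 0;
        int n = 0;
        for (int k = 0; k < values.length; k++) {
            if (labels[k] == c) {
                sum += values[k];
                n++;
            }
        }
        if (n == 0) {
            throw new IllegalArgumentException("class " + c + " has no instances");
        }
        double mu = sum / n; // first pass: the mean
        double squares = 0;
        for (int k = 0; k < values.length; k++) {
            if (labels[k] == c) {
                squares += (values[k] - mu) * (values[k] - mu); // second pass: squared deviations
            }
        }
        return new double[] {mu, Math.sqrt(squares / n)};
    }

    public static void main(String[] args) {
        double[] values = {2, 4, 4, 4, 5, 5, 7, 9, 100, 200};
        int[] labels = {0, 0, 0, 0, 0, 0, 0, 0, 1, 1};
        System.out.println(java.util.Arrays.toString(fit(values, labels, 0))); // [5.0, 2.0]
        System.out.println(java.util.Arrays.toString(fit(values, labels, 1))); // [150.0, 50.0]
    }
}
```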

```java
/**
 ********************
 * Calculate the Gaussian parameters (mean and standard deviation) of every
 * attribute for every class.
 *********************
 */
public void calculateGaussianParameters() {
    gaussianParameters = new GaussianParameters[numClasses][numConditions];

    double[] tempValuesArray = new double[numInstances];
    int tempNumValues = 0;
    double tempSum = 0;

    for (int i = 0; i < numClasses; i++) {
        for (int j = 0; j < numConditions; j++) {
            tempSum = 0;

            // Obtain the values of attribute j for class i.
            tempNumValues = 0;
            for (int k = 0; k < numInstances; k++) {
                if ((int) dataset.instance(k).classValue() != i) {
                    continue;
                } // Of if

                tempValuesArray[tempNumValues] = dataset.instance(k).value(j);
                tempSum += tempValuesArray[tempNumValues];
                tempNumValues++;
            } // Of for k

            // Obtain parameters: mu is the mean, sigma the root of the mean squared deviation.
            double tempMu = tempSum / tempNumValues;

            double tempSigma = 0;
            for (int k = 0; k < tempNumValues; k++) {
                tempSigma += (tempValuesArray[k] - tempMu) * (tempValuesArray[k] - tempMu);
            } // Of for k
            tempSigma /= tempNumValues;
            tempSigma = Math.sqrt(tempSigma);

            gaussianParameters[i][j] = new GaussianParameters(tempMu, tempSigma);
        } // Of for j
    } // Of for i

    System.out.println(Arrays.deepToString(gaussianParameters));
}// Of calculateGaussianParameters
```

Classification:

```java
/**
 ********************
 * Classify an instance with numerical data.
 *********************
 */
public int classifyNumerical(Instance paraInstance) {
    // Find the biggest one. Start from -infinity: Gaussian log-densities can be
    // arbitrarily negative, so a fixed threshold such as -10000 is not safe.
    double tempBiggest = Double.NEGATIVE_INFINITY;
    int resultBestIndex = 0;
    for (int i = 0; i < numClasses; i++) {
        double tempPseudoProbability = Math.log(classDistributionLaplacian[i]);
        for (int j = 0; j < numConditions; j++) {
            double tempAttributeValue = paraInstance.value(j);
            double tempSigma = gaussianParameters[i][j].sigma;
            double tempMu = gaussianParameters[i][j].mu;

            // log N(x; mu, sigma^2) without the class-independent constant -0.5 * log(2 * pi).
            tempPseudoProbability += -Math.log(tempSigma)
                    - (tempAttributeValue - tempMu) * (tempAttributeValue - tempMu) / (2 * tempSigma * tempSigma);
        } // Of for j

        if (tempBiggest < tempPseudoProbability) {
            tempBiggest = tempPseudoProbability;
            resultBestIndex = i;
        } // Of if
    } // Of for i

    return resultBestIndex;
}// Of classifyNumerical
```

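Classify every instance in the data set and store the results in predicts[]. Note that this is not a true leave-one-out test: the model was built from all instances, including the one being classified, so computeAccuracy() then reports accuracy on the training data (a resubstitution estimate), which tends to be optimistic.

```java
/**
 ********************
 * Classify all instances, the results are stored in predicts[].
 *********************
 */
public void classify() {
    predicts = new int[numInstances];
    for (int i = 0; i < numInstances; i++) {
        predicts[i] = classify(dataset.instance(i));
    } // Of for i
}// Of classify
```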
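For comparison, a true leave-one-out estimate must exclude each instance from the statistics before classifying it. For nominal naive Bayes this is cheap: build the counts once, then for each instance subtract its own contribution, classify it, and add it back. The sketch below is a hypothetical, Weka-free helper (`LooNaiveBayes.accuracy`) using the same Laplace-corrected log score as classifyNominal; passing `leaveOneOut = false` reproduces the resubstitution test performed by classify().

```java
/** Illustrative accuracy of nominal naive Bayes: resubstitution or true leave-one-out. */
class LooNaiveBayes {
    static double accuracy(int[][] rows, int[] labels, int numClasses, int[] numValues, boolean leaveOneOut) {
        int n = rows.length;
        int d = numValues.length;
        int[] classCounts = new int[numClasses];
        int[][][] counts = new int[numClasses][d][];
        for (int c = 0; c < numClasses; c++) {
            for (int j = 0; j < d; j++) {
                counts[c][j] = new int[numValues[j]];
            }
        }
        // Count the statistics once over all rows.
        for (int r = 0; r < n; r++) {
            classCounts[labels[r]]++;
            for (int j = 0; j < d; j++) {
                counts[labels[r]][j][rows[r][j]]++;
            }
        }
        int held = leaveOneOut ? 1 : 0; // rows removed from training for each test
        int correct = 0;
        for (int r = 0; r < n; r++) {
            int y = labels[r];
            // Leave-one-out: temporarily subtract the test row's own contribution.
            classCounts[y] -= held;
            for (int j = 0; j < d; j++) {
                counts[y][j][rows[r][j]] -= held;
            }
            int best = 0;
            double bestScore = Double.NEGATIVE_INFINITY;
            for (int c = 0; c < numClasses; c++) {
                double score = Math.log((classCounts[c] + 1.0) / (n - held + numClasses));
                for (int j = 0; j < d; j++) {
                    score += Math.log((counts[c][j][rows[r][j]] + 1.0) / (classCounts[c] + numValues[j]));
                }
                if (score > bestScore) {
                    bestScore = score;
                    best = c;
                }
            }
            if (best == y) {
                correct++;
            }
            // Restore the statistics for the next row.
            classCounts[y] += held;
            for (int j = 0; j < d; j++) {
                counts[y][j][rows[r][j]] += held;
            }
        }
        return (double) correct / n;
    }

    public static void main(String[] args) {
        // Hypothetical toy data: one binary attribute, two classes.
        int[][] rows = {{0}, {0}, {1}};
        int[] labels = {0, 0, 1};
        System.out.println(accuracy(rows, labels, 2, new int[] {2}, false)); // 1.0
        System.out.println(accuracy(rows, labels, 2, new int[] {2}, true));  // 0.6666666666666666
    }
}
```

On this toy data resubstitution scores 1.0, while leave-one-out scores 2/3: once the only class-1 instance is held out, nothing supports class 1 and the prior favours class 0.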

classify(Instance) dispatches to one of the two classifiers according to dataType:

```java
/**
 ********************
 * Classify an instance.
 *********************
 */
public int classify(Instance paraInstance) {
    if (dataType == NOMINAL) {
        return classifyNominal(paraInstance);
    } else if (dataType == NUMERICAL) {
        return classifyNumerical(paraInstance);
    } // Of if

    // Unknown data type: no valid class.
    return -1;
}// Of classify
```

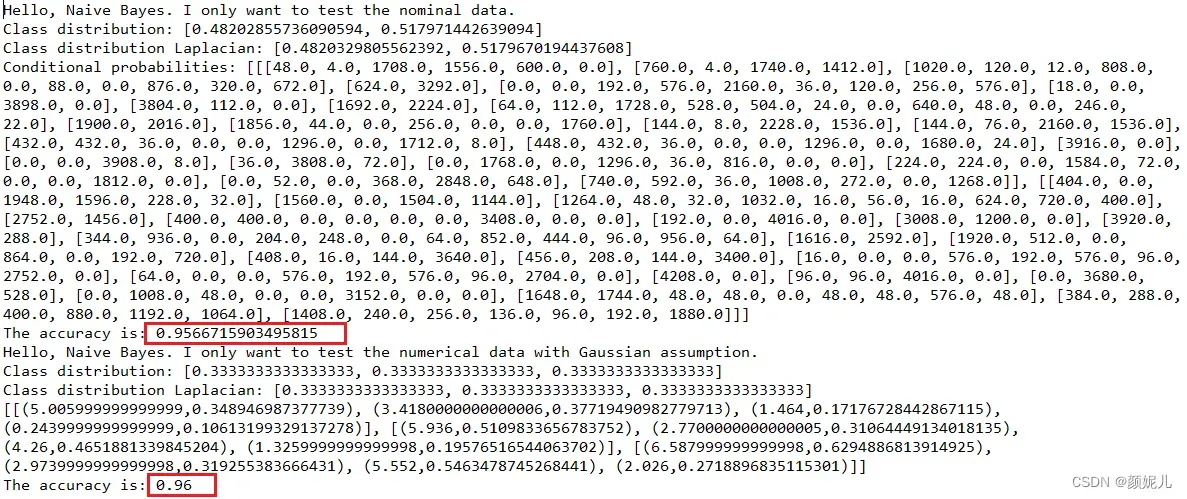

Test on nominal data:

```java
/**
 ********************
 * Test nominal data.
 *********************
 */
public static void testNominal() {
    System.out.println("Hello, Naive Bayes. I only want to test the nominal data.");
    String tempFilename = "F:/sampledataMain/mushroom.arff";

    NaiveBayes tempLearner = new NaiveBayes(tempFilename);
    tempLearner.setDataType(NOMINAL);
    tempLearner.calculateClassDistribution();
    tempLearner.calculateConditionalProbabilities();
    tempLearner.classify();

    System.out.println("The accuracy is: " + tempLearner.computeAccuracy());
}// Of testNominal
```

Test on numerical data:

```java
/**
 ********************
 * Test numerical data.
 *********************
 */
public static void testNumerical() {
    System.out.println("Hello, Naive Bayes. I only want to test the numerical data with Gaussian assumption.");
    String tempFilename = "F:/sampledataMain/iris.arff";

    NaiveBayes tempLearner = new NaiveBayes(tempFilename);
    tempLearner.setDataType(NUMERICAL);
    tempLearner.calculateClassDistribution();
    tempLearner.calculateGaussianParameters();
    tempLearner.classify();

    System.out.println("The accuracy is: " + tempLearner.computeAccuracy());
}// Of testNumerical
```

Computing the accuracy:

```java
/**
 ********************
 * Compute accuracy.
 *********************
 */
public double computeAccuracy() {
    double tempCorrect = 0;
    for (int i = 0; i < numInstances; i++) {
        if (predicts[i] == (int) dataset.instance(i).classValue()) {
            tempCorrect++;
        } // Of if
    } // Of for i

    double resultAccuracy = tempCorrect / numInstances;
    return resultAccuracy;
}// Of computeAccuracy
```

Main function:

```java
/**
 ********************
 * The entrance of the program.
 *
 * @param args Not used now.
 *********************
 */
public static void main(String[] args) {
    testNominal();
    testNumerical();
}// Of main
```

Output:
