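Contents

- 1. Project Overview

- 2. Source Code Download

- (1) Network model: `resnet.py`

- (2) Helper code 1: `_internally_replaced_utils.py`

- (3) Helper code 2: `utils.py`

- 3. Source Code Walkthrough

- 3.1 Importing Modules

- 3.2 API entry point: _resnet()

- 3.2.1 Loading a Pretrained Model

- (1) Introduction to torchvision.models

- (2) Downloading pretrained models online

- 3.2.2 The ResNet Network (Core)

- (1) Building block: BasicBlock

- (2) Building block: Bottleneck

- (3) 3×3 convolution + 1×1 convolution

- 4. Model Demo (printing the parameter count and the network structure)

- 5. Project (CIFAR-10 classification)

- References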

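1. Project Overview

The project consists of five files, all stored in the same directory: the network model (resnet.py), helper code 1 (_internally_replaced_utils.py), helper code 2 (utils.py), a model demo script (.py), and a project script (.py).

Why use ResNet? Introducing the ResNet network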

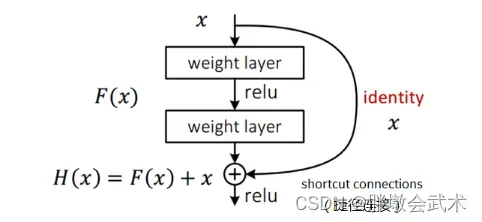

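- 1. The shortcut ("highway") connection adds 1 to the derivative of each block: for y = f(x) + x, dy/dx = f'(x) + 1. Even when f'(x) is very small, the gradient can still propagate, which mitigates the vanishing-gradient problem (in the worst case a block does no worse than the identity).

In backpropagation through a deep network, the gradient is a product of many per-layer derivatives, so the gradient that reaches the early layers can approach 0 and the weights are effectively no longer updated.

- 2. The formula y = f(x) + x introduces an identity mapping (when f(x) = 0, y = x). Stacking residual blocks addresses the degradation problem that appears as network depth increases.

- 3. The network has only two pooling layers; elsewhere it reduces the feature-map size with stride=2 convolutions. Compared with pooling, this does not fully avoid the loss of resolution, but it reduces it.

stride=2 lowers the amount of computation and also enlarges the receptive field, which can help model performance.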
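The "+1" in the first point can be checked with plain arithmetic. The following is a minimal sketch that is not part of the original article: it assumes every layer has the same small local derivative (0.1, an arbitrary illustrative value) and compares what is left of the gradient after 20 layers with and without a shortcut.

```python
# Illustrative only: each layer is assumed to have the same small local
# derivative f'(x) = 0.1 (an arbitrary value chosen for this sketch).
LOCAL_DERIVATIVE = 0.1
NUM_LAYERS = 20


def chained_gradient(local_derivative: float, num_layers: int, residual: bool) -> float:
    """Multiply per-layer derivatives, as the chain rule does in backpropagation."""
    grad = 1.0
    for _ in range(num_layers):
        # Plain layer:    y = f(x)      ->  dy/dx = f'(x)
        # Residual block: y = f(x) + x  ->  dy/dx = f'(x) + 1
        grad *= (local_derivative + 1.0) if residual else local_derivative
    return grad


plain_grad = chained_gradient(LOCAL_DERIVATIVE, NUM_LAYERS, residual=False)
residual_grad = chained_gradient(LOCAL_DERIVATIVE, NUM_LAYERS, residual=True)

print(f"plain:    {plain_grad:.3e}")
print(f"residual: {residual_grad:.3e}")
```

In a real network the local derivatives differ per layer and can be negative, so this only illustrates the trend: the product of small factors collapses, while the product of factors near 1 does not.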

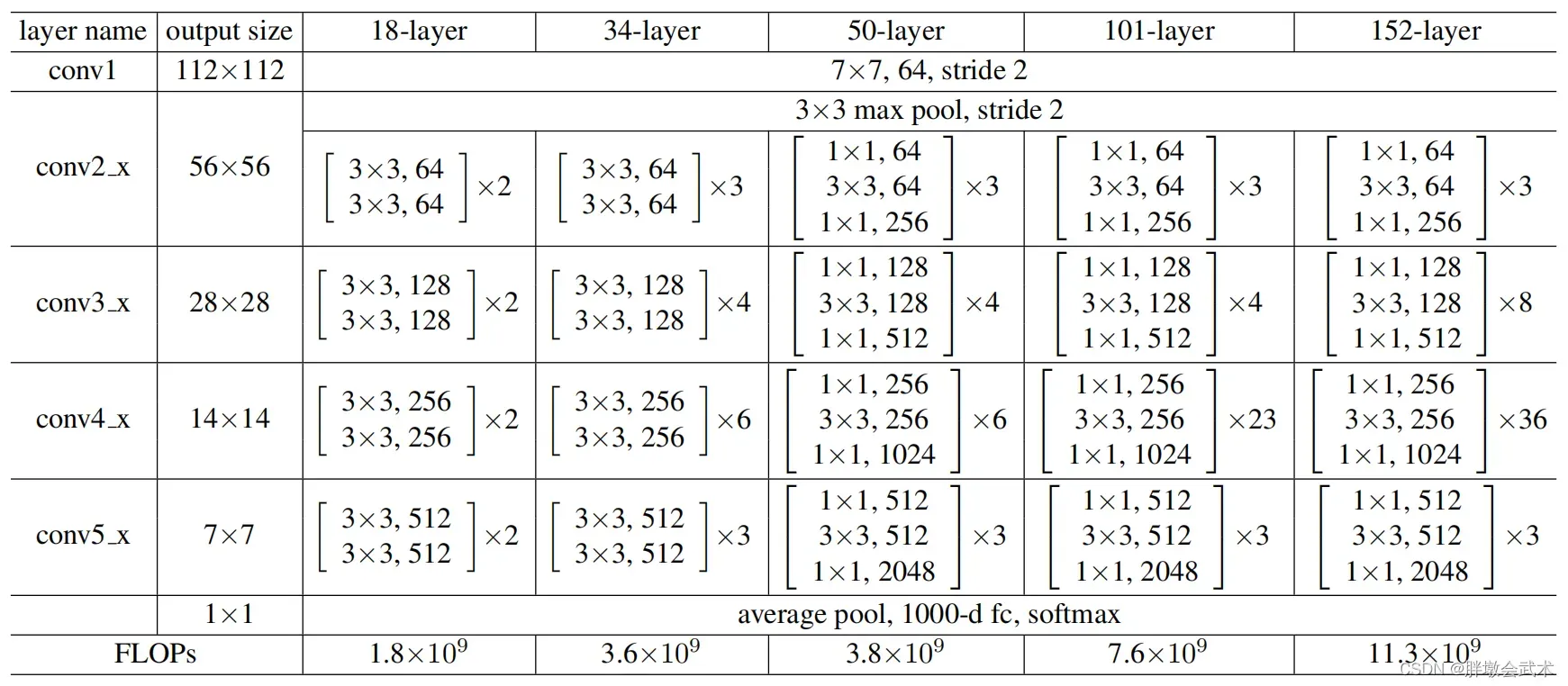

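ResNet variants:

2. Source Code Download

Paper: Deep Residual Learning for Image Recognition

Official source code: vision/torchvision/models/resnet.py

The official repository does not offer these files as a single packaged download, but only the following three files are needed:

(1) Network model: resnet.py

(2) Helper code 1: _internally_replaced_utils.py

(3) Helper code 2: utils.py

(1) Network model: resnet.py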

```python
from typing import Type, Any, Callable, Union, List, Optional

import torch
import torch.nn as nn
from torch import Tensor

from _internally_replaced_utils import load_state_dict_from_url
from utils import _log_api_usage_once


__all__ = [
    "ResNet",
    "resnet18",
    "resnet34",
    "resnet50",
    "resnet101",
    "resnet152",
    "resnext50_32x4d",
    "resnext101_32x8d",
    "wide_resnet50_2",
    "wide_resnet101_2",
]


model_urls = {
    "resnet18": "https://download.pytorch.org/models/resnet18-f37072fd.pth",
    "resnet34": "https://download.pytorch.org/models/resnet34-b627a593.pth",
    "resnet50": "https://download.pytorch.org/models/resnet50-0676ba61.pth",
    "resnet101": "https://download.pytorch.org/models/resnet101-63fe2227.pth",
    "resnet152": "https://download.pytorch.org/models/resnet152-394f9c45.pth",
    "resnext50_32x4d": "https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth",
    "resnext101_32x8d": "https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth",
    "wide_resnet50_2": "https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth",
    "wide_resnet101_2": "https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth",
}


def conv3x3(in_planes: int, out_planes: int, stride: int = 1, groups: int = 1, dilation: int = 1) -> nn.Conv2d:
    """3x3 convolution with padding"""
    return nn.Conv2d(
        in_planes,
        out_planes,
        kernel_size=3,
        stride=stride,
        padding=dilation,
        groups=groups,
        bias=False,
        dilation=dilation,
    )


def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:
    """1x1 convolution"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)
```

```python
class BasicBlock(nn.Module):
    expansion: int = 1

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError("BasicBlock only supports groups=1 and base_width=64")
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out


class Bottleneck(nn.Module):
    # Bottleneck in torchvision places the stride for downsampling at 3x3 convolution(self.conv2)
    # while original implementation places the stride at the first 1x1 convolution(self.conv1)
    # according to "Deep residual learning for image recognition" https://arxiv.org/abs/1512.03385.
    # This variant is also known as ResNet V1.5 and improves accuracy according to
    # https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch.

    expansion: int = 4

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.0)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv1x1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3x3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1x1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```

```python
class ResNet(nn.Module):
    def __init__(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        layers: List[int],
        num_classes: int = 1000,
        zero_init_residual: bool = False,
        groups: int = 1,
        width_per_group: int = 64,
        replace_stride_with_dilation: Optional[List[bool]] = None,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        _log_api_usage_once(self)
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError(
                "replace_stride_with_dilation should be None "
                f"or a 3-element tuple, got {replace_stride_with_dilation}"
            )
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Zero-initialize the last BN in each residual branch,
        # so that the residual branch starts with zeros, and each residual block behaves like an identity.
        # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

    def _make_layer(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        planes: int,
        blocks: int,
        stride: int = 1,
        dilate: bool = False,
    ) -> nn.Sequential:
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(
            block(
                self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer
            )
        )
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(
                block(
                    self.inplanes,
                    planes,
                    groups=self.groups,
                    base_width=self.base_width,
                    dilation=self.dilation,
                    norm_layer=norm_layer,
                )
            )

        return nn.Sequential(*layers)

    def _forward_impl(self, x: Tensor) -> Tensor:
        # See note [TorchScript super()]
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)

        return x

    def forward(self, x: Tensor) -> Tensor:
        return self._forward_impl(x)


def _resnet(
    arch: str,
    block: Type[Union[BasicBlock, Bottleneck]],
    layers: List[int],
    pretrained: bool,
    progress: bool,
    **kwargs: Any,
) -> ResNet:
    model = ResNet(block, layers, **kwargs)
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls[arch], progress=progress)
        model.load_state_dict(state_dict)
    return model
```

```python
def resnet18(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNet-18 model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet("resnet18", BasicBlock, [2, 2, 2, 2], pretrained, progress, **kwargs)


def resnet34(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNet-34 model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet("resnet34", BasicBlock, [3, 4, 6, 3], pretrained, progress, **kwargs)


def resnet50(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNet-50 model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet("resnet50", Bottleneck, [3, 4, 6, 3], pretrained, progress, **kwargs)


def resnet101(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNet-101 model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet("resnet101", Bottleneck, [3, 4, 23, 3], pretrained, progress, **kwargs)


def resnet152(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNet-152 model from
    `"Deep Residual Learning for Image Recognition" <https://arxiv.org/pdf/1512.03385.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet("resnet152", Bottleneck, [3, 8, 36, 3], pretrained, progress, **kwargs)


def resnext50_32x4d(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNeXt-50 32x4d model from
    `"Aggregated Residual Transformation for Deep Neural Networks" <https://arxiv.org/pdf/1611.05431.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs["groups"] = 32
    kwargs["width_per_group"] = 4
    return _resnet("resnext50_32x4d", Bottleneck, [3, 4, 6, 3], pretrained, progress, **kwargs)


def resnext101_32x8d(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""ResNeXt-101 32x8d model from
    `"Aggregated Residual Transformation for Deep Neural Networks" <https://arxiv.org/pdf/1611.05431.pdf>`_.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs["groups"] = 32
    kwargs["width_per_group"] = 8
    return _resnet("resnext101_32x8d", Bottleneck, [3, 4, 23, 3], pretrained, progress, **kwargs)


def wide_resnet50_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""Wide ResNet-50-2 model from
    `"Wide Residual Networks" <https://arxiv.org/pdf/1605.07146.pdf>`_.

    The model is the same as ResNet except for the bottleneck number of channels
    which is twice larger in every block. The number of channels in outer 1x1
    convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048
    channels, and in Wide ResNet-50-2 has 2048-1024-2048.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs["width_per_group"] = 64 * 2
    return _resnet("wide_resnet50_2", Bottleneck, [3, 4, 6, 3], pretrained, progress, **kwargs)


def wide_resnet101_2(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    r"""Wide ResNet-101-2 model from
    `"Wide Residual Networks" <https://arxiv.org/pdf/1605.07146.pdf>`_.

    The model is the same as ResNet except for the bottleneck number of channels
    which is twice larger in every block. The number of channels in outer 1x1
    convolutions is the same, e.g. last block in ResNet-50 has 2048-512-2048
    channels, and in Wide ResNet-50-2 has 2048-1024-2048.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs["width_per_group"] = 64 * 2
    return _resnet("wide_resnet101_2", Bottleneck, [3, 4, 23, 3], pretrained, progress, **kwargs)
```

(2) Helper code 1: _internally_replaced_utils.py

```python
import importlib.machinery
import os

from torch.hub import _get_torch_home


_HOME = os.path.join(_get_torch_home(), "datasets", "vision")
_USE_SHARDED_DATASETS = False


def _download_file_from_remote_location(fpath: str, url: str) -> None:
    pass


def _is_remote_location_available() -> bool:
    return False


try:
    from torch.hub import load_state_dict_from_url  # noqa: 401
except ImportError:
    from torch.utils.model_zoo import load_url as load_state_dict_from_url  # noqa: 401


def _get_extension_path(lib_name):

    lib_dir = os.path.dirname(__file__)
    if os.name == "nt":
        # Register the main torchvision library location on the default DLL path
        import ctypes
        import sys

        kernel32 = ctypes.WinDLL("kernel32.dll", use_last_error=True)
        with_load_library_flags = hasattr(kernel32, "AddDllDirectory")
        prev_error_mode = kernel32.SetErrorMode(0x0001)

        if with_load_library_flags:
            kernel32.AddDllDirectory.restype = ctypes.c_void_p

        if sys.version_info >= (3, 8):
            os.add_dll_directory(lib_dir)
        elif with_load_library_flags:
            res = kernel32.AddDllDirectory(lib_dir)
            if res is None:
                err = ctypes.WinError(ctypes.get_last_error())
                err.strerror += f' Error adding "{lib_dir}" to the DLL directories.'
                raise err

        kernel32.SetErrorMode(prev_error_mode)

    loader_details = (importlib.machinery.ExtensionFileLoader, importlib.machinery.EXTENSION_SUFFIXES)

    extfinder = importlib.machinery.FileFinder(lib_dir, loader_details)
    ext_specs = extfinder.find_spec(lib_name)
    if ext_specs is None:
        raise ImportError

    return ext_specs.origin
```

(3) Helper code 2: utils.py

```python
import math
import pathlib
import warnings
from typing import Union, Optional, List, Tuple, BinaryIO, no_type_check

import numpy as np
import torch
from PIL import Image, ImageDraw, ImageFont, ImageColor

__all__ = ["make_grid", "save_image", "draw_bounding_boxes", "draw_segmentation_masks", "draw_keypoints"]


@torch.no_grad()
def make_grid(
    tensor: Union[torch.Tensor, List[torch.Tensor]],
    nrow: int = 8,
    padding: int = 2,
    normalize: bool = False,
    value_range: Optional[Tuple[int, int]] = None,
    scale_each: bool = False,
    pad_value: int = 0,
    **kwargs,
) -> torch.Tensor:
    """
    Make a grid of images.

    Args:
        tensor (Tensor or list): 4D mini-batch Tensor of shape (B x C x H x W)
            or a list of images all of the same size.
        nrow (int, optional): Number of images displayed in each row of the grid.
            The final grid size is ``(B / nrow, nrow)``. Default: ``8``.
        padding (int, optional): amount of padding. Default: ``2``.
        normalize (bool, optional): If True, shift the image to the range (0, 1),
            by the min and max values specified by ``value_range``. Default: ``False``.
        value_range (tuple, optional): tuple (min, max) where min and max are numbers,
            then these numbers are used to normalize the image. By default, min and max
            are computed from the tensor.
        scale_each (bool, optional): If ``True``, scale each image in the batch of
            images separately rather than the (min, max) over all images. Default: ``False``.
        pad_value (float, optional): Value for the padded pixels. Default: ``0``.

    Returns:
        grid (Tensor): the tensor containing grid of images.
    """
    if not (torch.is_tensor(tensor) or (isinstance(tensor, list) and all(torch.is_tensor(t) for t in tensor))):
        raise TypeError(f"tensor or list of tensors expected, got {type(tensor)}")

    if "range" in kwargs.keys():
        warning = "range will be deprecated, please use value_range instead."
        warnings.warn(warning)
        value_range = kwargs["range"]

    # if list of tensors, convert to a 4D mini-batch Tensor
    if isinstance(tensor, list):
        tensor = torch.stack(tensor, dim=0)

    if tensor.dim() == 2:  # single image H x W
        tensor = tensor.unsqueeze(0)
    if tensor.dim() == 3:  # single image
        if tensor.size(0) == 1:  # if single-channel, convert to 3-channel
            tensor = torch.cat((tensor, tensor, tensor), 0)
        tensor = tensor.unsqueeze(0)

    if tensor.dim() == 4 and tensor.size(1) == 1:  # single-channel images
        tensor = torch.cat((tensor, tensor, tensor), 1)

    if normalize is True:
        tensor = tensor.clone()  # avoid modifying tensor in-place
        if value_range is not None:
            assert isinstance(
                value_range, tuple
            ), "value_range has to be a tuple (min, max) if specified. min and max are numbers"

        def norm_ip(img, low, high):
            img.clamp_(min=low, max=high)
            img.sub_(low).div_(max(high - low, 1e-5))

        def norm_range(t, value_range):
            if value_range is not None:
                norm_ip(t, value_range[0], value_range[1])
            else:
                norm_ip(t, float(t.min()), float(t.max()))

        if scale_each is True:
            for t in tensor:  # loop over mini-batch dimension
                norm_range(t, value_range)
        else:
            norm_range(tensor, value_range)

    if tensor.size(0) == 1:
        return tensor.squeeze(0)

    # make the mini-batch of images into a grid
    nmaps = tensor.size(0)
    xmaps = min(nrow, nmaps)
    ymaps = int(math.ceil(float(nmaps) / xmaps))
    height, width = int(tensor.size(2) + padding), int(tensor.size(3) + padding)
    num_channels = tensor.size(1)
    grid = tensor.new_full((num_channels, height * ymaps + padding, width * xmaps + padding), pad_value)
    k = 0
    for y in range(ymaps):
        for x in range(xmaps):
            if k >= nmaps:
                break
            # Tensor.copy_() is a valid method but seems to be missing from the stubs
            # https://pytorch.org/docs/stable/tensors.html#torch.Tensor.copy_
            grid.narrow(1, y * height + padding, height - padding).narrow(  # type: ignore[attr-defined]
                2, x * width + padding, width - padding
            ).copy_(tensor[k])
            k = k + 1
    return grid
```

```python
@torch.no_grad()
def save_image(
    tensor: Union[torch.Tensor, List[torch.Tensor]],
    fp: Union[str, pathlib.Path, BinaryIO],
    format: Optional[str] = None,
    **kwargs,
) -> None:
    """
    Save a given Tensor into an image file.

    Args:
        tensor (Tensor or list): Image to be saved. If given a mini-batch tensor,
            saves the tensor as a grid of images by calling ``make_grid``.
        fp (string or file object): A filename or a file object
        format(Optional): If omitted, the format to use is determined from the filename extension.
            If a file object was used instead of a filename, this parameter should always be used.
        **kwargs: Other arguments are documented in ``make_grid``.
    """

    grid = make_grid(tensor, **kwargs)
    # Add 0.5 after unnormalizing to [0, 255] to round to nearest integer
    ndarr = grid.mul(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).to("cpu", torch.uint8).numpy()
    im = Image.fromarray(ndarr)
    im.save(fp, format=format)
```

```python
@torch.no_grad()
def draw_bounding_boxes(
    image: torch.Tensor,
    boxes: torch.Tensor,
    labels: Optional[List[str]] = None,
    colors: Optional[Union[List[Union[str, Tuple[int, int, int]]], str, Tuple[int, int, int]]] = None,
    fill: Optional[bool] = False,
    width: int = 1,
    font: Optional[str] = None,
    font_size: int = 10,
) -> torch.Tensor:
    """
    Draws bounding boxes on given image.
    The values of the input image should be uint8 between 0 and 255.
    If fill is True, Resulting Tensor should be saved as PNG image.

    Args:
        image (Tensor): Tensor of shape (C x H x W) and dtype uint8.
        boxes (Tensor): Tensor of size (N, 4) containing bounding boxes in (xmin, ymin, xmax, ymax) format. Note that
            the boxes are absolute coordinates with respect to the image. In other words: `0 <= xmin < xmax < W` and
            `0 <= ymin < ymax < H`.
        labels (List[str]): List containing the labels of bounding boxes.
        colors (color or list of colors, optional): List containing the colors
            of the boxes or single color for all boxes. The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
        fill (bool): If `True` fills the bounding box with specified color.
        width (int): Width of bounding box.
        font (str): A filename containing a TrueType font. If the file is not found in this filename, the loader may
            also search in other directories, such as the `fonts/` directory on Windows or `/Library/Fonts/`,
            `/System/Library/Fonts/` and `~/Library/Fonts/` on macOS.
        font_size (int): The requested font size in points.

    Returns:
        img (Tensor[C, H, W]): Image Tensor of dtype uint8 with bounding boxes plotted.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"Tensor expected, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"Tensor uint8 expected, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size(0) not in {1, 3}:
        raise ValueError("Only grayscale and RGB images are supported")

    if image.size(0) == 1:
        image = torch.tile(image, (3, 1, 1))

    ndarr = image.permute(1, 2, 0).numpy()
    img_to_draw = Image.fromarray(ndarr)

    img_boxes = boxes.to(torch.int64).tolist()

    if fill:
        draw = ImageDraw.Draw(img_to_draw, "RGBA")
    else:
        draw = ImageDraw.Draw(img_to_draw)

    txt_font = ImageFont.load_default() if font is None else ImageFont.truetype(font=font, size=font_size)

    for i, bbox in enumerate(img_boxes):
        if colors is None:
            color = None
        elif isinstance(colors, list):
            color = colors[i]
        else:
            color = colors

        if fill:
            if color is None:
                fill_color = (255, 255, 255, 100)
            elif isinstance(color, str):
                # This will automatically raise Error if rgb cannot be parsed.
                fill_color = ImageColor.getrgb(color) + (100,)
            elif isinstance(color, tuple):
                fill_color = color + (100,)
            draw.rectangle(bbox, width=width, outline=color, fill=fill_color)
        else:
            draw.rectangle(bbox, width=width, outline=color)

        if labels is not None:
            margin = width + 1
            draw.text((bbox[0] + margin, bbox[1] + margin), labels[i], fill=color, font=txt_font)

    return torch.from_numpy(np.array(img_to_draw)).permute(2, 0, 1).to(dtype=torch.uint8)
```

```python
@torch.no_grad()
def draw_segmentation_masks(
    image: torch.Tensor,
    masks: torch.Tensor,
    alpha: float = 0.8,
    colors: Optional[Union[List[Union[str, Tuple[int, int, int]]], str, Tuple[int, int, int]]] = None,
) -> torch.Tensor:
    """
    Draws segmentation masks on given RGB image.
    The values of the input image should be uint8 between 0 and 255.

    Args:
        image (Tensor): Tensor of shape (3, H, W) and dtype uint8.
        masks (Tensor): Tensor of shape (num_masks, H, W) or (H, W) and dtype bool.
        alpha (float): Float number between 0 and 1 denoting the transparency of the masks.
            0 means full transparency, 1 means no transparency.
        colors (color or list of colors, optional): List containing the colors
            of the masks or single color for all masks. The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
            By default, random colors are generated for each mask.

    Returns:
        img (Tensor[C, H, W]): Image Tensor, with segmentation masks drawn on top.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"The image must be a tensor, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"The image dtype must be uint8, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size()[0] != 3:
        raise ValueError("Pass an RGB image. Other Image formats are not supported")
    if masks.ndim == 2:
        masks = masks[None, :, :]
    if masks.ndim != 3:
        raise ValueError("masks must be of shape (H, W) or (batch_size, H, W)")
    if masks.dtype != torch.bool:
        raise ValueError(f"The masks must be of dtype bool. Got {masks.dtype}")
    if masks.shape[-2:] != image.shape[-2:]:
        raise ValueError("The image and the masks must have the same height and width")

    num_masks = masks.size()[0]
    if colors is not None and num_masks > len(colors):
        raise ValueError(f"There are more masks ({num_masks}) than colors ({len(colors)})")

    if colors is None:
        colors = _generate_color_palette(num_masks)

    if not isinstance(colors, list):
        colors = [colors]
    if not isinstance(colors[0], (tuple, str)):
        raise ValueError("colors must be a tuple or a string, or a list thereof")
    if isinstance(colors[0], tuple) and len(colors[0]) != 3:
        raise ValueError("It seems that you passed a tuple of colors instead of a list of colors")

    out_dtype = torch.uint8

    colors_ = []
    for color in colors:
        if isinstance(color, str):
            color = ImageColor.getrgb(color)
        colors_.append(torch.tensor(color, dtype=out_dtype))

    img_to_draw = image.detach().clone()
    # TODO: There might be a way to vectorize this
    for mask, color in zip(masks, colors_):
        img_to_draw[:, mask] = color[:, None]

    out = image * (1 - alpha) + img_to_draw * alpha
    return out.to(out_dtype)
```

```python
@torch.no_grad()
def draw_keypoints(
    image: torch.Tensor,
    keypoints: torch.Tensor,
    connectivity: Optional[List[Tuple[int, int]]] = None,
    colors: Optional[Union[str, Tuple[int, int, int]]] = None,
    radius: int = 2,
    width: int = 3,
) -> torch.Tensor:
    """
    Draws Keypoints on given RGB image.
    The values of the input image should be uint8 between 0 and 255.

    Args:
        image (Tensor): Tensor of shape (3, H, W) and dtype uint8.
        keypoints (Tensor): Tensor of shape (num_instances, K, 2) the K keypoints location for each of the N instances,
            in the format [x, y].
        connectivity (List[Tuple[int, int]]]): A List of tuple where,
            each tuple contains pair of keypoints to be connected.
        colors (str, Tuple): The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
        radius (int): Integer denoting radius of keypoint.
        width (int): Integer denoting width of line connecting keypoints.

    Returns:
        img (Tensor[C, H, W]): Image Tensor of dtype uint8 with keypoints drawn.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"The image must be a tensor, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"The image dtype must be uint8, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size()[0] != 3:
        raise ValueError("Pass an RGB image. Other Image formats are not supported")

    if keypoints.ndim != 3:
        raise ValueError("keypoints must be of shape (num_instances, K, 2)")

    ndarr = image.permute(1, 2, 0).numpy()
    img_to_draw = Image.fromarray(ndarr)
    draw = ImageDraw.Draw(img_to_draw)
    img_kpts = keypoints.to(torch.int64).tolist()

    for kpt_id, kpt_inst in enumerate(img_kpts):
        for inst_id, kpt in enumerate(kpt_inst):
            x1 = kpt[0] - radius
            x2 = kpt[0] + radius
            y1 = kpt[1] - radius
            y2 = kpt[1] + radius
            draw.ellipse([x1, y1, x2, y2], fill=colors, outline=None, width=0)

        if connectivity:
            for connection in connectivity:
                start_pt_x = kpt_inst[connection[0]][0]
                start_pt_y = kpt_inst[connection[0]][1]

                end_pt_x = kpt_inst[connection[1]][0]
                end_pt_y = kpt_inst[connection[1]][1]

                draw.line(
                    ((start_pt_x, start_pt_y), (end_pt_x, end_pt_y)),
                    width=width,
                )

    return torch.from_numpy(np.array(img_to_draw)).permute(2, 0, 1).to(dtype=torch.uint8)
```

```python
def _generate_color_palette(num_masks: int):
    palette = torch.tensor([2 ** 25 - 1, 2 ** 15 - 1, 2 ** 21 - 1])
    return [tuple((i * palette) % 255) for i in range(num_masks)]


@no_type_check
def _log_api_usage_once(obj: str) -> None:  # type: ignore
    if torch.jit.is_scripting() or torch.jit.is_tracing():
        return
    # NOTE: obj can be an object as well, but mocking it here to be
    # only a string to appease torchscript
    if isinstance(obj, str):
        torch._C._log_api_usage_once(obj)
    else:
        torch._C._log_api_usage_once(f"{obj.__module__}.{obj.__class__.__name__}")
```

3. Source Code Walkthrough

3.1 Importing Modules

```python
from typing import Type, Any, Callable, Union, List, Optional

import torch
import torch.nn as nn
from torch import Tensor

from _internally_replaced_utils import load_state_dict_from_url
from utils import _log_api_usage_once
```

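3.2 API entry point: _resnet()

This function serves two purposes:

(1) It optionally loads pretrained weights, depending on the pretrained argument.

(2) It builds ResNet networks of different depths from the block type and the per-stage block counts it is given.

3.2.1 Loading a Pretrained Model

(1) Introduction to torchvision.models

torchvision is PyTorch's computer-vision toolkit. It has four main parts:

- torchvision.datasets: dataset loading, including online download

- torchvision.models: model definitions and pretrained weights

- torchvision.utils: utilities such as building image grids

- torchvision.transforms: image preprocessing

For a more detailed introduction, see the article "PyTorch Basics (Complete)" (Pytorch基础(全)).

torchvision.models provides many classic architectures, including AlexNet, DenseNet, ResNet, GoogLeNet, MobileNet, ShuffleNet, SqueezeNet, and VGG, together with pretrained weights.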

```python
import torchvision

# torchvision provides ResNet models of several depths and widths:
# resnet18, resnet34, resnet50, resnet101, resnet152, wide_resnet50_2, ...
# The pretrained argument controls whether pretrained weights initialise the model. Default: False.
model = torchvision.models.resnet50(pretrained=True)
model = torchvision.models.resnet50(pretrained=False)  # equivalent to torchvision.models.resnet50()
```

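(2) Downloading pretrained models online

resnet.py defines models of several depths, and the pretrained weights (.pth files) for each of them can be downloaded online.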

```python
__all__ = [
    "ResNet",
    "resnet18",
    "resnet34",
    "resnet50",
    "resnet101",
    "resnet152",
    "resnext50_32x4d",
    "resnext101_32x8d",
    "wide_resnet50_2",
    "wide_resnet101_2",
]


model_urls = {
    "resnet18": "https://download.pytorch.org/models/resnet18-f37072fd.pth",
    "resnet34": "https://download.pytorch.org/models/resnet34-b627a593.pth",
    "resnet50": "https://download.pytorch.org/models/resnet50-0676ba61.pth",
    "resnet101": "https://download.pytorch.org/models/resnet101-63fe2227.pth",
    "resnet152": "https://download.pytorch.org/models/resnet152-394f9c45.pth",
    "resnext50_32x4d": "https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth",
    "resnext101_32x8d": "https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth",
    "wide_resnet50_2": "https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth",
    "wide_resnet101_2": "https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth",
}
```
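Each checkpoint file name ends in a short hex string. torch.hub.load_state_dict_from_url has a check_hash option that expects this convention, where the suffix is the leading digits of the file's SHA-256 hash. The sketch below only extracts that suffix from a URL; the helper name checkpoint_hash_prefix is made up for this illustration and is not part of resnet.py or torch.hub.

```python
import re
from urllib.parse import urlparse

# Hypothetical helper for this sketch; not part of resnet.py or torch.hub.
_HASH_PATTERN = re.compile(r"-([a-f0-9]+)\.pth$")


def checkpoint_hash_prefix(url: str) -> str:
    """Return the hex suffix embedded in a checkpoint file name."""
    filename = urlparse(url).path.rsplit("/", 1)[-1]
    match = _HASH_PATTERN.search(filename)
    if match is None:
        raise ValueError(f"no hash suffix found in {filename!r}")
    return match.group(1)


url = "https://download.pytorch.org/models/resnet18-f37072fd.pth"
prefix = checkpoint_hash_prefix(url)
print(prefix)
```

Comparing this prefix with the SHA-256 digest of a downloaded file is a quick way to detect a truncated or corrupted download.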

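3.2.2 The ResNet Network (Core)

A ResNet consists of three parts: the head (input stem), the body (convolutional stages), and the tail (output).

- (1) The body consists of four stages (layer1 to layer4). The stages share the same structure but contain different numbers of blocks.

- (2) Depending on the network depth, one of two building blocks is used: the BasicBlock class or the Bottleneck class.

- The former builds ResNet18 and ResNet34; the latter builds ResNet50 and deeper networks.

- (3) The model mainly uses two kinds of convolution layers: 3×3 convolutions and 1×1 convolutions.

- The two helper functions conv3x3() and conv1x1() each return a torch.nn.Conv2d layer. The main branch of ResNet18/34 blocks uses only 3×3 convolutions, while ResNet50 and deeper networks combine 3×3 and 1×1 convolutions.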
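The numbers in the model names follow from the per-stage block counts passed to _resnet() in resnet.py. As a small check, the sketch below counts the weighted layers by the usual convention: the stem convolution, the convolutions inside the blocks, and the final fully connected layer. The 1×1 convolutions on the shortcut branch are not counted. The names CONFIGS and weighted_layer_count are introduced here for illustration only.

```python
# Block counts per stage, as passed to _resnet() in resnet.py.
# The second element is the number of conv layers inside one block:
# BasicBlock has 2 (3x3, 3x3); Bottleneck has 3 (1x1, 3x3, 1x1).
CONFIGS = {
    "resnet18": ([2, 2, 2, 2], 2),
    "resnet34": ([3, 4, 6, 3], 2),
    "resnet50": ([3, 4, 6, 3], 3),
    "resnet101": ([3, 4, 23, 3], 3),
    "resnet152": ([3, 8, 36, 3], 3),
}


def weighted_layer_count(blocks_per_stage, convs_per_block):
    """Stem conv + all convs inside the blocks + final fully connected layer."""
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


depths = {name: weighted_layer_count(*cfg) for name, cfg in CONFIGS.items()}
print(depths)
```

Note that ResNet34 and ResNet50 share the same block counts [3, 4, 6, 3]; the difference in depth comes entirely from the block type.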

ResNet类继承于torch.nn.Module,主要包括三大部分:__ init __()、_make_layer()、_forward_impl()。

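- `__init__()`: defines the layers used by the model: the convolution layer, BN layer, ReLU activation, max-pooling layer, the four stages, the global average pooling layer, and the fully connected layer. The model contains no softmax layer; it outputs raw logits, and the CrossEntropyLoss used in the training script applies log-softmax internally.

- `_make_layer()`: called from `__init__()`; builds one stage. It is called four times in a row, each time with the number of blocks for that stage, which yields the four stages. In principle, the ResNet class can therefore define a ResNet of arbitrary depth.

- `_forward_impl()`: defines the forward pass.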
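To make the role of the layers argument concrete, here is a small sketch that lists the block type and per-stage block counts that torchvision's factory functions pass to the ResNet class, and checks that they reproduce the depth in each model name. CONFIGS, CONVS_PER_BLOCK, and nominal_depth are names chosen for this illustration only.

```python
# Block type and per-stage block counts of the standard variants
# (the values torchvision's resnet18() ... resnet152() pass to ResNet).
CONFIGS = {
    "resnet18": ("BasicBlock", [2, 2, 2, 2]),
    "resnet34": ("BasicBlock", [3, 4, 6, 3]),
    "resnet50": ("Bottleneck", [3, 4, 6, 3]),
    "resnet101": ("Bottleneck", [3, 4, 23, 3]),
    "resnet152": ("Bottleneck", [3, 8, 36, 3]),
}

# A BasicBlock has two conv layers on its residual branch, a Bottleneck has three.
CONVS_PER_BLOCK = {"BasicBlock": 2, "Bottleneck": 3}


def nominal_depth(block: str, layers: list) -> int:
    """Count weighted layers: stem conv + all block convs + final fc."""
    return 1 + CONVS_PER_BLOCK[block] * sum(layers) + 1


for name, (block, layers) in CONFIGS.items():
    print(name, block, layers, nominal_depth(block, layers))
```

The downsample convolutions on the shortcut branch are not counted here, which matches how the depth in the model names is usually derived.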

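Question 1: What are the principle and purpose of the three convolutions (1×1, 3×3, 1×1) in the ResNet bottleneck module?

Their main purpose is to reduce the number of parameters while improving computational efficiency.

- The first 1×1 convolution compresses the channel dimension of the input feature map, which reduces computation and memory cost;

- The 3×3 convolution performs feature extraction on the narrower feature map (the BN and ReLU that follow it provide the non-linearity);

- The second 1×1 convolution expands the channel count to planes × expansion (four times the bottleneck width), so that the result can be added to the shortcut branch, which carries information from earlier layers to later ones.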
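The parameter saving can be checked with simple arithmetic. The sketch below compares a bottleneck branch (256 → 64 → 64 → 256 channels, as in the later blocks of the first stage of ResNet50) with two 3×3 convolutions that stay at 256 channels. The helper conv_params is a name introduced here; BN parameters are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Number of weights in a bias-free k x k convolution with groups=1."""
    return c_in * c_out * k * k


channels, width = 256, 64  # example sizes, assumed for illustration

bottleneck = (
    conv_params(channels, width, 1)      # 1x1: compress 256 -> 64
    + conv_params(width, width, 3)       # 3x3: work on the narrow width
    + conv_params(width, channels, 1)    # 1x1: expand 64 -> 256
)
plain = 2 * conv_params(channels, channels, 3)  # two 3x3 convs at full width

print(bottleneck)          # 69632
print(plain)               # 1179648
print(plain / bottleneck)  # roughly 17x more weights without the bottleneck
```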

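Question 2: What is a 1×1 convolution, and what is it used for?

A 1×1 convolution is a convolution whose kernel size is 1×1. It compresses or expands the number of channels of the input feature map, that is, it changes the depth of the feature map while leaving the spatial size unchanged (for stride 1). Specifically, a 1×1 convolution serves two purposes:

- Compression: a 1×1 convolution reduces the number of channels of the input feature map, which lowers computation and memory cost. This is commonly used to reduce the number of network parameters while keeping classification performance high.

- Expansion: a 1×1 convolution increases the number of channels of the input feature map. This is commonly used to increase the representational capacity of the network and thereby improve its performance.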
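A short sketch of both uses, assuming PyTorch is installed. It also checks that a 1×1 convolution is the same linear map over channels applied independently at every pixel. The tensor sizes and the names squeeze_conv and expand_conv are arbitrary choices for this example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(1, 256, 8, 8)  # (batch, channels, height, width)

squeeze_conv = nn.Conv2d(256, 64, kernel_size=1, bias=False)  # compression
expand_conv = nn.Conv2d(64, 256, kernel_size=1, bias=False)   # expansion

y = squeeze_conv(x)
z = expand_conv(y)
print(y.shape, z.shape)  # only the channel dimension changes

# The weight has shape (out, in, 1, 1); dropping the two trailing 1s gives a
# plain matrix that is multiplied with the channel vector at every pixel.
w = squeeze_conv.weight.view(64, 256)
y_ref = torch.einsum("oc,bchw->bohw", w, x)
print(torch.allclose(y, y_ref, atol=1e-4))
```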

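Question 3: ResNet defines downsample. What does it do, and when is it used?

- In this code, downsample is the module applied to the shortcut (identity) branch of a residual block. It makes the shortcut tensor match the shape of the residual branch, so that out += identity is valid when a block changes the spatial size or the channel count.

- `_make_layer()` creates it only for the first block of a stage, and only when stride != 1 or self.inplanes != planes * block.expansion. It is an nn.Sequential made of a 1×1 convolution with the given stride followed by a normalization layer. A shortcut could in principle also be downsampled with pooling, but this implementation uses the strided 1×1 convolution.

- Because layer2, layer3, and layer4 each halve the spatial size (unless stride is replaced with dilation), the input resolution has to be large enough for the feature map not to shrink too far. If the feature map becomes too small, training quality suffers, so the input size and the network structure need to be chosen together.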
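The condition in `_make_layer()` can be traced by hand for both block types. The sketch below replays it for the four stages with the default settings (no dilation); needs_downsample and trace are helper names introduced for this illustration.

```python
def needs_downsample(inplanes: int, planes: int, expansion: int, stride: int) -> bool:
    """Same condition as in ResNet._make_layer()."""
    return stride != 1 or inplanes != planes * expansion


def trace(expansion: int) -> list:
    """For each stage, report whether its first block gets a downsample shortcut."""
    inplanes, result = 64, []
    for planes, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        result.append(needs_downsample(inplanes, planes, expansion, stride))
        inplanes = planes * expansion  # updated after the first block of the stage
    return result


print("BasicBlock:", trace(expansion=1))  # [False, True, True, True]
print("Bottleneck:", trace(expansion=4))  # [True, True, True, True]
```

With Bottleneck, even layer1 needs a projection: the stride is 1, but the channel count grows from 64 to 256.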

class ResNet(nn.Module):
    def __init__(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        layers: List[int],
        num_classes: int = 1000,
        zero_init_residual: bool = False,
        groups: int = 1,
        width_per_group: int = 64,
        replace_stride_with_dilation: Optional[List[bool]] = None,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        _log_api_usage_once(self)
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError(
                "replace_stride_with_dilation should be None "
                f"or a 3-element tuple, got {replace_stride_with_dilation}"
            )
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Zero-initialize the last BN in each residual branch,
        # so that the residual branch starts with zeros, and each residual block behaves like an identity.
        # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

    def _make_layer(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        planes: int,
        blocks: int,
        stride: int = 1,
        dilate: bool = False,
    ) -> nn.Sequential:
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(
            block(
                self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer
            )
        )
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(
                block(
                    self.inplanes,
                    planes,
                    groups=self.groups,
                    base_width=self.base_width,
                    dilation=self.dilation,
                    norm_layer=norm_layer,
                )
            )

        return nn.Sequential(*layers)

    def _forward_impl(self, x: Tensor) -> Tensor:
        # See note [TorchScript super()]
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)

        return x

    def forward(self, x: Tensor) -> Tensor:
        return self._forward_impl(x)
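To follow what `_forward_impl()` does to the spatial size, the sketch below applies the standard output-size formula, floor((n + 2p - k) / s) + 1, to the stem and the four stages, assuming the default strides and no dilation. window_out and trace_sizes are helper names made up for this example.

```python
def window_out(n: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one axis for a conv or max-pool window (floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_sizes(n: int) -> dict:
    """Spatial size after each step of _forward_impl() for an n x n input."""
    sizes = {"input": n}
    n = window_out(n, kernel=7, stride=2, padding=3)
    sizes["conv1"] = n
    n = window_out(n, kernel=3, stride=2, padding=1)
    sizes["maxpool"] = n
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        # Only the first block of a stage is strided; its 3x3 conv uses padding=1.
        n = window_out(n, kernel=3, stride=stride, padding=1)
        sizes[name] = n
    sizes["avgpool"] = 1  # AdaptiveAvgPool2d((1, 1)) always outputs 1 x 1
    return sizes


print(trace_sizes(224))  # layer4 ends at 7 x 7
print(trace_sizes(32))   # layer4 already ends at 1 x 1
```

A native 32×32 CIFAR-10 image is reduced to a single pixel by layer4, which is one plausible reason for resizing inputs to 224×224 before feeding them to an unmodified ResNet.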

(1) Basic module: BasicBlock

class BasicBlock(nn.Module):
    expansion: int = 1

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError("BasicBlock only supports groups=1 and base_width=64")
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out

(2) Basic module: Bottleneck

class Bottleneck(nn.Module):
    # Bottleneck in torchvision places the stride for downsampling at 3x3 convolution(self.conv2)
    # while original implementation places the stride at the first 1x1 convolution(self.conv1)
    # according to "Deep residual learning for image recognition" https://arxiv.org/abs/1512.03385.
    # This variant is also known as ResNet V1.5 and improves accuracy according to
    # https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch.

    expansion: int = 4

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.0)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv1x1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3x3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1x1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out

(3) 3×3 convolution + 1×1 convolution

def conv3x3(in_planes: int, out_planes: int, stride: int = 1, groups: int = 1, dilation: int = 1) -> nn.Conv2d:
    """3x3 convolution with padding"""
    return nn.Conv2d(
        in_planes,
        out_planes,
        kernel_size=3,
        stride=stride,
        padding=dilation,
        groups=groups,
        bias=False,
        dilation=dilation,
    )


def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:
    """1x1 convolution"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)
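conv3x3() sets padding equal to dilation. A quick way to see why is the output-size formula documented for nn.Conv2d, floor((n + 2p - d(k - 1) - 1) / s) + 1: with k = 3 and p = d the dilation terms cancel, so the output size depends only on the stride. The sketch below checks this numerically; conv2d_out is a helper written for this example.

```python
def conv2d_out(n: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output length along one axis, following the formula in the nn.Conv2d docs."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


n = 56  # example input size
for d in (1, 2, 4):
    same = conv2d_out(n, kernel=3, stride=1, padding=d, dilation=d)
    halved = conv2d_out(n, kernel=3, stride=2, padding=d, dilation=d)
    print(f"dilation={d}: stride 1 -> {same}, stride 2 -> {halved}")
```

For every dilation the stride-1 output stays at 56 and the stride-2 output is 28, which is what lets replace_stride_with_dilation swap a stride for a dilation without breaking the shapes inside a stage.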

4. Model in practice (printing the number of weight parameters + printing the network)

import resnet

if __name__ == '__main__':
    # (1) Initialize the models
    res1 = resnet.resnet18()  # 11689512 parameters
    res2 = resnet.resnet34()  # 21797672 parameters
    res3 = resnet.resnet50()  # 25557032 parameters
    res4 = resnet.resnet101()  # 44549160 parameters
    res5 = resnet.resnet152()  # 60192808 parameters
    res6 = resnet.resnext50_32x4d()  # 25028904 parameters
    res7 = resnet.resnext101_32x8d()  # 88791336 parameters
    res8 = resnet.wide_resnet50_2()  # 68883240 parameters
    res9 = resnet.wide_resnet101_2()  # 126886696 parameters

    # (2) Print the total number of weight parameters of each model
    print("model has {} parameters in total".format(sum(x.numel() for x in res1.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res2.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res3.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res4.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res5.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res6.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res7.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res8.parameters())))
    print("model has {} parameters in total".format(sum(x.numel() for x in res9.parameters())))

    # (3) Print the model
    # print(res1)
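The counts above are for the default num_classes=1000. As a rough check of how much of that sits in the final fully connected layer, the sketch below recomputes the ResNet18 total for a 10-class head, as would be used for CIFAR-10, with plain arithmetic. fc_params is a helper introduced here; the 10-class head is an assumption, not something the listing above builds.

```python
def fc_params(in_features: int, num_classes: int) -> int:
    """Weights plus biases of an nn.Linear(in_features, num_classes)."""
    return in_features * num_classes + num_classes


RESNET18_TOTAL_1000 = 11_689_512  # value reported for resnet18() above
in_features = 512 * 1             # 512 * block.expansion, BasicBlock.expansion == 1

fc_1000 = fc_params(in_features, 1000)
fc_10 = fc_params(in_features, 10)
total_10 = RESNET18_TOTAL_1000 - fc_1000 + fc_10

print(fc_1000, fc_10, total_10)  # 513000 5130 11181642
```

Passing num_classes=10 through the factory function, for example resnet.resnet18(num_classes=10), should give a model whose parameter count matches total_10, since the keyword is forwarded to the ResNet constructor.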

5. Project in practice (CIFAR-10 classification)

Link: https://pan.baidu.com/s/1XFKKea9ioFyFuZXShaFlJQ?pwd=ewq4

Extraction code: ewq4

import os

import matplotlib.pyplot as plt
import torch
from torch.utils.data import DataLoader
from torchvision import datasets, transforms, utils

import resnet


def main():
    ##################################################
    # (1) Hyperparameters
    batch_size = 64
    epochs = 10
    learning_rate = 0.01

    ##################################################
    # (2) Training setup
    # res1 = resnet.resnet18()
    # res2 = resnet.resnet34()
    # res3 = resnet.resnet50()
    # res4 = resnet.resnet101()
    # res5 = resnet.resnet152()
    # print(res1)
    res = resnet.resnet18()  # initialize the model (default num_classes=1000; CIFAR-10 only uses the first 10 outputs)
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # available device
    model = res.to(device)  # move the model to the device
    cost = torch.nn.CrossEntropyLoss()  # cross-entropy loss
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)  # Adam optimizer

    ##################################################
    # (3) Preprocessing + download + data loading
    model_dir = os.path.join(os.getcwd(), 'saved_models' + os.sep)  # where checkpoints are saved (do not remove os.sep)
    os.makedirs(model_dir, exist_ok=True)  # torch.save does not create missing directories
    transform = transforms.Compose(
        [transforms.ToTensor(), transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),
         transforms.Resize((224, 224))])
    training_data = datasets.CIFAR10(root="data_dir", train=True, download=True, transform=transform)
    testing_data = datasets.CIFAR10(root="data_dir", train=False, download=True, transform=transform)
    train_data = DataLoader(dataset=training_data, batch_size=batch_size, shuffle=True, drop_last=True)
    # Evaluate on every test sample: no shuffling, keep the last partial batch
    test_data = DataLoader(dataset=testing_data, batch_size=batch_size, shuffle=False, drop_last=False)
    # classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
    print("train_data length: ", len(train_data))
    print("test_data length: ", len(test_data))

    ##################################################
    # (4) Training + testing (test once after every training epoch)
    print("start training", '*' * 50)
    print("Net has {} parameters in total.".format(sum(x.numel() for x in model.parameters())))  # total parameter count
    losses = []
    for epoch in range(epochs):
        ##################################################
        # (5) Visualize one batch of images (manual de-normalization + plotting); redundant, can be removed
        images, labels = next(iter(train_data))  # one batch of images and labels
        print(images.shape)  # shape of the whole batch
        img = utils.make_grid(images)
        img = img.numpy().transpose(1, 2, 0)  # to numpy, reorder axes from (C, H, W) to (H, W, C)
        mean = [0.5, 0.5, 0.5]  # mean
        std = [0.5, 0.5, 0.5]  # standard deviation
        img = img * std + mean  # undo the normalization by hand
        # plt.imshow(img)  # draw the batch
        # plt.show()  # show the batch
        # print("batch_size labels: ", ''.join('%8s' % classes[labels[j]] for j in range(batch_size)))  # labels of the batch

        ##################################################
        # (6) Training
        model.train()
        running_loss, running_correct = 0.0, 0
        num_batch_size, num_train_samples = 0, 0
        for x_train, y_train in train_data:  # iterate over the training data
            x_train, y_train = x_train.to(device), y_train.to(device)  # move data to the device
            outputs = model(x_train)  # forward pass
            _, pred = torch.max(outputs.data, 1)  # index of the largest logit = predicted class
            optimizer.zero_grad()  # reset gradients
            loss = cost(outputs, y_train)  # compute the loss (mean over the batch)
            loss.backward()  # backward pass
            optimizer.step()  # update the weights
            losses.append(loss.item())  # store as a Python float for plotting (also works on GPU)
            running_loss += loss.item()  # accumulate the loss
            running_correct += torch.sum(pred == y_train).item()  # accumulate correct predictions
            num_batch_size += 1
            num_train_samples += y_train.size(0)
            print("[epoch=%3d/%3d, batch=%3d/%3d], [train_loss=%3f, train_correct=%3f]"
                  % (epoch + 1, epochs, num_batch_size, len(train_data),
                     running_loss / num_batch_size, running_correct / num_train_samples))

        ##################################################
        # (7) Testing
        model.eval()
        testing_loss, testing_correct = 0.0, 0
        num_test_batches, num_test_samples = 0, 0
        with torch.no_grad():  # no gradients are needed for evaluation
            for X_test, y_test in test_data:  # iterate over the test data
                X_test, y_test = X_test.to(device), y_test.to(device)  # move data to the device
                outputs = model(X_test)  # forward pass
                _, pred = torch.max(outputs.data, 1)  # index of the largest logit = predicted class
                loss = cost(outputs, y_test)  # compute the loss
                testing_loss += loss.item()  # accumulate the loss
                testing_correct += torch.sum(pred == y_test).item()  # accumulate correct predictions
                num_test_batches += 1
                num_test_samples += y_test.size(0)

        # Per-epoch metrics: loss is the mean of the per-batch losses, accuracy is over the samples actually seen
        train_loss = running_loss / num_batch_size
        train_acc = 100 * running_correct / num_train_samples
        test_loss = testing_loss / num_test_batches
        test_acc = 100 * testing_correct / num_test_samples
        print("Train Loss is:{:.4f}, Train Accuracy is:{:.4f}%, Test Loss is:{:.4f} Test Accuracy is:{:.4f}%".format(
            train_loss, train_acc, test_loss, test_acc))

        ##################################################
        # (8) Save the model weights (every epoch)
        torch.save(model.state_dict(), model_dir + "Epoch%d_TrainLoss%3f_ACC%3f_TestLoss%3f_ACC%3f.pth"
                   % (epoch + 1, train_loss, train_acc, test_loss, test_acc))

        ##################################################
        # (9) Plot the loss curve (every epoch)
        plt.plot(losses)
        plt.xlabel('Steps')  # x-axis label
        plt.ylabel('Loss')  # y-axis label
        plt.show()


if __name__ == '__main__':
    main()
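The test step of the script can also be factored into a small helper. The following is a minimal sketch, not the author's code: evaluate is a hypothetical function name, and the dataset and model are tiny synthetic stand-ins so that the example runs without downloading CIFAR-10. It weights each batch loss by its batch size, so the result is a true per-sample mean even when the last batch is smaller.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model, loader, criterion, device):
    """Return (mean loss per sample, accuracy) over every sample in loader."""
    model.eval()
    total_loss, correct, seen = 0.0, 0, 0
    for x, y in loader:
        x, y = x.to(device), y.to(device)
        logits = model(x)
        # criterion returns the batch mean, so weight it by the batch size
        total_loss += criterion(logits, y).item() * y.size(0)
        correct += (logits.argmax(dim=1) == y).sum().item()
        seen += y.size(0)
    return total_loss / seen, correct / seen


# Tiny synthetic stand-in for a real dataset and network (illustration only).
torch.manual_seed(0)
data = TensorDataset(torch.randn(10, 4), torch.randint(0, 3, (10,)))
loader = DataLoader(data, batch_size=4)  # the last batch holds only 2 samples
model = nn.Linear(4, 3)

loss, acc = evaluate(model, loader, nn.CrossEntropyLoss(), torch.device("cpu"))
print(f"loss={loss:.4f} acc={acc:.2f}")
```

In the training script, a call such as evaluate(model, test_data, cost, device) could replace the body of step (7).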

