YOLOv5 directory structure

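├── data: holds configuration files. The dataset YAML files set the paths of the training, validation and test sets, together with the number of object classes and the class names; the folder also contains hyperparameter files and a few official test images. To train on your own dataset, edit one of these YAML files. Storing the dataset itself under this path is not recommended; place it at the same level as the yolov5 project directory instead.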

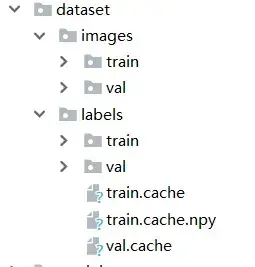

├── dataset: holds your own dataset, split into two parts, images and labels.

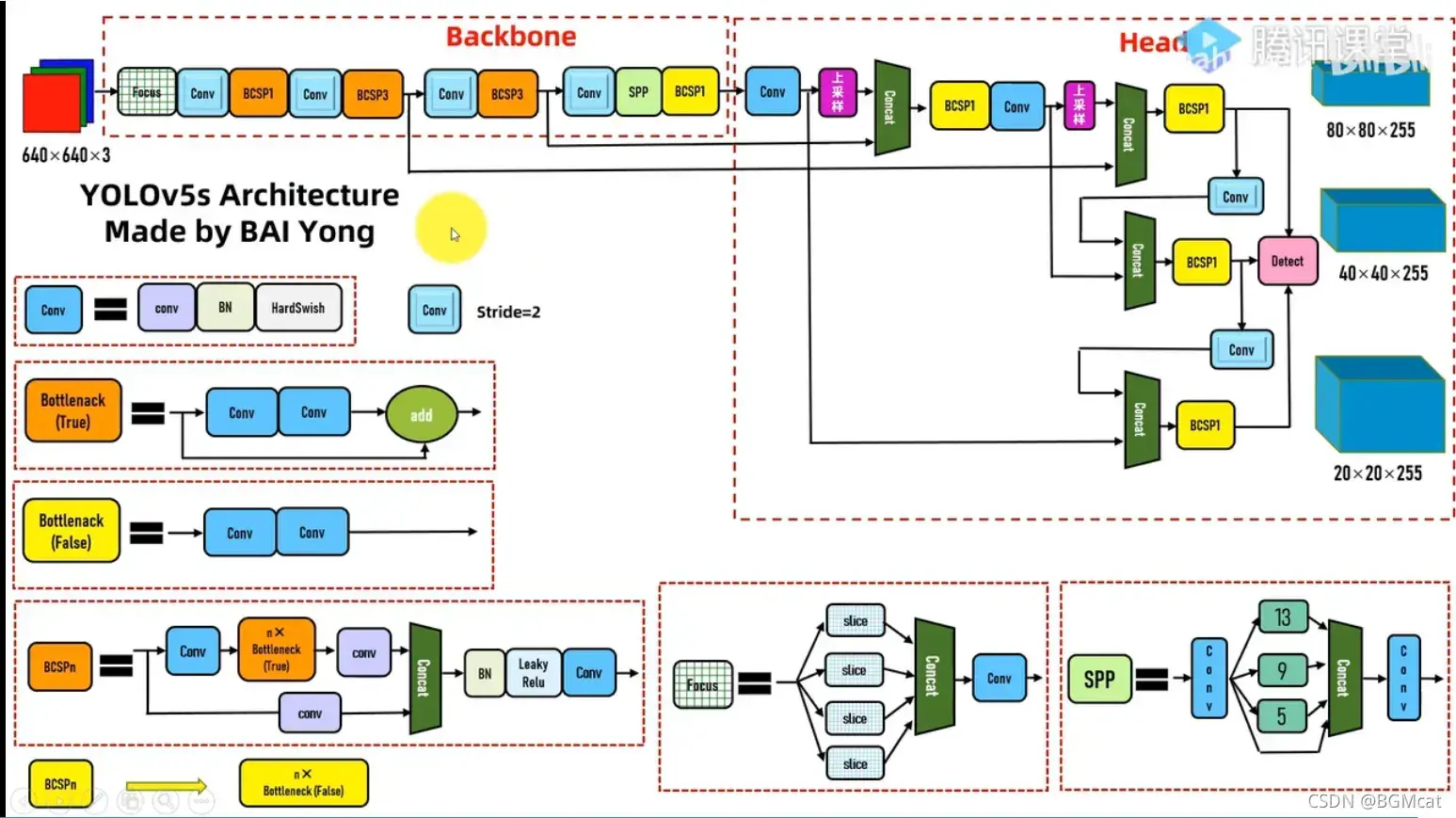

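├── models: configuration files and functions that build the network. It covers the four model sizes of the project: s, m, l and x. As the names suggest, they run from smallest to largest: detection speed drops from s to x while accuracy rises, so you cannot have both. To train on your own dataset, edit the matching YAML file in this folder.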

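├── utils: utility functions, including the loss, metrics and plotting functions.

├── weights: holds trained weight files (.pt).

├── detect.py: runs object detection with trained weights on images, videos and webcam streams.

├── train.py: script for training on your own dataset.

├── test.py: script for evaluating the training results.

├── hubconf.py: PyTorch Hub related code.

├── sotabench.py: test script for the COCO dataset.

├── tutorial.ipynb: Jupyter notebook demo.

├── requirements.txt: a text file listing the packages, with versions, that the yolov5 project depends on; it can be used to install the matching versions.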

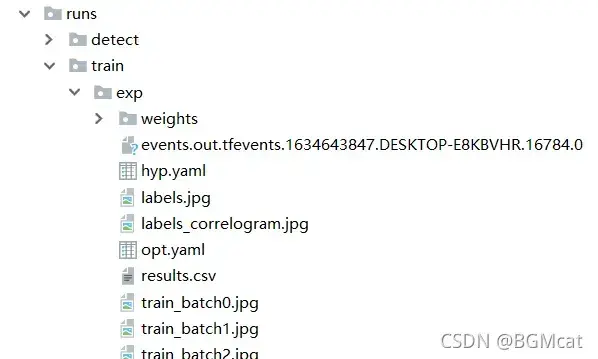

├── runs: log folder; stores the output of every training run, including weight files, training data and histograms.

├── LICENSE: license file.

That covers the overall layout of the yolov5 project code. Training and testing on your own dataset mostly relies on the files listed above.

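data folder

- YAML configuration files for several datasets, such as coco, coco128 and Pascal VOC

- hyps: hyperparameter configuration files, including the fine-tuning settings

- scripts: shell scripts for downloading datasets

When training on your own dataset, change the paths in the configuration file to the directories where your dataset lives. It is best to copy an existing file and rename the copy rather than edit the original.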

train: E:/project/yolov5/yolov5-master/dataset/images/train # train images

val: E:/project/yolov5/yolov5-master/dataset/images/val # val images
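A configuration file like this can also be generated from a script. The sketch below is only an illustration: the helper name `build_dataset_config`, the example root path and the class names are assumptions, and it writes only the `train`, `val`, `nc` and `names` entries described above.

```python
from pathlib import PurePosixPath


def build_dataset_config(root, class_names):
    """Return the text of a minimal dataset YAML file (hypothetical helper)."""
    root = PurePosixPath(root)
    lines = [
        f"train: {root / 'images' / 'train'}  # train images",
        f"val: {root / 'images' / 'val'}  # val images",
        f"nc: {len(class_names)}  # number of classes",
        f"names: {list(class_names)}  # class names",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example values only; replace them with your own dataset root and classes.
    print(build_dataset_config("../dataset", ["cat", "dog"]))
```

Saving the returned text under a new file name keeps the original configuration files untouched.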

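dataset folder

Holds your own dataset. Keep images and labels in separate folders, and split each of them into train and val subfolders.

The .cache files are caches of the loaded dataset information, kept so that the next run can load it faster.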
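The folder layout described above can be created with a few lines of standard-library Python. This is a minimal sketch: the function name `create_dataset_layout` is hypothetical, and the folder names (`images`, `labels`, `train`, `val`) follow the description in this section.

```python
import tempfile
from pathlib import Path


def create_dataset_layout(root):
    """Create images/ and labels/ folders, each with train/ and val/ inside."""
    root = Path(root)
    created = []
    for kind in ("images", "labels"):
        for split in ("train", "val"):
            folder = root / kind / split
            folder.mkdir(parents=True, exist_ok=True)
            created.append(folder)
    return created


if __name__ == "__main__":
    # Build the layout in a throwaway directory and list what was created.
    with tempfile.TemporaryDirectory() as tmp:
        for folder in create_dataset_layout(Path(tmp) / "dataset"):
            print(folder.relative_to(tmp))
```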

models folder

common.py: network building blocks

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Common modules

"""

import logging

import math

import warnings

from copy import copy

from pathlib import Path

import numpy as np

import pandas as pd

import requests

import torch

import torch.nn as nn

from PIL import Image

from torch.cuda import amp

from utils.datasets import exif_transpose, letterbox

from utils.general import (colorstr, increment_path, make_divisible, non_max_suppression, save_one_box,

scale_coords, xyxy2xywh)

from utils.plots import Annotator, colors

from utils.torch_utils import time_sync

LOGGER = logging.getLogger(__name__)

# Automatic padding for 'same' convolution or 'same' pooling

def autopad(k, p=None): # kernel, padding

# Pad to 'same'

if p is None:

p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-pad

return p
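To see why half the kernel size gives 'same' padding, it helps to plug the result into the usual convolution output-size formula. The sketch below repeats `autopad` from the listing and adds a hypothetical helper, `conv_output_size`, which assumes dilation 1.

```python
def autopad(k, p=None):
    """Same rule as the listing: default padding is half the kernel size."""
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


def conv_output_size(n, k, s=1, p=None):
    """Output length of a convolution along one axis (dilation 1)."""
    p = autopad(k, p)
    return (n + 2 * p - k) // s + 1


if __name__ == "__main__":
    print(conv_output_size(640, 3, 1))  # odd kernel, stride 1: size is kept
    print(conv_output_size(640, 3, 2))  # stride 2: size is halved
    print(autopad((1, 3)))              # per-axis padding for a 1 x 3 kernel
```

The size is preserved exactly only for odd kernels at stride 1; with an even kernel, `k // 2` padding makes the output one element longer.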

# Standard convolution: Conv + BN + activation (SiLU by default in this listing)

class Conv(nn.Module):

# Standard convolution

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groups

super().__init__()

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

def forward(self, x): # forward pass

return self.act(self.bn(self.conv(x)))

def forward_fuse(self, x):

return self.act(self.conv(x))

# Depth-wise convolution: a Conv whose group count is gcd(c1, c2)

class DWConv(Conv):

# Depth-wise convolution class

def __init__(self, c1, c2, k=1, s=1, act=True): # ch_in, ch_out, kernel, stride, activation

super().__init__(c1, c2, k, s, g=math.gcd(c1, c2), act=act)
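The effect of `g=math.gcd(c1, c2)` is easiest to see by counting weights. The sketch below is a rough illustration: `conv_weight_count` and `dwconv_groups` are hypothetical helpers, and the count ignores the batch-norm parameters.

```python
import math


def conv_weight_count(c1, c2, k, groups=1):
    """Number of weights in a bias-free k x k convolution with the given groups."""
    assert c1 % groups == 0 and c2 % groups == 0, "channels must divide by groups"
    return (c1 // groups) * c2 * k * k


def dwconv_groups(c1, c2):
    """Group count chosen by DWConv in the listing."""
    return math.gcd(c1, c2)


if __name__ == "__main__":
    c1, c2, k = 64, 64, 3
    print("standard:", conv_weight_count(c1, c2, k))
    print("depth-wise:", conv_weight_count(c1, c2, k, dwconv_groups(c1, c2)))
```

When `c1 == c2` the group count equals the channel count, so every channel is filtered on its own and the weight count drops by a factor of `c1`.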

class TransformerLayer(nn.Module):

# Transformer layer https://arxiv.org/abs/2010.11929 (LayerNorm layers removed for better performance)

def __init__(self, c, num_heads):

super().__init__()

self.q = nn.Linear(c, c, bias=False)

self.k = nn.Linear(c, c, bias=False)

self.v = nn.Linear(c, c, bias=False)

self.ma = nn.MultiheadAttention(embed_dim=c, num_heads=num_heads)

self.fc1 = nn.Linear(c, c, bias=False)

self.fc2 = nn.Linear(c, c, bias=False)

def forward(self, x):

x = self.ma(self.q(x), self.k(x), self.v(x))[0] + x

x = self.fc2(self.fc1(x)) + x

return x

class TransformerBlock(nn.Module):

# Vision Transformer https://arxiv.org/abs/2010.11929

def __init__(self, c1, c2, num_heads, num_layers):

super().__init__()

self.conv = None

if c1 != c2:

self.conv = Conv(c1, c2)

self.linear = nn.Linear(c2, c2) # learnable position embedding

self.tr = nn.Sequential(*[TransformerLayer(c2, num_heads) for _ in range(num_layers)])

self.c2 = c2

def forward(self, x):

if self.conv is not None:

x = self.conv(x)

b, _, w, h = x.shape

p = x.flatten(2).unsqueeze(0).transpose(0, 3).squeeze(3)

return self.tr(p + self.linear(p)).unsqueeze(3).transpose(0, 3).reshape(b, self.c2, w, h)

class Bottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5): # ch_in, ch_out, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

def forward(self, x):

return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))

class BottleneckCSP(nn.Module):

# CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworks

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)

self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)

self.cv4 = Conv(2 * c_, c2, 1, 1)

self.bn = nn.BatchNorm2d(2 * c_) # applied to cat(cv2, cv3)

self.act = nn.LeakyReLU(0.1, inplace=True)

# * unpacks the list into individual elements

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

def forward(self, x):

y1 = self.cv3(self.m(self.cv1(x)))

y2 = self.cv2(x)

return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))

class C3(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

def forward(self, x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))

class C3TR(C3):

# C3 module with TransformerBlock()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e)

self.m = TransformerBlock(c_, c_, 4, n)

class C3SPP(C3):

# C3 module with SPP()

def __init__(self, c1, c2, k=(5, 9, 13), n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e)

self.m = SPP(c_, c_, k)

class C3Ghost(C3):

# C3 module with GhostBottleneck()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*[GhostBottleneck(c_, c_) for _ in range(n)])

# Spatial pyramid pooling

class SPP(nn.Module):

# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729

def __init__(self, c1, c2, k=(5, 9, 13)):

super().__init__()

c_ = c1 // 2 # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)

self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning

return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))

class SPPF(nn.Module):

# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocher

def __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))

super().__init__()

c_ = c1 // 2 # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_ * 4, c2, 1, 1)

self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning

y1 = self.m(x)

y2 = self.m(y1)

return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))
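The comment in `SPPF` says that chaining one 5x5 pool three times is equivalent to `SPP(k=(5, 9, 13))`. The pure-Python sketch below checks that claim on a small random grid. It is an illustration, not the library code: `max_pool_1d` and `max_pool_2d` are hypothetical helpers that mimic stride-1 max pooling with `k // 2` padding, where padded cells can never win the maximum.

```python
import random


def max_pool_1d(values, k):
    """Stride-1 max pooling with k // 2 padding; padded cells never win the max."""
    r = k // 2
    n = len(values)
    return [max(values[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def max_pool_2d(grid, k):
    """Pool along rows, then along columns (a square max window is separable)."""
    rows = [max_pool_1d(row, k) for row in grid]
    cols = [max_pool_1d(list(col), k) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


if __name__ == "__main__":
    random.seed(0)
    x = [[random.random() for _ in range(16)] for _ in range(16)]
    y1 = max_pool_2d(x, 5)   # same as a single 5x5 pool
    y2 = max_pool_2d(y1, 5)  # two 5x5 pools in a row
    y3 = max_pool_2d(y2, 5)  # three 5x5 pools in a row
    print(y2 == max_pool_2d(x, 9), y3 == max_pool_2d(x, 13))  # prints: True True
```

Each extra 5x5 pool widens the effective window by 4, so the three outputs cover 5, 9 and 13 pixels while only the small pool is ever computed.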

# Fold width and height information into the channel dimension

class Focus(nn.Module):

# Focus wh information into c-space

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groups

super().__init__()

self.conv = Conv(c1 * 4, c2, k, s, p, g, act)

# self.contract = Contract(gain=2)

def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)

return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))

# return self.conv(self.contract(x))
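The four strided slices in `Focus.forward` can be tried on a plain nested list. This is a minimal sketch for a single channel: `focus_slices` is a hypothetical helper, and the real module concatenates the four results along the channel axis before the convolution.

```python
def focus_slices(image):
    """Split an H x W grid (even H and W) into four H/2 x W/2 grids.

    Order matches the listing: (even rows, even cols), (odd rows, even cols),
    (even rows, odd cols), (odd rows, odd cols).
    """
    return [
        [row[::2] for row in image[::2]],
        [row[::2] for row in image[1::2]],
        [row[1::2] for row in image[::2]],
        [row[1::2] for row in image[1::2]],
    ]


if __name__ == "__main__":
    image = [[r * 4 + c for c in range(4)] for r in range(4)]  # values 0..15
    for part in focus_slices(image):
        print(part)
```

No pixel is discarded: each 2x2 neighbourhood is spread over four channels, so the spatial size halves while the channel count grows fourfold.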

class GhostConv(nn.Module):

# Ghost Convolution https://github.com/huawei-noah/ghostnet

def __init__(self, c1, c2, k=1, s=1, g=1, act=True): # ch_in, ch_out, kernel, stride, groups

super().__init__()

c_ = c2 // 2 # hidden channels

self.cv1 = Conv(c1, c_, k, s, None, g, act)

self.cv2 = Conv(c_, c_, 5, 1, None, c_, act)

def forward(self, x):

y = self.cv1(x)

return torch.cat([y, self.cv2(y)], 1)

class GhostBottleneck(nn.Module):

# Ghost Bottleneck https://github.com/huawei-noah/ghostnet

def __init__(self, c1, c2, k=3, s=1): # ch_in, ch_out, kernel, stride

super().__init__()

c_ = c2 // 2

self.conv = nn.Sequential(GhostConv(c1, c_, 1, 1), # pw

DWConv(c_, c_, k, s, act=False) if s == 2 else nn.Identity(), # dw

GhostConv(c_, c2, 1, 1, act=False)) # pw-linear

self.shortcut = nn.Sequential(DWConv(c1, c1, k, s, act=False),

Conv(c1, c2, 1, 1, act=False)) if s == 2 else nn.Identity()

def forward(self, x):

return self.conv(x) + self.shortcut(x)

class Contract(nn.Module):

# Contract width-height into channels, i.e. x(1,64,80,80) to x(1,256,40,40)

def __init__(self, gain=2):

super().__init__()

self.gain = gain

def forward(self, x):

b, c, h, w = x.size() # assert (h % s == 0) and (w % s == 0), 'Indivisible gain'

s = self.gain

x = x.view(b, c, h // s, s, w // s, s) # x(1,64,40,2,40,2)

x = x.permute(0, 3, 5, 1, 2, 4).contiguous() # x(1,2,2,64,40,40)

return x.view(b, c * s * s, h // s, w // s) # x(1,256,40,40)

class Expand(nn.Module):

# Expand channels into width-height, i.e. x(1,64,80,80) to x(1,16,160,160)

def __init__(self, gain=2):

super().__init__()

self.gain = gain

def forward(self, x):

b, c, h, w = x.size() # assert c % s ** 2 == 0, 'Indivisible gain'

s = self.gain

x = x.view(b, s, s, c // s ** 2, h, w) # x(1,2,2,16,80,80)

x = x.permute(0, 3, 4, 1, 5, 2).contiguous() # x(1,16,80,2,80,2)

return x.view(b, c // s ** 2, h * s, w * s) # x(1,16,160,160)

class Concat(nn.Module):

# Concatenate a list of tensors along dimension

def __init__(self, dimension=1):

super().__init__()

self.d = dimension

def forward(self, x):

return torch.cat(x, self.d)

# Automatic input shape handling

class AutoShape(nn.Module):

# YOLOv5 input-robust model wrapper for passing cv2/np/PIL/torch inputs. Includes preprocessing, inference and NMS

conf = 0.25 # NMS confidence threshold

iou = 0.45 # NMS IoU threshold

classes = None # (optional list) filter by class

multi_label = False # NMS multiple labels per box

max_det = 1000 # maximum number of detections per image

def __init__(self, model):

super().__init__()

self.model = model.eval()

def autoshape(self):

LOGGER.info('AutoShape already enabled, skipping... ') # model already converted to model.autoshape()

return self

def _apply(self, fn):

# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffers

self = super()._apply(fn)

m = self.model.model[-1] # Detect()

m.stride = fn(m.stride)

m.grid = list(map(fn, m.grid))

if isinstance(m.anchor_grid, list):

m.anchor_grid = list(map(fn, m.anchor_grid))

return self

@torch.no_grad()

def forward(self, imgs, size=640, augment=False, profile=False):

# Inference from various sources. For height=640, width=1280, RGB images example inputs are:

# file: imgs = 'data/images/zidane.jpg' # str or PosixPath

# URI: = 'https://ultralytics.com/images/zidane.jpg'

# OpenCV: = cv2.imread('image.jpg')[:,:,::-1] # HWC BGR to RGB x(640,1280,3)

# PIL: = Image.open('image.jpg') or ImageGrab.grab() # HWC x(640,1280,3)

# numpy: = np.zeros((640,1280,3)) # HWC

# torch: = torch.zeros(16,3,320,640) # BCHW (scaled to size=640, 0-1 values)

# multiple: = [Image.open('image1.jpg'), Image.open('image2.jpg'), ...] # list of images

t = [time_sync()]

p = next(self.model.parameters()) # for device and type

if isinstance(imgs, torch.Tensor): # torch

with amp.autocast(enabled=p.device.type != 'cpu'):

return self.model(imgs.to(p.device).type_as(p), augment, profile) # inference

# Pre-process

n, imgs = (len(imgs), imgs) if isinstance(imgs, list) else (1, [imgs]) # number of images, list of images

shape0, shape1, files = [], [], [] # image and inference shapes, filenames

for i, im in enumerate(imgs):

f = f'image{i}' # filename

if isinstance(im, (str, Path)): # filename or uri

im, f = Image.open(requests.get(im, stream=True).raw if str(im).startswith('http') else im), im

im = np.asarray(exif_transpose(im))

elif isinstance(im, Image.Image): # PIL Image

im, f = np.asarray(exif_transpose(im)), getattr(im, 'filename', f) or f

files.append(Path(f).with_suffix('.jpg').name)

if im.shape[0] < 5: # image in CHW

im = im.transpose((1, 2, 0)) # reverse dataloader .transpose(2, 0, 1)

im = im[..., :3] if im.ndim == 3 else np.tile(im[..., None], 3) # enforce 3ch input

s = im.shape[:2] # HWC

shape0.append(s) # image shape

g = (size / max(s)) # gain

shape1.append([y * g for y in s])

imgs[i] = im if im.data.contiguous else np.ascontiguousarray(im) # update

shape1 = [make_divisible(x, int(self.stride.max())) for x in np.stack(shape1, 0).max(0)] # inference shape

x = [letterbox(im, new_shape=shape1, auto=False)[0] for im in imgs] # pad

x = np.stack(x, 0) if n > 1 else x[0][None] # stack

x = np.ascontiguousarray(x.transpose((0, 3, 1, 2))) # BHWC to BCHW

x = torch.from_numpy(x).to(p.device).type_as(p) / 255. # uint8 to fp16/32

t.append(time_sync())

with amp.autocast(enabled=p.device.type != 'cpu'):

# Inference

y = self.model(x, augment, profile)[0] # forward

t.append(time_sync())

# Post-process

y = non_max_suppression(y, self.conf, iou_thres=self.iou, classes=self.classes,

multi_label=self.multi_label, max_det=self.max_det) # NMS

for i in range(n):

scale_coords(shape1, y[i][:, :4], shape0[i])

t.append(time_sync())

return Detections(imgs, y, files, t, self.names, x.shape)

class Detections:

# YOLOv5 detections class for inference results

def __init__(self, imgs, pred, files, times=None, names=None, shape=None):

super().__init__()

d = pred[0].device # device

gn = [torch.tensor([*[im.shape[i] for i in [1, 0, 1, 0]], 1., 1.], device=d) for im in imgs] # normalizations

self.imgs = imgs # list of images as numpy arrays

self.pred = pred # list of tensors pred[0] = (xyxy, conf, cls)

self.names = names # class names

self.files = files # image filenames

self.xyxy = pred # xyxy pixels

self.xywh = [xyxy2xywh(x) for x in pred] # xywh pixels

self.xyxyn = [x / g for x, g in zip(self.xyxy, gn)] # xyxy normalized

self.xywhn = [x / g for x, g in zip(self.xywh, gn)] # xywh normalized

self.n = len(self.pred) # number of images (batch size)

self.t = tuple((times[i + 1] - times[i]) * 1000 / self.n for i in range(3)) # timestamps (ms)

self.s = shape # inference BCHW shape

def display(self, pprint=False, show=False, save=False, crop=False, render=False, save_dir=Path('')):

crops = []

for i, (im, pred) in enumerate(zip(self.imgs, self.pred)):

s = f'image {i + 1}/{len(self.pred)}: {im.shape[0]}x{im.shape[1]} ' # string

if pred.shape[0]:

for c in pred[:, -1].unique():

n = (pred[:, -1] == c).sum() # detections per class

s += f"{n} {self.names[int(c)]}{'s' * (n > 1)}, " # add to string

if show or save or render or crop:

annotator = Annotator(im, example=str(self.names))

for *box, conf, cls in reversed(pred): # xyxy, confidence, class

label = f'{self.names[int(cls)]} {conf:.2f}'

if crop:

file = save_dir / 'crops' / self.names[int(cls)] / self.files[i] if save else None

crops.append({'box': box, 'conf': conf, 'cls': cls, 'label': label,

'im': save_one_box(box, im, file=file, save=save)})

else: # all others

annotator.box_label(box, label, color=colors(cls))

im = annotator.im

else:

s += '(no detections)'

im = Image.fromarray(im.astype(np.uint8)) if isinstance(im, np.ndarray) else im # from np

if pprint:

LOGGER.info(s.rstrip(', '))

if show:

im.show(self.files[i]) # show

if save:

f = self.files[i]

im.save(save_dir / f) # save

if i == self.n - 1:

LOGGER.info(f"Saved {self.n} image{'s' * (self.n > 1)} to {colorstr('bold', save_dir)}")

if render:

self.imgs[i] = np.asarray(im)

if crop:

if save:

LOGGER.info(f'Saved results to {save_dir}\n')

return crops

def print(self):

self.display(pprint=True) # print results

LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {tuple(self.s)}' %

self.t)

def show(self):

self.display(show=True) # show results

def save(self, save_dir='runs/detect/exp'):

save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) # increment save_dir

self.display(save=True, save_dir=save_dir) # save results

def crop(self, save=True, save_dir='runs/detect/exp'):

save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) if save else None

return self.display(crop=True, save=save, save_dir=save_dir) # crop results

def render(self):

self.display(render=True) # render results

return self.imgs

def pandas(self):

# return detections as pandas DataFrames, i.e. print(results.pandas().xyxy[0])

new = copy(self) # return copy

ca = 'xmin', 'ymin', 'xmax', 'ymax', 'confidence', 'class', 'name' # xyxy columns

cb = 'xcenter', 'ycenter', 'width', 'height', 'confidence', 'class', 'name' # xywh columns

for k, c in zip(['xyxy', 'xyxyn', 'xywh', 'xywhn'], [ca, ca, cb, cb]):

a = [[x[:5] + [int(x[5]), self.names[int(x[5])]] for x in x.tolist()] for x in getattr(self, k)] # update

setattr(new, k, [pd.DataFrame(x, columns=c) for x in a])

return new

def tolist(self):

# return a list of Detections objects, i.e. 'for result in results.tolist():'

x = [Detections([self.imgs[i]], [self.pred[i]], self.names, self.s) for i in range(self.n)]

for d in x:

for k in ['imgs', 'pred', 'xyxy', 'xyxyn', 'xywh', 'xywhn']:

setattr(d, k, getattr(d, k)[0]) # pop out of list

return x

def __len__(self):

return self.n

# Used for second-stage classification

class Classify(nn.Module):

# Classification head, i.e. x(b,c1,20,20) to x(b,c2)

def __init__(self, c1, c2, k=1, s=1, p=None, g=1): # ch_in, ch_out, kernel, stride, padding, groups

super().__init__()

self.aap = nn.AdaptiveAvgPool2d(1) # to x(b,c1,1,1)

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g) # to x(b,c2,1,1)

self.flat = nn.Flatten()

def forward(self, x):

z = torch.cat([self.aap(y) for y in (x if isinstance(x, list) else [x])], 1) # cat if list

return self.flat(self.conv(z)) # flatten to x(b,c2)

experimental.py: experimental code

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Experimental modules

"""

import numpy as np

import torch

import torch.nn as nn

from models.common import Conv

from utils.downloads import attempt_download

class CrossConv(nn.Module):

# Cross Convolution Downsample

def __init__(self, c1, c2, k=3, s=1, g=1, e=1.0, shortcut=False):

# ch_in, ch_out, kernel, stride, groups, expansion, shortcut

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, (1, k), (1, s))

self.cv2 = Conv(c_, c2, (k, 1), (s, 1), g=g)

self.add = shortcut and c1 == c2

def forward(self, x):

return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))

class Sum(nn.Module):

# Weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070

def __init__(self, n, weight=False): # n: number of inputs

super().__init__()

self.weight = weight # apply weights boolean

self.iter = range(n - 1) # iter object

if weight:

self.w = nn.Parameter(-torch.arange(1., n) / 2, requires_grad=True) # layer weights

def forward(self, x):

y = x[0] # no weight

if self.weight:

w = torch.sigmoid(self.w) * 2

for i in self.iter:

y = y + x[i + 1] * w[i]

else:

for i in self.iter:

y = y + x[i + 1]

return y
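The weights in `Sum` start from `-torch.arange(1., n) / 2` and are passed through `sigmoid(...) * 2`. The sketch below reproduces that arithmetic with the standard library to show the starting values; `initial_sum_weights` and `weighted_sum` are hypothetical names and work on plain numbers instead of tensors.

```python
import math


def initial_sum_weights(n):
    """Weights applied to inputs 1..n-1 before training (input 0 is unweighted)."""
    raw = [-i / 2 for i in range(1, n)]           # -torch.arange(1., n) / 2
    return [2 / (1 + math.exp(-w)) for w in raw]  # sigmoid(w) * 2


def weighted_sum(inputs, weights):
    """Add the first input unweighted, then each later input times its weight."""
    total = inputs[0]
    for x, w in zip(inputs[1:], weights):
        total = total + x * w
    return total


if __name__ == "__main__":
    w = initial_sum_weights(3)
    print([round(v, 4) for v in w])
    print(weighted_sum([1.0, 1.0, 1.0], w))
```

Every weight stays between 0 and 2, and later inputs start with smaller weights than earlier ones.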

class MixConv2d(nn.Module):

# Mixed Depth-wise Conv https://arxiv.org/abs/1907.09595

def __init__(self, c1, c2, k=(1, 3), s=1, equal_ch=True):

super().__init__()

groups = len(k)

if equal_ch: # equal c_ per group

i = torch.linspace(0, groups - 1E-6, c2).floor() # c2 indices

c_ = [(i == g).sum() for g in range(groups)] # intermediate channels

else: # equal weight.numel() per group

b = [c2] + [0] * groups

a = np.eye(groups + 1, groups, k=-1)

a -= np.roll(a, 1, axis=1)

a *= np.array(k) ** 2

a[0] = 1

c_ = np.linalg.lstsq(a, b, rcond=None)[0].round() # solve for equal weight indices, ax = b

self.m = nn.ModuleList([nn.Conv2d(c1, int(c_[g]), k[g], s, k[g] // 2, bias=False) for g in range(groups)])

self.bn = nn.BatchNorm2d(c2)

self.act = nn.LeakyReLU(0.1, inplace=True)

def forward(self, x):

return x + self.act(self.bn(torch.cat([m(x) for m in self.m], 1)))

# Model ensemble

class Ensemble(nn.ModuleList):

# Ensemble of models

def __init__(self):

super().__init__()

def forward(self, x, augment=False, profile=False, visualize=False):

y = []

for module in self:

y.append(module(x, augment, profile, visualize)[0])

# y = torch.stack(y).max(0)[0] # max ensemble

# y = torch.stack(y).mean(0) # mean ensemble

y = torch.cat(y, 1) # nms ensemble

return y, None # inference, train output

# Load model weight files and build the model

def attempt_load(weights, map_location=None, inplace=True, fuse=True):

from models.yolo import Detect, Model

# Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a

model = Ensemble()

for w in weights if isinstance(weights, list) else [weights]:

ckpt = torch.load(attempt_download(w), map_location=map_location) # load; downloads the weight file if it is not available locally

if fuse:

model.append(ckpt['ema' if ckpt.get('ema') else 'model'].float().fuse().eval()) # FP32 model

else:

model.append(ckpt['ema' if ckpt.get('ema') else 'model'].float().eval()) # without layer fuse

# Compatibility updates

for m in model.modules():

if type(m) in [nn.Hardswish, nn.LeakyReLU, nn.ReLU, nn.ReLU6, nn.SiLU, Detect, Model]:

m.inplace = inplace # pytorch 1.7.0 compatibility

if type(m) is Detect:

if not isinstance(m.anchor_grid, list): # new Detect Layer compatibility

delattr(m, 'anchor_grid')

setattr(m, 'anchor_grid', [torch.zeros(1)] * m.nl)

elif type(m) is Conv:

m._non_persistent_buffers_set = set() # pytorch 1.6.0 compatibility

if len(model) == 1:

return model[-1] # return model

else:

print(f'Ensemble created with {weights}\n')

for k in ['names']:

setattr(model, k, getattr(model[-1], k))

model.stride = model[torch.argmax(torch.tensor([m.stride.max() for m in model])).int()].stride # max stride

return model # return ensemble

tf.py: model export script that converts the model into TensorFlow, Keras and TFLite versions of YOLOv5

yolo.py: the overall network code

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

YOLO-specific modules

Usage:

$ python path/to/models/yolo.py --cfg yolov5s.yaml

"""

import argparse

import sys

from copy import deepcopy

from pathlib import Path

FILE = Path(__file__).resolve()

ROOT = FILE.parents[1] # YOLOv5 root directory

if str(ROOT) not in sys.path:

sys.path.append(str(ROOT)) # add ROOT to PATH

# ROOT = ROOT.relative_to(Path.cwd()) # relative

from models.common import *

from models.experimental import *

from utils.autoanchor import check_anchor_order

from utils.general import check_yaml, make_divisible, print_args, set_logging

from utils.plots import feature_visualization

from utils.torch_utils import (copy_attr, fuse_conv_and_bn, initialize_weights, model_info, scale_img,

select_device, time_sync)

try:

import thop # for FLOPs computation

except ImportError:

thop = None

LOGGER = logging.getLogger(__name__)

class Detect(nn.Module):

stride = None # strides computed during build

onnx_dynamic = False # ONNX export parameter

def __init__(self, nc=80, anchors=(), ch=(), inplace=True): # detection layer

super().__init__()

self.nc = nc # number of classes

self.no = nc + 5 # number of outputs per anchor

self.nl = len(anchors) # number of detection layers

self.na = len(anchors[0]) // 2 # number of anchors

self.grid = [torch.zeros(1)] * self.nl # init grid

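# A model stores two kinds of tensors. The first kind is updated by the optimizer during backpropagation and is called a parameter.

# The second kind is not updated by the optimizer and is called a buffer.

# To create a buffer, build a tensor and register it with register_buffer().

# Buffers are returned by model.buffers(); once registered they are also saved automatically in the state_dict (an OrderedDict).

# optimizer.step() only updates nn.Parameter tensors.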

self.anchor_grid = [torch.zeros(1)] * self.nl # init anchor grid

self.register_buffer('anchors', torch.tensor(anchors).float().view(self.nl, -1, 2)) # shape(nl,na,2)

self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output conv (1x1 convolution)

self.inplace = inplace # use in-place ops (e.g. slice assignment)

def forward(self, x):

z = [] # inference output

for i in range(self.nl):

x[i] = self.m[i](x[i]) # conv

bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

if not self.training: # inference

if self.grid[i].shape[2:4] != x[i].shape[2:4] or self.onnx_dynamic:

self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)

y = x[i].sigmoid()

if self.inplace:

y[..., 0:2] = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i] # xy

y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh

else: # for YOLOv5 on AWS Inferentia https://github.com/ultralytics/yolov5/pull/2953

xy = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i] # xy

wh = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh

y = torch.cat((xy, wh, y[..., 4:]), -1)

z.append(y.view(bs, -1, self.no))

return x if self.training else (torch.cat(z, 1), x) # predicted box information

def _make_grid(self, nx=20, ny=20, i=0):

d = self.anchors[i].device

yv, xv = torch.meshgrid([torch.arange(ny).to(d), torch.arange(nx).to(d)])

grid = torch.stack((xv, yv), 2).expand((1, self.na, ny, nx, 2)).float()

anchor_grid = ((self.anchors[i].clone() * self.stride[i])

.view((1, self.na, 1, 1, 2)).expand((1, self.na, ny, nx, 2)).float())

return grid, anchor_grid
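The box decoding inside `Detect.forward` is plain arithmetic once the sigmoid has been applied. The sketch below works through it for a single prediction with the standard library only. `decode_box` is a hypothetical helper, and the grid cell, anchor size and stride in the example are made-up values.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def decode_box(raw, grid_xy, anchor_wh, stride):
    """Decode one raw (tx, ty, tw, th) prediction into pixel (x, y, w, h).

    grid_xy   -- (column, row) index of the grid cell
    anchor_wh -- anchor size in pixels (an anchor_grid entry in the listing)
    stride    -- pixels per grid cell for this detection layer
    """
    sx, sy, sw, sh = (sigmoid(t) for t in raw)
    x = (sx * 2.0 - 0.5 + grid_xy[0]) * stride  # centre offset lies in (-0.5, 1.5) cells
    y = (sy * 2.0 - 0.5 + grid_xy[1]) * stride
    w = (sw * 2.0) ** 2 * anchor_wh[0]          # size lies in (0, 4) x anchor
    h = (sh * 2.0) ** 2 * anchor_wh[1]
    return x, y, w, h


if __name__ == "__main__":
    # All-zero raw outputs decode to the cell centre with exactly the anchor size.
    print(decode_box((0.0, 0.0, 0.0, 0.0), grid_xy=(3, 4), anchor_wh=(30, 60), stride=8))
```

Because the sigmoid bounds every term, a predicted centre can move at most half a cell outside its own cell and a box can grow to at most four times its anchor.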

# Network model class

class Model(nn.Module):

def __init__(self, cfg='yolov5s.yaml', ch=3, nc=None, anchors=None): # model, input channels, number of classes

super().__init__()

if isinstance(cfg, dict):

self.yaml = cfg # model dict

else: # is *.yaml

import yaml # for torch hub

self.yaml_file = Path(cfg).name

with open(cfg, errors='ignore') as f:

self.yaml = yaml.safe_load(f) # model dict

# Define model

ch = self.yaml['ch'] = self.yaml.get('ch', ch) # input channels

if nc and nc != self.yaml['nc']:

LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")

self.yaml['nc'] = nc # override yaml value

if anchors:

LOGGER.info(f'Overriding model.yaml anchors with anchors={anchors}')

self.yaml['anchors'] = round(anchors) # override yaml value

self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch]) # model, savelist

self.names = [str(i) for i in range(self.yaml['nc'])] # default names

self.inplace = self.yaml.get('inplace', True)

# Build strides, anchors

m = self.model[-1] # Detect()

if isinstance(m, Detect):

s = 256 # 2x min stride

m.inplace = self.inplace

m.stride = torch.tensor([s / x.shape[-2] for x in self.forward(torch.zeros(1, ch, s, s))]) # forward

m.anchors /= m.stride.view(-1, 1, 1)

check_anchor_order(m)

self.stride = m.stride

self._initialize_biases() # only run once; initializes the biases

# Init weights, biases

initialize_weights(self) # initialize the weights

self.info() # print information about each layer

LOGGER.info('')

def forward(self, x, augment=False, profile=False, visualize=False):

if augment:

return self._forward_augment(x) # augmented inference, None

return self._forward_once(x, profile, visualize) # single-scale inference, train

def _forward_augment(self, x):

img_size = x.shape[-2:] # height, width

s = [1, 0.83, 0.67] # scales

f = [None, 3, None] # flips (2-ud, 3-lr)

y = [] # outputs

for si, fi in zip(s, f):

xi = scale_img(x.flip(fi) if fi else x, si, gs=int(self.stride.max()))

yi = self._forward_once(xi)[0] # forward

# cv2.imwrite(f'img_{si}.jpg', 255 * xi[0].cpu().numpy().transpose((1, 2, 0))[:, :, ::-1]) # save

yi = self._descale_pred(yi, fi, si, img_size)

y.append(yi)

y = self._clip_augmented(y) # clip augmented tails

return torch.cat(y, 1), None # augmented inference, train

def _forward_once(self, x, profile=False, visualize=False):

y, dt = [], [] # outputs

for m in self.model:

if m.f != -1: # if not from previous layer

x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers

if profile:

self._profile_one_layer(m, x, dt)

x = m(x) # run

y.append(x if m.i in self.save else None) # save output

if visualize:

feature_visualization(x, m.type, m.i, save_dir=visualize)

return x

def _descale_pred(self, p, flips, scale, img_size):

# de-scale predictions following augmented inference (inverse operation)

if self.inplace:

p[..., :4] /= scale # de-scale

if flips == 2:

p[..., 1] = img_size[0] - p[..., 1] # de-flip ud

elif flips == 3:

p[..., 0] = img_size[1] - p[..., 0] # de-flip lr

else:

x, y, wh = p[..., 0:1] / scale, p[..., 1:2] / scale, p[..., 2:4] / scale # de-scale

if flips == 2:

y = img_size[0] - y # de-flip ud

elif flips == 3:

x = img_size[1] - x # de-flip lr

p = torch.cat((x, y, wh, p[..., 4:]), -1)

return p

def _clip_augmented(self, y):

# Clip YOLOv5 augmented inference tails

nl = self.model[-1].nl # number of detection layers (P3-P5)

g = sum(4 ** x for x in range(nl)) # grid points

e = 1 # exclude layer count

i = (y[0].shape[1] // g) * sum(4 ** x for x in range(e)) # indices

y[0] = y[0][:, :-i] # large

i = (y[-1].shape[1] // g) * sum(4 ** (nl - 1 - x) for x in range(e)) # indices

y[-1] = y[-1][:, i:] # small

return y
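The index arithmetic in `_clip_augmented` is easier to follow with concrete numbers. The sketch below is an illustration under stated assumptions: three detection layers with strides 8, 16 and 32, three anchors per cell, a 640-pixel first pass and a 448-pixel last pass. `clip_counts` and `rows_per_image` are hypothetical helpers.

```python
def clip_counts(num_full, num_small, nl=3, e=1):
    """Rows dropped from the first and the last augmented outputs.

    num_full  -- prediction rows from the first (full-scale) pass
    num_small -- prediction rows from the last (smallest-scale) pass
    """
    g = sum(4 ** x for x in range(nl))  # relative grid points: 1 + 4 + 16
    drop_tail = (num_full // g) * sum(4 ** x for x in range(e))
    drop_head = (num_small // g) * sum(4 ** (nl - 1 - x) for x in range(e))
    return drop_tail, drop_head


def rows_per_image(size, strides=(8, 16, 32), na=3):
    """Prediction rows for a square input (assumed strides and anchor count)."""
    return sum(na * (size // s) ** 2 for s in strides)


if __name__ == "__main__":
    full, small = rows_per_image(640), rows_per_image(448)
    print(full, small, clip_counts(full, small))
```

With these numbers the first output loses its final rows, which belong to the coarsest layer, and the last output loses its leading rows, which belong to the finest layer.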

def _profile_one_layer(self, m, x, dt):

c = isinstance(m, Detect) # is final layer, copy input as inplace fix

o = thop.profile(m, inputs=(x.copy() if c else x,), verbose=False)[0] / 1E9 * 2 if thop else 0 # FLOPs

t = time_sync()

for _ in range(10):

m(x.copy() if c else x)

dt.append((time_sync() - t) * 100)

if m == self.model[0]:

LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} {'module'}")

LOGGER.info(f'{dt[-1]:10.2f} {o:10.2f} {m.np:10.0f} {m.type}')

if c:

LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total")

def _initialize_biases(self, cf=None): # initialize biases into Detect(), cf is class frequency

# https://arxiv.org/abs/1708.02002 section 3.3

# cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1.

m = self.model[-1] # Detect() module

for mi, s in zip(m.m, m.stride): # from

b = mi.bias.view(m.na, -1) # conv.bias(255) to (3,85)

b.data[:, 4] += math.log(8 / (640 / s) ** 2) # obj (8 objects per 640 image)

b.data[:, 5:] += math.log(0.6 / (m.nc - 0.99)) if cf is None else torch.log(cf / cf.sum()) # cls

mi.bias = torch.nn.Parameter(b.view(-1), requires_grad=True)
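The two logarithms in `_initialize_biases` set the starting bias so that the initial predictions roughly match the expected object density. The sketch below evaluates them with the standard library; `objectness_bias` and `class_bias` are hypothetical helpers, and the strides and class count in the example are illustrative values.

```python
import math


def objectness_bias(stride, image_size=640, objects_per_image=8):
    """Offset added to the objectness bias of one detection layer."""
    cells = (image_size / stride) ** 2  # grid cells in this layer
    return math.log(objects_per_image / cells)


def class_bias(nc):
    """Offset added to every class bias when no class frequencies are given."""
    return math.log(0.6 / (nc - 0.99))


if __name__ == "__main__":
    for s in (8, 16, 32):
        print(f"stride {s}: objectness offset {objectness_bias(s):.3f}")
    print(f"80 classes: class offset {class_bias(80):.3f}")
```

Finer layers have more cells, so they receive a more negative objectness offset and start out predicting fewer objects per cell.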

def _print_biases(self):

m = self.model[-1] # Detect() module

for mi in m.m: # from

b = mi.bias.detach().view(m.na, -1).T # conv.bias(255) to (3,85)

LOGGER.info(

('%6g Conv2d.bias:' + '%10.3g' * 6) % (mi.weight.shape[1], *b[:5].mean(1).tolist(), b[5:].mean()))

# def _print_weights(self):

# for m in self.model.modules():

# if type(m) is Bottleneck:

# LOGGER.info('%10.3g' % (m.w.detach().sigmoid() * 2)) # shortcut weights

def fuse(self): # fuse model Conv2d() + BatchNorm2d() layers

LOGGER.info('Fusing layers... ')

for m in self.model.modules():

if isinstance(m, (Conv, DWConv)) and hasattr(m, 'bn'):

m.conv = fuse_conv_and_bn(m.conv, m.bn) # update conv

delattr(m, 'bn') # remove batchnorm

m.forward = m.forward_fuse # update forward

self.info()

return self

def autoshape(self): # add AutoShape module

LOGGER.info('Adding AutoShape... ')

m = AutoShape(self) # wrap model

copy_attr(m, self, include=('yaml', 'nc', 'hyp', 'names', 'stride'), exclude=()) # copy attributes

return m

def info(self, verbose=False, img_size=640): # print model information

model_info(self, verbose, img_size)

def _apply(self, fn):

# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffers

self = super()._apply(fn)

m = self.model[-1] # Detect()

if isinstance(m, Detect):

m.stride = fn(m.stride)

m.grid = list(map(fn, m.grid))

if isinstance(m.anchor_grid, list):

m.anchor_grid = list(map(fn, m.anchor_grid))

return self
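The two constants added in `_initialize_biases` are easier to read with numbers plugged in. The sketch below evaluates the same expressions using only the standard library. It assumes a 640-pixel input, strides 8, 16 and 32, and `nc = 80`; the helper names `obj_bias`, `cls_bias` and `sigmoid` are made up for illustration and are not part of YOLOv5.

```python
import math


def obj_bias(stride, img_size=640, objects_per_image=8):
    """Objectness increment: log(8 / number of grid cells) for one detection layer."""
    cells = (img_size / stride) ** 2
    return math.log(objects_per_image / cells)


def cls_bias(nc):
    """Class increment used when no class frequencies (cf) are supplied."""
    return math.log(0.6 / (nc - 0.99))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


for s in (8, 16, 32):
    b = obj_bias(s)
    # sigmoid(b) is the starting objectness only if the bias was zero before the increment
    print(f"stride {s:2d}: obj bias += {b:.3f}, sigmoid = {sigmoid(b):.5f}")
print(f"cls bias += {cls_bias(80):.3f}")
```

Finer grids (smaller strides) have more cells, so each cell starts with a lower objectness prior.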

# Parse the network model configuration file and build the model

def parse_model(d, ch): # model_dict, input_channels(3)

LOGGER.info('\n%3s%18s%3s%10s %-40s%-30s' % ('', 'from', 'n', 'params', 'module', 'arguments'))

# Extract depth_multiple and width_multiple from the model config and assign them to gd and gw

anchors, nc, gd, gw = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple']

na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors # number of anchors

no = na * (nc + 5) # number of outputs = anchors * (classes + 5)

layers, save, c2 = [], [], ch[-1] # layers, savelist, ch out

for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']): # from, number, module, args

m = eval(m) if isinstance(m, str) else m # eval strings

for j, a in enumerate(args):

try:

args[j] = eval(a) if isinstance(a, str) else a # eval strings

except NameError:

pass

# Control depth (number of module repeats)

n = n_ = max(round(n * gd), 1) if n > 1 else n # depth gain

if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost]:

c1, c2 = ch[f], args[0] # c1 is 3 for the first layer (RGB input channels)

if c2 != no: # if not output

# Control width (number of convolution kernels, i.e. output channels)

c2 = make_divisible(c2 * gw, 8)

args = [c1, c2, *args[1:]]

if m in [BottleneckCSP, C3, C3TR, C3Ghost]:

args.insert(2, n) # number of repeats

n = 1

elif m is nn.BatchNorm2d:

args = [ch[f]]

elif m is Concat:

c2 = sum([ch[x] for x in f])

elif m is Detect:

args.append([ch[x] for x in f])

if isinstance(args[1], int): # number of anchors

args[1] = [list(range(args[1] * 2))] * len(f)

elif m is Contract:

c2 = ch[f] * args[0] ** 2

elif m is Expand:

c2 = ch[f] // args[0] ** 2

else:

c2 = ch[f]

# In a call, *args unpacks the list so that its items are passed as separate positional arguments

m_ = nn.Sequential(*[m(*args) for _ in range(n)]) if n > 1 else m(*args) # module

t = str(m)[8:-2].replace('__main__.', '') # module type

np = sum([x.numel() for x in m_.parameters()]) # number params

m_.i, m_.f, m_.type, m_.np = i, f, t, np # attach index, 'from' index, type, number params

LOGGER.info('%3s%18s%3s%10.0f %-40s%-30s' % (i, f, n_, np, t, args)) # print

save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

layers.append(m_)

if i == 0:

ch = []

ch.append(c2)

return nn.Sequential(*layers), sorted(save)
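As a rough check of the arithmetic in `parse_model`, the sketch below recomputes `na`, `no` and the width-scaled channel counts for the yolov5s settings (`nc = 80`, `width_multiple = 0.50`). The `make_divisible` here is an illustrative re-implementation (round up to a multiple of the divisor), assumed to match the helper in `utils.general`; `scaled_channels` is a hypothetical name.

```python
import math


def make_divisible(x, divisor):
    # Round x up to the nearest multiple of divisor (assumed behaviour of the real helper)
    return math.ceil(x / divisor) * divisor


anchors = [[10, 13, 16, 30, 33, 23],
           [30, 61, 62, 45, 59, 119],
           [116, 90, 156, 198, 373, 326]]
nc, gw = 80, 0.50

na = len(anchors[0]) // 2   # each anchor is a (w, h) pair, so 6 numbers give 3 anchors
no = na * (nc + 5)          # per anchor: 4 box values + 1 objectness + nc class scores


def scaled_channels(c2):
    # Same rule as in parse_model: leave the output layer alone, scale everything else
    return c2 if c2 == no else make_divisible(c2 * gw, 8)


widths = [scaled_channels(c) for c in (64, 128, 256, 512, 1024)]
print(na, no, widths)
```

With `gw = 0.50` every channel count in the YAML is halved, which is why yolov5s is much lighter than the larger variants built from the same layer list.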

if __name__ == '__main__':

# Create the argument parser

parser = argparse.ArgumentParser()

# Add the command-line arguments

parser.add_argument('--cfg', type=str, default='yolov5s.yaml', help='model.yaml')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--profile', action='store_true', help='profile model speed')

opt = parser.parse_args()

opt.cfg = check_yaml(opt.cfg) # check YAML

print_args(FILE.stem, opt)

set_logging()

device = select_device(opt.device)

# Create model

model = Model(opt.cfg).to(device)

model.train()

# Profile

if opt.profile:

img = torch.rand(8 if torch.cuda.is_available() else 1, 3, 640, 640).to(device)

y = model(img, profile=True)

# Tensorboard (not working https://github.com/ultralytics/yolov5/issues/2898)

# from torch.utils.tensorboard import SummaryWriter

# tb_writer = SummaryWriter('.')

# LOGGER.info("Run 'tensorboard --logdir=models' to view tensorboard at http://localhost:6006/")

# tb_writer.add_graph(torch.jit.trace(model, img, strict=False), []) # add model graph

yolov5s.yaml: network model configuration file

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

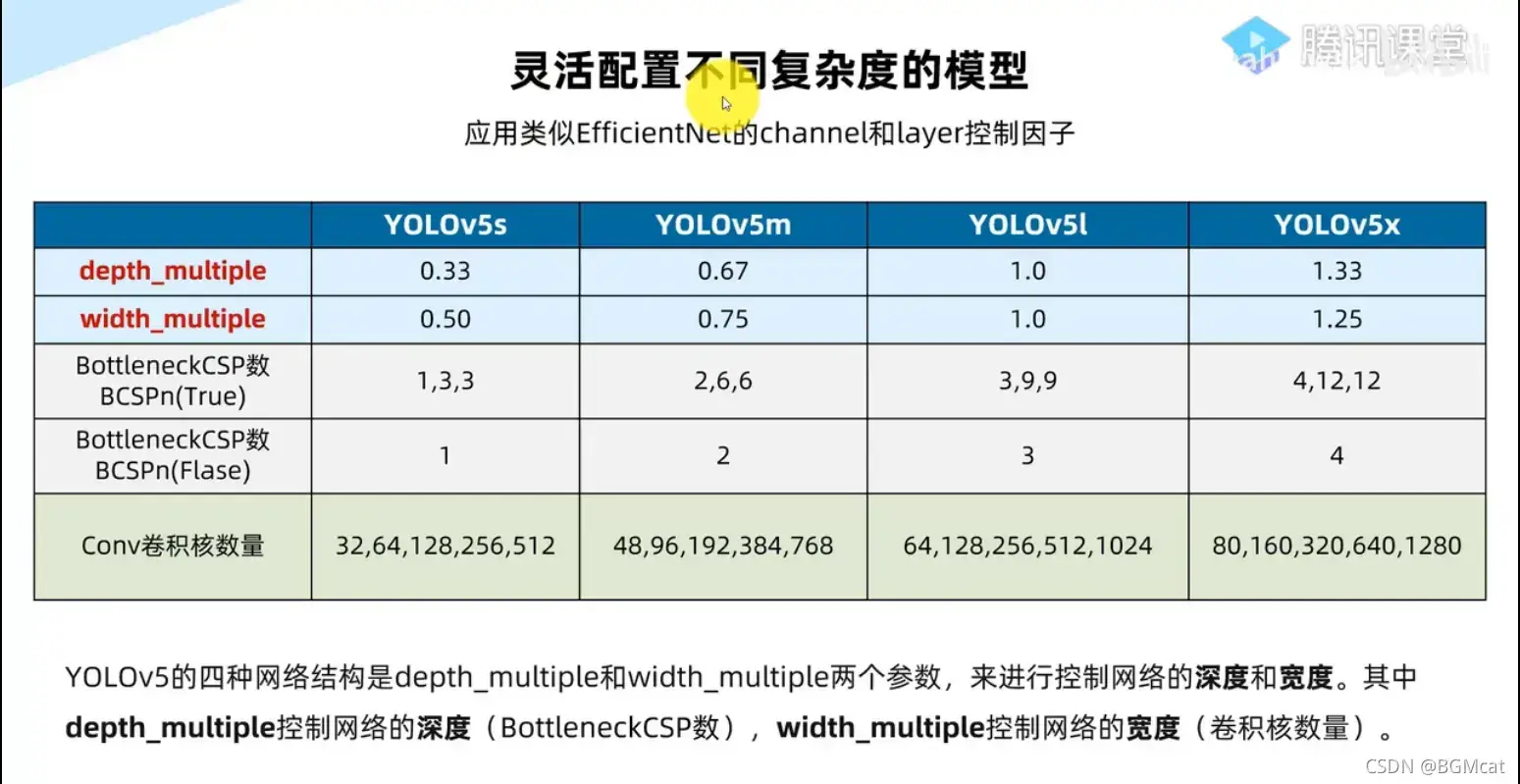

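depth_multiple: 0.33 # model depth multiple (controls the depth of the model)

width_multiple: 0.50 # layer channel multiple (controls the number of conv channels, i.e. the number of kernels)

# depth_multiple: depth scaling factor for repeated modules such as BottleneckCSP/C3; the number of Bottleneck repeats in each such module is multiplied by this value (then rounded, with a minimum of 1) to get the final count

# width_multiple: channel scaling factor; every conv channel setting in the backbone and head sections is multiplied by this value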

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

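# "from" column: which layer's output feeds the current module; -1 means the input comes from the previous layer

# "number" column: how many times the module is repeated; 1 means a single module, 3 means three identical modules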

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4: 128 = 128 convolution kernels (output channels), 3 = 3x3 kernel, 2 = stride 2

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

# The author does not define a separate neck section, so the head contains both the PANet and the Detect parts

head:

[[-1, 1, Conv, [512, 1, 1]], # 1x1 conv ahead of the upsampling layer

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]
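To see what `depth_multiple` does to the `number` column above, here is a minimal sketch that applies the rule from `parse_model` (`n = max(round(n * gd), 1) if n > 1 else n`) to the four backbone C3 entries. The function name `scaled_depth` is hypothetical.

```python
def scaled_depth(n, gd):
    # Mirrors the depth-gain rule in parse_model: single modules are never scaled
    return max(round(n * gd), 1) if n > 1 else n


backbone_c3_repeats = [3, 6, 9, 3]   # the "number" column of the C3 rows in the backbone

small = [scaled_depth(n, 0.33) for n in backbone_c3_repeats]   # depth_multiple of yolov5s
full = [scaled_depth(n, 1.0) for n in backbone_c3_repeats]     # no scaling
print(small, full)
```

So with `depth_multiple: 0.33` the C3 blocks in the backbone repeat 1, 2, 3 and 1 times instead of 3, 6, 9 and 3.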

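runs folder

The train folder stores the data recorded during each training run.

The detect folder stores the results of each prediction made with a trained model.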

utils folder

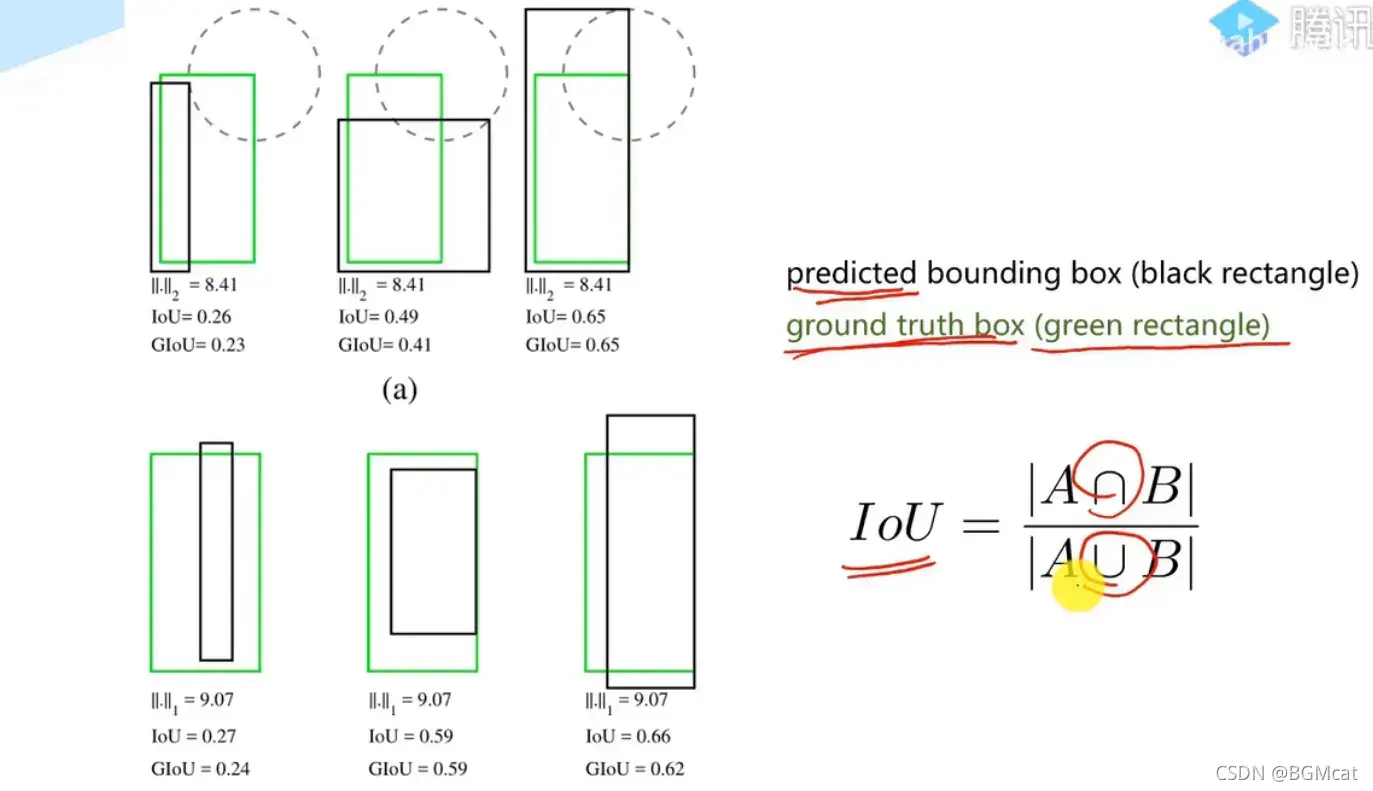

Object detection performance metrics

Detection accuracy

- Precision, recall, F1 score

- IoU (intersection over union)

- P-R curve (precision-recall curve)

- AP (average precision)

- mAP (mean AP)
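The first two accuracy metrics in this list can be written down in a few lines. The sketch below is a plain-Python illustration, not the code in `utils/metrics.py`; boxes are assumed to be `(x1, y1, x2, y2)` tuples and the counts `tp`, `fp`, `fn` are assumed to be known already.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)   # zero when the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(precision_recall_f1(tp=8, fp=2, fn=4))
```

A prediction usually counts as a true positive when its IoU with a ground-truth box exceeds a chosen threshold; AP is then the area under the precision-recall curve for one class, and mAP is the mean of AP over all classes.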

Detection speed

- Forward-pass (inference) time

- Frames per second (FPS)

- Floating-point operation count (FLOPs)
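Forward-pass time and FPS are two views of the same measurement. The sketch below times a stand-in workload; `fake_forward` is a hypothetical placeholder for a call such as `model(img)`, and `measure_latency` is an illustrative helper rather than anything in YOLOv5.

```python
import time


def measure_latency(fn, runs=20, warmup=3):
    """Average wall-clock seconds per call of fn, after a few warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def fake_forward():
    # Placeholder workload standing in for one forward pass
    sum(i * i for i in range(10_000))


latency = measure_latency(fake_forward)
fps = 1.0 / latency
print(f"{latency * 1000:.3f} ms per call, {fps:.1f} FPS")
```

When timing a real model on a GPU, the device should be synchronized before reading the clock, otherwise the measurement only covers the kernel launch.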

activations.py: activation function code

augmentations.py: image augmentation and transformation functions

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Image augmentation functions

"""

import logging

import math

import random

import cv2

import numpy as np

from utils.general import colorstr, segment2box, resample_segments, check_version

from utils.metrics import bbox_ioa

class Albumentations:

# YOLOv5 Albumentations class (optional, only used if package is installed)

def __init__(self):

self.transform = None

try:

import albumentations as A

check_version(A.__version__, '1.0.3') # version requirement

self.transform = A.Compose([

A.Blur(p=0.01),

A.MedianBlur(p=0.01),

A.ToGray(p=0.01),

A.CLAHE(p=0.01),

A.RandomBrightnessContrast(p=0.0),

A.RandomGamma(p=0.0),

A.ImageCompression(quality_lower=75, p=0.0)],

bbox_params=A.BboxParams(format='yolo', label_fields=['class_labels']))

logging.info(colorstr('albumentations: ') + ', '.join(f'{x}' for x in self.transform.transforms if x.p))

except ImportError: # package not installed, skip

pass

except Exception as e:

logging.info(colorstr('albumentations: ') + f'{e}')

def __call__(self, im, labels, p=1.0):

if self.transform and random.random() < p:

new = self.transform(image=im, bboxes=labels[:, 1:], class_labels=labels[:, 0]) # transformed

im, labels = new['image'], np.array([[c, *b] for c, b in zip(new['class_labels'], new['bboxes'])])

return im, labels

def augment_hsv(im, hgain=0.5, sgain=0.5, vgain=0.5):

# HSV color-space augmentation

if hgain or sgain or vgain:

r = np.random.uniform(-1, 1, 3) * [hgain, sgain, vgain] + 1 # random gains

hue, sat, val = cv2.split(cv2.cvtColor(im, cv2.COLOR_BGR2HSV))

dtype = im.dtype # uint8

x = np.arange(0, 256, dtype=r.dtype)

lut_hue = ((x * r[0]) % 180).astype(dtype)

lut_sat = np.clip(x * r[1], 0, 255).astype(dtype)

lut_val = np.clip(x * r[2], 0, 255).astype(dtype)

im_hsv = cv2.merge((cv2.LUT(hue, lut_hue), cv2.LUT(sat, lut_sat), cv2.LUT(val, lut_val)))

cv2.cvtColor(im_hsv, cv2.COLOR_HSV2BGR, dst=im) # no return needed

def hist_equalize(im, clahe=True, bgr=False):

# Equalize histogram on BGR image 'im' with im.shape(n,m,3) and range 0-255

yuv = cv2.cvtColor(im, cv2.COLOR_BGR2YUV if bgr else cv2.COLOR_RGB2YUV)

if clahe:

c = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))

yuv[:, :, 0] = c.apply(yuv[:, :, 0])

else:

yuv[:, :, 0] = cv2.equalizeHist(yuv[:, :, 0]) # equalize Y channel histogram

return cv2.cvtColor(yuv, cv2.COLOR_YUV2BGR if bgr else cv2.COLOR_YUV2RGB) # convert YUV image to RGB

def replicate(im, labels):

# Replicate labels

h, w = im.shape[:2]

boxes = labels[:, 1:].astype(int)

x1, y1, x2, y2 = boxes.T

s = ((x2 - x1) + (y2 - y1)) / 2 # side length (pixels)

for i in s.argsort()[:round(s.size * 0.5)]: # smallest indices

x1b, y1b, x2b, y2b = boxes[i]

bh, bw = y2b - y1b, x2b - x1b

yc, xc = int(random.uniform(0, h - bh)), int(random.uniform(0, w - bw)) # offset x, y

x1a, y1a, x2a, y2a = [xc, yc, xc + bw, yc + bh]

im[y1a:y2a, x1a:x2a] = im[y1b:y2b, x1b:x2b] # im4[ymin:ymax, xmin:xmax]

labels = np.append(labels, [[labels[i, 0], x1a, y1a, x2a, y2a]], axis=0)

return im, labels

# Resize the image while keeping its aspect ratio; the remaining area is padded with gray

def letterbox(im, new_shape=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True, stride=32):

# Resize and pad image while meeting stride-multiple constraints

shape = im.shape[:2] # current shape [height, width]

if isinstance(new_shape, int):

new_shape = (new_shape, new_shape)

# Scale ratio (new / old)

r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

# When scaling to the input size img_size, the image can only be scaled down if scaleup is not set

# Scaling up blurs the image and can hurt performance

if not scaleup: # only scale down, do not scale up (for better val mAP)

r = min(r, 1.0)

# Compute padding

ratio = r, r # width, height ratios

new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))

dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh padding

if auto: # minimum rectangle: pad only up to the next multiple of stride

dw, dh = np.mod(dw, stride), np.mod(dh, stride) # wh padding

# If scaleFill is True, the image is stretched and no padding is added

elif scaleFill: # stretch

dw, dh = 0.0, 0.0

new_unpad = (new_shape[1], new_shape[0])

ratio = new_shape[1] / shape[1], new_shape[0] / shape[0] # width, height ratios

# Compute the padding for the top, bottom, left and right sides

dw /= 2 # divide padding into 2 sides

dh /= 2

if shape[::-1] != new_unpad: # resize

im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)

top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))

left, right = int(round(dw - 0.1)), int(round(dw + 0.1))

im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add border

return im, ratio, (dw, dh)
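The geometry inside `letterbox` can be followed without OpenCV. The sketch below reproduces only the size and padding arithmetic for the `auto` and `scaleup` options (the `scaleFill` branch is left out); `letterbox_geometry` is a hypothetical helper, and shapes are `(height, width)` as in the function above.

```python
def letterbox_geometry(shape, new_shape=(640, 640), auto=True, scaleup=True, stride=32):
    """Return the resized (width, height) and the (left, right, top, bottom) padding."""
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])   # scale ratio (new / old)
    if not scaleup:
        r = min(r, 1.0)                                         # only shrink, never enlarge
    new_unpad = (int(round(shape[1] * r)), int(round(shape[0] * r)))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]
    if auto:
        dw, dh = dw % stride, dh % stride                       # pad only to a stride multiple
    dw, dh = dw / 2, dh / 2                                     # split padding over two sides
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    return new_unpad, (left, right, top, bottom)


# A 1280x720 frame (height 720, width 1280) sent to a 640x640 network input
print(letterbox_geometry((720, 1280)))              # minimum-rectangle padding
print(letterbox_geometry((720, 1280), auto=False))  # pad to the full 640x640 square
```

With `auto=True` the frame becomes 640x384 instead of 640x640, which saves computation at inference time while keeping both sides divisible by the stride.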

# Random perspective transform

# Computed as the product of the coordinate vectors and the transformation matrix

def random_perspective(im, targets=(), segments=(), degrees=10, translate=.1, scale=.1, shear=10, perspective=0.0,

border=(0, 0)):

# torchvision.transforms.RandomAffine(degrees=(-10, 10), translate=(.1, .1), scale=(.9, 1.1), shear=(-10, 10))

# targets = [cls, xyxy]

height = im.shape[0] + border[0] * 2 # shape(h,w,c)

width = im.shape[1] + border[1] * 2

# Center

C = np.eye(3)

C[0, 2] = -im.shape[1] / 2 # x translation (pixels)

C[1, 2] = -im.shape[0] / 2 # y translation (pixels)

# Perspective

P = np.eye(3)

P[2, 0] = random.uniform(-perspective, perspective) # x perspective (about y)

P[2, 1] = random.uniform(-perspective, perspective) # y perspective (about x)

# Rotation and Scale

R = np.eye(3)

a = random.uniform(-degrees, degrees)

# a += random.choice([-180, -90, 0, 90]) # add 90deg rotations to small rotations

s = random.uniform(1 - scale, 1 + scale)

# s = 2 ** random.uniform(-scale, scale)

R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

# Shear

S = np.eye(3)

S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # x shear (deg)

S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # y shear (deg)

# Translation

T = np.eye(3)

T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width # x translation (pixels)

T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height # y translation (pixels)

# Combined rotation matrix

M = T @ S @ R @ P @ C # order of operations (right to left) is IMPORTANT

if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any(): # image changed

if perspective:

im = cv2.warpPerspective(im, M, dsize=(width, height), borderValue=(114, 114, 114))

else: # affine

im = cv2.warpAffine(im, M[:2], dsize=(width, height), borderValue=(114, 114, 114))

# Visualize

# import matplotlib.pyplot as plt

# ax = plt.subplots(1, 2, figsize=(12, 6))[1].ravel()

# ax[0].imshow(im[:, :, ::-1]) # base

# ax[1].imshow(im2[:, :, ::-1]) # warped

# Transform label coordinates

n = len(targets)

if n:

use_segments = any(x.any() for x in segments)

new = np.zeros((n, 4))

if use_segments: # warp segments

segments = resample_segments(segments) # upsample

for i, segment in enumerate(segments):

xy = np.ones((len(segment), 3))

xy[:, :2] = segment

xy = xy @ M.T # transform

xy = xy[:, :2] / xy[:, 2:3] if perspective else xy[:, :2] # perspective rescale or affine

# clip

new[i] = segment2box(xy, width, height)

else: # warp boxes

xy = np.ones((n * 4, 3))

xy[:, :2] = targets[:, [1, 2, 3, 4, 1, 4, 3, 2]].reshape(n * 4, 2) # x1y1, x2y2, x1y2, x2y1

xy = xy @ M.T # transform

xy = (xy[:, :2] / xy[:, 2:3] if perspective else xy[:, :2]).reshape(n, 8) # perspective rescale or affine

# create new boxes

x = xy[:, [0, 2, 4, 6]]

y = xy[:, [1, 3, 5, 7]]

new = np.concatenate((x.min(1), y.min(1), x.max(1), y.max(1))).reshape(4, n).T

# clip

new[:, [0, 2]] = new[:, [0, 2]].clip(0, width)

new[:, [1, 3]] = new[:, [1, 3]].clip(0, height)

# filter candidates

i = box_candidates(box1=targets[:, 1:5].T * s, box2=new.T, area_thr=0.01 if use_segments else 0.10)

targets = targets[i]

targets[:, 1:5] = new[i]

return im, targets

def copy_paste(im, labels, segments, p=0.5):

# Implement Copy-Paste augmentation https://arxiv.org/abs/2012.07177, labels as nx5 np.array(cls, xyxy)

n = len(segments)

if p and n:

h, w, c = im.shape # height, width, channels

im_new = np.zeros(im.shape, np.uint8)

for j in random.sample(range(n), k=round(p * n)):

l, s = labels[j], segments[j]

box = w - l[3], l[2], w - l[1], l[4]

ioa = bbox_ioa(box, labels[:, 1:5]) # intersection over area

if (ioa < 0.30).all(): # allow 30% obscuration of existing labels

labels = np.concatenate((labels, [[l[0], *box]]), 0)

segments.append(np.concatenate((w - s[:, 0:1], s[:, 1:2]), 1))

cv2.drawContours(im_new, [segments[j].astype(np.int32)], -1, (255, 255, 255), cv2.FILLED)

result = cv2.bitwise_and(src1=im, src2=im_new)

result = cv2.flip(result, 1) # augment segments (flip left-right)

i = result > 0 # pixels to replace

# i[:, :] = result.max(2).reshape(h, w, 1) # act over ch

im[i] = result[i] # cv2.imwrite('debug.jpg', im) # debug

return im, labels, segments

# Cutout augmentation: fill random regions with a random color

def cutout(im, labels, p=0.5):

# Applies image cutout augmentation https://arxiv.org/abs/1708.04552

if random.random() < p:

h, w = im.shape[:2]

scales = [0.5] * 1 + [0.25] * 2 + [0.125] * 4 + [0.0625] * 8 + [0.03125] * 16 # image size fraction

for s in scales:

mask_h = random.randint(1, int(h * s)) # create random masks

mask_w = random.randint(1, int(w * s))

# box

xmin = max(0, random.randint(0, w) - mask_w // 2)

ymin = max(0, random.randint(0, h) - mask_h // 2)

xmax = min(w, xmin + mask_w)

ymax = min(h, ymin + mask_h)

# apply random color mask

im[ymin:ymax, xmin:xmax] = [random.randint(64, 191) for _ in range(3)]

# return unobscured labels

if len(labels) and s > 0.03:

box = np.array([xmin, ymin, xmax, ymax], dtype=np.float32)

ioa = bbox_ioa(box, labels[:, 1:5]) # intersection over area

labels = labels[ioa < 0.60] # remove >60% obscured labels

return labels

def mixup(im, labels, im2, labels2):

# Applies MixUp augmentation https://arxiv.org/pdf/1710.09412.pdf

r = np.random.beta(32.0, 32.0) # mixup ratio, alpha=beta=32.0

im = (im * r + im2 * (1 - r)).astype(np.uint8)

labels = np.concatenate((labels, labels2), 0)

return im, labels

# Select the boxes that are still suitable for training after augmentation, based on thresholds

def box_candidates(box1, box2, wh_thr=2, ar_thr=20, area_thr=0.1, eps=1e-16): # box1(4,n), box2(4,n)

# Compute candidate boxes: box1 before augment, box2 after augment, wh_thr (pixels), aspect_ratio_thr, area_ratio

w1, h1 = box1[2] - box1[0], box1[3] - box1[1]

w2, h2 = box2[2] - box2[0], box2[3] - box2[1]

ar = np.maximum(w2 / (h2 + eps), h2 / (w2 + eps)) # aspect ratio

return (w2 > wh_thr) & (h2 > wh_thr) & (w2 * h2 / (w1 * h1 + eps) > area_thr) & (ar < ar_thr) # candidates
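`box_candidates` works on whole arrays, which hides the four separate tests it applies. Here is a scalar sketch of the same conditions for a single pair of boxes; `is_candidate` is a hypothetical name, and boxes are `(x1, y1, x2, y2)` tuples.

```python
def is_candidate(box1, box2, wh_thr=2, ar_thr=20, area_thr=0.1, eps=1e-16):
    """box1 is the box before augmentation, box2 the same box after augmentation."""
    w1, h1 = box1[2] - box1[0], box1[3] - box1[1]
    w2, h2 = box2[2] - box2[0], box2[3] - box2[1]
    ar = max(w2 / (h2 + eps), h2 / (w2 + eps))             # aspect ratio, always >= 1
    return (w2 > wh_thr and h2 > wh_thr                    # still wider/taller than 2 px
            and w2 * h2 / (w1 * h1 + eps) > area_thr       # kept more than 10% of its area
            and ar < ar_thr)                               # not an extreme sliver


original = (0, 0, 100, 100)
print(is_candidate(original, (0, 0, 50, 50)))             # kept: 25% of the area remains
print(is_candidate(original, (0, 0, 20, 20)))             # dropped: only 4% of the area
print(is_candidate((0, 0, 100, 10), (0, 0, 100, 4)))      # dropped: aspect ratio 25
```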

autoanchor.py: automatic anchor-box functions

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Auto-anchor utils

"""

import random

import numpy as np

import torch

import yaml

from tqdm import tqdm

from utils.general import colorstr

# Check that the anchor order matches the stride order

def check_anchor_order(m):

# Check anchor order against stride order for YOLOv5 Detect() module m, and correct if necessary

a = m.anchors.prod(-1).view(-1) # anchor area

da = a[-1] - a[0] # delta a

ds = m.stride[-1] - m.stride[0] # delta s

if da.sign() != ds.sign(): # same order

print('Reversing anchor order')

m.anchors[:] = m.anchors.flip(0)

# Check how well the anchors fit the dataset and recompute them if necessary

def check_anchors(dataset, model, thr=4.0, imgsz=640):

# Check anchor fit to data, recompute if necessary

prefix = colorstr('autoanchor: ')

print(f'\n{prefix}Analyzing anchors... ', end='')

m = model.module.model[-1] if hasattr(model, 'module') else model.model[-1] # Detect()

shapes = imgsz * dataset.shapes / dataset.shapes.max(1, keepdims=True)

scale = np.random.uniform(0.9, 1.1, size=(shapes.shape[0], 1)) # augment scale

wh = torch.tensor(np.concatenate([l[:, 3:5] * s for s, l in zip(shapes * scale, dataset.labels)])).float() # wh

def metric(k): # compute metric

r = wh[:, None] / k[None]

x = torch.min(r, 1. / r).min(2)[0] # ratio metric: take the minimum over the w and h ratios

best = x.max(1)[0] # best_x: for each of the n labels, take the maximum over the 9 anchors

aat = (x > 1. / thr).float().sum(1).mean() # anchors above threshold

bpr = (best > 1. / thr).float().mean() # best possible recall

return bpr, aat

anchors = m.anchors.clone() * m.stride.to(m.anchors.device).view(-1, 1, 1) # current anchors

bpr, aat = metric(anchors.cpu().view(-1, 2))

print(f'anchors/target = {aat:.2f}, Best Possible Recall (BPR) = {bpr:.4f}', end='')

if bpr < 0.98: # threshold to recompute: if BPR is below 0.98, cluster new anchors with k-means

print('. Attempting to improve anchors, please wait...')

na = m.anchors.numel() // 2 # number of anchors

try:

anchors = kmean_anchors(dataset, n=na, img_size=imgsz, thr=thr, gen=1000, verbose=False)

except Exception as e:

print(f'{prefix}ERROR: {e}')

new_bpr = metric(anchors)[0]

if new_bpr > bpr: # replace anchors

anchors = torch.tensor(anchors, device=m.anchors.device).type_as(m.anchors)

m.anchors[:] = anchors.clone().view_as(m.anchors) / m.stride.to(m.anchors.device).view(-1, 1, 1) # loss

check_anchor_order(m)

print(f'{prefix}New anchors saved to model. Update model *.yaml to use these anchors in the future.')

else:

print(f'{prefix}Original anchors better than new anchors. Proceeding with original anchors.')

print('') # newline
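The `metric` closure inside `check_anchors` can be re-expressed without tensors. The sketch below is a plain-Python version under the same definition (worst of the width and height ratios, each folded to at most 1); `ratio_metric` and the sample labels are made up for illustration.

```python
def ratio_metric(wh, anchors, thr=4.0):
    """Return (best possible recall, mean number of anchors above threshold per label)."""
    best_hits, above = 0, 0
    for w, h in wh:
        # One score per anchor: the worse of the two side ratios, each folded to <= 1
        x = [min(w / aw, aw / w, h / ah, ah / h) for aw, ah in anchors]
        above += sum(v > 1 / thr for v in x)
        best_hits += int(max(x) > 1 / thr)
    n = len(wh)
    return best_hits / n, above / n


anchors = [(10, 13), (33, 23), (116, 90)]
labels_wh = [(12, 15), (100, 80), (600, 10)]   # the last label is extremely elongated
bpr, aat = ratio_metric(labels_wh, anchors)
print(f"BPR = {bpr:.4f}, anchors/target = {aat:.2f}")
```

A label contributes to BPR when at least one anchor is within a factor of `thr` of it in both width and height, so the elongated third label is missed by every anchor here.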

# Run k-means on the label sizes to get anchors, then refine them with a genetic (evolutionary) algorithm

def kmean_anchors(dataset='./data/coco128.yaml', n=9, img_size=640, thr=4.0, gen=1000, verbose=True):

""" Creates kmeans-evolved anchors from training dataset

Arguments:

dataset: path to data.yaml, or a loaded dataset

n: number of anchors

img_size: image size used for training

thr: anchor-label wh ratio threshold hyperparameter hyp['anchor_t'] used for training, default=4.0

gen: generations to evolve anchors using genetic algorithm

verbose: print all results

Return:

k: kmeans evolved anchors

Usage:

from utils.autoanchor import *; _ = kmean_anchors()

"""

from scipy.cluster.vq import kmeans

thr = 1. / thr

prefix = colorstr('autoanchor: ')

def metric(k, wh): # compute metrics

r = wh[:, None] / k[None]

x = torch.min(r, 1. / r).min(2)[0] # ratio metric

# x = wh_iou(wh, torch.tensor(k)) # iou metric

return x, x.max(1)[0] # x, best_x

def anchor_fitness(k): # mutation fitness

_, best = metric(torch.tensor(k, dtype=torch.float32), wh)

return (best * (best > thr).float()).mean() # fitness

def print_results(k):

k = k[np.argsort(k.prod(1))] # sort small to large

x, best = metric(k, wh0)

bpr, aat = (best > thr).float().mean(), (x > thr).float().mean() * n # best possible recall, anch > thr

print(f'{prefix}thr={thr:.2f}: {bpr:.4f} best possible recall, {aat:.2f} anchors past thr')

print(f'{prefix}n={n}, img_size={img_size}, metric_all={x.mean():.3f}/{best.mean():.3f}-mean/best, '

f'past_thr={x[x > thr].mean():.3f}-mean: ', end='')

for i, x in enumerate(k):

print('%i,%i' % (round(x[0]), round(x[1])), end=', ' if i < len(k) - 1 else '\n') # use in *.cfg

return k

if isinstance(dataset, str): # *.yaml file

with open(dataset, errors='ignore') as f:

data_dict = yaml.safe_load(f) # model dict

from utils.datasets import LoadImagesAndLabels

dataset = LoadImagesAndLabels(data_dict['train'], augment=True, rect=True)

# Get label wh

shapes = img_size * dataset.shapes / dataset.shapes.max(1, keepdims=True)

wh0 = np.concatenate([l[:, 3:5] * s for s, l in zip(shapes, dataset.labels)]) # wh

# Filter

i = (wh0 < 3.0).any(1).sum()

if i:

print(f'{prefix}WARNING: Extremely small objects found. {i} of {len(wh0)} labels are < 3 pixels in size.')

wh = wh0[(wh0 >= 2.0).any(1)] # filter > 2 pixels

# wh = wh * (np.random.rand(wh.shape[0], 1) * 0.9 + 0.1) # multiply by random scale 0-1

# Kmeans calculation

print(f'{prefix}Running kmeans for {n} anchors on {len(wh)} points...')

s = wh.std(0) # sigmas for whitening

k, dist = kmeans(wh / s, n, iter=30) # points, mean distance; uses the kmeans function from scipy

assert len(k) == n, f'{prefix}ERROR: scipy.cluster.vq.kmeans requested {n} points but returned only {len(k)}'

k *= s

wh = torch.tensor(wh, dtype=torch.float32) # filtered

wh0 = torch.tensor(wh0, dtype=torch.float32) # unfiltered

k = print_results(k)

# Plot

# k, d = [None] * 20, [None] * 20

# for i in tqdm(range(1, 21)):

# k[i-1], d[i-1] = kmeans(wh / s, i) # points, mean distance

# fig, ax = plt.subplots(1, 2, figsize=(14, 7), tight_layout=True)

# ax = ax.ravel()

# ax[0].plot(np.arange(1, 21), np.array(d) ** 2, marker='.')

# fig, ax = plt.subplots(1, 2, figsize=(14, 7)) # plot wh

# ax[0].hist(wh[wh[:, 0]<100, 0],400)

# ax[1].hist(wh[wh[:, 1]<100, 1],400)

# fig.savefig('wh.png', dpi=200)

# Evolve

npr = np.random

f, sh, mp, s = anchor_fitness(k), k.shape, 0.9, 0.1 # fitness, generations, mutation prob, sigma

pbar = tqdm(range(gen), desc=f'{prefix}Evolving anchors with Genetic Algorithm:') # progress bar

for _ in pbar:

v = np.ones(sh)

while (v == 1).all(): # mutate until a change occurs (prevent duplicates)

v = ((npr.random(sh) < mp) * random.random() * npr.randn(*sh) * s + 1).clip(0.3, 3.0)

kg = (k.copy() * v).clip(min=2.0)

fg = anchor_fitness(kg)

if fg > f: # the mutated anchors have better fitness

f, k = fg, kg.copy() # keep the evolved anchor values

pbar.desc = f'{prefix}Evolving anchors with Genetic Algorithm: fitness = {f:.4f}'

if verbose:

print_results(k)

return print_results(k)
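The evolve loop at the end of `kmean_anchors` is a simple mutate-and-keep-if-better search. The sketch below shows that idea with the standard library only; it is not the original implementation (which uses NumPy and torch), and `fitness`, `evolve`, the seed and the sample data are assumptions made for illustration.

```python
import random


def fitness(anchors, wh, thr=0.25):
    """Mean best-anchor ratio over all labels, counting only ratios above thr."""
    total = 0.0
    for w, h in wh:
        best = max(min(w / aw, aw / w, h / ah, ah / h) for aw, ah in anchors)
        total += best if best > thr else 0.0
    return total / len(wh)


def evolve(anchors, wh, gen=200, mp=0.9, sigma=0.1, seed=0):
    rng = random.Random(seed)
    best, f = [tuple(a) for a in anchors], fitness(anchors, wh)
    for _ in range(gen):
        while True:   # mutate until at least one value changes
            scale = rng.random()   # one shared random magnitude per attempt
            v = [[min(3.0, max(0.3, (rng.random() < mp) * scale * rng.gauss(0, 1) * sigma + 1))
                  for _ in a] for a in best]
            if any(x != 1 for row in v for x in row):
                break
        cand = [tuple(max(2.0, c * m) for c, m in zip(a, row)) for a, row in zip(best, v)]
        fc = fitness(cand, wh)
        if fc > f:   # keep the mutation only when fitness improves
            best, f = cand, fc
    return best, f


start = [(10, 13), (33, 23), (116, 90)]
labels_wh = [(12, 15), (100, 80), (40, 30), (200, 150)]
evolved, f_new = evolve(start, labels_wh)
print(fitness(start, labels_wh), f_new)
```

Because a candidate is accepted only when its fitness is strictly higher, the fitness never decreases from one generation to the next.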

callbacks.py

datasets.py: functions for reading and processing datasets

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Dataloaders and dataset utils

"""

import glob

import hashlib

import json

import logging

import os

import random

import shutil

import time

from itertools import repeat

from multiprocessing.pool import ThreadPool, Pool

from pathlib import Path

from threading import Thread

from zipfile import ZipFile

import cv2

import numpy as np

import torch

import torch.nn.functional as F

import yaml

from PIL import Image, ExifTags

from torch.utils.data import Dataset

from tqdm import tqdm

from utils.augmentations import Albumentations, augment_hsv, copy_paste, letterbox, mixup, random_perspective

from utils.general import check_dataset, check_requirements, check_yaml, clean_str, segments2boxes, \

xywh2xyxy, xywhn2xyxy, xyxy2xywhn, xyn2xy

from utils.torch_utils import torch_distributed_zero_first

# Parameters

HELP_URL = 'https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data'

# Image formats

IMG_FORMATS = ['bmp', 'jpg', 'jpeg', 'png', 'tif', 'tiff', 'dng', 'webp', 'mpo'] # acceptable image suffixes

# Video formats

VID_FORMATS = ['mov', 'avi', 'mp4', 'mpg', 'mpeg', 'm4v', 'wmv', 'mkv'] # acceptable video suffixes

NUM_THREADS = min(8, os.cpu_count()) # number of multiprocessing threads

# Get orientation exif tag

# EXIF metadata is designed specifically for digital-camera photos

for orientation in ExifTags.TAGS.keys():

if ExifTags.TAGS[orientation] == 'Orientation':

break

# Return a hash value for a list of files

def get_hash(paths):

# Returns a single hash value of a list of paths (files or dirs)

size = sum(os.path.getsize(p) for p in paths if os.path.exists(p)) # sizes

h = hashlib.md5(str(size).encode()) # hash sizes

h.update(''.join(paths).encode()) # hash paths

return h.hexdigest() # return hash
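`get_hash` is what lets the `.cache` file detect that a dataset changed. The sketch below copies the function as shown above and exercises it on a temporary file; the file name and label contents are made up for the demonstration.

```python
import hashlib
import os
import tempfile


def get_hash(paths):
    # Same logic as above: hash the total size of the files plus the joined path strings
    size = sum(os.path.getsize(p) for p in paths if os.path.exists(p))
    h = hashlib.md5(str(size).encode())
    h.update(''.join(paths).encode())
    return h.hexdigest()


with tempfile.TemporaryDirectory() as d:
    label = os.path.join(d, 'label.txt')
    with open(label, 'w') as f:
        f.write('0 0.5 0.5 0.2 0.2\n')
    before = get_hash([label])
    with open(label, 'a') as f:
        f.write('1 0.1 0.1 0.05 0.05\n')   # adding a box changes the file size
    after = get_hash([label])

print(before != after)
```

Since only sizes and paths are hashed, an edit that leaves every file size unchanged would not alter the hash.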

# Get the image width and height, corrected for EXIF orientation

def exif_size(img):

# Returns exif-corrected PIL size

s = img.size # (width, height)

try:

rotation = dict(img._getexif().items())[orientation] # account for the orientation of digital-camera photos

if rotation == 6: # rotation 270

s = (s[1], s[0])

elif rotation == 8: # rotation 90

s = (s[1], s[0])

except:

pass

return s

def exif_transpose(image):

"""

Transpose a PIL image accordingly if it has an EXIF Orientation tag.

From https://github.com/python-pillow/Pillow/blob/master/src/PIL/ImageOps.py

:param image: The image to transpose.

:return: An image.

"""

exif = image.getexif()

orientation = exif.get(0x0112, 1) # default 1

if orientation > 1:

method = {2: Image.FLIP_LEFT_RIGHT,

3: Image.ROTATE_180,

4: Image.FLIP_TOP_BOTTOM,

5: Image.TRANSPOSE,

6: Image.ROTATE_270,

7: Image.TRANSVERSE,

8: Image.ROTATE_90,

}.get(orientation)

if method is not None:

image = image.transpose(method)

del exif[0x0112]

image.info["exif"] = exif.tobytes()

return image

def create_dataloader(path, imgsz, batch_size, stride, single_cls=False, hyp=None, augment=False, cache=False, pad=0.0,

rect=False, rank=-1, workers=8, image_weights=False, quad=False, prefix=''):

# Make sure only the first process in DDP process the dataset first, and the following others can use the cache

with torch_distributed_zero_first(rank):

dataset = LoadImagesAndLabels(path, imgsz, batch_size,

augment=augment, # augment images

hyp=hyp, # augmentation hyperparameters

rect=rect, # rectangular training

cache_images=cache,

single_cls=single_cls,

stride=int(stride),

pad=pad,

image_weights=image_weights,

prefix=prefix)

batch_size = min(batch_size, len(dataset))

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, workers]) # number of workers

sampler = torch.utils.data.distributed.DistributedSampler(dataset) if rank != -1 else None

loader = torch.utils.data.DataLoader if image_weights else InfiniteDataLoader

# Use torch.utils.data.DataLoader() if dataset.properties will update during training else InfiniteDataLoader()

dataloader = loader(dataset,

batch_size=batch_size,

num_workers=0,

sampler=sampler,

pin_memory=True,

collate_fn=LoadImagesAndLabels.collate_fn4 if quad else LoadImagesAndLabels.collate_fn)

return dataloader, dataset

class InfiniteDataLoader(torch.utils.data.dataloader.DataLoader):

""" Dataloader that reuses workers

Uses same syntax as vanilla DataLoader

"""

def __init__(self, *args, **kwargs):

super().__init__(*args, **kwargs)

object.__setattr__(self, 'batch_sampler', _RepeatSampler(self.batch_sampler))

self.iterator = super().__iter__()

def __len__(self):

return len(self.batch_sampler.sampler)

def __iter__(self):

for i in range(len(self)):

yield next(self.iterator)

class _RepeatSampler(object):

""" Sampler that repeats forever

Args:

sampler (Sampler)

"""

def __init__(self, sampler):

self.sampler = sampler

def __iter__(self):

while True:

yield from iter(self.sampler)
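The trick behind `InfiniteDataLoader` is that `_RepeatSampler` never raises `StopIteration`, so the underlying iterator (and its workers) can be reused across epochs. The sketch below shows the sampler on its own with a plain list standing in for a real batch sampler; the class is a copy of the idea above and `batches` is made-up data.

```python
from itertools import islice


class RepeatSampler:
    """Wraps any iterable sampler and restarts it every time it is exhausted."""

    def __init__(self, sampler):
        self.sampler = sampler

    def __iter__(self):
        while True:
            yield from iter(self.sampler)


batches = [[0, 1], [2, 3], [4]]          # stand-in for the output of a batch sampler
stream = iter(RepeatSampler(batches))    # created once and never exhausted

first_epoch = list(islice(stream, len(batches)))
second_epoch = list(islice(stream, len(batches)))
print(first_epoch, second_epoch)
```

This is why `InfiniteDataLoader.__iter__` draws exactly `len(self)` items per epoch: the epoch boundary has to be imposed from outside.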

# Iterator used by detect.py for images and videos

class LoadImages:

# YOLOv5 image/video dataloader, i.e. `python detect.py --source image.jpg/vid.mp4`

def __init__(self, path, img_size=640, stride=32, auto=True):

p = str(Path(path).resolve()) # os-agnostic absolute path

# Use glob pattern matching to collect the image and video file paths

if '*' in p:

files = sorted(glob.glob(p, recursive=True)) # glob

elif os.path.isdir(p):

files = sorted(glob.glob(os.path.join(p, '*.*'))) # dir

elif os.path.isfile(p):

files = [p] # files

else:

raise Exception(f'ERROR: {p} does not exist')

# Extract the image and video file paths

images = [x for x in files if x.split('.')[-1].lower() in IMG_FORMATS]

videos = [x for x in files if x.split('.')[-1].lower() in VID_FORMATS]

# Count the images and videos

ni, nv = len(images), len(videos)

self.img_size = img_size # image size

self.stride = stride

self.files = images + videos # merge into a single list

self.nf = ni + nv # number of files

self.video_flag = [False] * ni + [True] * nv

# Initialize the mode; images and videos are handled differently

self.mode = 'image'

self.auto = auto

if any(videos): # if there are video files, initialize the OpenCV video capture

self.new_video(videos[0]) # new video

else:

self.cap = None

# Fail with a helpful message if no supported files were found

assert self.nf > 0, f'No images or videos found in {p}. ' \

f'Supported formats are:\nimages: {IMG_FORMATS}\nvideos: {VID_FORMATS}'

def __iter__(self):

self.count = 0

return self

def __next__(self):

if self.count == self.nf: # all files have been read

raise StopIteration

path = self.files[self.count]

if self.video_flag[self.count]: # the current file is a video

# Read video

self.mode = 'video'

ret_val, img0 = self.cap.read()

if not ret_val:

self.count += 1

self.cap.release() # release the capture object

if self.count == self.nf: # last video

raise StopIteration

else:

path = self.files[self.count]

self.new_video(path)

ret_val, img0 = self.cap.read()

self.frame += 1

print(f'video {self.count + 1}/{self.nf} ({self.frame}/{self.frames}) {path}: ', end='')

else:

# Read image

self.count += 1

img0 = cv2.imread(path) # BGR

assert img0 is not None, 'Image Not Found ' + path

print(f'image {self.count}/{self.nf} {path}: ', end='')

# Padded resize

img = letterbox(img0, self.img_size, stride=self.stride, auto=self.auto)[0]

# Convert

img = img.transpose((2, 0, 1))[::-1] # HWC to CHW, BGR to RGB

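img = np.ascontiguousarray(img) # make the array contiguous in memory, which speeds up later processing

return path, img, img0, self.cap # return the path, the resized and padded image, the original image, and the video capture object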

def new_video(self, path):

self.frame = 0 # current frame counter

self.cap = cv2.VideoCapture(path) # initialize the video capture object

self.frames = int(self.cap.get(cv2.CAP_PROP_FRAME_COUNT)) # total number of frames

def __len__(self):

return self.nf # number of files
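The bookkeeping in `LoadImages.__init__` comes down to sorting paths by suffix and remembering which entries are videos. The sketch below isolates that step; the two format lists are copied from the top of this file, while `split_sources` and the sample file names are hypothetical.

```python
IMG_FORMATS = ['bmp', 'jpg', 'jpeg', 'png', 'tif', 'tiff', 'dng', 'webp', 'mpo']
VID_FORMATS = ['mov', 'avi', 'mp4', 'mpg', 'mpeg', 'm4v', 'wmv', 'mkv']


def split_sources(files):
    """Return the ordered file list (images first) and a parallel is-video flag list."""
    images = [x for x in files if x.split('.')[-1].lower() in IMG_FORMATS]
    videos = [x for x in files if x.split('.')[-1].lower() in VID_FORMATS]
    return images + videos, [False] * len(images) + [True] * len(videos)


files, video_flag = split_sources(['a.JPG', 'clip.mp4', 'notes.txt', 'b.png'])
print(files)
print(video_flag)
```

Unsupported files such as `notes.txt` are silently skipped, and the suffix comparison is case-insensitive, so `a.JPG` is still treated as an image.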

# Unused

class LoadWebcam: # for inference

# YOLOv5 local webcam dataloader, i.e. `python detect.py --source 0`

def __init__(self, pipe='0', img_size=640, stride=32):

# self.mode = 'cap'

self.img_size = img_size

self.stride = stride

self.pipe = eval(pipe) if pipe.isnumeric() else pipe

self.cap = cv2.VideoCapture(self.pipe) # video capture object

self.cap.set(cv2.CAP_PROP_BUFFERSIZE, 3) # set buffer size

def __iter__(self):

self.count = -1

return self

def __next__(self):

self.count += 1

if cv2.waitKey(1) == ord('q'): # q to quit

self.cap.release()

cv2.destroyAllWindows()

raise StopIteration

# Read frame

ret_val, img0 = self.cap.read()

img0 = cv2.flip(img0, 1) # flip left-right

# Print

assert ret_val, f'Camera Error {self.pipe}'

img_path = 'webcam.jpg'

print(f'webcam {self.count}: ', end='')

# Padded resize

img = letterbox(img0, self.img_size, stride=self.stride)[0]

# Convert

img = img.transpose((2, 0, 1))[::-1] # HWC to CHW, BGR to RGB

img = np.ascontiguousarray(img)

return img_path, img, img0, self.cap

def __len__(self):

return 0

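# Iterator used by detect.py to handle camera streams

'''

cv2 video functions:

cap.grab() grabs the next frame of the video and returns True/False

cap.retrieve() is used after grab(); it decodes the grabbed frame and returns a success flag together with the image

cap.read() combines grab() and retrieve(): it grabs the next frame and decodes it

'''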

class LoadStreams:

# Multiple IP or RTSP cameras

# YOLOv5 streamloader, i.e. `python detect.py --source 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP streams`

def __init__(self, sources='streams.txt', img_size=640, stride=32, auto=True):

self.mode = 'stream'

self.img_size = img_size

self.stride = stride

# If sources is a file that lists several video streams,

# read each stream address from it into a list

if os.path.isfile(sources):

with open(sources, 'r') as f:

sources = [x.strip() for x in f.read().strip().splitlines() if len(x.strip())]

else:

sources = [sources]

n = len(sources)

self.imgs, self.fps, self.frames, self.threads = [None] * n, [0] * n, [0] * n, [None] * n

self.sources = [clean_str(x) for x in sources] # clean source names for later (one per video stream)

self.auto = auto

for i, s in enumerate(sources): # index, source

# Start thread to read frames from video stream

print(f'{i + 1}/{n}: {s}... ', end='') # print the current stream index, the total number of streams, and the stream address

if 'youtube.com/' in str(s) or 'youtu.be/' in str(s): # if source is YouTube video

check_requirements(('pafy', 'youtube_dl'))

import pafy

s = pafy.new(s).getbest(preftype="mp4").url # YouTube URL

s = eval(s) if s.isnumeric() else s # i.e. s = '0' local webcam; a numeric source opens that camera, anything else is opened as a stream address

cap = cv2.VideoCapture(s)

assert cap.isOpened(), f'Failed to open {s}'

w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)) # frame width (the height follows)

h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

self.fps[i] = max(cap.get(cv2.CAP_PROP_FPS) % 100, 0) or 30.0 # stream frame rate, with a 30 FPS fallback

self.frames[i] = max(int(cap.get(cv2.CAP_PROP_FRAME_COUNT)), 0) or float('inf') # infinite stream fallback

_, self.imgs[i] = cap.read() # guarantee first frame

# Read frames in a separate thread; daemon=True makes it a daemon thread

self.threads[i] = Thread(target=self.update, args=([i, cap, s]), daemon=True)

print(f" success ({self.frames[i]} frames {w}x{h} at {self.fps[i]:.2f} FPS)")

self.threads[i].start()

print('') # newline

# check for common shapes

# Get the shapes after resize + pad; by default letterbox pads for rectangular inference

s = np.stack([letterbox(x, self.img_size, stride=self.stride, auto=self.auto)[0].shape for x in self.imgs])

self.rect = np.unique(s, axis=0).shape[0] == 1 # rect inference if all shapes equal

if not self.rect: # warn when the streams have different shapes

print('WARNING: Different stream shapes detected. For optimal performance supply similarly-shaped streams.')

def update(self, i, cap, stream):

# Read stream `i` frames in daemon thread

n, f, read = 0, self.frames[i], 1 # frame number, total frame count, decode every 'read'-th frame

while cap.isOpened() and n < f:

n += 1

# _, self.imgs[index] = cap.read()

cap.grab()

if n % read == 0:

success, im = cap.retrieve()

if success:

self.imgs[i] = im

else:

print('WARNING: Video stream unresponsive, please check your IP camera connection.')

self.imgs[i] *= 0

cap.open(stream) # re-open stream if signal was lost

time.sleep(1 / self.fps[i]) # wait time

def __iter__(self):

self.count = -1

return self

def __next__(self):

self.count += 1

# Stop when any reader thread has died or q is pressed

if not all(x.is_alive() for x in self.threads) or cv2.waitKey(1) == ord('q'): # q to quit

cv2.destroyAllWindows()

raise StopIteration

# Letterbox

img0 = self.imgs.copy()

# letterbox resizes and pads each image

img = [letterbox(x, self.img_size, stride=self.stride, auto=self.rect and self.auto)[0] for x in img0]

# Stack

# Combine the letterboxed images into a single batch array

img = np.stack(img, 0)

# Convert

img = img[..., ::-1].transpose((0, 3, 1, 2)) # BGR to RGB, BHWC to BCHW

img = np.ascontiguousarray(img)

return self.sources, img, img0, None

def __len__(self):

return len(self.sources) # 1E12 frames = 32 streams at 30 FPS for 30 years
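Two details of `LoadStreams.__init__` are easy to miss: `sources` may be either a text file of addresses or a single address, and the `... or default` idiom replaces a reported value of 0 with a fallback. The sketch below isolates both. The helper names `parse_sources`, `fps_or_default` and `frames_or_inf` are hypothetical and do not exist in YOLOv5, and the stream addresses are placeholders.

```python
import os
import tempfile


def parse_sources(sources):
    """Return a list of stream addresses from a file path or a single address."""
    if os.path.isfile(sources):
        with open(sources, 'r') as f:
            # One address per line; blank lines are skipped.
            return [x.strip() for x in f.read().strip().splitlines() if len(x.strip())]
    return [sources]


def fps_or_default(reported_fps, default=30.0):
    # A reported 0 FPS is falsy, so `or` substitutes the default.
    return max(reported_fps % 100, 0) or default


def frames_or_inf(reported_count):
    # Live streams often report 0 or a negative frame count; treat them as endless.
    return max(int(reported_count), 0) or float('inf')


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, 'streams.txt')
    with open(path, 'w') as f:
        f.write('rtsp://example.com/a\n\n  rtsp://example.com/b  \n')
    from_file = parse_sources(path)

single = parse_sources('rtsp://example.com/media.mp4')
print(from_file)
print(single, fps_or_default(0.0), fps_or_default(25.0), frames_or_inf(-1))
```

The `% 100` in the FPS expression also folds implausibly large reported rates back into the range 0 to 100, so a reported 125 FPS becomes 25.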

# Return the label file (.txt) path for each image path

def img2label_paths(img_paths):

# Define label paths as a function of image paths

sa, sb = os.sep + 'images' + os.sep, os.sep + 'labels' + os.sep # /images/, /labels/ substrings

return [sb.join(x.rsplit(sa, 1)).rsplit('.', 1)[0] + '.txt' for x in img_paths]
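To see what `img2label_paths` produces, the function can be run on a couple of made-up paths. The sketch below repeats the function so that it runs on its own; the dataset paths are hypothetical and are built with `os.path.join` so that they use the same separator as `os.sep`.

```python
import os


def img2label_paths(img_paths):
    # Same logic as the function above, repeated so this sketch is self-contained.
    sa, sb = os.sep + 'images' + os.sep, os.sep + 'labels' + os.sep
    return [sb.join(x.rsplit(sa, 1)).rsplit('.', 1)[0] + '.txt' for x in img_paths]


# Hypothetical dataset paths.
imgs = [
    os.path.join('dataset', 'images', 'train', 'cat.001.jpg'),
    os.path.join('images_backup', 'images', 'val', 'dog.png'),
]
labels = img2label_paths(imgs)
print(labels)
```

Only the last `/images/` directory component is swapped for `/labels/` (because of `rsplit(sa, 1)`), and only the final extension is replaced, so `cat.001.jpg` maps to `cat.001.txt`. This is why the dataset has to keep `images` and `labels` as parallel folders.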

# Custom dataset; overrides the __len__ and __getitem__ methods

class LoadImagesAndLabels(Dataset):

# YOLOv5 train_loader/val_loader, loads images and labels for training and validation

cache_version = 0.6 # dataset labels *.cache version

def __init__(self, path, img_size=640, batch_size=16, augment=False, hyp=None, rect=False, image_weights=False,

cache_images=False, single_cls=False, stride=32, pad=0.0, prefix=''):

self.img_size = img_size # input image size

self.augment = augment # whether to apply data augmentation

self.hyp = hyp # hyperparameters

self.image_weights = image_weights # whether to sample images by weight

self.rect = False if image_weights else rect # rectangular training

# Mosaic augmentation

self.mosaic = self.augment and not self.rect # load 4 images at a time into a mosaic (only during training)

# Mosaic border offsets

self.mosaic_border = [-img_size // 2, -img_size // 2]

self.stride = stride # downsampling stride of the model

self.path = path

self.albumentations = Albumentations() if augment else None

try:

f = [] # image files

for p in path if isinstance(path, list) else [path]:

# Dataset path: either a folder of images or a txt file that lists image paths

p = Path(p) # os-agnostic

if p.is_dir(): # dir

f += glob.glob(str(p / '**' / '*.*'), recursive=True) # collect every matching file path

# f = list(p.rglob('**/*.*')) # pathlib

elif p.is_file(): # file

with open(p, 'r') as t:

t = t.read().strip().splitlines() # read the image paths, one per line

parent = str(p.parent) + os.sep # parent directory; os.sep is the platform path separator (\ on Windows, / on Linux and macOS)

f += [x.replace('./', parent) if x.startswith('./') else x for x in t] # local to global path

# f += [p.parent / x.lstrip(os.sep) for x in t] # local to global path (pathlib)

else:

raise Exception(f'{prefix}{p} does not exist')

# Keep only supported image formats and sort the paths

self.img_files = sorted([x.replace('/', os.sep) for x in f if x.split('.')[-1].lower() in IMG_FORMATS])

# self.img_files = sorted([x for x in f if x.suffix[1:].lower() in img_formats]) # pathlib

assert self.img_files, f'{prefix}No images found'

except Exception as e:

raise Exception(f'{prefix}Error loading data from {path}: {e}\nSee {HELP_URL}')

# Check cache

self.label_files = img2label_paths(self.img_files) # label file paths

cache_path = (p if p.is_file() else Path(self.label_files[0]).parent).with_suffix('.cache') # label cache file, so the labels are not re-parsed on every run

try:

cache, exists = np.load(cache_path, allow_pickle=True).item(), True # load dict

assert cache['version'] == self.cache_version # same version

assert cache['hash'] == get_hash(self.label_files + self.img_files) # same hash

except Exception: # cache missing, wrong version, or stale hash: rebuild it

cache, exists = self.cache_labels(cache_path, prefix), False # cache

# Display cache

nf, nm, ne, nc, n = cache.pop('results') # found, missing, empty, corrupted, total

if exists:

d = f"Scanning '{cache_path}' images and labels... {nf} found, {nm} missing, {ne} empty, {nc} corrupted"

tqdm(None, desc=prefix + d, total=n, initial=n) # display cache results

if cache['msgs']:

logging.info('\n'.join(cache['msgs'])) # display warnings

assert nf > 0 or not augment, f'{prefix}No labels in {cache_path}. Can not train without labels. See {HELP_URL}'

# Read cache

[cache.pop(k) for k in ('hash', 'version', 'msgs')] # remove items

labels, shapes, self.segments = zip(*cache.values()) # unzip the cache values

self.labels = list(labels)

self.shapes = np.array(shapes, dtype=np.float64)

# Update the image and label paths from the cache keys

self.img_files = list(cache.keys()) # update

self.label_files = img2label_paths(cache.keys()) # update

n = len(shapes) # number of images

bi = np.floor(np.arange(n) / batch_size).astype(int) # batch index (np.int was removed in NumPy 1.24)

nb = bi[-1] + 1 # number of batches

self.batch = bi # batch index of image

self.n = n

self.indices = range(n)
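The batch index `bi` assigns each image the number of the batch it falls into, and `nb` follows from the last entry. A minimal pure-Python sketch of the same arithmetic, assuming nothing beyond the formula above (the helper name `batch_indices` is made up for illustration):

```python
def batch_indices(n, batch_size):
    """Batch index of each of n images, matching floor(arange(n) / batch_size)."""
    return [i // batch_size for i in range(n)]


bi = batch_indices(10, 4)
nb = bi[-1] + 1  # number of batches
print(bi, nb)  # [0, 0, 0, 0, 1, 1, 1, 1, 2, 2] 3
```

With 10 images and a batch size of 4, the last batch holds only 2 images. Rectangular training later uses `bi == i` to pick out the images of batch `i`.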

# Update labels

include_class = [] # filter labels to include only these classes (optional)

include_class_array = np.array(include_class).reshape(1, -1)

for i, (label, segment) in enumerate(zip(self.labels, self.segments)):

if include_class:

j = (label[:, 0:1] == include_class_array).any(1)

self.labels[i] = label[j]

if segment:

self.segments[i] = segment[j]

if single_cls: # single-class training, merge all classes into 0

self.labels[i][:, 0] = 0

if segment:

self.segments[i][:, 0] = 0

# Rectangular Training

if self.rect:

# Sort by aspect ratio

s = self.shapes # wh

ar = s[:, 1] / s[:, 0] # aspect ratio

irect = ar.argsort()

self.img_files = [self.img_files[i] for i in irect]

self.label_files = [self.label_files[i] for i in irect]

self.labels = [self.labels[i] for i in irect]

self.shapes = s[irect] # wh

ar = ar[irect]

# Set training image shapes

shapes = [[1, 1]] * nb

for i in range(nb):

ari = ar[bi == i]