Background

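The previous article, 【Tools】Neural Network, Deep Learning and Machine Learning Model Visualization Tool: Netron, introduced Netron. After writing it, I wondered whether other visualization tools could do the same job, such as TensorBoard. A quick search online confirmed that it can, so this post shows how.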

Model Visualization with TensorBoard

Alternatively, the model from the earlier article can be visualized with TensorBoard. The code is as follows:

```python
import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter


class TextCNN(nn.Module):
    def __init__(self):
        super().__init__()
        vocab_size = 10000
        embedding_dim = 128
        filter_number = 60
        output_size = 10
        kernel_list = [3, 4, 5]
        max_length = 200
        drop_out = 0.5

        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        # One Conv1d -> LeakyReLU -> MaxPool1d branch per kernel size;
        # the pooling kernel spans the whole conv output, leaving length 1
        self.convs = nn.ModuleList([
            nn.Sequential(
                nn.Conv1d(in_channels=embedding_dim, out_channels=filter_number,
                          kernel_size=kernel),
                nn.LeakyReLU(),
                nn.MaxPool1d(kernel_size=max_length - kernel + 1),
            )
            for kernel in kernel_list
        ])
        self.fc = nn.Linear(filter_number * len(kernel_list), output_size)
        self.dropout = nn.Dropout(drop_out)

    def forward(self, x):
        x = self.embedding(x)                    # [batch, 200, 128]
        x = x.permute(0, 2, 1)                   # [batch, 128, 200]: Conv1d expects channels first
        out = [conv(x) for conv in self.convs]   # each branch: [batch, 60, 1]
        out = torch.cat(out, dim=1)              # [batch, 180, 1]: branches concatenated along channels
        out = out.view(x.size(0), -1)            # flatten to [batch, 180]
        out = self.fc(out)                       # output: [batch, 10]
        out = self.dropout(out)                  # dropout layer
        return out


# Initialize the model
model = TextCNN()
# One sample of 200 token ids (shape [1, 200]), matching max_length
inputs = torch.tensor([[1, 2, 3, 4, 5] * 40], dtype=torch.long)

with SummaryWriter() as writer:
    writer.add_graph(model=model, input_to_model=inputs, verbose=False)
```

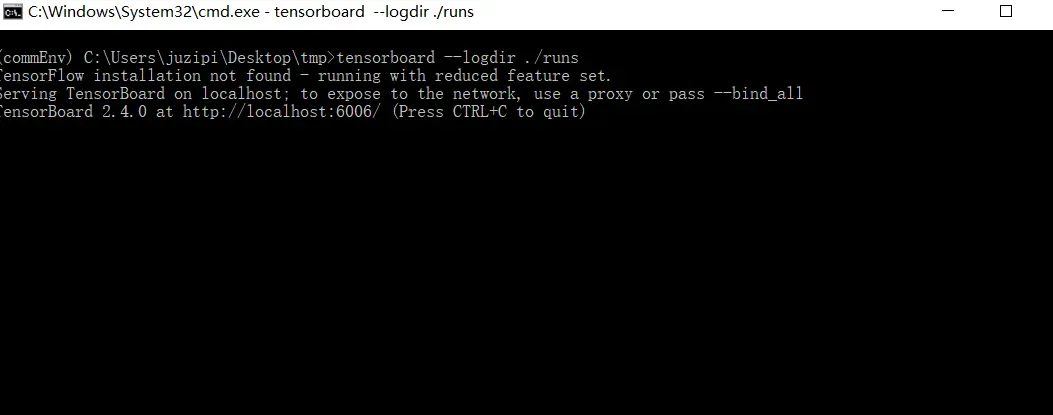
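The shape comments in the code follow from the pooling design: a Conv1d with kernel size k turns a length-200 sequence into length 200 - k + 1, and a MaxPool1d whose kernel equals that length leaves a single position per filter. The sketch below rebuilds only the embedding and convolution branches with the same hyperparameters to check this; the random batch of one sequence is an assumption for illustration, not part of the original code.

```python
import torch
import torch.nn as nn

# Same hyperparameters as TextCNN; the random input is assumed for illustration
vocab_size, embedding_dim, filter_number, max_length = 10000, 128, 60, 200
kernel_list = [3, 4, 5]

embedding = nn.Embedding(vocab_size, embedding_dim)
tokens = torch.randint(0, vocab_size, (1, max_length))  # [batch=1, seq_len=200]
x = embedding(tokens).permute(0, 2, 1)                   # [1, 128, 200]

conv_lengths = []
outs = []
for k in kernel_list:
    conv = nn.Conv1d(embedding_dim, filter_number, kernel_size=k)
    pool = nn.MaxPool1d(kernel_size=max_length - k + 1)
    c = conv(x)                  # length shrinks to max_length - k + 1
    p = pool(c)                  # pooling over the whole length leaves 1 position
    conv_lengths.append(c.shape[-1])
    outs.append(p)
    print(f"kernel={k}: conv {tuple(c.shape)} -> pool {tuple(p.shape)}")

merged = torch.cat(outs, dim=1)             # 3 branches x 60 filters = 180 channels
flat = merged.view(merged.size(0), -1)      # what self.fc receives
print(merged.shape, flat.shape)             # torch.Size([1, 180, 1]) torch.Size([1, 180])
```

This is also why self.fc is declared as nn.Linear(filter_number * len(kernel_list), output_size), i.e. 180 inputs.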

After the code runs, a folder named runs is created by default. Then run `tensorboard --logdir ./runs` in a terminal, and you will see output similar to the following:

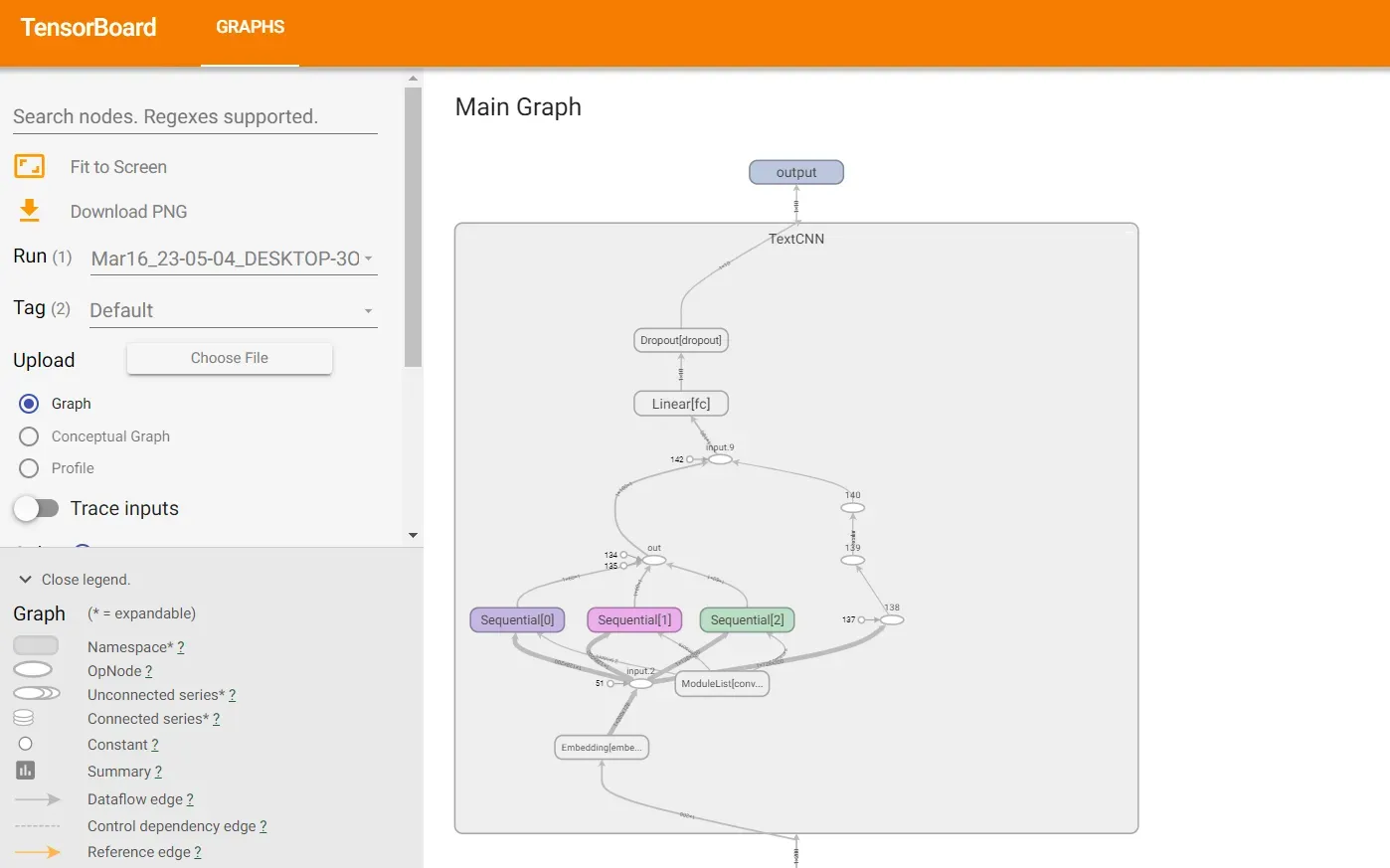

Then open the URL it prints (http://localhost:6006 by default) in a browser to view the graph.

Click the individual modules in the Graphs tab to expand them and inspect the model's detailed structure.
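Called with no arguments, SummaryWriter() writes to a timestamped subfolder under runs/, so every run gets a new, hard-to-read folder name. If you prefer a fixed name, you can pass log_dir yourself. A minimal sketch, assuming a hypothetical run name runs/textcnn_graph and a small stand-in model; in practice you would pass the TextCNN model and its inputs instead.

```python
import os

import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter

log_dir = os.path.join("runs", "textcnn_graph")  # hypothetical run name

# Small stand-in model; replace with TextCNN() and its token-id input
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 2))
example = torch.randn(1, 8)

with SummaryWriter(log_dir=log_dir) as writer:
    writer.add_graph(model, example)

# The graph is stored in an event file inside log_dir
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```

Running `tensorboard --logdir ./runs` afterwards lists each subfolder of runs as a separate run, so several models can be compared side by side.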

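Note that input_to_model must be an example of the model's actual input: a tensor (or a tuple of tensors) that forward() accepts, because add_graph traces the model by running this input through it. In the code above it is a LongTensor of token ids with shape [1, 200]. The length matters here: the MaxPool1d kernel sizes are derived from max_length = 200, so a shorter input fails at the pooling layers, and a much longer one changes the flattened size that self.fc expects.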
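When forward() takes more than one argument, the example inputs can be passed as a tuple, which is unpacked into the positional arguments during tracing. A minimal sketch, assuming a hypothetical two-input model (TwoInputNet is not from the original article) and a hypothetical run name; checking that an ordinary forward call works first makes tracing errors easier to diagnose.

```python
import os

import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter


class TwoInputNet(nn.Module):
    """Hypothetical model with two inputs, used only to illustrate add_graph."""

    def __init__(self):
        super().__init__()
        self.text_fc = nn.Linear(16, 8)
        self.meta_fc = nn.Linear(4, 8)
        self.head = nn.Linear(16, 2)

    def forward(self, text_feat, meta_feat):
        a = torch.relu(self.text_fc(text_feat))
        b = torch.relu(self.meta_fc(meta_feat))
        return self.head(torch.cat([a, b], dim=1))


model = TwoInputNet()
text_feat = torch.randn(2, 16)
meta_feat = torch.randn(2, 4)

# Sanity check: the example inputs must work in a normal forward call
out = model(text_feat, meta_feat)
print(out.shape)  # torch.Size([2, 2])

log_dir = os.path.join("runs", "two_input_graph")  # hypothetical run name
with SummaryWriter(log_dir=log_dir) as writer:
    # The tuple is unpacked into forward(text_feat, meta_feat) while tracing
    writer.add_graph(model, input_to_model=(text_feat, meta_feat))
```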
