论文链接:https://arxiv.org/abs/2003.10152

代码链接:https://github.com/WXinlong/SOLO

1. 环境搭建

- 除了mmcv单独安装,其他用:

pip3 install requirements.txt

- 安装mmcv:solo的代码用mmdet v1.0.0,搭配的是mmcv 0.2.16(有人用将mmdet替换成openlab-mmdetection官网最新mmcv,希望这样可以更换mmcv为最新的,是不可行的!所以这样就必须用mmcv 0.2.16了)

- 会通过以下方式报错:

- pip install mmcv-full==0.2.16 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.9.0/index.html

- 所以采用先下载mmcv 0.2.16代码,再编译:

- git clone -b v0.2.16 https://github.com/open-mmlab/mmcv.git

- cd mmcv

- pip install -e .

- 将代码添加到环境变量中:(**是你自己的文件路径)

- export PYTHONPATH=$PYTHONPATH:**/SOLO-master

2. 修改配置文件

- SOLO-master/mmdet/core/evaluation/class_names.py

def coco_classes():

return [

'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train',

'truck', 'boat', 'traffic_light', 'fire_hydrant', 'stop_sign',

'parking_meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep',

'cow', 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella',

'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard',

'sports_ball', 'kite', 'baseball_bat', 'baseball_glove', 'skateboard',

'surfboard', 'tennis_racket', 'bottle', 'wine_glass', 'cup', 'fork',

'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange',

'broccoli', 'carrot', 'hot_dog', 'pizza', 'donut', 'cake', 'chair',

'couch', 'potted_plant', 'bed', 'dining_table', 'toilet', 'tv',

'laptop', 'mouse', 'remote', 'keyboard', 'cell_phone', 'microwave',

'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase',

'scissors', 'teddy_bear', 'hair_drier', 'toothbrush'

]

修改你自己的类别:

def coco_classes():

return [

'park',

]

- SOLO-master/mmdet/datasets/coco.py

@DATASETS.register_module

class CocoDataset(CustomDataset):

CLASSES = ('person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',

'train', 'truck', 'boat', 'traffic_light', 'fire_hydrant',

'stop_sign', 'parking_meter', 'bench', 'bird', 'cat', 'dog',

'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe',

'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports_ball', 'kite', 'baseball_bat',

'baseball_glove', 'skateboard', 'surfboard', 'tennis_racket',

'bottle', 'wine_glass', 'cup', 'fork', 'knife', 'spoon', 'bowl',

'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot',

'hot_dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted_plant', 'bed', 'dining_table', 'toilet', 'tv', 'laptop',

'mouse', 'remote', 'keyboard', 'cell_phone', 'microwave',

'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock',

'vase', 'scissors', 'teddy_bear', 'hair_drier', 'toothbrush')

变成:

@DATASETS.register_module

class CocoDataset(CustomDataset):

CLASSES = ('park',)

- SOLO-master/configs/solov2/solov2_x101_dcn_fpn_8gpu_3x.py:

- 修改权重 resnext101_64x4d-ee2c6f71.pth 和 SOLOv2_X101_DCN_3x.pth 路径

- model中 bbox_head 的 num_classes 改为自己分类数+1(背景)

- model中 mask_feat_head = w*h*s^2

- 修改 train 、 val 、 test 的 ann_file 和 img_prefix 路径

- 添加 evaluation = dict(interval=1) :每1个 epoch 对 val 数据测试(但是在验证阶段,显存会增加,a100,40G在训练时用的32G,验证时直接爆了,我加了 with torch.no_grad(): ,在验证阶段不反向传播,还是爆了,有知道怎么弄了评论区告诉我一下!),代码中**是自己的路径

- 修改文件保存路径 work_dir

# model settings

model = dict(

type='SOLOv2',

# pretrained='open-mmlab://resnext101_64x4d',

pretrained='pre_model/resnext101_64x4d-ee2c6f71.pth', # 改为自己的权重路径

backbone=dict(

type='ResNeXt',

depth=101,

groups=64,

base_width=4,

num_stages=4,

out_indices=(0, 1, 2, 3),

frozen_stages=1,

style='pytorch',

dcn=dict(

type='DCNv2',

deformable_groups=1,

fallback_on_stride=False),

stage_with_dcn=(False, True, True, True)),

neck=dict(

type='FPN',

in_channels=[256, 512, 1024, 2048],

out_channels=256,

start_level=0,

num_outs=5),

bbox_head=dict(

type='SOLOv2Head',

# num_classes=81,

num_classes=2,

in_channels=256,

stacked_convs=4,

use_dcn_in_tower=True,

type_dcn='DCNv2',

seg_feat_channels=512,

strides=[8, 8, 16, 32, 32],

scale_ranges=((1, 96), (48, 192), (96, 384), (192, 768), (384, 2048)),

sigma=0.2,

num_grids=[40, 36, 24, 16, 12],

ins_out_channels=256,

loss_ins=dict(

type='DiceLoss',

use_sigmoid=True,

loss_weight=3.0),

loss_cate=dict(

type='FocalLoss',

use_sigmoid=True,

gamma=2.0,

alpha=0.25,

loss_weight=1.0)),

mask_feat_head=dict(

type='MaskFeatHead',

in_channels=256,

out_channels=128,

start_level=0,

end_level=3,

num_classes=256,

# num_classes=1,

conv_cfg=dict(type='DCNv2'),

norm_cfg=dict(type='GN', num_groups=32, requires_grad=True)),

)

# training and testing settings

train_cfg = dict()

test_cfg = dict(

nms_pre=500,

score_thr=0.1,

mask_thr=0.5,

update_thr=0.05,

kernel='gaussian', # gaussian/linear

sigma=2.0,

max_per_img=100)

# dataset settings

dataset_type = 'CocoDataset'

# data_root = '/home/wenjie.yuan/Cow/cow_Baseline/cow_datasets/train_dataset/200/'

data_root = '/home/wenjie.yuan/Ins/Zero-shot-Instance-Segmentation-main/data/coco/'

img_norm_cfg = dict(

mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [

dict(type='LoadImageFromFile'),

dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

dict(type='Resize',

img_scale=[(1333, 800), (1333, 768), (1333, 736),

(1333, 704), (1333, 672), (1333, 640)],

# img_scale=[(4096, 800), (4096, 1000)],

multiscale_mode='value',

keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(type='Normalize', **img_norm_cfg),

dict(type='Pad', size_pisor=32),

dict(type='DefaultFormatBundle'),

dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

]

test_pipeline = [

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

# img_scale=(4096, 800),

# img_scale=[(4096, 800), (4096, 900),(4096, 1000)],

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip'),

dict(type='Normalize', **img_norm_cfg),

dict(type='Pad', size_pisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img']),

])

]

data = dict(

imgs_per_gpu=8,

workers_per_gpu=0,

train=dict(

type=dataset_type,

ann_file='**/SOLO-master/train_flfr_200000_0318.json',

img_prefix='**/dataset/flfr/images',

pipeline=train_pipeline),

val=dict(

type=dataset_type,

ann_file='**/SOLO-master/val_flfr_2500_0318.json',

img_prefix='**/dataset/flfr/images',

pipeline=test_pipeline),

test=dict(

type=dataset_type,

ann_file='**/SOLO-master/val_flfr_2500_0318.json',

img_prefix='**/dataset/flfr/images',

pipeline=test_pipeline))

# evaluation = dict(metric=['bbox','segm'], interval=1)

evaluation = dict(interval=1)

# optimizer

optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0001)

optimizer_config = dict(grad_clip=dict(max_norm=35, norm_type=2))

# learning policy

lr_config = dict(

policy='step',

warmup='linear',

warmup_iters=500,

warmup_ratio=0.01,

step=[27, 33])

checkpoint_config = dict(interval=12)

# yapf:disable

log_config = dict(

# interval=50,

interval=10,

hooks=[

dict(type='TextLoggerHook'),

# dict(type='TensorboardLoggerHook')

])

# yapf:enable

# runtime settings

total_epochs = 36

device_ids = range(8)

dist_params = dict(backend='nccl')

log_level = 'INFO'

work_dir = './work_dirs/solov2_release_x101_dcn_fpn_8gpu_3x_batch8_train200000'

load_from = './pre_model/SOLOv2_X101_DCN_3x.pth'

resume_from = None

workflow = [('train', 1)]

3. 下载权重

- resnext101_64x4d-ee2c6f71.pth

官网没找到,从一篇博客中找到的: resnext101_64x4d-ee2c6f71.pth - SOLOv2_X101_DCN_3x

- 去官网 https://github.com/WXinlong/SOLO 下载

4. 自定义数据集转COCO格式

- 参考链接: https://zhuanlan.zhihu.com/p/56881826

- 我的数据格式:

- array类型,里面每个元素是一张图片

- 每个图片里面所有 segmentation 放在一个 array 里面, bbox 也一样,按照coco格式,是要

- coco格式注意点:

- image的 id 和 annotation 的 image_id 号一致

- annotation的 id 在不同图片里面也要不一样,即每个 bbox 的 ground true 必须不一样,我用 i*100+j 把每张图片的 id 区分开(即每个图片里 bbox 不超过100即可,我试了20是还会重复的),特别重要!因为一样的情况代码mmdet v1.0.0代码没有提示错误,会继续运行,但是运行时间很短,一定要注意!

- coco的 image 和 annotation 每个元素代表什么如下:

image{

"id" : int, # 图像id,可从0开始

"width" : int, # 图像的宽

"height" : int, # 图像的高

"file_name" : str, # 文件名

"license" : int, # 遵循哪个协议

"flickr_url" : str, # flickr图片链接url

"coco_url" : str, # COCO图片链接url

"date_captured" : datetime, # 获取数据的日期

}

annotation{

"id" : int, # 注释id编号

"image_id" : int, # 图像id编号

"category_id" : int, # 类别id编号

"segmentation" : RLE or [polygon], # 分割具体数据

"area" : float, # 目标检测的区域大小

"bbox" : [x,y,width,height], # 目标检测框的坐标详细位置信息

"iscrowd" : 0 or 1, # 目标是否被遮盖,默认为0

}

categories[{

"id" : int, # 类别id编号

"name" : str, # 类别名字

"supercategory" : str, # 类别所属的大类,如哈巴狗和狐狸犬都属于犬科这个大类

}]

- 改写后的代码如下:

# *_* : coding: utf-8 *_*

'''

datasets process for object detection project.

for convert customer dataset format to coco data format,

'''

import traceback

import argparse

import datetime

import json

import cv2

import os

import numpy as np

__CLASS__ = ['__background__', 'park'] # class dictionary, background must be in first index.

def argparser():

parser = argparse.ArgumentParser("define argument parser for pycococreator!")

parser.add_argument("-r", "--root_path", default="/**/dataset/flfr", help="path of root directory")

parser.add_argument("-p", "--json_path", default='/**/SOLO-master/train_flfr_0105.json', help="datasets path ")

parser.add_argument("-s", "--save_path", default='/**/SOLO-master/train_flfr_10000_0317.json', help="save path ")

return parser.parse_args()

def MainProcessing(args):

'''main process source code.'''

annotations = {} # annotations dictionary, which will dump to json format file.

root_path = args.root_path

json_path = args.json_path

save_path = args.save_path

# coco annotations info.

annotations["info"] = {

"description": "customer dataset format convert to COCO format",

"url": "/share/parking/pony.pan/dataset/flfr",

"version": "1.0",

"year": 2022,

"contributor": "wenjie.yuan",

"date_created": "2022/03/17"

}

# coco annotations licenses.

annotations["licenses"] = [{

"url": "https://www.apache.org/licenses/LICENSE-2.0.html",

"id": 1,

"name": "Apache License 2.0"

}]

# coco annotations categories.

annotations["categories"] = []

for cls, clsname in enumerate(__CLASS__):

if clsname == '__background__':

continue

annotations["categories"].append(

{

"supercategory": "object",

"id": cls,

"name": clsname

}

)

annotations["images"] = []

annotations["annotations"] = []

with open(json_path, 'r') as f:

json_ = json.load(f)

# for i in range(len(json_)):

# for i in range(10000):

for i in range(200000):

tmp = json_[i]

id = i

annotations["images"].append(

{

"license": 1,

"file_name":tmp['filename'].split('/')[-1],

"coco_url": "",

"height": tmp['height'],

"width": tmp['width'],

"date_captured": datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'),

"flickr_url": "",

"id": id

}

)

for j in range(len(tmp['ann']['kpts'])):

annotations["annotations"].append(

{

"id": i*100+j,

"image_id": id,

# "category_id": cls+1,

"category_id": 1,

# "segmentation": np.array(tmp['ann']['kpts'][j])[[True,True, False,True,True, False,True,True, False,True,True, False]].tolist(),

"segmentation": [[tmp['ann']['kpts'][j][0], tmp['ann']['kpts'][j][1], tmp['ann']['kpts'][j][3], tmp['ann']['kpts'][j][4], tmp['ann']['kpts'][j][6], tmp['ann']['kpts'][j][7], tmp['ann']['kpts'][j][9], tmp['ann']['kpts'][j][10]]],

"area": tmp['ann']['areas'][j],

"bbox": tmp['ann']['bboxes'][j],

"iscrowd": 0,

}

)

with open(save_path, "w") as f:

json.dump(annotations, f)

if __name__ == "__main__":

print("begining to convert customer format to coco format!")

args = argparser()

try:

MainProcessing(args)

except Exception as e:

traceback.print_exc()

print("successful to convert customer format to coco format")

4. 训练&测试

- 8卡训练:

- bash tools/dist_train.sh solov2_x101_dcn_fpn_8gpu_3x.py 8

- 在训练期间验证:

- bash tools/dist_train.sh solov2_x101_dcn_fpn_8gpu_3x.py 8 –validate

- 预测和可视化

- python tools/test_ins_vis.py solov2_x101_dcn_fpn_8gpu_3x.py work_dirs/solov2_release_x101_dcn_fpn_8gpu_3x_batch8_train10000_job/epoch_36.pth –show –save_dir ./out_file

- 8卡评估(单卡太慢了):

- ./tools/dist_test.sh solov2_x101_dcn_fpn_8gpu_3x.py work_dirs/solov2_release_x101_dcn_fpn_8gpu_3x/epoch_36.pth 8 –show –out results_solo.pkl –eval segm

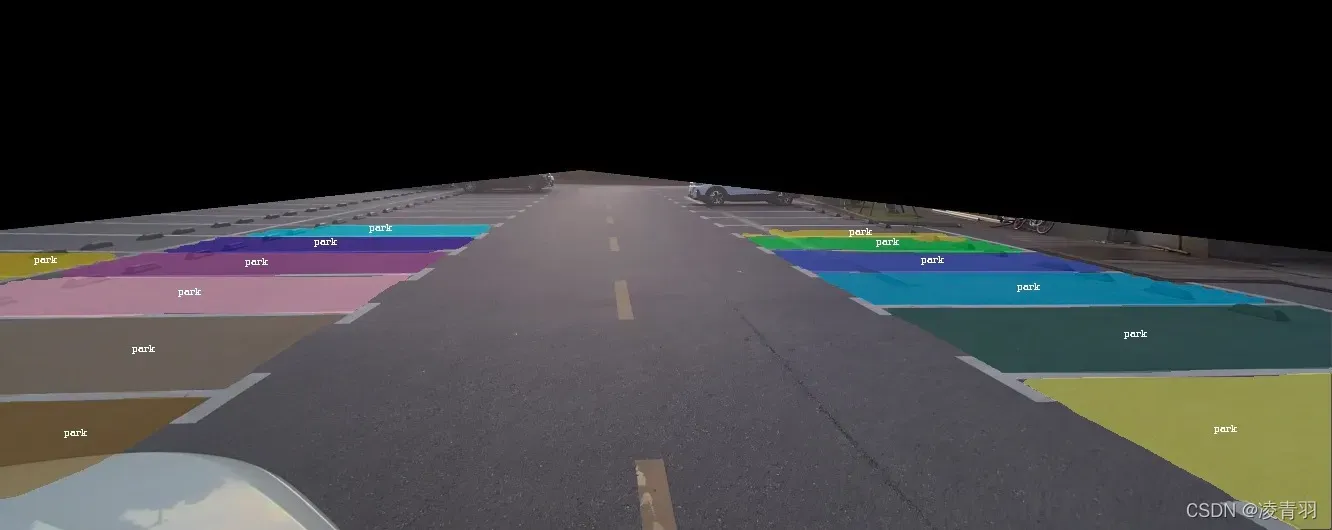

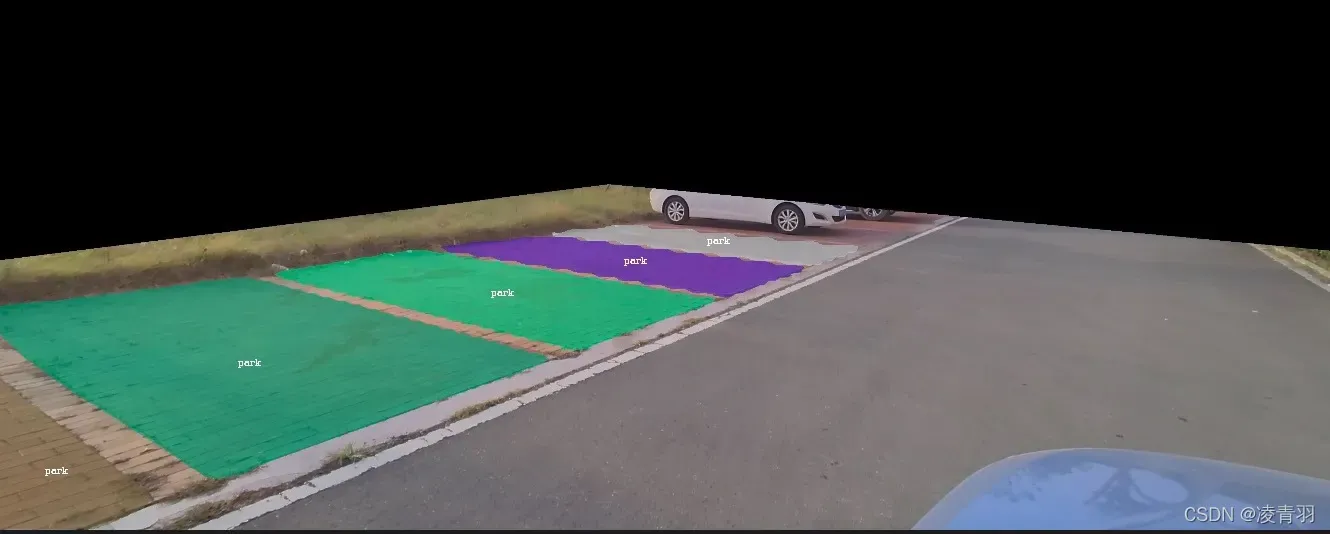

5.实验结果

6. 遇到的Bug

- AssertionError: MMCV==0.2.16 is used but incompatible. Please install mmcv>=1.3.8, <=1.4.0.

- 原因:不用安装mmcv 1.3.8,本代码就是用的MMCV==0.2.16,是因为环境变量没加进去的原因

- 办法: export PYTHONPATH=$PYTHONPATH:**/SOLO-master

文章出处登录后可见!

已经登录?立即刷新