最近要在地平线旭日x3上部署yolov8,但是模型后处理过程地平线官方并没有给例程,所以自己研究一下,由于地平线的模型量化只支持onnx中opset10/11的模型,所以转化过程要设置opset为11。在default.yaml文件中配置输出onnx,opset11,导出onnx模型。

在我自己的电脑上进行了onnx本地cpu推理,大概是50ms一帧,也就是20帧左右,下面介绍yolov8后处理的debug过程:

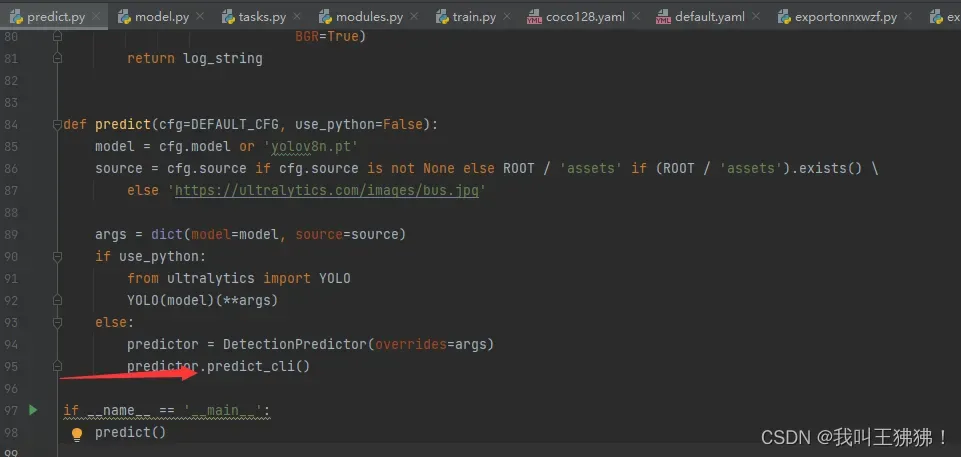

1.首先从predict_cli这个函数开始

2.在1之后进入到stream_inference函数(推理)中:

在默认超参数设置函数中的setup_model用来完成辨别后端模型是哪种形式

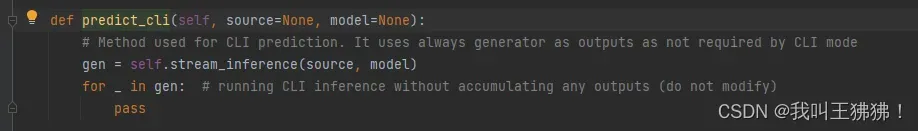

3.进入setup_source

check_imgsz检查图片大小是否符合,然后load_inference_source处理推理源数据

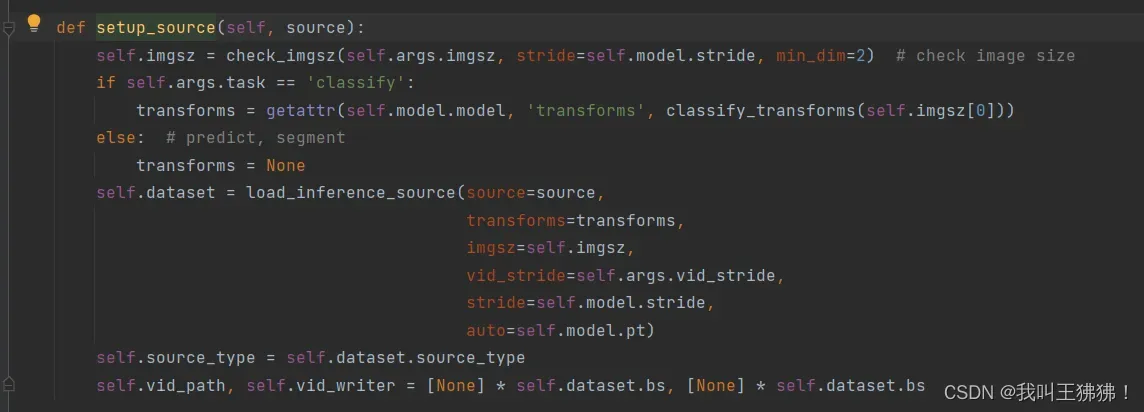

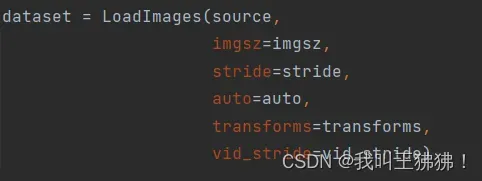

然后是LoadImages类,这个类中使用了LetterBox,LetterBox的作用是将输入的图像调整为指定大小(`imgsz`参数),并在图像周围添加黑色背景以保持纵横比。

这段代码首先使用stack函数将由LetterBox函数处理后的多张图像的形状组合成一个新的数组 s。然后,使用np.unique函数找到数组s中独特的行,并获取其行数。如果独特行的数量为1,那么说明所有图像的形状都相同,可以进行矩形推断(rect inference)。这段代码的作用是计算数据集中所有图像的形状,并确定是否可以进行矩形推断。此外,它还存储数据集的变换和大小。

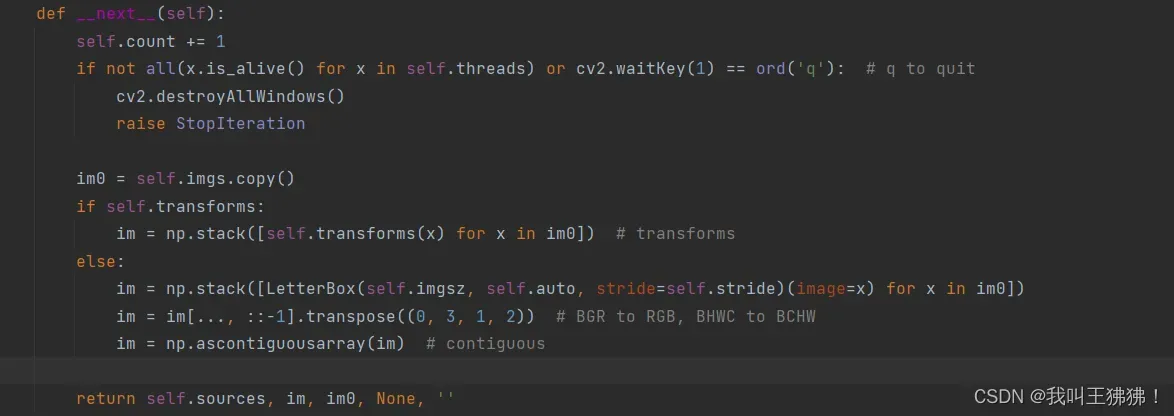

transforms为None,所以在每次迭代时将使用LetterBox函数对每个图像都使用LetterBox函数进行调整,并将它们堆叠到一个新的数组im中。然后,将im数组中的所有图像从 BGR 格式转换为 RGB 格式,并将它们的维度从 BHWC (批次,高度,宽度,通道)转换为 BCHW (批次,通道,高度,宽度)。

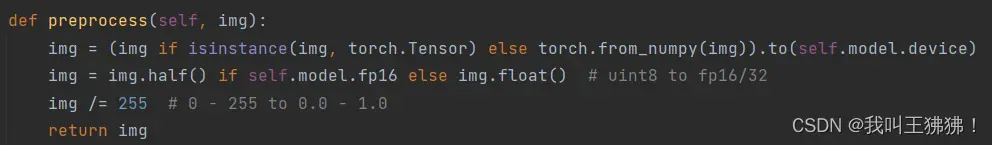

4.进行输入onnx之前的预处理,即preprocess

对数据进行了归一化处理,未进行半精度。

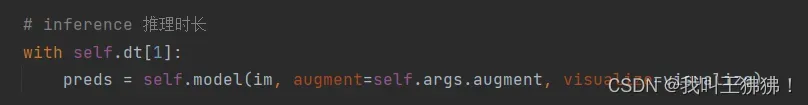

5.inference

输出的形状是1*84*8400,这里使用的是coco128的数据集,类别是80个类

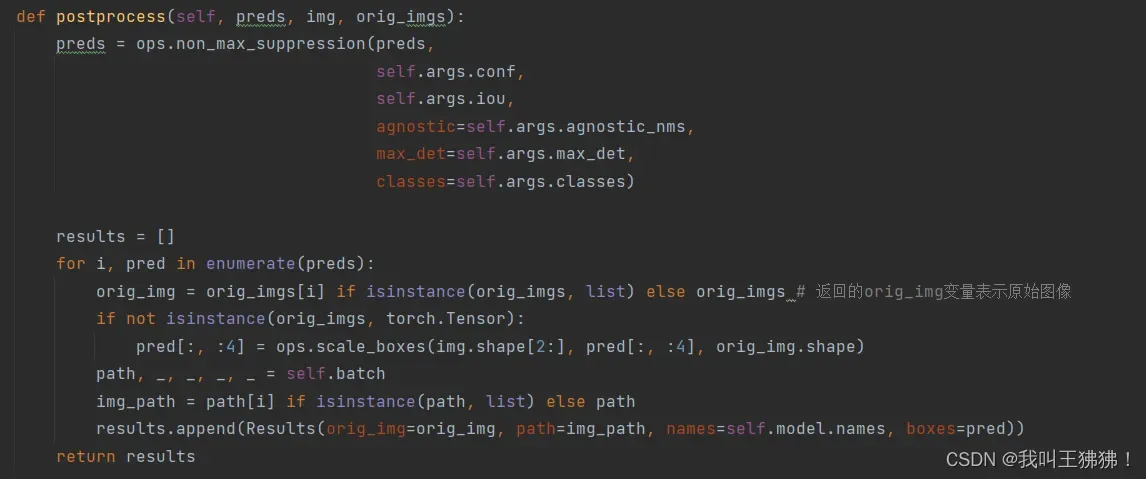

6.进入本文主题,后处理postprocess

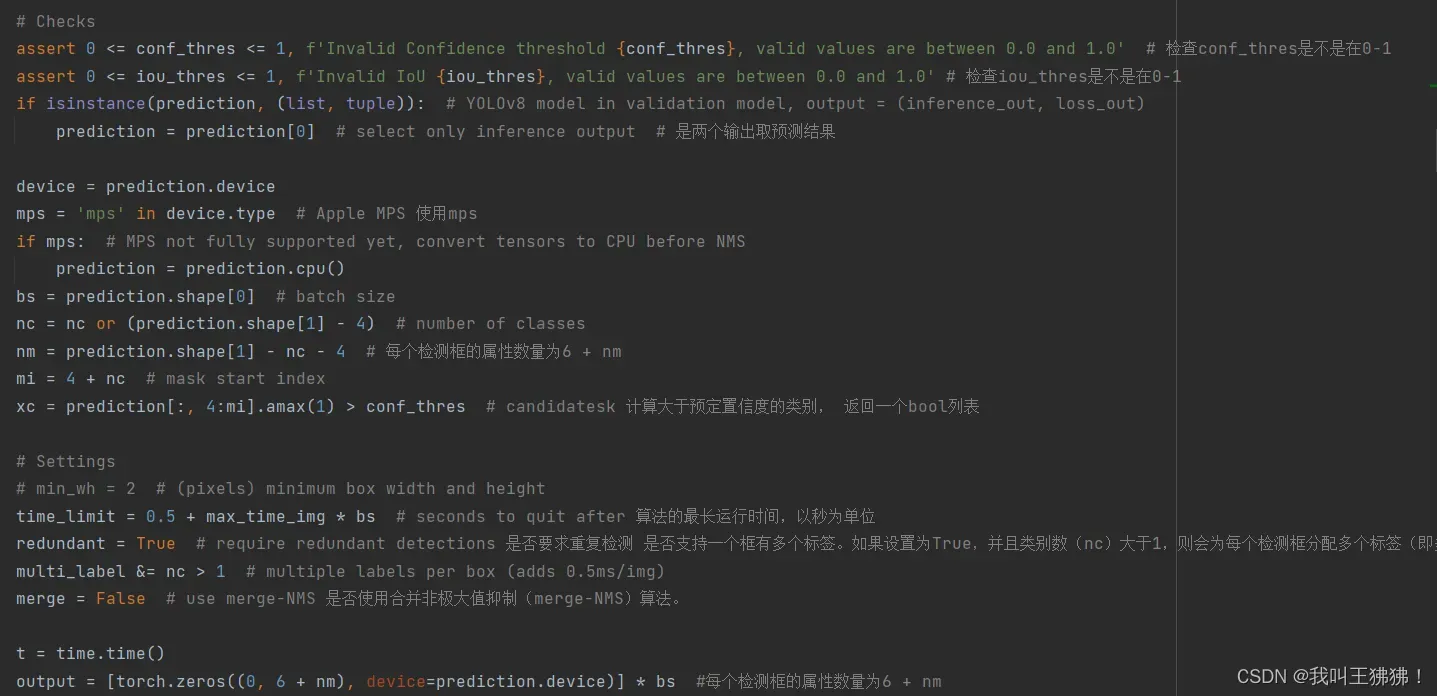

(1)调用了ops代码中的non_max_suppression方法

对于模型输出一般是两个,一个是预测结果,一个是损失函数输出结果,所以在perdiction中选择了第一维,还有一些变量记录:

nc:类数

nm:每个矩形框的属性数量

mi:属性起始位置

xc:返回的一个1*8400的Tensor,bool型的,表示该检测框是否满足置信度阈值的要求

output:输出每个检测框的属性。

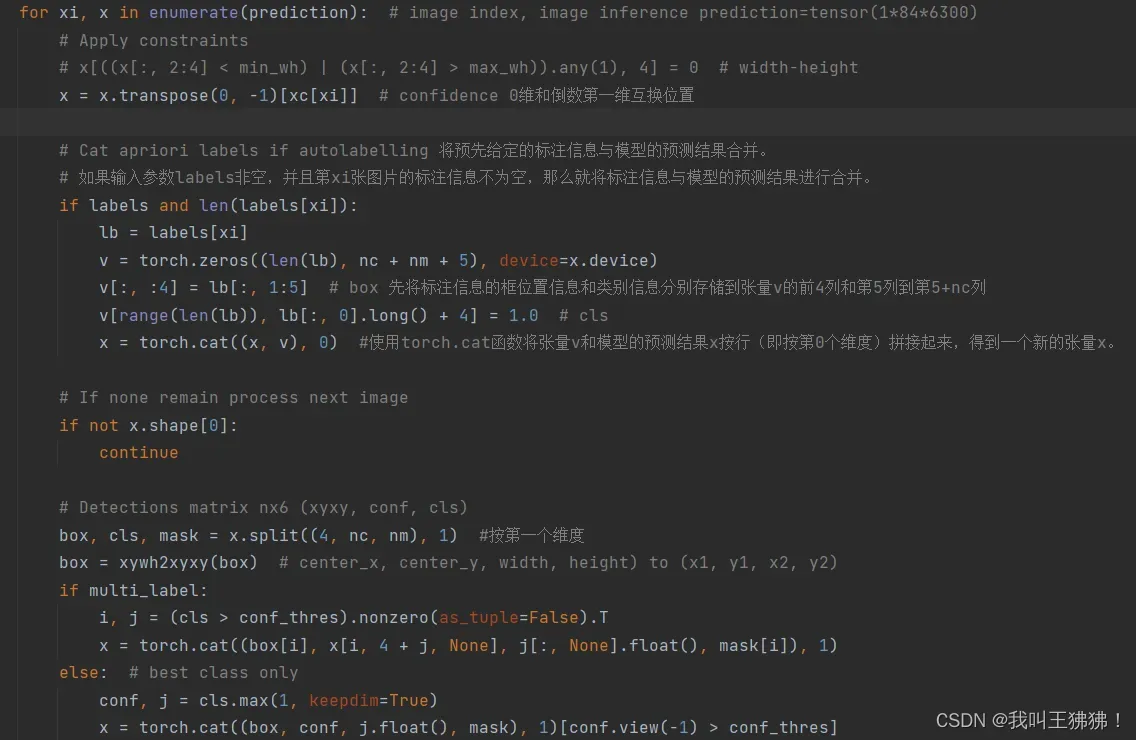

(2)开始对预测结果进行处理,xi为索引,x为84*8400的tensor

以自带的bus.jpg为例,模型输出找到了48个符合conf的目标框,于是x变成了48*84的tensor

将x切片,在84中,切成了4(box),80(cls),0(mask)

在xywhxyxy函数中,将box的四个元素从x,y(中心点),框的宽高转为了(x1,y1),(x2,y2),multi_label是false,所以返回了置信度最大的类的索引和置信度,然后将box

(48*4),conf(48*1),j(48*1),mask(48*0)进行一维拼接得到一个(48*6)的tensor

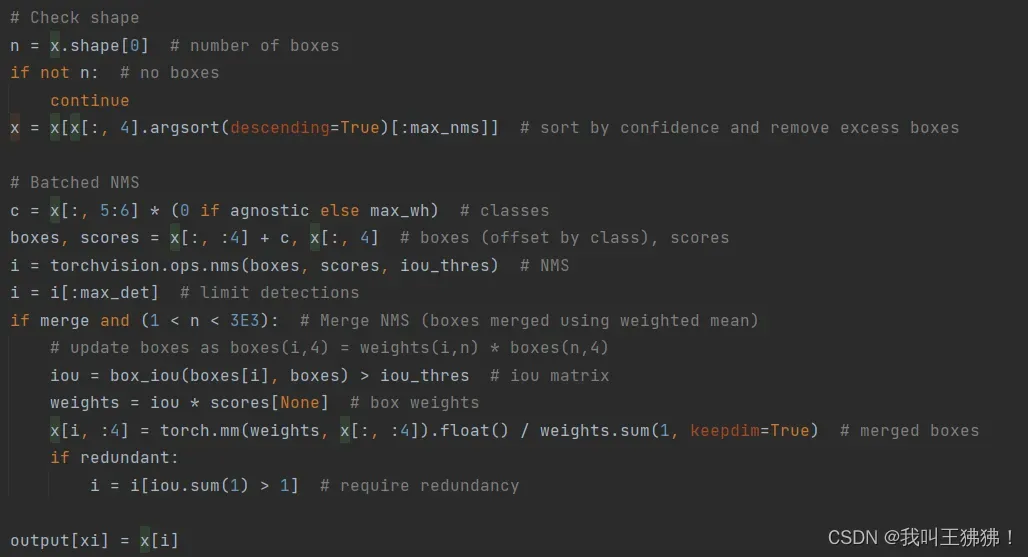

n是框的数量,然后对框进行排序(降序),选超参数中设置的max_nms个框,默认为300,最后x仍然是一个(48*6)的tensor,然后对着48个框进行对应类别的conf计算,max=wh表示加入框的大小时对score的影响,最后返回的c是一个(48*1)

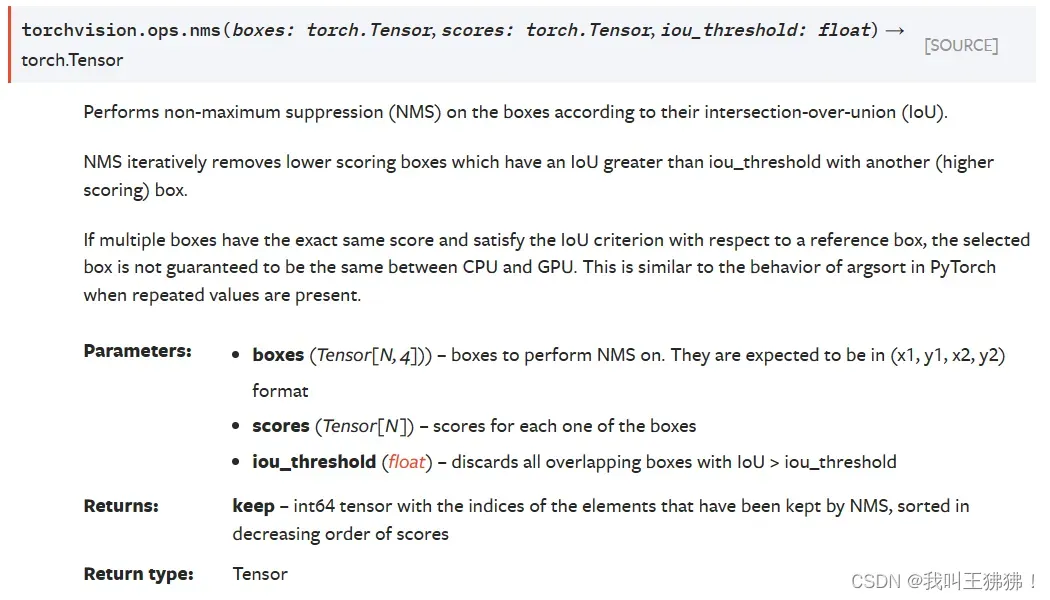

(3)使用了torchvision中的nms的方法,返回了一个剩下的检测框的索引tensor->i,在本次debug中i里面有5个索引,所以是剩下了5个框,具体nms的过程:

①首先,根据模型预测的边界框,计算每个边界框的置信度得分。

②接着,从置信度得分最高的边界框开始,按照置信度得分从高到低的顺序对所有边界框进行排序。

③然后,选择置信度得分最高的边界框,并将其添加到输出列表中。

④对于剩余的边界框,计算它们与输出列表中最后一个边界框的重叠区域(即交集),并计算它们的重叠区域与它们自身区域的比值(即IoU)。如果该比值大于某个阈值(通常为0.5),则将该边界框从列表中删除,否则保留该边界框。

⑤重复步骤3和4,直到所有边界框都被处理完毕。

贴出官方函数的内容:

源码:

import torch

from torch.jit.annotations import Tuple

from torch import Tensor

from ._box_convert import _box_cxcywh_to_xyxy, _box_xyxy_to_cxcywh, _box_xywh_to_xyxy, _box_xyxy_to_xywh

import torchvision

from torchvision.extension import _assert_has_ops

[docs]def nms(boxes: Tensor, scores: Tensor, iou_threshold: float) -> Tensor:

"""

Performs non-maximum suppression (NMS) on the boxes according

to their intersection-over-union (IoU).

NMS iteratively removes lower scoring boxes which have an

IoU greater than iou_threshold with another (higher scoring)

box.

If multiple boxes have the exact same score and satisfy the IoU

criterion with respect to a reference box, the selected box is

not guaranteed to be the same between CPU and GPU. This is similar

to the behavior of argsort in PyTorch when repeated values are present.

Parameters

----------

boxes : Tensor[N, 4])

boxes to perform NMS on. They

are expected to be in (x1, y1, x2, y2) format

scores : Tensor[N]

scores for each one of the boxes

iou_threshold : float

discards all overlapping

boxes with IoU > iou_threshold

Returns

-------

keep : Tensor

int64 tensor with the indices

of the elements that have been kept

by NMS, sorted in decreasing order of scores

"""

_assert_has_ops()

return torch.ops.torchvision.nms(boxes, scores, iou_threshold)

[docs]@torch.jit._script_if_tracing

def batched_nms(

boxes: Tensor,

scores: Tensor,

idxs: Tensor,

iou_threshold: float,

) -> Tensor:

"""

Performs non-maximum suppression in a batched fashion.

Each index value correspond to a category, and NMS

will not be applied between elements of different categories.

Parameters

----------

boxes : Tensor[N, 4]

boxes where NMS will be performed. They

are expected to be in (x1, y1, x2, y2) format

scores : Tensor[N]

scores for each one of the boxes

idxs : Tensor[N]

indices of the categories for each one of the boxes.

iou_threshold : float

discards all overlapping boxes

with IoU > iou_threshold

Returns

-------

keep : Tensor

int64 tensor with the indices of

the elements that have been kept by NMS, sorted

in decreasing order of scores

"""

if boxes.numel() == 0:

return torch.empty((0,), dtype=torch.int64, device=boxes.device)

# strategy: in order to perform NMS independently per class.

# we add an offset to all the boxes. The offset is dependent

# only on the class idx, and is large enough so that boxes

# from different classes do not overlap

else:

max_coordinate = boxes.max()

offsets = idxs.to(boxes) * (max_coordinate + torch.tensor(1).to(boxes))

boxes_for_nms = boxes + offsets[:, None]

keep = nms(boxes_for_nms, scores, iou_threshold)

return keep

[docs]def remove_small_boxes(boxes: Tensor, min_size: float) -> Tensor:

"""

Remove boxes which contains at least one side smaller than min_size.

Arguments:

boxes (Tensor[N, 4]): boxes in (x1, y1, x2, y2) format

min_size (float): minimum size

Returns:

keep (Tensor[K]): indices of the boxes that have both sides

larger than min_size

"""

ws, hs = boxes[:, 2] - boxes[:, 0], boxes[:, 3] - boxes[:, 1]

keep = (ws >= min_size) & (hs >= min_size)

keep = torch.where(keep)[0]

return keep

[docs]def clip_boxes_to_image(boxes: Tensor, size: Tuple[int, int]) -> Tensor:

"""

Clip boxes so that they lie inside an image of size `size`.

Arguments:

boxes (Tensor[N, 4]): boxes in (x1, y1, x2, y2) format

size (Tuple[height, width]): size of the image

Returns:

clipped_boxes (Tensor[N, 4])

"""

dim = boxes.dim()

boxes_x = boxes[..., 0::2]

boxes_y = boxes[..., 1::2]

height, width = size

if torchvision._is_tracing():

boxes_x = torch.max(boxes_x, torch.tensor(0, dtype=boxes.dtype, device=boxes.device))

boxes_x = torch.min(boxes_x, torch.tensor(width, dtype=boxes.dtype, device=boxes.device))

boxes_y = torch.max(boxes_y, torch.tensor(0, dtype=boxes.dtype, device=boxes.device))

boxes_y = torch.min(boxes_y, torch.tensor(height, dtype=boxes.dtype, device=boxes.device))

else:

boxes_x = boxes_x.clamp(min=0, max=width)

boxes_y = boxes_y.clamp(min=0, max=height)

clipped_boxes = torch.stack((boxes_x, boxes_y), dim=dim)

return clipped_boxes.reshape(boxes.shape)

[docs]def box_convert(boxes: Tensor, in_fmt: str, out_fmt: str) -> Tensor:

"""

Converts boxes from given in_fmt to out_fmt.

Supported in_fmt and out_fmt are:

'xyxy': boxes are represented via corners, x1, y1 being top left and x2, y2 being bottom right.

'xywh' : boxes are represented via corner, width and height, x1, y2 being top left, w, h being width and height.

'cxcywh' : boxes are represented via centre, width and height, cx, cy being center of box, w, h

being width and height.

Arguments:

boxes (Tensor[N, 4]): boxes which will be converted.

in_fmt (str): Input format of given boxes. Supported formats are ['xyxy', 'xywh', 'cxcywh'].

out_fmt (str): Output format of given boxes. Supported formats are ['xyxy', 'xywh', 'cxcywh']

Returns:

boxes (Tensor[N, 4]): Boxes into converted format.

"""

allowed_fmts = ("xyxy", "xywh", "cxcywh")

if in_fmt not in allowed_fmts or out_fmt not in allowed_fmts:

raise ValueError("Unsupported Bounding Box Conversions for given in_fmt and out_fmt")

if in_fmt == out_fmt:

return boxes.clone()

if in_fmt != 'xyxy' and out_fmt != 'xyxy':

# convert to xyxy and change in_fmt xyxy

if in_fmt == "xywh":

boxes = _box_xywh_to_xyxy(boxes)

elif in_fmt == "cxcywh":

boxes = _box_cxcywh_to_xyxy(boxes)

in_fmt = 'xyxy'

if in_fmt == "xyxy":

if out_fmt == "xywh":

boxes = _box_xyxy_to_xywh(boxes)

elif out_fmt == "cxcywh":

boxes = _box_xyxy_to_cxcywh(boxes)

elif out_fmt == "xyxy":

if in_fmt == "xywh":

boxes = _box_xywh_to_xyxy(boxes)

elif in_fmt == "cxcywh":

boxes = _box_cxcywh_to_xyxy(boxes)

return boxes

[docs]def box_area(boxes: Tensor) -> Tensor:

"""

Computes the area of a set of bounding boxes, which are specified by its

(x1, y1, x2, y2) coordinates.

Arguments:

boxes (Tensor[N, 4]): boxes for which the area will be computed. They

are expected to be in (x1, y1, x2, y2) format

Returns:

area (Tensor[N]): area for each box

"""

return (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])

# implementation from https://github.com/kuangliu/torchcv/blob/master/torchcv/utils/box.py

# with slight modifications

[docs]def box_iou(boxes1: Tensor, boxes2: Tensor) -> Tensor:

"""

Return intersection-over-union (Jaccard index) of boxes.

Both sets of boxes are expected to be in (x1, y1, x2, y2) format.

Arguments:

boxes1 (Tensor[N, 4])

boxes2 (Tensor[M, 4])

Returns:

iou (Tensor[N, M]): the NxM matrix containing the pairwise IoU values for every element in boxes1 and boxes2

"""

area1 = box_area(boxes1)

area2 = box_area(boxes2)

lt = torch.max(boxes1[:, None, :2], boxes2[:, :2]) # [N,M,2]

rb = torch.min(boxes1[:, None, 2:], boxes2[:, 2:]) # [N,M,2]

wh = (rb - lt).clamp(min=0) # [N,M,2]

inter = wh[:, :, 0] * wh[:, :, 1] # [N,M]

iou = inter / (area1[:, None] + area2 - inter)

return iou

# Implementation adapted from https://github.com/facebookresearch/detr/blob/master/util/box_ops.py

[docs]def generalized_box_iou(boxes1: Tensor, boxes2: Tensor) -> Tensor:

"""

Return generalized intersection-over-union (Jaccard index) of boxes.

Both sets of boxes are expected to be in (x1, y1, x2, y2) format.

Arguments:

boxes1 (Tensor[N, 4])

boxes2 (Tensor[M, 4])

Returns:

generalized_iou (Tensor[N, M]): the NxM matrix containing the pairwise generalized_IoU values

for every element in boxes1 and boxes2

"""

# degenerate boxes gives inf / nan results

# so do an early check

assert (boxes1[:, 2:] >= boxes1[:, :2]).all()

assert (boxes2[:, 2:] >= boxes2[:, :2]).all()

area1 = box_area(boxes1)

area2 = box_area(boxes2)

lt = torch.max(boxes1[:, None, :2], boxes2[:, :2]) # [N,M,2]

rb = torch.min(boxes1[:, None, 2:], boxes2[:, 2:]) # [N,M,2]

wh = (rb - lt).clamp(min=0) # [N,M,2]

inter = wh[:, :, 0] * wh[:, :, 1] # [N,M]

union = area1[:, None] + area2 - inter

iou = inter / union

lti = torch.min(boxes1[:, None, :2], boxes2[:, :2])

rbi = torch.max(boxes1[:, None, 2:], boxes2[:, 2:])

whi = (rbi - lti).clamp(min=0) # [N,M,2]

areai = whi[:, :, 0] * whi[:, :, 1]

return iou - (areai - union) / areai以上为官方源码,对于以上函数做了一些解释:

def nms(boxes: Tensor, scores: Tensor, iou_threshold: float) -> Tensor:

基本 NMS 函数。给定一组边界框和相应的得分,该函数利用它们之间的 IoU 值对边界框进行排序,并应用阈值策略来删除重叠的边界框。该函数包括以下步骤:

接收输入参数 boxes (N, 4) , N 是边界框的数量,scores(N,),包含每个边界框的得分和 IoU 阈值。返回 keep,包含保留的边界框的索引,这些边界框已按其得分从高到低排序。

def batched_nms(

boxes: Tensor,

scores: Tensor,

idxs: Tensor,

iou_threshold: float, ) -> Tensor:

批处理模式下执行 NMS 的 PyTorch 函数。该函数支持按类别对边界框进行分组,并独立地对每个类别进行 NMS。实现包括以下步骤:

接收输入参数 boxes(N, 4), N 是边界框的数量、scores(N,),其中包含每个边界框的得分)、idxs (N,) 的,包含每个边界框对应的类别索引和IoU 阈值。如果输入张量 boxes 中没有边界框,则返回一个空的张量,表示没有要保留的边界框。否则,计算每个类别的偏移量以确保边界框之间不会重叠。具体来说,将每个类别的索引乘以一个大于所有边界框坐标的最大值的值,并将其转换为与 boxes 张量相同的数据类型。将偏移后的边界框与相应的得分和类别索引一起传递给 nms 函数,以独立地对每个类别的边界框执行 NMS。返回 keep 张量,其中包含保留的边界框的索引,这些边界框已按其得分从高到低排序。

def remove_small_boxes(boxes: Tensor, min_size: float) -> Tensor:

函数remove_small_boxes用于删除至少有一个边界框较小的边界框。包括以下步骤:

接收输入参数boxes(N, 4)的张量, N 是边界框的数量和min_size(最小尺寸)。计算每个边界框的宽度和高度,并确定哪些边界框的宽度和高度都大于或等于min_size。返回keep,包含保留的边界框的索引,这些边界框的宽度和高度都大于或等于min_size。

def clip_boxes_to_image(boxes: Tensor, size: Tuple[int, int]) -> Tensor:

clip_boxes_to_image用于将边界框裁剪到给定图像的边界内。该函数包括以下步骤:

接收输入参数boxes(N, 4)的张量和size(一个二元组,表示图像的高度和宽度), 将输入张量boxes中的坐标分别提取为boxes_x和boxes_y张量,并计算图像的高度和宽度。对于JIT 模式),将 boxes_x和boxes_y张量的值裁剪到[0, width] 和 [0, height]范围内,分别代表图像的宽度和高度。对于非跟踪模式,使用 clamp函数执行相同的操作。将裁剪后的boxes_x和boxes_y张量合并为一个张量,并返回形状为(N, 4)的clipped_boxes张量,其中每个边界框的坐标都已被裁剪到图像的边界内。

def box_convert(boxes: Tensor, in_fmt: str, out_fmt: str) -> Tensor:

这是一个用于转换边界框格式的 PyTorch 函数。

xyxy:表示通过左上角和右下角的坐标表示边界框。

xywh:表示通过左上角坐标和宽度和高度表示边界框。

cxcywh:表示通过中心点坐标和宽度和高度表示边界框。

_box_xyxy_to_xywh:将边界框从 `’xyxy’` 格式转换为 `’xywh’` 格式。

_box_xyxy_to_cxcywh:将边界框从 `’xyxy’` 格式转换为 `’cxcywh’` 格式。

_box_xywh_to_xyxy:将边界框从 `’xywh’` 格式转换为 `’xyxy’` 格式。

_box_cxcywh_to_xyxy:将边界框从 `’cxcywh’` 格式转换为 `’xyxy’` 格式。

返回转换后的边界框 boxes。

def box_area(boxes: Tensor) -> Tensor:

计算边界框的面积,这个较为简答

def box_iou(boxes1: Tensor, boxes2: Tensor) -> Tensor:

计算边界框交并比,函数 box_iou 接收输入参数 boxes1 和 boxes2,分别表示两组边界框,其格式为 (x1, y1, x2, y2)。

计算两组边界框的面积 area1 和 area2,使用函数 box_area 计算每个边界框的面积。对于每个边界框对 (i, j),找到它们的左上角坐标和右下角坐标的最大值和最小值,分别记为 lt 和 rb。计算每个边界框对的宽度和高度 wh,即 wh[i,j] = rb[i,j] - lt[i,j],并将负数部分截断为0。计算每个边界框对的交集面积 inter,即 inter[i,j] = wh[i,j, 0] * wh[i,j, 1]。计算边界框对的并集面积 union,即 union[i,j] = area1[i] + area2[j] - inter[i,j]。计算边界框对的交并比 iou,即 iou[i,j] = inter[i,j] / union[i,j]。返回一个形状为 (N, M) 的 iou,N 和 M 分别为两组边界框的数量。

然后是选x(48*6)中的5个,变成了(5*6)最后的output也是5*6

最后就是将boxes恢复到原图尺寸画框框了!下一步就是将这个过程在地平线上实现了!

文章出处登录后可见!