GreenLIT:使用 GPT-J 和多任务学习来创作新剧本

如何微调 ML 模型以创建具有新标题、情节摘要和脚本的电视节目和电影

我在之前的一篇文章中展示了我如何微调 GPT-J 来生成 Haikus,结果非常好。对于我的最新实验 GreenLIT,我想突破使用 GPT-J 进行创意写作的极限,看看它是否可以为全新的电视节目和电影制作脚本。[0]

这是 GreenLIT 的组件和流程的框图。我将在下面的部分中详细讨论这些。

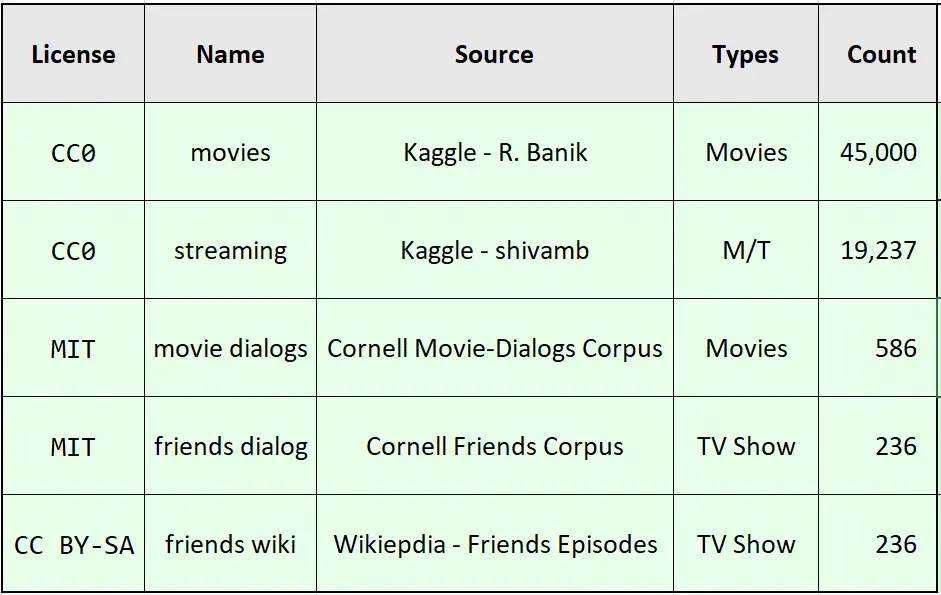

首先,我在网上搜索并找到了五个开源数据集,其中包含我用作训练数据的文本:

- 康奈尔大学 ConvoKit 项目中的电影对话框(MIT 许可)[1]

- Rounak Banik 在 Kaggle 上的电影情节摘要(CC0 许可证)[2]

- ConvoKit 项目中的 Friends Dialogs(MIT 许可)[1]

- 来自维基百科的朋友情节摘要(CC-BY-SA 许可证)[3]

- Shivam Bansal 在 Kaggle 上的流媒体节目分析(CC0 许可证)[4]

第一个和第三个数据集包含来自电影脚本和流行情景喜剧“朋友”的对话。其他数据集包括电视节目和电影的标题、类型和情节摘要。我编写了一些 Python 代码来预处理和过滤文本,为 GPT-J 学习的四个任务创建一组条目:

- 类型和主题 → 标题和情节

- 标题和情节 → 对话框

- 标题和情节 → 脚本

- Dialog → Script

请注意,对话仅表示角色名称和台词,而脚本是对话加上舞台指导。我将在下面进一步详细解释所有这些。

我从 GPT-J [5] 模型开始,它是 GPT-3 [6] 的开源变体。 GPT-J 之前接受过来自网络的大量文本数据集的训练,称为 The Pile [7]。

我在 Google Colab 上用超过 38,000 个训练条目对 GPT-J 进行了 20 小时的微调,以创建我的 GreenLIT 模型。微调后,我使用以下文本提示作为输入。

GENRE: crime drama

THEME: cryptocurrency根据这个提示,GreenLIT 模型生成了以下标题和绘图摘要:

#1. TITLE: Bitcoin Heist

SUMMARY: Two young men embark on a heist that could be the start of a new revolution.#2. TITLE: Satoshi's Daughter

SUMMARY: A crime drama about a woman who inherits a cryptocurrency fortune.#3. TITLE: The Dark Web

SUMMARY: The story of two brothers who join the underground Bitcoin economy.#4. TITLE: Cryptostorm

SUMMARY: Cryptostorm revolves around the story of a young man who has been trying to keep his life on track after being sent to

prison for a crime he didn't commit.#5. TITLE: The Cypher

SUMMARY: A young hacker joins a group of underground hackers to create a secure platform.

我从这个列表中选择了#3,The Dark Web,并将标题和摘要发送回 GreenLIT 以创建脚本的第一页。这里是。

TITLE: The Dark Web

SUMMARY: The story of two brothers who join the underground Bitcoin economy.

SCRIPT:[Scene: The living room of the house, the door opens and the brothers come in. They are both dressed in black, have their hoods up.]LEO - Okay, we did it! We hit the mother lode!

DREW - We hit the mother lode?

LEO - Yeah!

DREW - How much is in here?

LEO - Six hundred and forty dollars worth.

DREW - (To himself) Well, that's better thaan nothing.

LEO - The bitcoins are untraceable.

DREW - The bitcoins?

LEO - Yeah, the Bitcoin is basically just a name for a cryptographic currency.

DREW - A cryptographic currency?

LEO - Yeah, like, a secure, internet way of transferring money from one person to another.

DREW - Sounds like they invented the internet.

LEO - Well, the internet is just a network of computers connected to other computers by phone lines, but the internet is also the thing that makes it possible to transfer money from one computer to another without a bank.

DREW - Oh, yeah?

LEO - Yeah.

DREW - How do you do that?

LEO - Well, it's actually really complicated, and I don't understand it myself, but the short version is that the Bitcoin is run by a bunch of computers all connected to each other. It's kind of like a decentralized Ponzi scheme.

DREW - A decentralized Ponzi scheme?

LEO - Yeah, you know, like the government runs a Ponzi scheme.

好吧,有趣。该脚本似乎幽默地解释了加密货币的基础知识并稍微发展了角色。并带有一些社会评论。请稍等,我给我的代理人打电话。 😉

您可以在 Google Colab 上免费查看 GreenLIT。请务必在下面的附录中查看更多示例输出。[0]

System Details

在以下部分中,我将深入探讨 GreenLIT 中使用的组件和流程的详细信息。我将首先讨论一种称为多任务学习的神经网络训练技术。

Multitask Learning

我对 GreenLIT 项目有两个主要目标,(A) 在给定类型和主题的情况下创建新节目的标题和情节摘要,以及 (B) 在给定标题和情节摘要的情况下创建脚本的第一页。虽然微调两个专门的 AI 模型会起作用,但我想看看一个微调的模型是否可以完成这两项任务。这样做有几个好处。正如我在我的 Deep Haiku 项目中发现的那样,为多个但相似的任务微调一个模型,称为多任务学习,可以改善这两个任务的结果。 Rich Caruna 在卡内基梅隆大学研究了这项技术 [10]。[0]

多任务学习是一种归纳迁移的方法,它通过使用相关任务的训练信号中包含的域信息作为归纳偏差来提高泛化能力。它通过在使用共享表示的同时并行学习任务来做到这一点;为每项任务学到的知识可以帮助更好地学习其他任务。 — 丰富的卡鲁纳

为了解释多任务学习的工作原理,Alexandr Honchar 在他的文章中描述了一个称为“特征选择复查”的概念。他说,“如果一个特征对不止一项任务很重要,那么这个特征很可能对你的数据确实非常重要和具有代表性”,并且在多任务学习期间系统会加强。[0]

另一个优势是实际效率——只需加载一个 AI 模型即可执行两项任务。使用一种模型可以减少磁盘存储、加载时间和 GPU 内存。

接下来,我将讨论我是如何为该项目收集训练数据的。

Gathering Training Data

为了针对第一项任务微调系统,生成新节目的标题和情节摘要,我寻找了包含电影和电视节目元数据的开源数据集。

Gathering Movie Plots

在拥有大量数据集的 Kaggle 上,我发现了 Rounak Banik 的大量电影情节摘要列表,称为电影数据集。它包含超过 40K 电影的标题、发行年份、类型、摘要等。他在 CC0(公共领域)许可下发布了数据集。这是 5 个条目的示例。[0]

我使用了一个名为 KeyBERT [9] 的模块从摘要中提取主题。你可以在这里看到我的 Python 代码。[0]

我在 Shivam Bansal 的 Kaggle 上找到了另一组数据集。他收集了 Netflix、亚马逊、Hulu 和 Disney+ 上大约 2 万个流媒体节目的摘要。这是数据示例。[0]

我再次使用 KeyBERT 从流媒体节目的摘要中捕捉主题。

为了教 GPT-J 如何根据流派和主题创建标题和摘要,我为每个电影和电视节目组装了一个这样的条目。

GENRE: action science fiction

THEME: saving the world

TITLE: The Matrix

SUMMARY: Set in the 22nd century, The Matrix tells the story of a computer hacker who joins a group of underground insurgents fighting the vast and powerful computers who now rule the earth.GENRE: comedy sitcom

THEME: workplace comedy

TITLE: 30 Rock

SUMMARY: The life of the head writer at a late-night television variety show. From the creator and stars of SNL comes this workplace comedy. A brash network executive bullies head writer Liz Lemon into hiring an unstable movie star.

收集影视剧本

接下来,我搜索了脚本数据集。引用 The Dark Web 的 Leo 的话,当我从康奈尔找到 ConvoKit 时,“我击中了母脉”。集合数据集的正式名称是康奈尔对话分析工具包 [1],在 MIT 开源许可下发布。

[ConvoKit] 包含提取对话特征和分析对话中社会现象的工具,使用受 scikit-learn 启发(并兼容)的单一统一界面。包含几个大型会话数据集以及示例在这些数据集上使用工具包的脚本。 — 乔纳森 P. Chang 等人。

我使用来自 ConvoKit 中两个数据集的对话框来微调 GreenLIT。以下是他们网站上的数据集的描述。

- Cornell Movie-Dialogs Corpus – 从原始电影脚本中提取的大量元数据丰富的虚构对话集合。 (617 部电影中 10292 对电影角色之间的 220579 次对话交流)。

- Friends Corpus – 1990 年代流行的美国电视情景喜剧《老友记》十季中发生的所有对话的集合。

这是康奈尔电影对话语料库中卢旺达酒店的对话片段。

PAUL - What's wrong?

ZOZO - Beg your pardon sir, you are Hutu. You are safe there.

PAUL - You are with me, Zozo, don't worry.

ZOZO - What is it like to fly on a plane, sir?

PAUL - It depends where you sit Zozo. In coach it is like the bus to Giterama.

ZOZO - That is why they call it coach?

PAUL - Maybe. But in business class there are fine wines, linens, Belgian chocolates.

ZOZO - You have taken business class?

PAUL - Many times.

PAUL - I will try my best George but these days I have no time for rallies or politics.

GEORGE - Politics is power, Paul. And money.Gathering TV Scriptss

这里有一段来自 Friends Corpus 的脚本片段,设置在该团伙最喜欢的咖啡店 Central Perk。

SCRIPT:

[Scene, Central Perk]MONICA - There's nothing to tell! He's just some guy I work with!

JOEY - C'mon, you're going out with the guy! There's gotta be something wrong with him!

CHANDLER - All right Joey, be nice. So does he have a hump? A hump and a hairpiece?

PHOEBE - Wait, does he eat chalk?(They all stare, bemused.)PHOEBE - Just, 'cause, I don't want her to go through what I went through with Carl- oh!

MONICA - Okay, everybody relax. This is not even a date. It's just two people going out to dinner and- not having sex.

CHANDLER - Sounds like a date to me.

Adding Stage Directions

请注意,与《老友记》的剧本不同,《卢旺达酒店》的剧本没有任何舞台指导。它只有对话框。

为了教 GreenLIT 模型如何添加舞台方向,我从 Friends 创建了一组脚本,其中只有对话框,如下所示,然后是脚本。这些培训条目组成为:“DIALOG:”+ 台词 + “SCRIPT:”+ 带有舞台方向的台词。

DIALOG:

MONICA - There's nothing to tell! He's just some guy I work with!

JOEY - C'mon, you're going out with the guy! There's gotta be something wrong with him!

CHANDLER - All right Joey, be nice. So does he have a hump? A hump and a hairpiece?

PHOEBE - Wait, does he eat chalk?

PHOEBE - Just, 'cause, I don't want her to go through what I went through with Carl- oh!

MONICA - Okay, everybody relax. This is not even a date. It's just two people going out to dinner and- not having sex.

CHANDLER - Sounds like a date to me.微调后,如果我以“… DIALOG:”结束提示,它将创建一个对话框。但是如果我以“… SCRIPT:”结束提示,它将知道生成带有舞台方向的对话。这是行动中的多任务学习!

接下来,我将讨论如何解决生成脚本中重复字符名称的问题。

Diversifying Character Names

经过一些初步实验后,我注意到在训练数据集中包含 Friends 脚本导致模型经常使用六个中心字符的名称。例如,该系统将创建以 18 世纪为背景的时期作品,其中包含名为 Joey、Phoebe 和 Chandler 的角色。

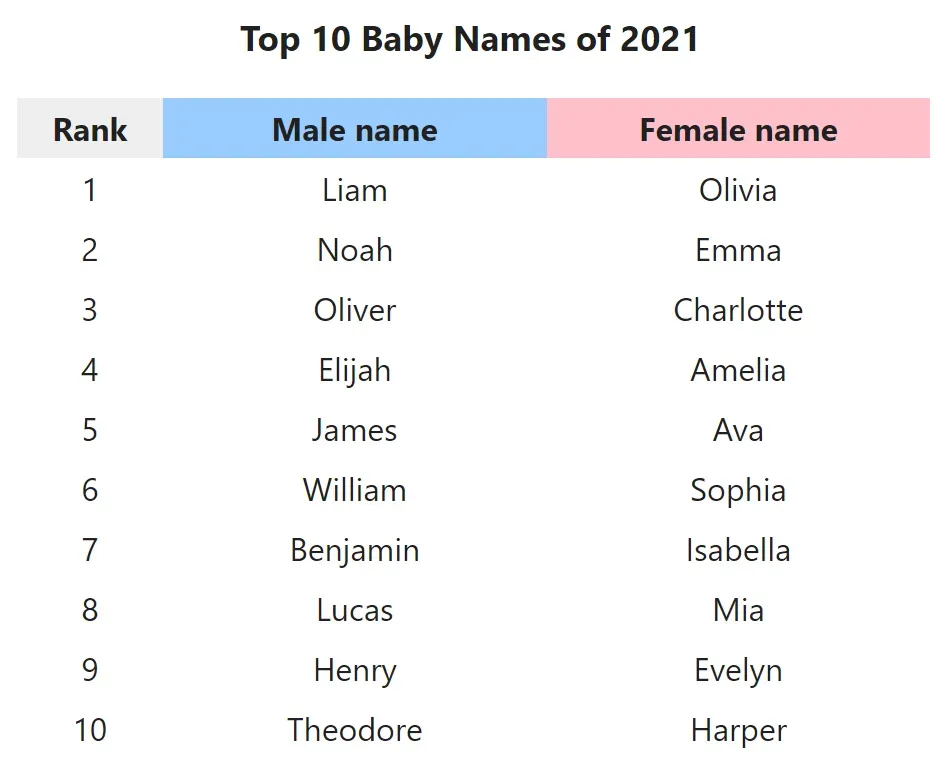

为了使角色名称多样化,我更换了 236 集《老友记》中每一集的角色名称。我使用了美国社会保障局收集的名字列表。[0]

例如,上面显示的脚本将这些字符名称用于训练数据:

Ross → Lucas

Chandler → Antonio

Joey → Eddie

Rachel → Charlotte

Phoebe → Stella

Monica → Luciana

我还将对“Central Perk”的所有引用更改为“Coffee Shop”,以帮助删除脚本的“Friends-ness”。这是修改后的脚本:

SCRIPT:

[Scene, Coffee Shop]LUCIANA - There's nothing to tell! He's just some guy I work with!

EDDIE - C'mon, you're going out with the guy! There's gotta be something wrong with him!

ANTONIO - All right Eddie, be nice. So does he have a hump? A hump and a hairpiece?

STELLA - Wait, does he eat chalk?(They all stare, bemused.)STELLA - Just, 'cause, I don't want her to go through what I went through with Carl- oh!

LUCIANA - Okay, everybody relax. This is not even a date. It's just two people going out to dinner and- not having sex.

ANTONIO - Sounds like a date to me.

有趣的是,简单地更改角色名称使它看起来像是一个不同的节目。

为朋友收集情节摘要

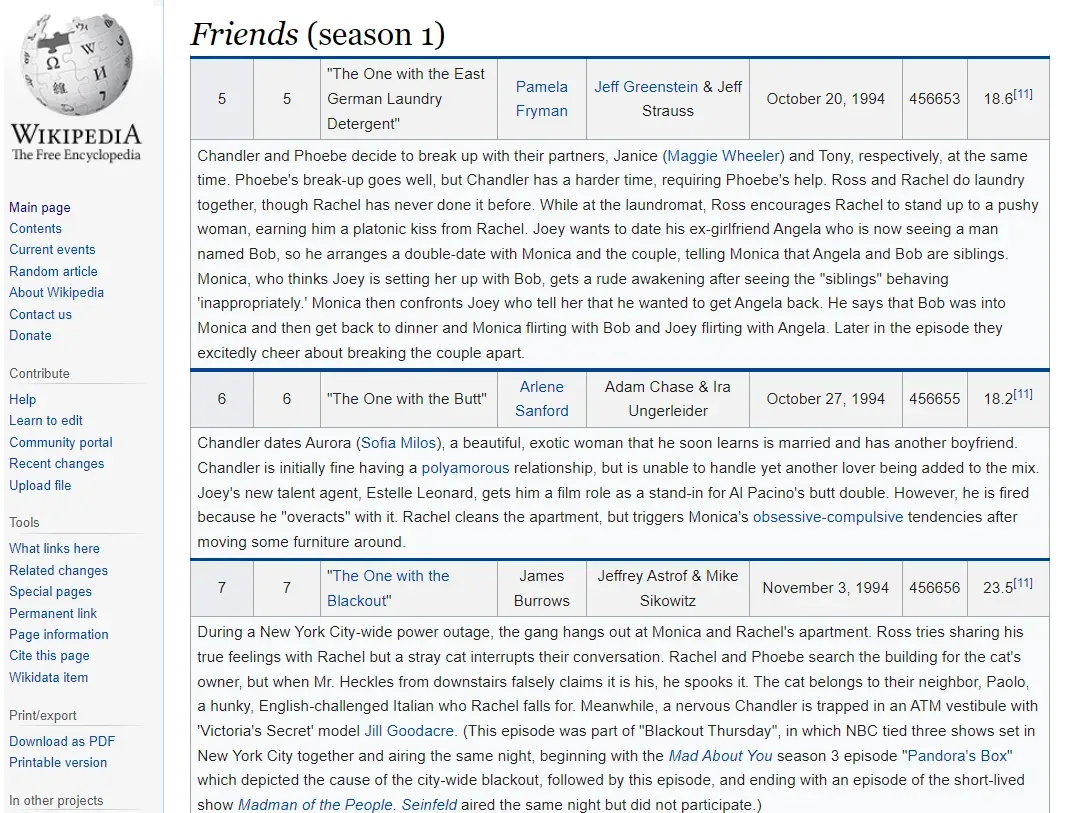

由于 ConvoKit 数据集不包含任何情节摘要,因此我从 Wikipedia 中抓取了所有 Friends 剧集的摘要。

获取摘要的源代码在这里。我再次使用 KeyBERT 来获取剧集主题的关键字。[0]

这是我为训练 GPT-J 收集的数据的摘要。

完成所有培训后,我开始微调 GPT-J 以创建新的节目和脚本。

微调 GPT-J

与我在 Deep Haiku 项目中所做的类似,我微调了 GPT-J 以学习和运行 GreenLIT 所需的所有四个任务:[0]

- 类型和主题 → 标题和情节

- 标题和情节 → 对话框

- 标题和情节 → 脚本

- Dialog → Script

Eleuther AI [5] 的 GPT-J 模型是 OpenAI 的居里模型的大小,是第二大 GPT-3 模型 [6]。 Eleuther AI 在 The Pile 上训练了模型,这是一个用于语言建模的庞大 (800GB) 不同文本数据集 [7]。

Google Colabs 只有 16 Gigs 内存的 GPU,如果按原样使用 GPT-J 将耗尽内存。为了在 Colab 上运行它,我使用 Edward Hu 等人的 Low-Rank Adaptation 技术将具有 32 位参数的 GPT-J 6-B 转换为 8 位。在微软 [8]。您可以在此处阅读 Eleuther AI 模型卡的详细信息。[0]

Running the System

在对模型进行微调后,我用它来创建新的标题和摘要以及节目脚本。

然而,我注意到的第一件事是该系统通常会为之前制作的节目生成标题。这并不奇怪,因为我使用现有节目的数据对其进行了微调。为了弥补这一点,我创建了一个小的 Python 函数来检查之前是否使用过任何新创建的标题,并使用 Wikipedia 作为参考。[0]

例如,我使用 GENRE: 犯罪喜剧和 THEME: buddy bank heist 运行系统。七个生成的标题中有四个来自现有节目。我在下面的结果中用一串星号标记了这些“重复项”。

01 The Bank Heist Gang - A group of friends decides to do a bank heist before things go haywire.02 *** The Bank Job - A group of ex-cons and petty criminals rob a bank in a small town. While carrying out the heist, they become involved in romantic entanglements, and the heist itself turns into a big, silly mess.03 *** Buddy Buddy - The film opens with a young man who, while on a business trip, is robbed by his own bodyguard who is also the son of the man who owns the bank.04 The Bumstead Affair - When a bank robbery goes awry, a group of young men decide to get rich quick by robbing the other robbers.05 *** Get Shorty - Get Shorty is a crime comedy centered around three friends who get involved in a heist.06 The Great American Caper - A man and his best friend have a scheme to rob a bank.07 *** The Hustler - A Las Vegas bank robber attempts to double-cross the mob by holding up their own bank... and the movie changes into a slapstick comedy.

我从原始标题中选择了 The Bumstead Affair 来查看生成的脚本。这里是。

[Scene: All of the guys are in the basement where Trench is at the computer. The guys are discussing the upcoming robbery attempt.]D'JACK - We're gonna hit the bank on Sunday. The place is closed on Sunday. The cops will never know.

TRENCH - Yeah, but...

D'JACK - But what?

TRENCH - What about the cameras?

D'JACK - The cameras are gonna be out.(The guys are looking at the computer monitor)D'JACK - And we'll make sure the alarm's gonna be out, too.

TRENCH - Yeah, but then...

D'JACK - Then what?

TRENCH - Then we'll be in the bank and we're gonna get rich?

D'JACK - Right, that's the goal.

好的,这很简单。但我确实喜欢 Trench 和 D’Jack 的角色名称。我也喜欢包含舞台指导。

Discussion of Results

我用 GreenLIT 玩了一周左右,它似乎在为节目提出新想法方面做得很好,尽管它经常重复使用标题。请注意,插入新颖的流派和主题会减少复制。

但是,生成的脚本中的对话框对我来说似乎有点乏味。好消息是对话似乎很自然,但散文的内容往往很简陋。这可能是因为根据设计,所有新脚本都是针对场景 1,节目的第 1 页。他们直接进入介绍性的说明。

查看附录以获取更多示例脚本。

源代码和 Colabs

这个项目的所有源代码都可以在 GitHub 上找到。我在 CC BY-SA 许可下发布了源代码。[0][1]

Acknowledgments

我要感谢 Jennifer Lim 和 Oliver Strimpel 对这个项目的帮助。

References

[1] Jonathan P. Chang、Caleb Chiam、Liye Fu、Andrew Wang、Justine Zhang、Cristian Danescu-Niculescu-Mizil。 2020. “ConvoKit:对话分析工具包” SIGDIAL 论文集。[0]

[2] R. Banik,Kaggle 上的电影数据集(2018 年)[0]

[3] 维基百科,朋友情节摘要[0]

[4] S. Bansal,Kaggle 上的流媒体电影和电视节目(2022 年)[0]

[5] GPT-J, Mesh-Transformer-JAX: Model-Parallel Implementation of Transformer Language Model with JAX (2021)[0]

[6] GPT-3、T. B. Brown、B. Mann、N. Ryder、M. Subbiah、J. Kaplan、P. Dhariwal、A.Neelakantan 等。语言模型很少学习(2020)[0]

[7] L.Gao 等人,The Pile: An 800GB Dataset of Diverse Text for Language Modeling (2020)[0]

[8] R. Caruana,多任务学习 (1997)[0]

[9] M. Grootendorst,KeyBERT:使用 BERT 进行最小关键字提取(2020 年)[0]

[10] E. Hu 等,LoRA:Low-rank Adaptation of Large Language Models (2021)[0]

Appendix

以下新节目的剧本是使用 GreenLIT 创建的。我提供了类型和主题,系统生成了 5 到 10 个标题和摘要。然后我选择了我最喜欢的,系统生成了脚本。

Robopocalypse

GENRE: science fiction drama

THEME: robot uprising

TITLE: Robopocalypse

SUMMARY: Robopocalypse is a science fiction drama about a coming robot uprising, and the people left behind to survive it.

SCRIPT:[Scene: A lab. Dr. Nathan Frost is looking at a robot on a cart. A technician is walking around the room, adjusting wires on the robot.]FROST - What the hell is he doing?

TECHNICIAN - We're trying to figure that out. He's been giving us static for the last three hours.

FROST - Static?

TECHNICIAN - Yeah, like he's been transmitting.

FROST - What does he do?

TECHNICIAN - I've been running diagnostics on him, but there's nothing wrong.

FROST - Does he have a malfunction?

TECHNICIAN - We've tried every possible combination of switches, buttons, and dials, and nothing seems to work.

FROST - He's got to be controlled by a central computer. Who is he?

TECHNICIAN - He doesn't have a tag, like the other robots. He's supposed to be a model.

FROST - Where'd he come from?

TECHNICIAN - He looks like the others, except for the fact that he's a few hundred years ahead of the development schedule.

FROST - Well, he's ours now, so I guess we'll have to wait till he figures out what he's supposed to do.

夜依旧

GENRE: thriller drama

THEME: cold war spy

TITLE: The Night Stands Still

SUMMARY: When a Russian defector arrives at the home of an American diplomat, he makes a startling confession, and the diplomat and the diplomat's wife are forced to hunt for a killer, and a traitor.[Scene: The American embassy in Moscow. The diplomat, an older man, is sitting alone in his office. His wife enters.]ROBERT - How are you doing?

SYLVIA- I'm not sure.

ROBERT- Oh.ROBERT- The guy's in there. He's a Russian defector. He came here last night.

SYLVIA - What did he say?

ROBERT - Well, he's not exactly talking, but he has to be kept alive. And he's got something to tell us.

SYLVIA- What?

ROBERT - I don't know. He came in last night and made these phone calls.SYLVIA - He's on the run. I'm sorry.

ROBERT - It's not your fault, Sylvia.

Stoner High

GENRE: stoner comedy

THEME: goofy friends smoke pot

TITLE: Stoner High

SUMMARY: A group of high school students try to save a local marijuana grow operation from a sheriff.

SCRIPT:[Scene: A room at Stoner High. The school principal is looking at a report from the sheriff]PRINCIPAL - What's going on, Sheriff?

JASON - The grower's name is Randy "Duke" Burdon. He's got a rap sheet a mile long. They found marijuana plants growing in his garage.

PRINCIPAL - Duke Burdon?

JASON - That's the guy. He's been arrested for growing pot twice before, but he always gets off on a technicality.

PRINCIPAL - Are you sure that's him?

JASON - I know this guy.PRINCIPAL - Okay. I'll find out what I can...

JASON - I don't think that's a good idea.

PRINCIPAL - Why not?

JASON - Because you're a principal. And because you're too old to party, and you don't need to be the target of a bunch of kids.

PRINCIPAL - I'm not going to let it happen.

JASON - And what if it does?

PRINCIPAL - I'll handle it.

要无限制地访问 Medium 上的所有文章,请以每月 5 美元的价格成为会员。非会员每个月只能阅读三个锁定的故事。[0]

文章出处登录后可见!